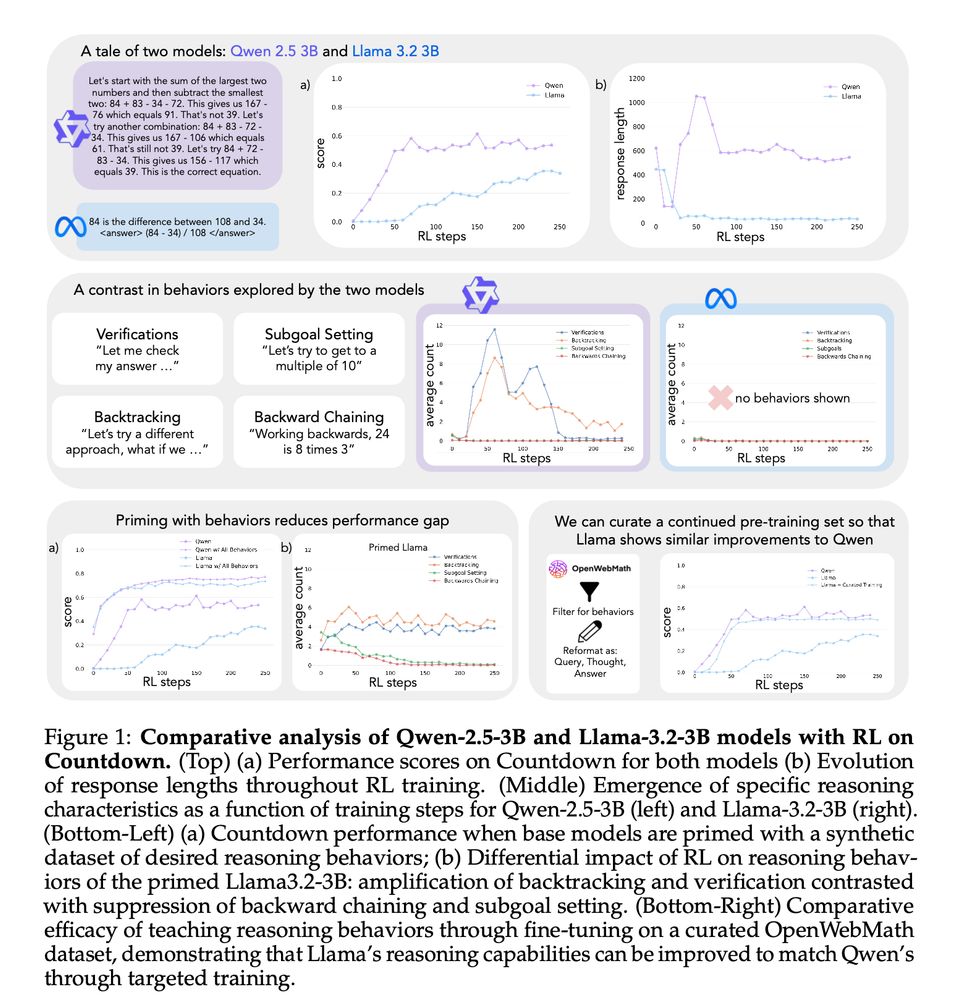

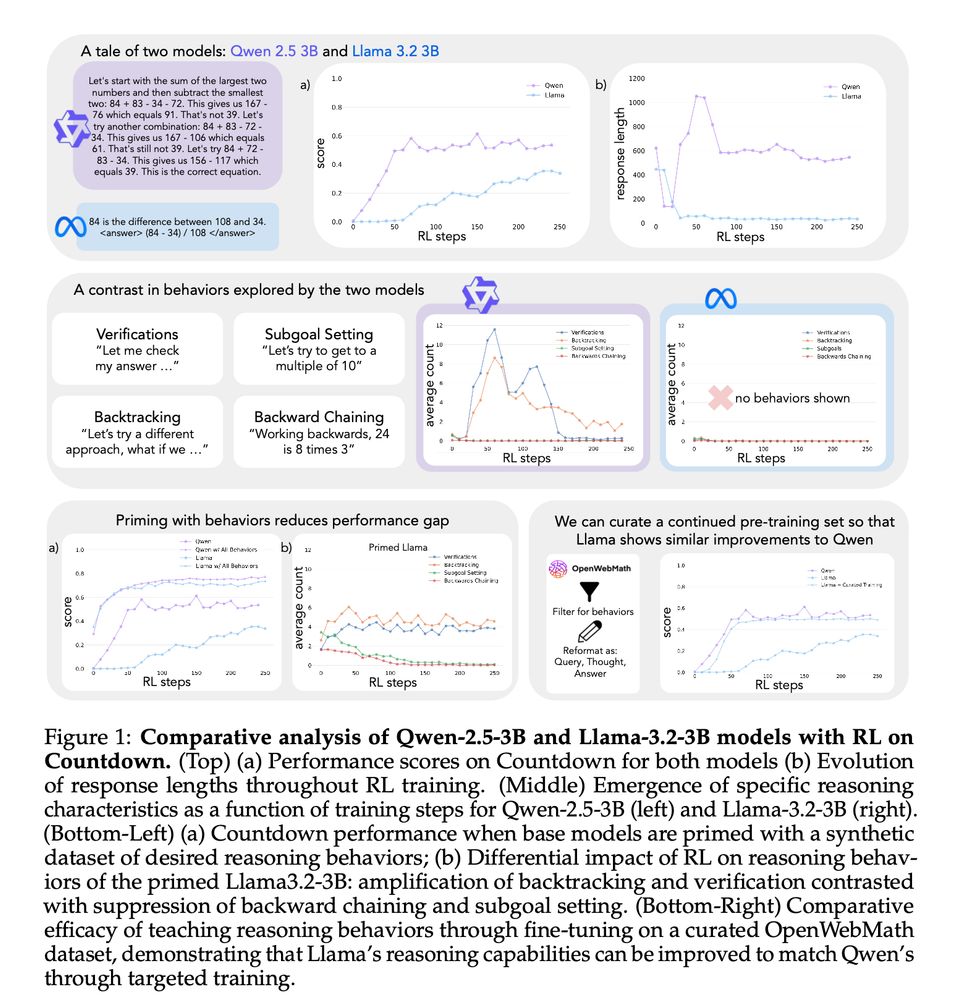

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

04.03.2025 18:15 — 👍 57 🔁 17 💬 2 📌 3

Thank you to @sloanfoundation.bsky.social for this generous award to our lab. Hopefully this will bring us closer to building truly general-purpose robots!

18.02.2025 16:50 — 👍 22 🔁 4 💬 3 📌 0

(Many) more details in our paper! arxiv.org/abs/2410.02749

12.02.2025 20:08 — 👍 0 🔁 0 💬 0 📌 0

LMs trained to synthesize programs by repeatedly editing their own generations produce more diverse code than baselines

This improves the trade-off between test-time FLOPs and pass@k

12.02.2025 20:08 — 👍 1 🔁 0 💬 1 📌 0
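For readers unfamiliar with the pass@k metric mentioned in the post above, here is a minimal sketch of the commonly used unbiased estimator: with n samples per problem, of which c pass the tests, pass@k is estimated as 1 - C(n-c, k) / C(n, k). The function name `pass_at_k` and the example numbers are illustrative assumptions and are not taken from the paper.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples, c of which are correct.

    Returns the probability that at least one of k samples drawn
    without replacement from the n generated samples is correct.
    """
    if not 0 <= c <= n:
        raise ValueError("need 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every draw of k contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical numbers, for illustration only.
print(pass_at_k(n=20, c=3, k=1))
print(pass_at_k(n=20, c=3, k=10))
```

Sampling more diverse programs tends to help at larger k, since the metric only requires one of the k draws to be correct.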

Our approach introduces an algorithm, LintSeq, for sampling across interdependent lines in source code by using a code linter

With LintSeq, we can generate plausible edit *trajectories* for any source code file, covering possible ways of synthesizing its contents edit-by-edit with no linter errors

12.02.2025 20:08 — 👍 1 🔁 0 💬 1 📌 0
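The idea in the post above, generating edit trajectories whose intermediate programs are all free of linter errors, can be illustrated with a toy sketch. This is not the authors' LintSeq implementation: it assumes one plausible strategy (repeatedly deleting random line chunks from the finished file, keeping only lint-clean states, then reversing the sequence), and it uses Python's `ast.parse` as a stand-in for a real linter. The names `lint_ok` and `sample_edit_trajectory` are hypothetical.

```python
import ast
import random


def lint_ok(lines):
    """Stand-in linter (assumption): accept a program only if it parses."""
    try:
        ast.parse("\n".join(lines))
        return True
    except SyntaxError:
        return False


def sample_edit_trajectory(source, rng, max_tries=50):
    """Return program states from empty to full, each passing lint_ok.

    Works backward from the full file: delete a random contiguous chunk
    of lines, retrying until the remainder is lint-clean, then reverse
    the recorded states so the trajectory reads as successive insertions.
    """
    lines = source.splitlines()
    states = [lines]
    while lines:
        for _ in range(max_tries):
            start = rng.randrange(len(lines))
            end = rng.randrange(start, len(lines)) + 1
            candidate = lines[:start] + lines[end:]
            if lint_ok(candidate):
                break
        else:
            # No clean deletion found: fall back to removing everything left.
            candidate = []
        lines = candidate
        states.append(lines)
    return states[::-1]


example = "def add(a, b):\n    c = a + b\n    return c\n\nprint(add(1, 2))"
for step, state in enumerate(sample_edit_trajectory(example, random.Random(0))):
    print(f"--- state {step} ({len(state)} lines) ---")
    print("\n".join(state))
```

Consecutive states differ by one inserted chunk, so each adjacent pair can be turned into an insertion edit for training data. A real linter would catch more than syntax errors, for example undefined names.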

Our paper showing that LMs benefit from human-like abstractions for code synthesis was accepted to ICLR! 🇸🇬

We show that order matters in code generation: casting code synthesis as a sequential edit problem by preprocessing examples in SFT data improves LM test-time scaling laws

12.02.2025 20:08 — 👍 10 🔁 2 💬 1 📌 1

Can we extend the power of world models beyond just online model-based learning? Absolutely!

We believe the true potential of world models lies in enabling agents to reason at test time.

Introducing DINO-WM: World Models on Pre-trained Visual Features for Zero-shot Planning.

31.01.2025 19:24 — 👍 20 🔁 8 💬 1 📌 1

Williams and Zipser (1989) is a classic one! leech.cybernoid.gr/files/text/p...

30.01.2025 17:47 — 👍 5 🔁 0 💬 2 📌 0

Introducing 🧞Genie 2 🧞 - our most capable large-scale foundation world model, which can generate a diverse array of consistent worlds, playable for up to a minute. We believe Genie 2 could unlock the next wave of capabilities for embodied agents 🧠.

04.12.2024 16:01 — 👍 234 🔁 60 💬 15 📌 30

Now that @jeffclune.bsky.social and @joelbot3000.bsky.social are here, time for an Open-Endedness starter pack.

go.bsky.app/MdVxrtD

20.11.2024 07:08 — 👍 105 🔁 32 💬 16 📌 5

Assistant Professor in Robotics and Autonomous Systems Heriot-Watt University & The National Robotarium

teaching computers how to see

Assistant Professor at the Department of Computer Science, University of Liverpool.

https://lutzoe.github.io/

Math Assoc. Prof. (on leave, Aix-Marseille, France)

Interest: Prob / Stat / ANT. See: https://sites.google.com/view/sebastien-darses/research?authuser=0

Teaching Project (non-profit): https://highcolle.com/

PhD student at NYU CILVR. Prev: Master's at McGill / Mila. || RL, ML, Neuroscience.

https://im-ant.github.io/

BS/MS Applied Math & CS @uchicagoalumni.bsky.social / RIPL @tticconnect.bsky.social / LEGO Builder

Interested in robot learning!

Chief Models Officer @ Stealth Startup; Inria & MVA - Ex: Llama @AIatMeta & Gemini and BYOL @GoogleDeepMind

Working on #knowledgegraphs #LLMs #datacuration #bioinformatics #neurosymbolicAI #dataquality Assistant Professor at Maastricht University

Researcher at Google. Improving LLM factuality, RAG and multimodal alignment and evaluation. San Diego. he/him ☀️🌱🧗🏻🏐 Prev UCSD, MSR, UW, UIUC.

CIS PhD at Penn | MIT CS + Math '24

sagnikanupam.com

PhD student working on AI reasoning in large multimodal models. I design methods to build better models for math, code, visual reasoning, agents, and robotics.

Researcher at Inria. Simulating the origins of life, cognition and culture. Using methods from ALife and AI.

Publications: https://scholar.google.com/citations?hl=en&user=rBnV60QAAAAJ&view_op=list_works&sortby=pubdate

Prev. posts still on X same username

Assistant Professor at Maastricht University.

Research interests: AI, RL, games. Tic-Tac-Toe aficionado. Opinions my own, but should be everyone's.

Anon feedback: admonymous.co/dennis-soemers

Lecturer @kcl-spe.bsky.social @kingscollegelondon.bsky.social

Game Theory, Econ & CS, Pol-Econ, Sport

Chess ♟️

Game Theory Corner at Norway Chess

Studied in Istanbul -> Paris -> Bielefeld -> Maastricht

https://linktr.ee/drmehmetismail

Views are my own

Stupid #robotics guy at ETHz

Twitter: https://x.com/ChongZitaZhang

Research Website: https://zita-ch.github.io/

Head of Research @ Utah AI Policy Office // math PhD // networks, complex systems, machine learning, and all things AI // mom & cat lady

Computational art, tools & research.

Research director @Inria, Head of @flowersInria lab, prev. @MSFTResearch @SonyCSLParis

Artificial intelligence, cognitive sciences, sciences of curiosity, language, self-organization, autotelic agents, education, AI and society

http://www.pyoudeyer.com

ML and neural interfaces research, Meta Reality Labs

Reinforcement learning researcher, dabbled in robotics, and generative techniques that were later made out of date by diffusion. Currently at Sony AI, working on game AI