Excited to share our research on what matters in sparse LLM pre-training. Stop by our poster @ ICLR 🗓️ April 24th session #2.

22.04.2025 10:09 — 👍 9 🔁 1 💬 1 📌 0Tian Jin

@tjin.bsky.social

PhD student @MIT CSAIL

@tjin.bsky.social

PhD student @MIT CSAIL

Excited to share our research on what matters in sparse LLM pre-training. Stop by our poster @ ICLR 🗓️ April 24th session #2.

22.04.2025 10:09 — 👍 9 🔁 1 💬 1 📌 0Scaling Laws provide a valuable lens in guiding model design and computational budgets. Our recent work extends this lens to the realm of _fine-grained_ sparsity. Check out our #ICLR2025 paper, and the thread below from lead-author @tjin.bsky.social summarizing our findings.

22.04.2025 01:32 — 👍 2 🔁 1 💬 0 📌 0Tian and Karolina and team are at ICLR. Come say hi.

21.04.2025 13:00 — 👍 8 🔁 1 💬 0 📌 0Please visit us at Poster Session #2 on April 24th. Looking forward to meeting you all at ICLR! 9/N

21.04.2025 07:15 — 👍 0 🔁 0 💬 0 📌 0We're releasing 4 pairs of sparse/dense LLMs (~1B parameters) with matching average parameter counts and identical training tokens, at sparsity levels from 20-80% (see 2nd post for code/models). 8/N

21.04.2025 07:15 — 👍 0 🔁 0 💬 1 📌 0Key insight: sparse pre-training decouples average parameter count (which governs quality) from final parameter count (which determines inference costs), enabling a new Pareto frontier on the training cost vs. inference efficiency trade-off. 7/N

21.04.2025 07:15 — 👍 2 🔁 0 💬 1 📌 0

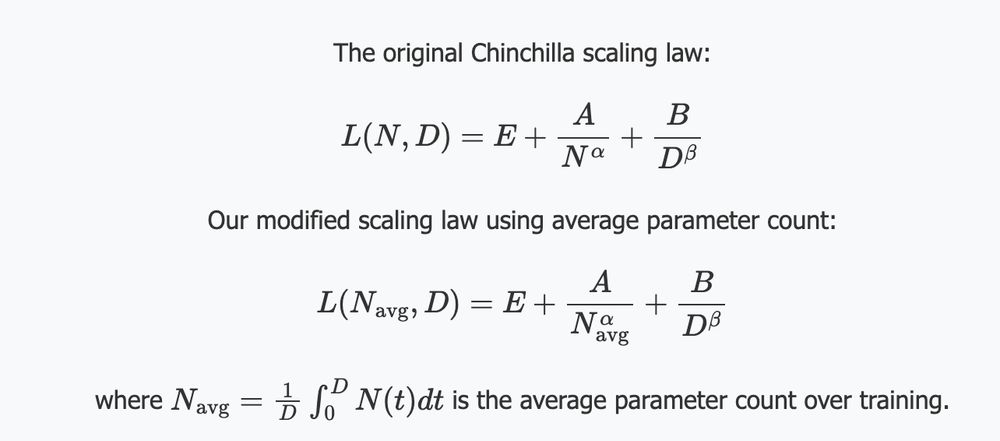

Our results validate this unified scaling law across 30 LLM pre-training runs, spanning models from 58M to 468M parameters with up to 80% final sparsity, trained on up to 20x the Chinchilla-optimal token budget. 6/N

21.04.2025 07:15 — 👍 1 🔁 0 💬 1 📌 0

Building off this observation, we extend the Chinchilla scaling law to account for both sparse/dense pre-training by using average param count during pre-training as the model size term. 5/N

21.04.2025 07:15 — 👍 0 🔁 0 💬 1 📌 0

Surprisingly, this pair of models achieve matching quality (eval ppl)! We tested this on models with 162M starting parameters and 20-80% final sparsity. The results are consistent: sparse and dense models with the same average param count reach the matching final eval loss. 4/N

21.04.2025 07:15 — 👍 1 🔁 0 💬 1 📌 0Consider two parameter count vs. training step curves, w/ an equivalent area under the curve, ie., training FLOPs. Solid line = dense pre-training, dashed line = sparse pre-training w/ gradual pruning. While they differ in final param count, they match in average param count. 3/N

21.04.2025 07:15 — 👍 0 🔁 0 💬 1 📌 0This is joint work with

Ahmed Imtiaz Humayun,

Utku Evci

@suvinay.bsky.social

Amir Yazdanbakhsh,

@dalistarh.bsky.social

@gkdziugaite.bsky.social

Project/Code/Models: sparsellm.com

Paper: arxiv.org/abs/2501.12486

Session: April 24 Poster Session #2 (Hall 3 + Hall 2B #342)

2/N

📣 The Journey Matters: Our #ICLR2025 paper shows how to pretrain sparse LLMs with half the size of dense LLMs while maintaining quality. We found that the average parameter count during sparse pre-training predicts quality, not final size. An MIT/Rice/Google/ISTA collab 🧵 1/N

21.04.2025 07:15 — 👍 5 🔁 0 💬 1 📌 3

Curious to learn more? Check out our paper! arxiv.org/abs/2502.11517

Joint work with @ellieyhc.bsky.social

@zackankner.bsky.social

Nikunj Saunshi,

Blake M. Elias,

Amir Yazdanbakhsh,

Jonathan Ragan-Kelley,

Suvinay Subramanian,

@mcarbin.bsky.social

Our work adds to an emerging line of work we call Asynchronous Decoding. Unlike synchronous (parallel) decoding (eg spec dec) that decodes one contiguous chunk in parallel, async dec decodes multiple non-contiguous chunks in parallel, letting LLMs jump ahead during decoding. 13/N

27.02.2025 00:38 — 👍 2 🔁 0 💬 1 📌 0To make this all work efficiently, we designed a high-performance interpreter that acts on Pasta-Lang annotations to orchestrate asynchronous decoding on-the-fly during LLM decoding. 12/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0The quality-speedup trade-off keeps improving with more training - showing no signs of saturation! We took 4 snapshots at different points of preference optimization (10% Round 1, 100% R1, 10% R2, 60% R2). As we train more, this trade-off improves toward the optimal top-right corner. 11/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0We show that PASTA Pareto-dominates all existing async decoding methods! We achieve geometric mean speedups ranging from 1.21× to 1.93× with corresponding quality changes of +2.2% to -7.1%, measured by length-controlled win rates against sequential decoding baseline. 10/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0We then use these scored examples for preference optimization - teaching the model to generate responses that are both fast and high quality. A quality weight hyperparameter λ lets us tune which aspect (quality vs speed) to prioritize more.

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0Stage 2: This is where it gets interesting! For each instruction prompt, we sample multiple Pasta-annotated responses and score them based on:

- Decoding latency (how fast and parallel is the decoding?)

- Response quality (evaluated by another LLM)

8/N

Stage 1: We first prompt the Gemini model to annotate instruction-following responses with Pasta-Lang. We then finetune our base LLM on this dataset to learn the basic syntax and semantics of Pasta-Lang annotations. 7/N

27.02.2025 00:38 — 👍 2 🔁 0 💬 1 📌 0How do we train LLMs to do this? Through a two-stage training process that requires less than 10 human annotations! 6/N

27.02.2025 00:38 — 👍 2 🔁 0 💬 1 📌 0<sync/> tag signals when subsequent decoding requires async decoded chunks. At this point, the interpreter pauses to wait for all async decoding to complete before proceeding, ensuring correctness when dependencies involving async decoded chunks exist. 5/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0To enable this, we introduce PASTA (PArallel STructure Annotation)-LANG tags and interpreter: <promise/> tags are placeholders for semantically independent chunks, and <async> tags wrap each such chunk, which the interpreter decodes asynchronously in parallel to each other. 4/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0We developed and evaluated a suite of such LLMs capable of asynchronously parallel decoding on a benchmark of 805 prompts from AlpacaEval. One shows 1.46x geometric mean speedup at a small quality drop of 1.3%, measured by length-controlled win rates. 3/N

27.02.2025 00:38 — 👍 2 🔁 0 💬 1 📌 0In the figure above, when computing a line segment's length, extracting coordinates and recalling the formula are two semantically independent chunks. Our system trains the LLM to identify this and decodes both chunks asynchronously in parallel, then sync for the final calculation! 2/N

27.02.2025 00:38 — 👍 1 🔁 0 💬 1 📌 0Excited to share our work with friends from MIT/Google on Learned Asynchronous Decoding! LLM responses often contain chunks of tokens that are semantically independent. What if we can train LLMs to identify such chunks and decode them in parallel, thereby speeding up inference? 1/N

27.02.2025 00:38 — 👍 16 🔁 9 💬 1 📌 1

Curious to read more? Check out our paper! arxiv.org/abs/2502.11517

Joint work with @ellieyhc.bsky.social

@zackankner.bsky.social

Nikunj Saunshi,

Blake M. Elias,

Amir Yazdanbakhsh,

Jonathan Ragan-Kelley,

Suvinay Subramanian,

@mcarbin.bsky.social

Our work adds to an emerging line of work we call Asynchronous Decoding. Unlike synchronous (parallel) decoding (e.g., spec dec) that decodes one contiguous chunk in parallel, async dec decodes multiple non-contiguous chunks in parallel, letting LLMs jump ahead during decoding. 12/N

26.02.2025 23:42 — 👍 0 🔁 0 💬 1 📌 0To make this all work efficiently, we designed a high-performance interpreter that acts on Pasta-Lang annotations to orchestrate asynchronous decoding on-the-fly. 11/N

26.02.2025 23:42 — 👍 0 🔁 0 💬 1 📌 0The quality-speedup trade-off keeps improving with more training - showing no signs of saturation! We took 4 snapshots at different points of preference optimization (10% Round 1, 100% R1, 10% R2, 60% R2). As we train more, this trade-off steadily improves toward the optimal top-right corner. 10/N

26.02.2025 23:42 — 👍 0 🔁 0 💬 1 📌 0