thats a wrap!

you can find the code here: github.com/maxxxzdn/erwin

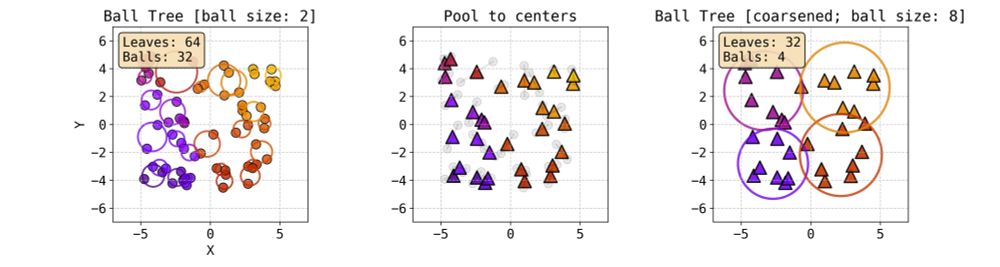

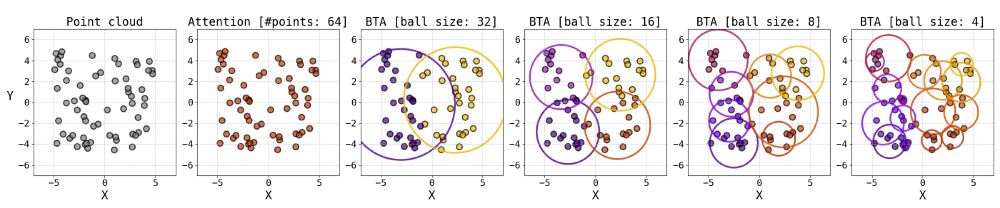

and here is a cute visualisation of Ball Tree building:

@maxxxzdn.bsky.social

PhD candidate at AMLab with Max Welling and Jan-Willem van de Meent. Research in physics-inspired and geometric deep learning.

thats a wrap!

you can find the code here: github.com/maxxxzdn/erwin

and here is a cute visualisation of Ball Tree building:

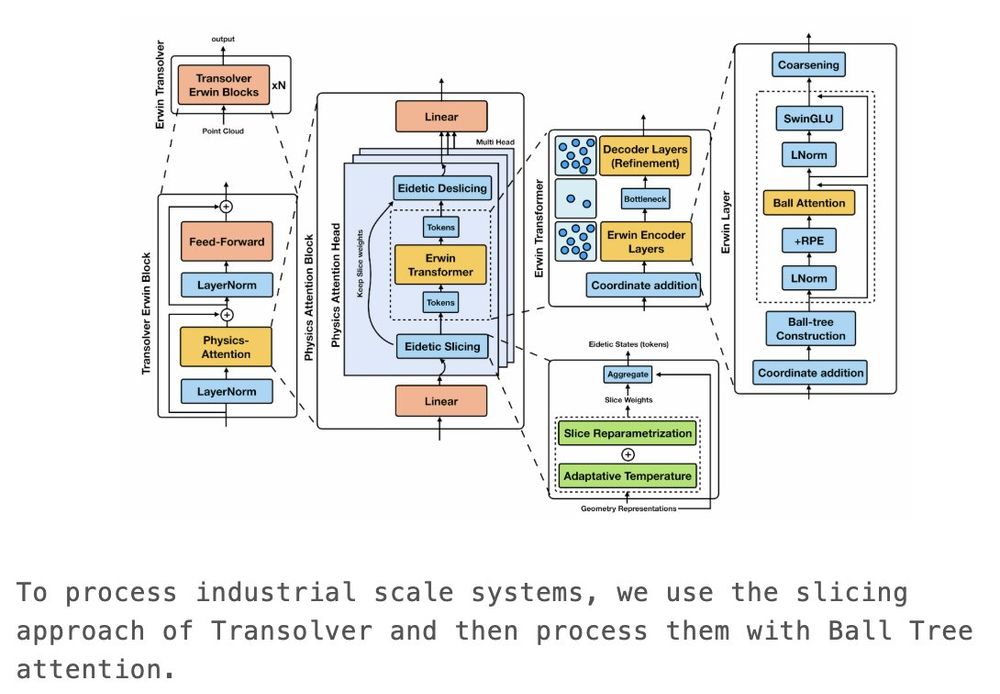

To scale Erwin further to gigantic industrial datasets, we combine Transolver and Erwin by applying ball tree attention over latent tokens.

The key insight is that by using Erwin, we can afford larger bottleneck sizes while maintaining efficiency.

Stay tuned for updates!

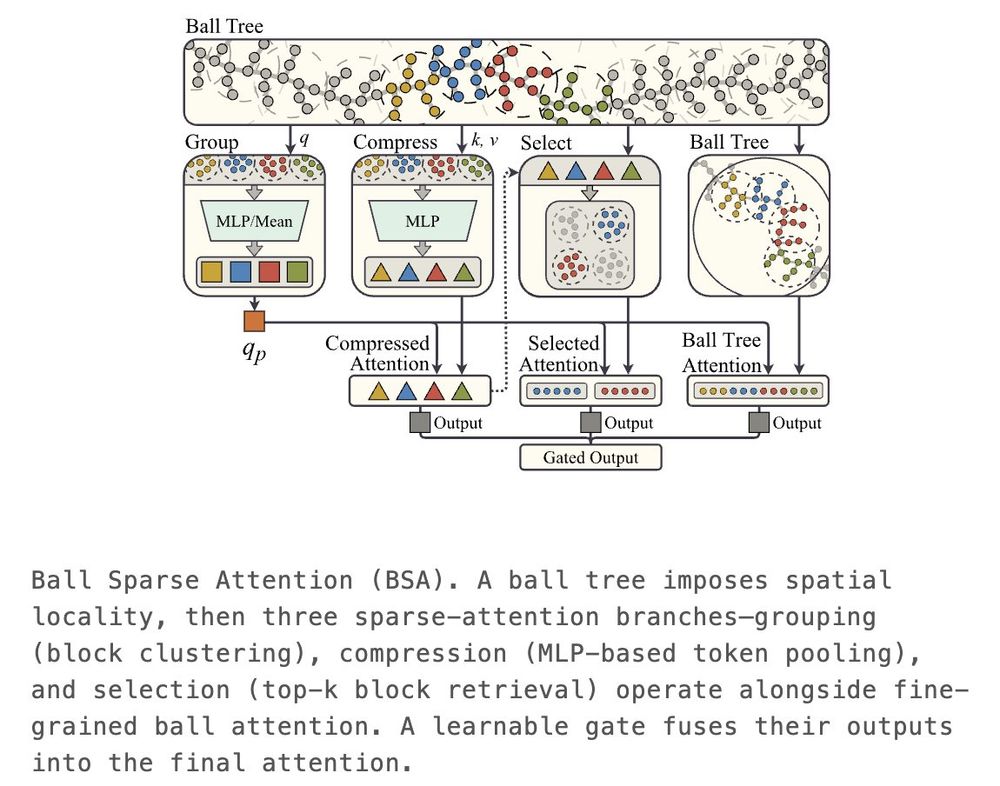

To improve the processing of long-range information while keeping the cost sub-quadratic, we combine the ball tree with Native Sparse Attention (NSA), which align naturally with each other.

Accepted to Long-Context Foundation Models at ICML 2025!

paper: arxiv.org/abs/2506.12541

There are many possible directions for building upon this work. I will highlight two on which I have been working together with my students.

21.06.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

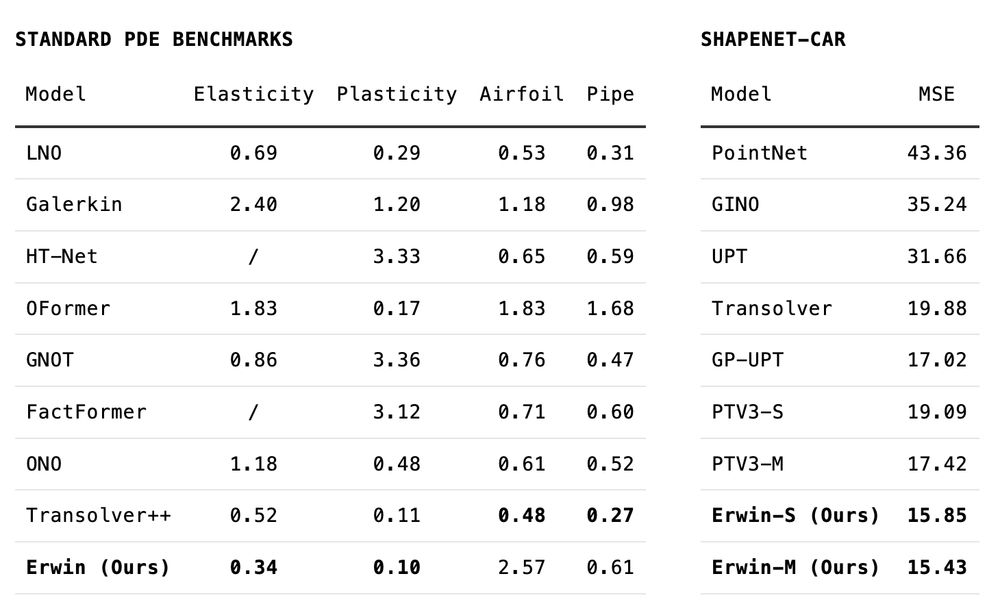

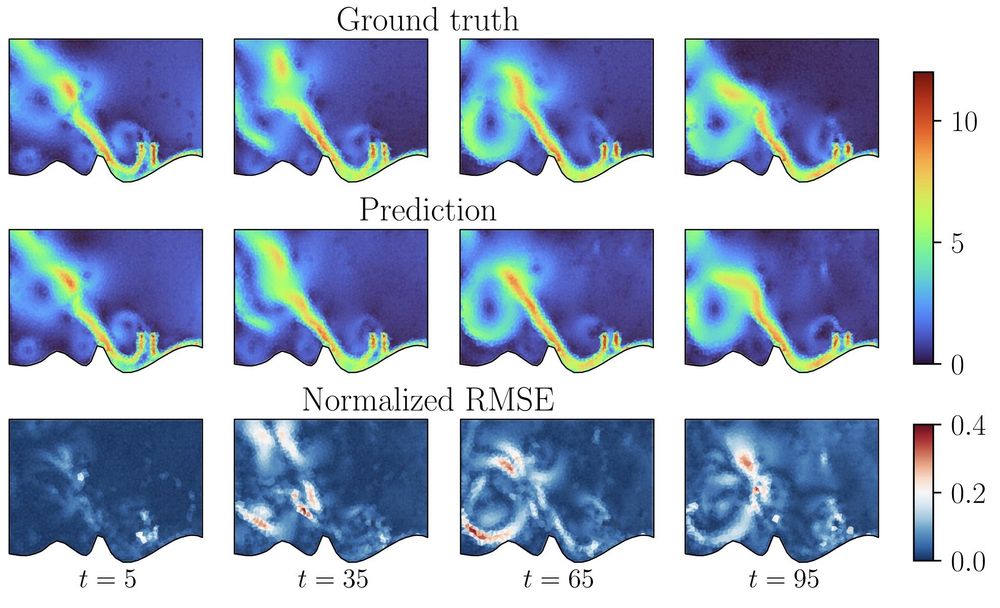

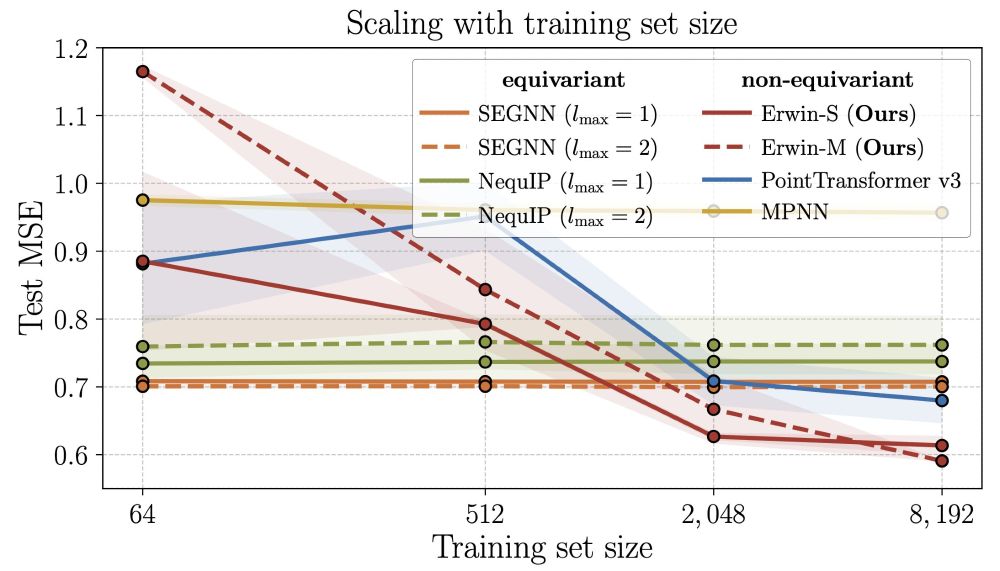

On top of the initial experiments in the first version of the paper, we include two more PDE-related benchmarks, where Erwin shows strong performance, achieving state-of-the-art on multiple tasks.

21.06.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

Original experiments: cosmology, molecular dynamics and turbulent fluid dynamics (EAGLE).

21.06.2025 16:22 — 👍 2 🔁 0 💬 1 📌 0

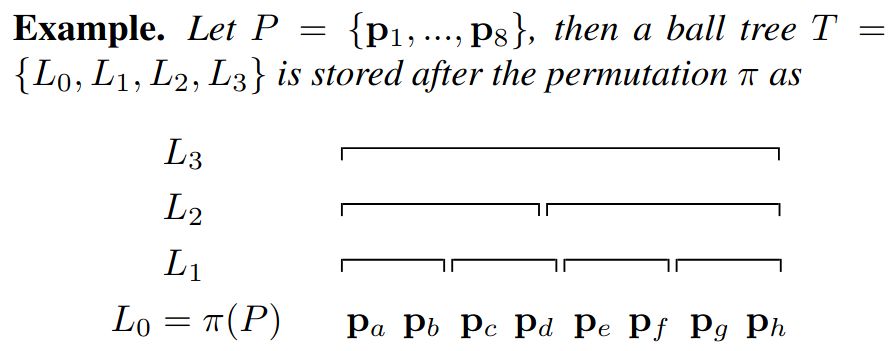

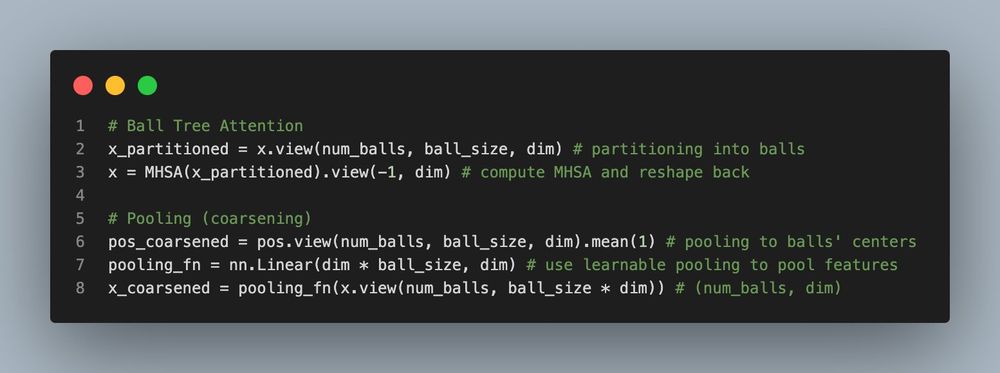

Ball tree representation comes with a huge advantage of contiguous memory layout, making all operations extremely simple and efficient.

21.06.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

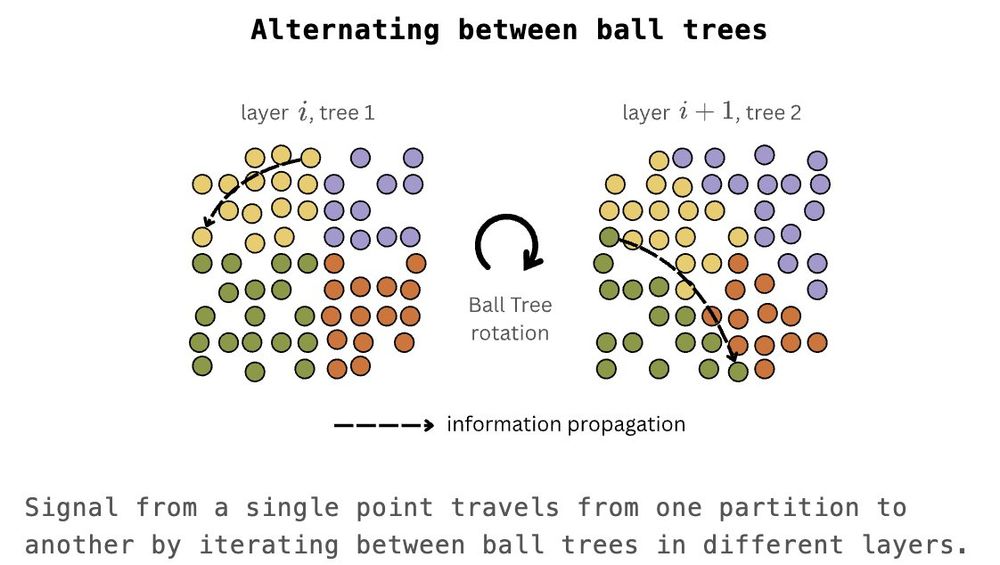

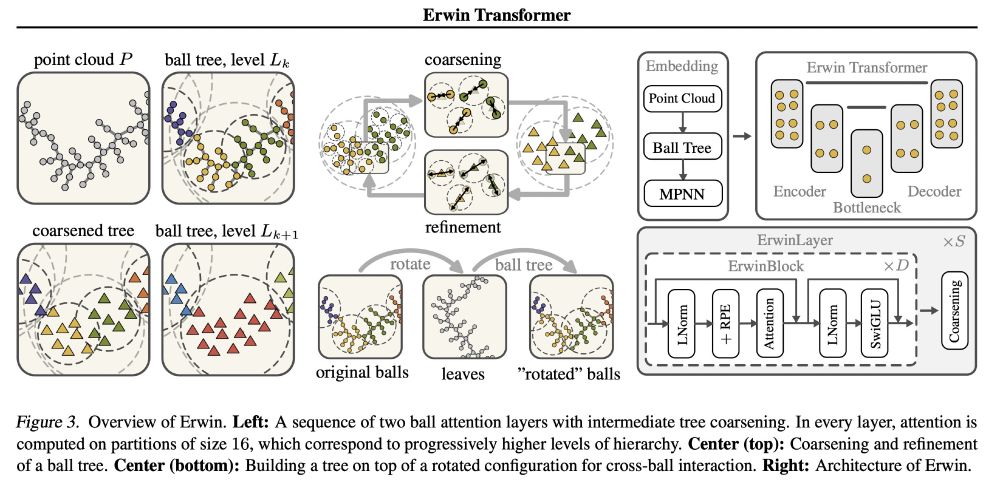

Implemented naively, however, the model will not be able to exchange information between partitions - and thus unable to capture global interactions.

To overcome this, we adapt the idea from the Swin Transformer, but instead of shifting windows, we rotate trees.

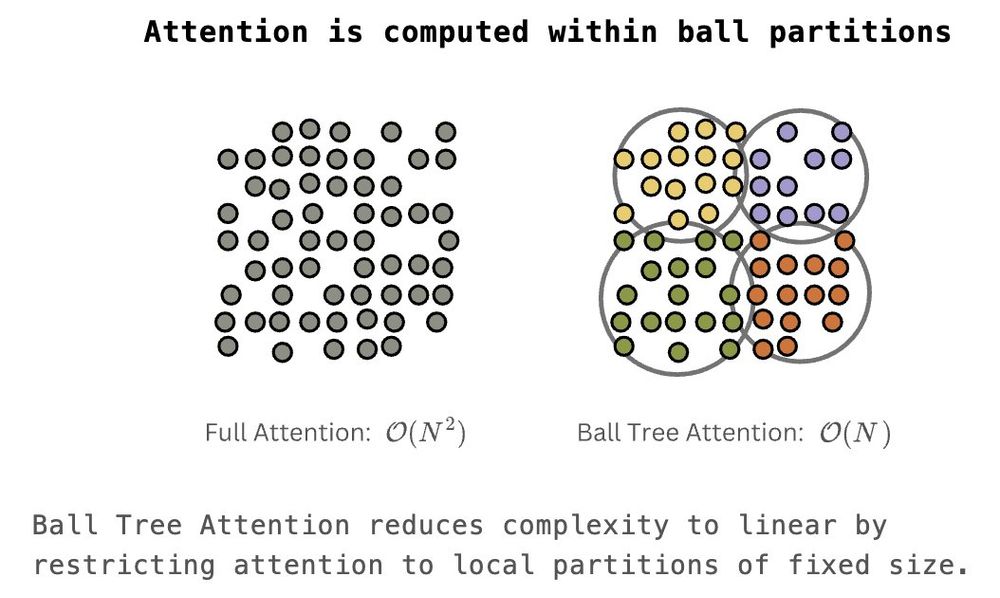

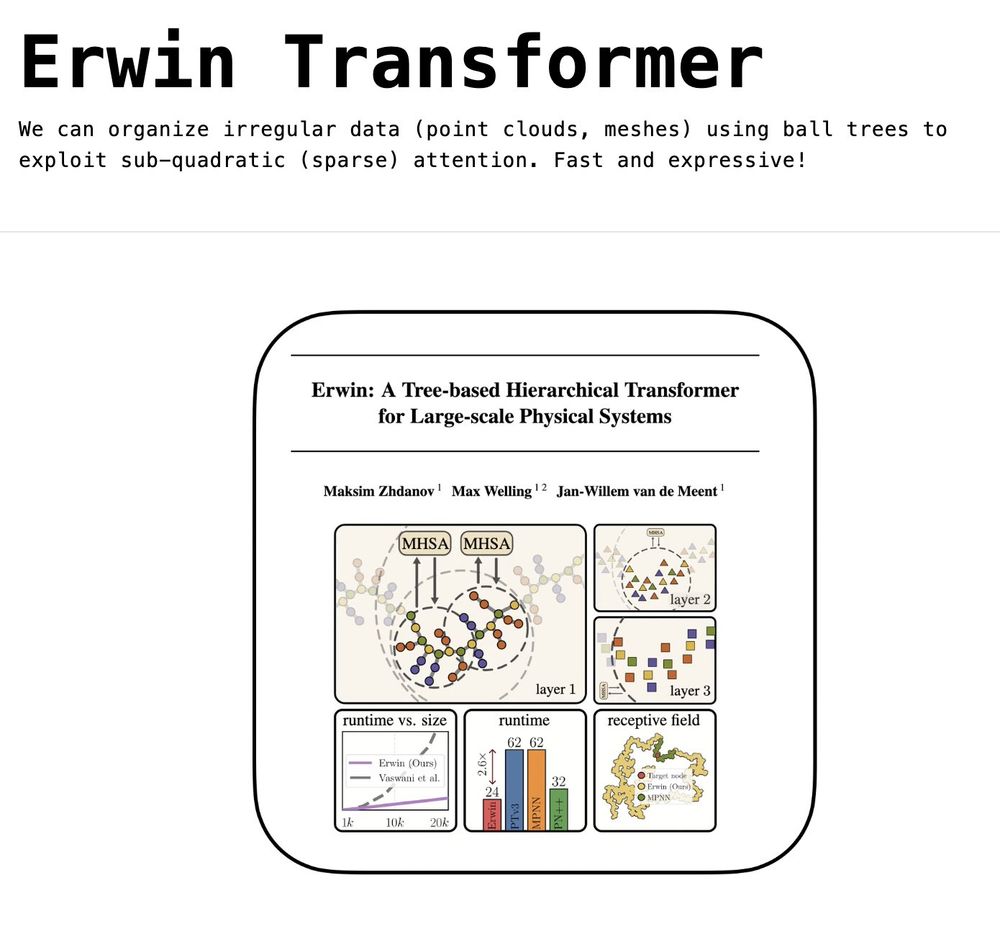

For that reason, we impose a regular structure onto irregular data via ball trees.

That allows us to restrict attention computation to local partitions, reducing the cost to linear.

Erwin follows the second approach as we believe that working at full resolution and the original representation should be done for as long as possible - let the data guide the model to decide which information to use and which to discard.

21.06.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

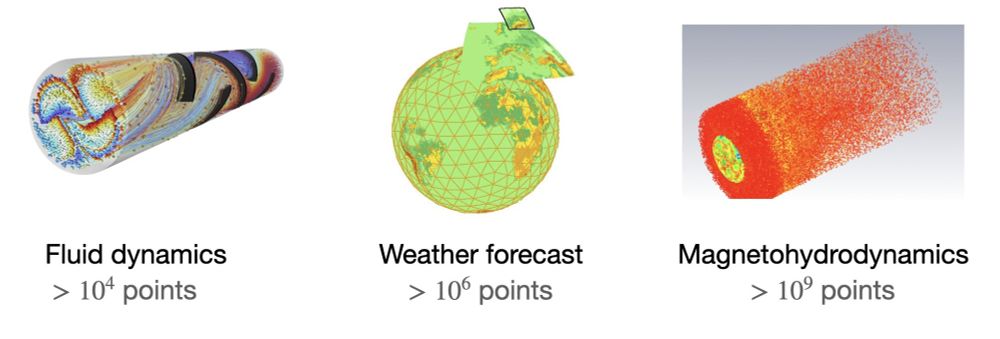

In the context of modeling large physical systems, this is critical, as the largest applications can include millions of points, which makes full attention unrealistic.

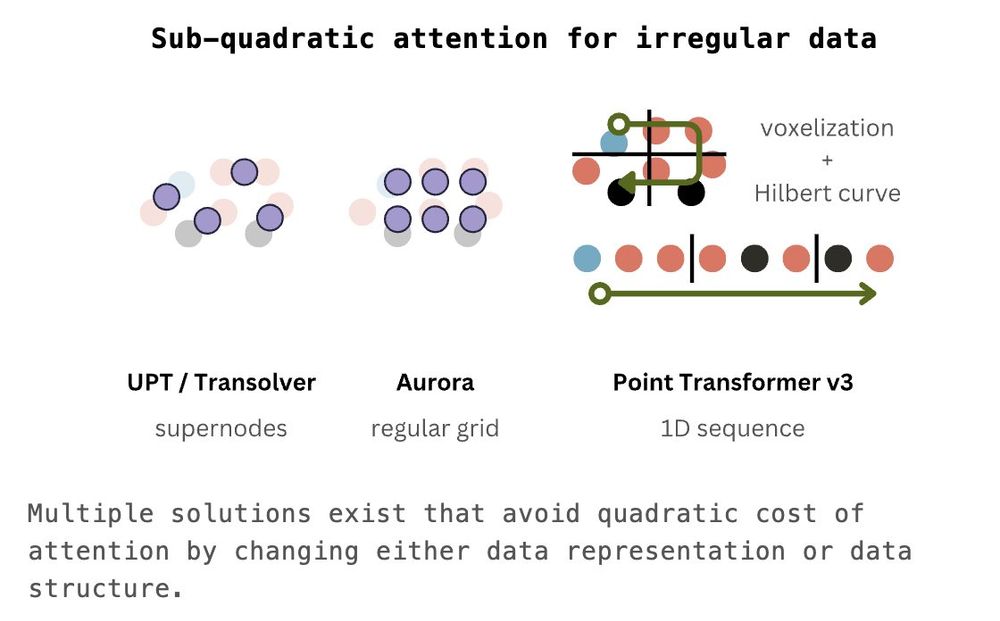

There are two ways: either change the data representation or the data structure.

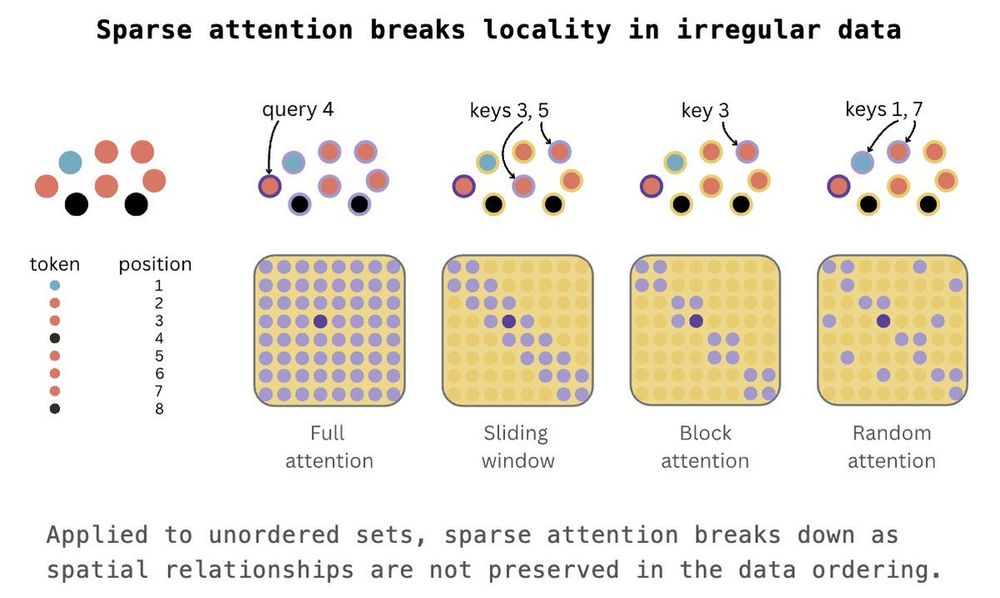

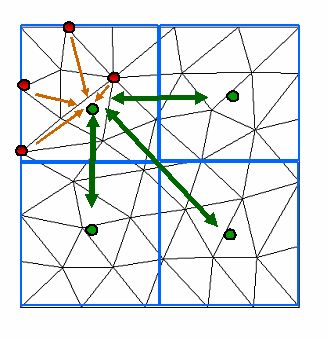

When it comes to irregular data, however, there is no natural ordering of points, hence standard sparse attention mechanisms break down as there is no guarantee that the locality they were based upon still exists.

21.06.2025 16:22 — 👍 1 🔁 0 💬 1 📌 0

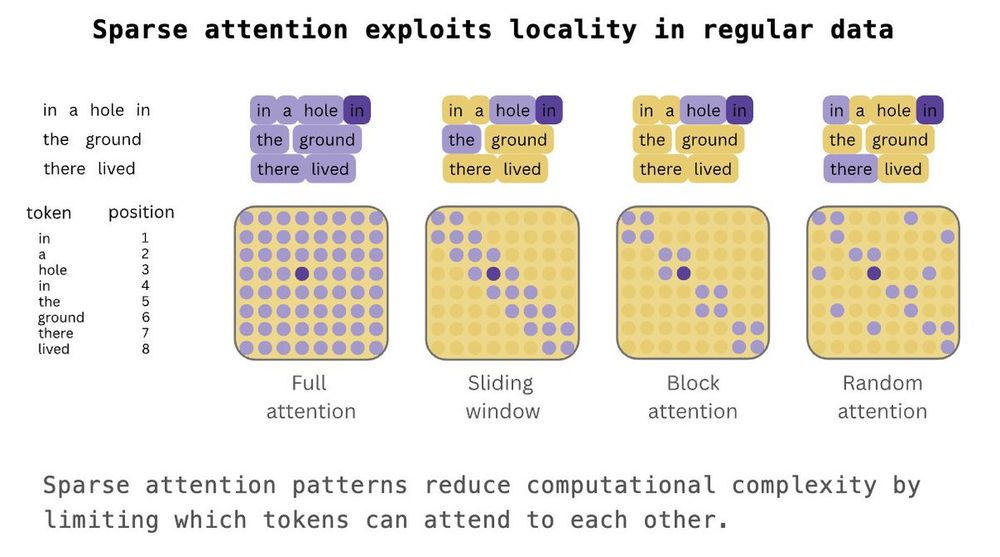

We start with sparse (sub-quadratic) attention coming in different flavors but all following the same idea - exploiting the regular structure of the data to model interactions between tokens.

21.06.2025 16:22 — 👍 2 🔁 0 💬 1 📌 0

🤹 New blog post!

I write about our recent work on using hierarchical trees to enable sparse attention over irregular data (point clouds, meshes) - Erwin Transformer, accepted to ICML 2025

blog: maxxxzdn.github.io/blog/erwin/

paper: arxiv.org/abs/2502.17019

Compressed version in the thread below:

Can only speak for my ICML reviewing batch, but the hack of putting scary, convoluted and wrong math still works.

25.03.2025 07:13 — 👍 2 🔁 0 💬 0 📌 0And that is a wrap! 🫔

We believe models like Erwin will enable the application of deep learning to physical tasks that handle large particle systems and where runtime was previously a bottleneck.

for details, see the preprint: arxiv.org/abs/2502.17019

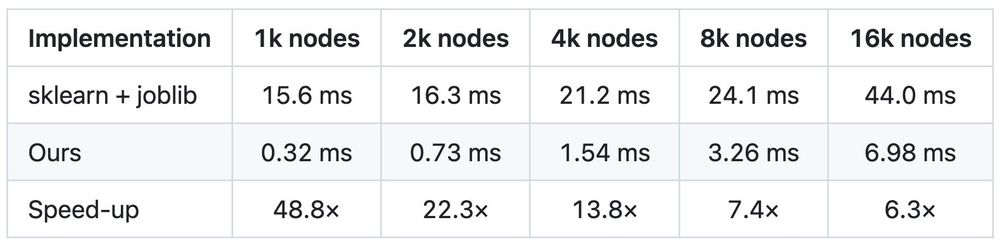

Bonus: to minimize the computational overhead of ball tree construction, we develop a fast, parallelized implementation in C++.

15/N

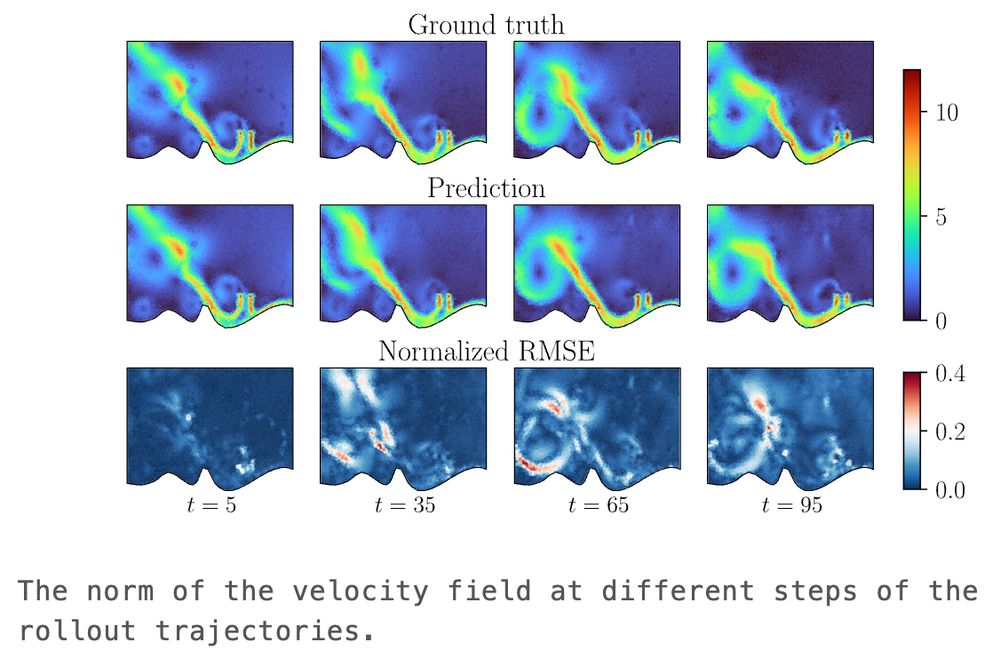

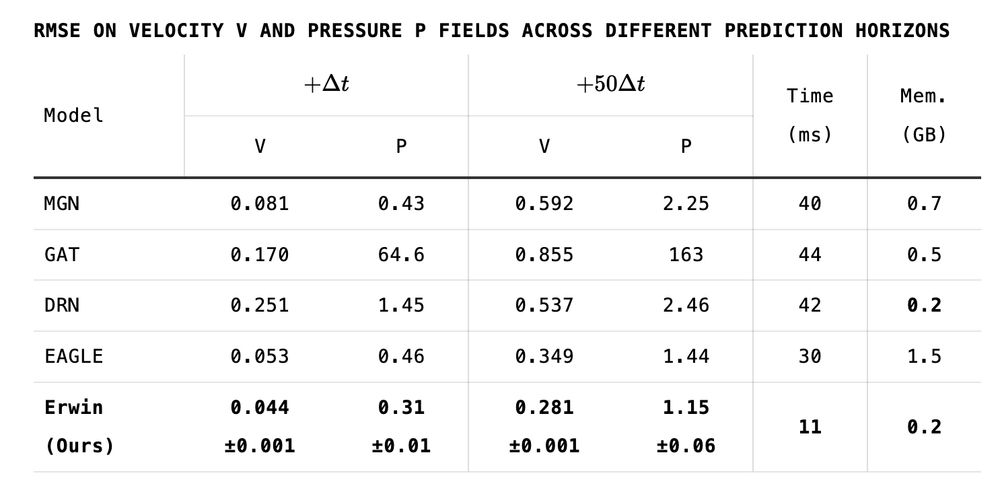

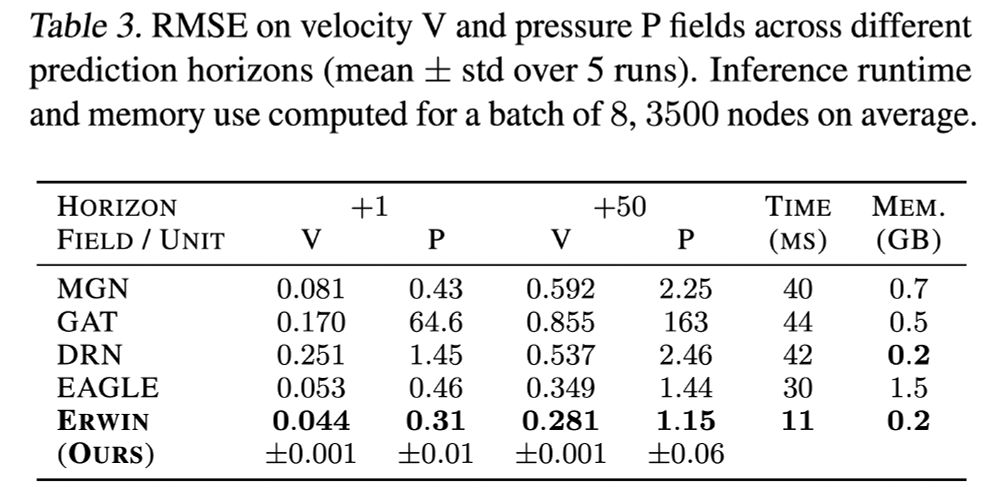

On the large-scale EAGLE benchmark (1M meshes, each with ~3500 nodes), we achieve SOTA performance in simulating unsteady fluid dynamics.

14/N

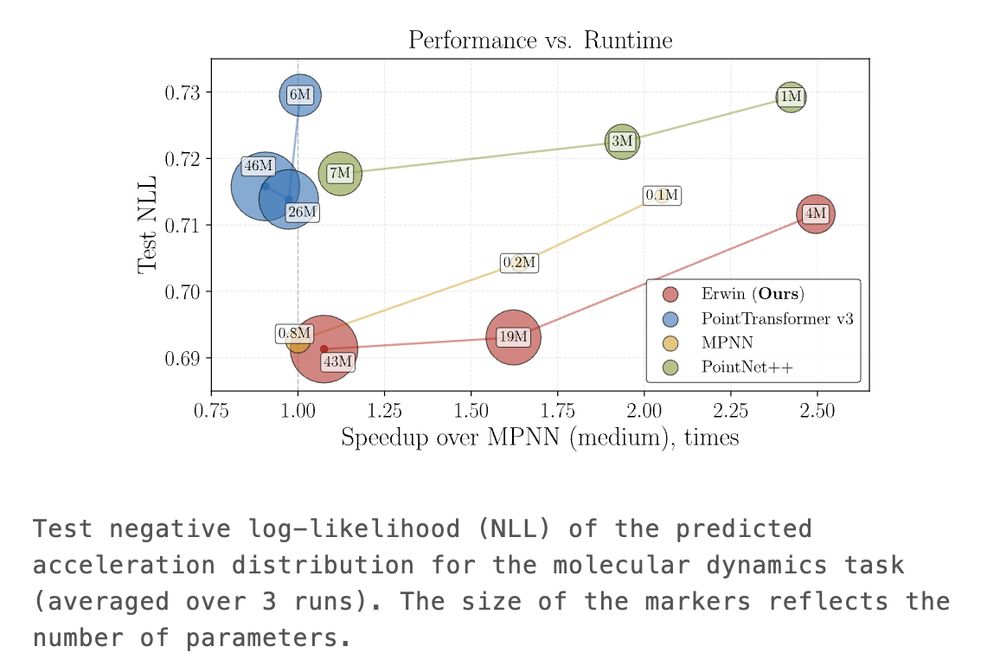

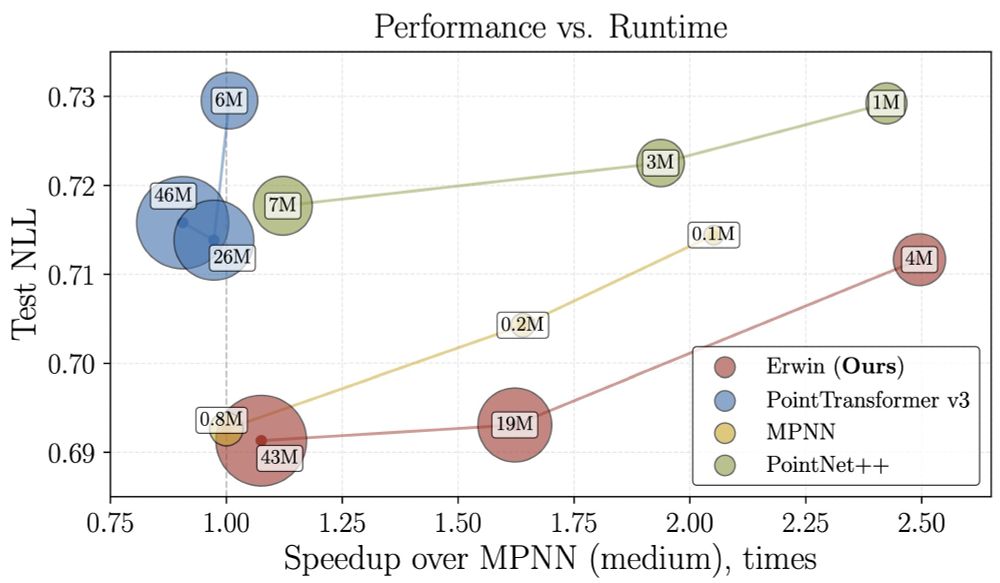

At the molecular dynamics task, Erwin pushes the Pareto frontier in performance vs runtime, proving to be a viable alternative to message-passing based architectures.

13/N

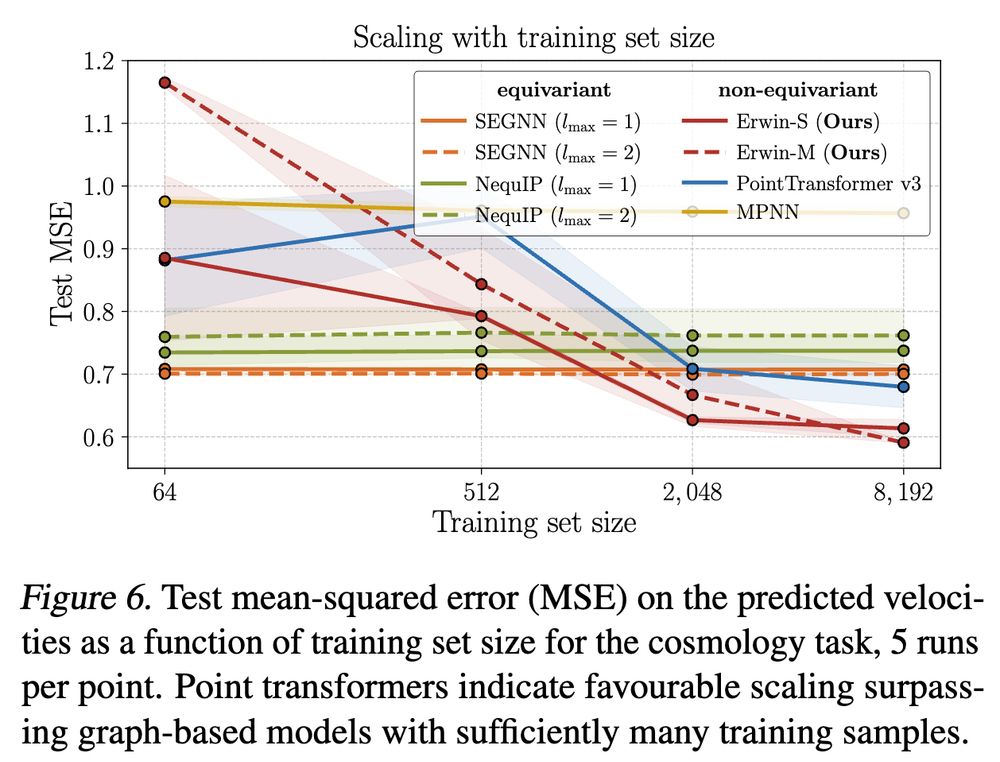

The cosmological data exhibits long-range dependencies. As each point cloud is relatively large (5,000 points), this poses a challenge for message-passing models. Erwin, on the other hand, is able to capture those effects.

12/N

We validated Erwin's performance on a variety of large-scale tasks, including:

- cosmology (5k nodes per data point)

- molecular dynamics (~1k nodes per data point)

- turbulent fluid dynamics (~3.5k nodes per data point)

11/N

Due to the simplicity of implementation, Erwin is blazing fast. For a batch of 16 point clouds, 4096 points each, it only takes ~30 ms to compute the forward pass!

10/N

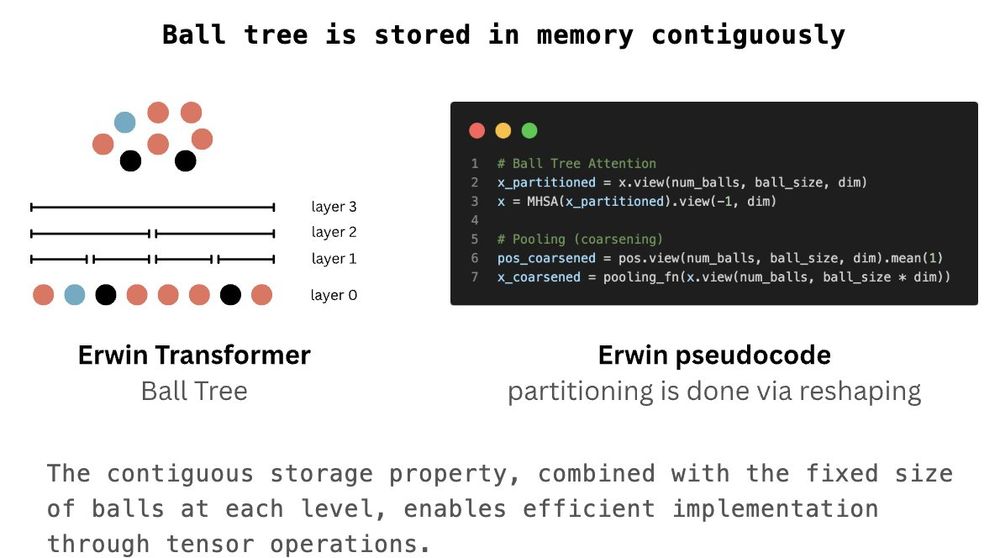

The ball tree is stored in memory contiguously - at each level of the tree, points in the same ball are stored next to each other.

This property is critical and allows us to implement the key operations described above simply via .view() or .mean()!

9/N

As Ball Tree Attention is local, we progressively coarsen and then refine the ball tree while keeping the ball size for attention fixed, following a U-Net-like architecture.

This allows us to learn multi-scale features and effectively increase the model's receptive field.

8/N

The main idea of the paper is to compute attention within the ball tree partitions.

Once the tree is built, one can choose the level of the tree and compute attention (Ball Tree Attention, BTA) within the balls in parallel.

7/N

Erwin organizes the computation via a ball tree - a hierarchical data structure that recursively partitions points into nested sets of similar size, where each set is represented by a ball that covers all the points in the set.

6/N

In this paper, we combine the scalability of tree-based algorithms and the efficiency of attention to build a transformer that:

- scales linearly with the number of points;

- captures global dependencies in data through hierarchical learning across multiple scales.

5/N

While highly successful in numerical simulations, tree-based algorithms synergize poorly with GPUs.

On the other hand, attention is highly hardware-optimized, yet it suffers from the problem tree-based algorithms solve - quadratic cost of all-to-all computations.

4/N

The problem was studied extensively in the 1980s in many-body physics.

To avoid the brute-force computation, tree-based algorithms were introduced that hierarchically organize particles, and the resolution of interactions depends on the distance between particles.

3/N

Physical systems with complex geometries are often represented using irregular meshes or point clouds.

These systems often exhibit long-range effects that are computationally challenging to model, as calculating all-to-all interactions scales quadratically with node count.

2/N