(1/n) 🚨 Train a model that solves DFT for any geometry with almost no training data

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

10.06.2025 19:49 — 👍 12 🔁 4 💬 1 📌 1

David is disrupting everyone's NeurIPS grind by putting out amazing work, such a dirty trick!

02.05.2025 17:18 — 👍 7 🔁 1 💬 0 📌 0

Renormalisation is a central concept in modern physics. It describes how the dynamics of a system change at different scales. A great way to understand and visualise renormalisation is the Ising model

(some math, but one can follow without it)

1/13

21.04.2025 07:29 — 👍 194 🔁 37 💬 4 📌 2
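
For readers who want something concrete to play with, here is a minimal sketch of one renormalisation step on a 2D Ising configuration. It assumes the standard block-spin picture with a majority rule over 3x3 blocks, which may not be the exact construction used in the thread. The helper names `random_spins` and `block_spin` are made up for this illustration.

```python
import random


def random_spins(size, seed=0):
    """Return a size x size lattice of independent +1/-1 spins (illustrative only)."""
    rng = random.Random(seed)
    return [[rng.choice((-1, 1)) for _ in range(size)] for _ in range(size)]


def block_spin(lattice, b=3):
    """Coarse-grain a square lattice by majority rule over b x b blocks.

    An odd b guarantees that the block sum is never zero, so no tie-breaking is needed.
    """
    n = len(lattice)
    if b % 2 == 0 or n % b != 0:
        raise ValueError("b must be odd and must divide the lattice size")
    coarse = []
    for i in range(0, n, b):
        row = []
        for j in range(0, n, b):
            total = sum(lattice[i + di][j + dj] for di in range(b) for dj in range(b))
            row.append(1 if total > 0 else -1)
        coarse.append(row)
    return coarse


if __name__ == "__main__":
    spins = random_spins(27)
    once = block_spin(spins)   # 27x27 -> 9x9
    twice = block_spin(once)   # 9x9 -> 3x3
    print(len(spins), len(once), len(twice))
```

Each call looks at the system at a scale three times coarser. Applying it repeatedly to configurations sampled at different temperatures is the usual way to visualise how the effective description changes with scale.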

Every image was generated using SuperDiff for SDXL with two different prompts. Now, what are the prompts?🤔

06.03.2025 21:06 — 👍 0 🔁 0 💬 1 📌 0

SuperDiff goes super big!

- Spotlight at #ICLR2025!🥳

- Stable Diffusion XL pipeline on HuggingFace huggingface.co/superdiff/su... made by Viktor Ohanesian

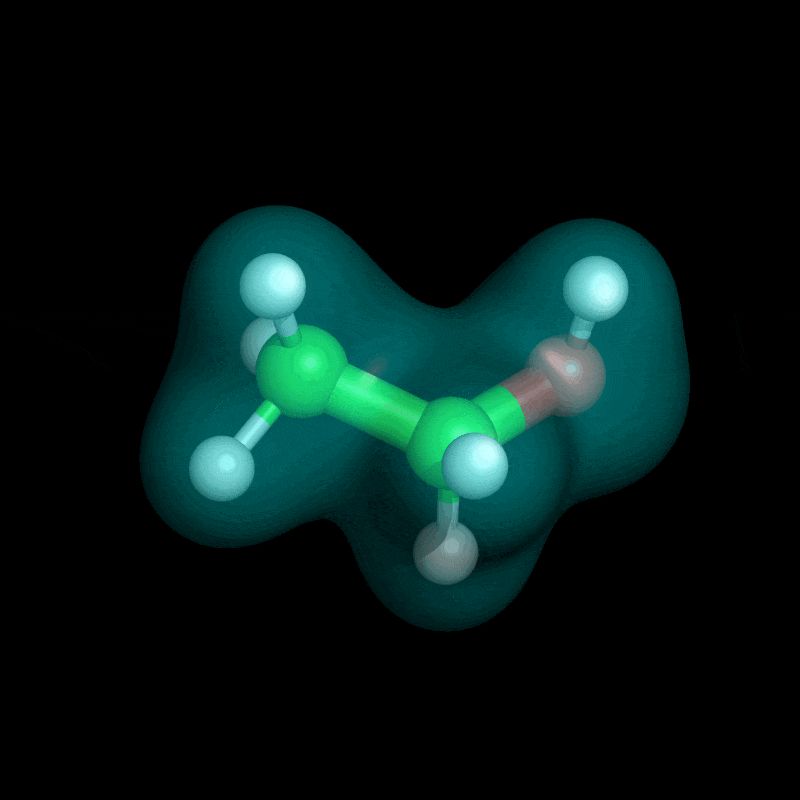

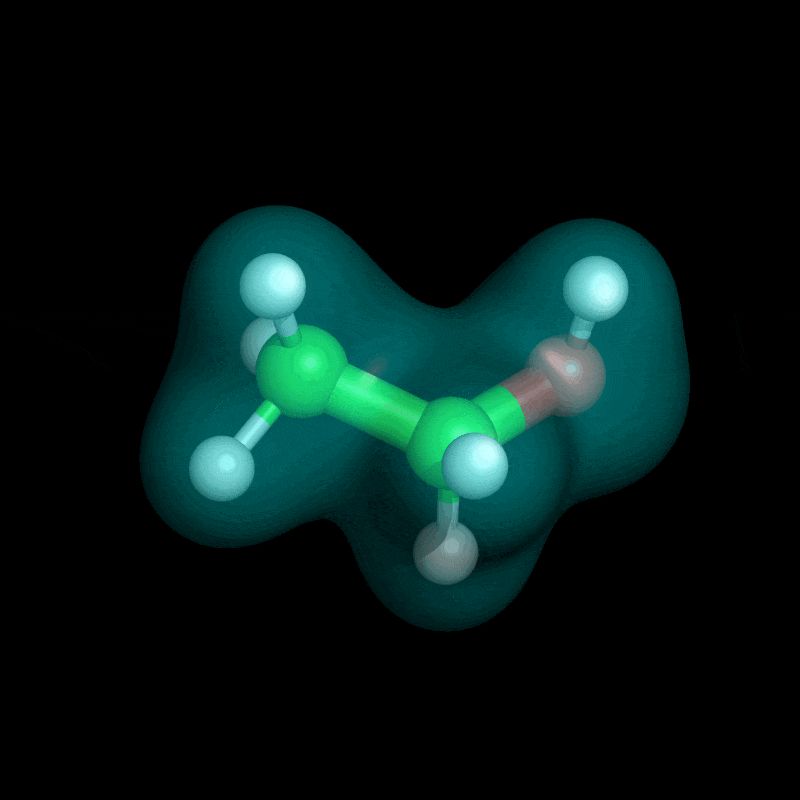

- New results for molecules in the camera-ready arxiv.org/abs/2412.17762

Let's celebrate with a prompt guessing game in the thread👇

06.03.2025 21:06 — 👍 14 🔁 4 💬 1 📌 1

We've been sharing these projects during the year, but today, they have been accepted at #ICLR2025 (1-3) and #AISTATS2025 (4)

22.01.2025 17:58 — 👍 6 🔁 1 💬 1 📌 0

🧵(7/7) The main result that unlocks all these possibilities is our new Itô density estimator, an efficient way to estimate the density of the generated samples for an already-trained diffusion model (assuming that we know the score). It does not require any extra computations, just the forward pass!

28.12.2024 14:32 — 👍 3 🔁 1 💬 0 📌 0

🧵(6/7) We try out SuperDiff on generating images with #StableDiffusion by superimposing two prompts so that the image satisfies both. Ever wondered what a waffle cone would look like if it doubled as a volcano? Check out our paper! You’ll find marvellous new creatures in there such as an otter-duck

28.12.2024 14:32 — 👍 1 🔁 0 💬 1 📌 0

🧵(5/7) We test our model for unconditional de novo protein generation, where we superimpose two diffusion models: Proteus generates more designable and novel proteins, while FrameDiff generates more diverse proteins. SuperDiff combines them to generate designable and novel and diverse proteins!

28.12.2024 14:32 — 👍 4 🔁 0 💬 1 📌 0

🧵(4/7) Here’s a 2D example for intuition: given two already trained models, we combine their outputs (vector fields) based on estimated densities, allowing us to generate samples from all modes (e.g. for continual learning) or from the surface of equal densities (e.g. for concept interpolation).

28.12.2024 14:32 — 👍 3 🔁 0 💬 1 📌 0
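
A toy illustration of the "combine vector fields based on densities" idea, for the mixture (logical OR) case only. This is not the SuperDiff algorithm: it assumes two 1D Gaussian "models" whose densities and scores are known in closed form, whereas the paper estimates densities along the sampling trajectory. It only uses the identity that the score of a mixture is a density-weighted average of the component scores. All function names are hypothetical.

```python
import math


def gaussian_logpdf(x, mean, std):
    """Log-density of a 1D Gaussian N(mean, std^2) at x."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std) - 0.5 * math.log(2.0 * math.pi)


def gaussian_score(x, mean, std):
    """Score d/dx log N(x; mean, std^2)."""
    return -(x - mean) / (std * std)


def mixture_score(x, components, weights):
    """Score of sum_i w_i p_i(x), computed as a density-weighted average of scores.

    components: list of (mean, std) pairs, weights: positive mixture weights.
    """
    log_terms = [
        math.log(w) + gaussian_logpdf(x, m, s)
        for w, (m, s) in zip(weights, components)
    ]
    top = max(log_terms)  # subtract the max for numerical stability
    unnorm = [math.exp(t - top) for t in log_terms]
    total = sum(unnorm)
    resp = [u / total for u in unnorm]  # relative density of each model at x
    return sum(r * gaussian_score(x, m, s) for r, (m, s) in zip(resp, components))


if __name__ == "__main__":
    models = [(-2.0, 1.0), (2.0, 1.0)]
    for x in (-3.0, 0.0, 3.0):
        print(x, mixture_score(x, models, [0.5, 0.5]))
```

Near one mode the combined field follows the model that assigns higher density there, and between the modes the two fields are blended, which is why sampling with the combined field can reach all modes.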

The Superposition of Diffusion Models Using the Itô Density Estimator

The Cambrian explosion of easily accessible pre-trained diffusion models suggests a demand for methods that combine multiple different pre-trained diffusion models without incurring the significant co...

🧵(2/7) We provide a new approach for estimating density without touching the divergence. This gives us the control to easily interpolate concepts (logical AND) or mix densities (logical OR), allowing us to create one-of-a-kind generations! ⚡🌀🤗

28.12.2024 14:32 — 👍 2 🔁 1 💬 1 📌 0

🧵(1/7) Have you ever wanted to combine different pre-trained diffusion models but don't have time or data to retrain a new, bigger model?

🚀 Introducing SuperDiff 🦹‍♀️ – a principled method for efficiently combining multiple pre-trained diffusion models solely during inference!

28.12.2024 14:32 — 👍 44 🔁 7 💬 1 📌 4

Steering towards safe self-driving laboratories

The past decade has witnessed remarkable advancements in autonomous systems, such as automobiles that are evolving from traditional vehicles to ones capable of navigating complex environments without ...

I am excited to share a perspective on the much-needed topic of #safety for #selfdrivinglaboratories. As the field progresses, understanding the challenges and gaps in building safe setups will be crucial for scaling up this technology!

doi.org/10.26434/che...

23.12.2024 17:39 — 👍 34 🔁 9 💬 3 📌 0

With some delay, JetFormer's *prequel* paper is finally out on arXiv: a radically simple ViT-based normalizing flow (NF) model that achieves SOTA results in its class.

Jet is one of the key components of JetFormer, deserving a standalone report. Let's unpack: 🧵⬇️

20.12.2024 14:39 — 👍 42 🔁 7 💬 2 📌 1

Come join us in Singapore at #ICLR2025 to discuss the latest developments wherever Learning meets Sampling!

18.12.2024 19:10 — 👍 10 🔁 1 💬 0 📌 0

We're presenting our spotlight paper on transition path sampling at #NeurIPS2024 this week! Learn how to speed up conventional Monte Carlo approaches by orders of magnitude.

Wed 11 Dec 4:30 pm #2606

arxiv.org/abs/2410.07974

first authors = {Yuanqi Du, Michael Plainer, @brekelmaniac.bsky.social}

10.12.2024 02:05 — 👍 16 🔁 4 💬 0 📌 0

this picture provides a great comparison with other methods

27.11.2024 20:45 — 👍 0 🔁 0 💬 0 📌 0

so happy to see that Action Matching finds applications in physics, outperforming diffusion models and Flow Matching!

wonderful work by Jules Berman, Tobias Blickhan, and Benjamin Peherstorfer!

arxiv.org/abs/2410.12000

27.11.2024 20:40 — 👍 11 🔁 2 💬 1 📌 0

Professor of Theoretical Chemistry @sorbonne-universite.fr & Head @lct-umr7616.bsky.social| Guest Faculty @ Argonne| Co-Founder & CSO @qubit-pharma.bsky.social (My Views) |

https://piquemalresearch.com | https://tinker-hp.org

Postdoc @vectorinstitute.ai | organizer @queerinai.com | previously MIT, CMU LTI | 🐀 rodent enthusiast | she/they

🌐 https://ryskina.github.io/

https://malkin1729.github.io/ / Edinburgh, Scotland / they≥she>he≥0

Mathematician/informatician thinking probabilistically, expecting the same of you.

‘Tis categories in the mind and guns in their hands which keep us enslaved.

Assistant professor in Data Science and AI at Chalmers University of Technology | PI: AI lab for Molecular Engineering (AIME) | ailab.bio | rociomer.github.io

A computational quantum physics postdoc at ETH Zurich. I love coding, math tricks and fancy dinners. Enthusiast procrastinator.

AI Scientist at Xaira Therapeutics & PhD student at Mila

PhD candidate at AMLab with Max Welling and Jan-Willem van de Meent.

Research in physics-inspired and geometric deep learning.

Principal Research Scientist at NVIDIA | Former Physicist | Deep Generative Learning | https://karstenkreis.github.io/

Opinions are my own.

Statistical mechanic working on generative models for biophysics and beyond. Assistant professor at Stanford. https://statmech.stanford.edu

Research scientist, Google DeepMind

Research director | @McGillU @Mila_Quebec @IVADO_Qc | My team designs machine learning frameworks to understand biological systems from new angles of attack

machine learning researcher @ Apple machine learning research

Lecturer in Maths & Stats at Bristol. Interested in probabilistic + numerical computation, statistical modelling + inference. (he / him).

Homepage: https://sites.google.com/view/sp-monte-carlo

Seminar: https://sites.google.com/view/monte-carlo-semina

junior fellow at @Harvard.edu, incoming prof at @HSEAS and @Kempnerinstitute.bsky.social studying machine learning and its applications to nature and the sciences

messing up with gaussians

MIT PhD Student - ML for biomolecules - https://hannes-stark.com/

PhD student at Mila | Diffusion models and reinforcement learning 🧐 | hyperpotatoneo.github.io

Incoming Assistant Prof, University of Alberta. Research Fellow at Amii. Currently a postdoctoral fellow at the Broad Institute.
