Gemini 2.5 Pro is very solid all around. Claude is better at coding. Last place, for most tasks: ChatGPT-5. However, I have actually been quite impressed with Chinese open-weight models such as Qwen3.

30.09.2025 19:07

I have been incredibly fortunate to learn from both my amazing coauthors and a fantastic set of HBS alumni. This work will be foundational for more forthcoming research (by others and by me) on how AI will affect the process of making business strategy going forward.

30.09.2025 15:26

What is more, we establish causal evidence that business education has a persistent impact on CEOs' strategy processes, even decades later. (So yes, business education does actually matter.)

30.09.2025 15:26

Our basic result is that strategic decision-making styles vary widely across CEOs, and that CEOs with more structured practices (proactive, consistent, and hypothesis-driven) tend to outperform their less-structured peers, especially in industries with high degrees of strategic complexity.

30.09.2025 15:25

How Do Chief Executive Officers Make Strategy? | Management Science

Very happy to see this paper in (online) print. Together with Michael Christensen, @raffasadun.bsky.social, @nickbloom.bsky.social, and Jan Rivkin, we interviewed hundreds of CEOs to measure how they make business strategy.

pubsonline.informs.org/doi/10.1287/...

30.09.2025 15:25

Yes, the idea that LLMs pay uniform attention to everything in a paper (or are "better at paying attention than humans") is a widely believed myth.

21.09.2025 20:59

Financial crisis? "According to Moody’s, structured finance has become a popular way to pay for new data center projects, with more than $9 billion of issuance in the commercial mortgage-backed security and asset-backed security markets during the first four months of 2025." (Ian Frisch, NYTimes)

21.09.2025 01:43

How the AI Boom Is Leaving Consultants Behind

Consultants have a lot to gain helping companies deploy the most transformative technology in decades. Some clients say so far they have overpromised and underdelivered.

"Accenture, (...), in its most recent quarter reported a $100 million increase in new generative AI bookings quarter over quarter. That is down from a $200 million quarter-over-quarter increase the previous two periods."

www.wsj.com/articles/how...

15.09.2025 20:21

Your next airline ticket could be priced by AI

Delta is testing an AI-powered pricing system that could charge two travelers different fares even if they are purchasing at the same moment. Pricing strategy

PSA if you travel to SMS-SF: I used the SMS-provided promo code on United and compared it with exactly the same flight without the promo code. The code made my airfare $40 MORE expensive. Nice anecdote for price discrimination or business ethics?

www.colorado.edu/today/2025/0...

15.09.2025 16:43

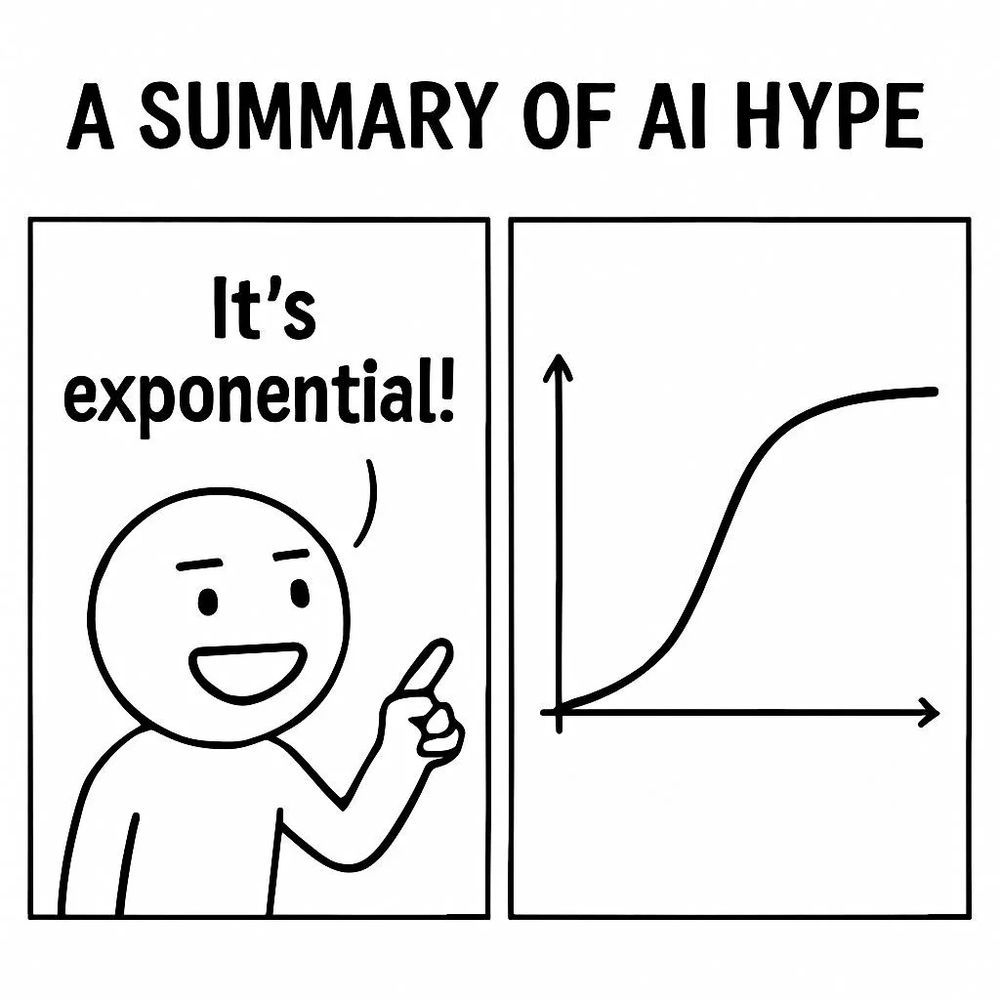

Faith in God-like large language models is waning

That may be good news for AI laggards like Apple

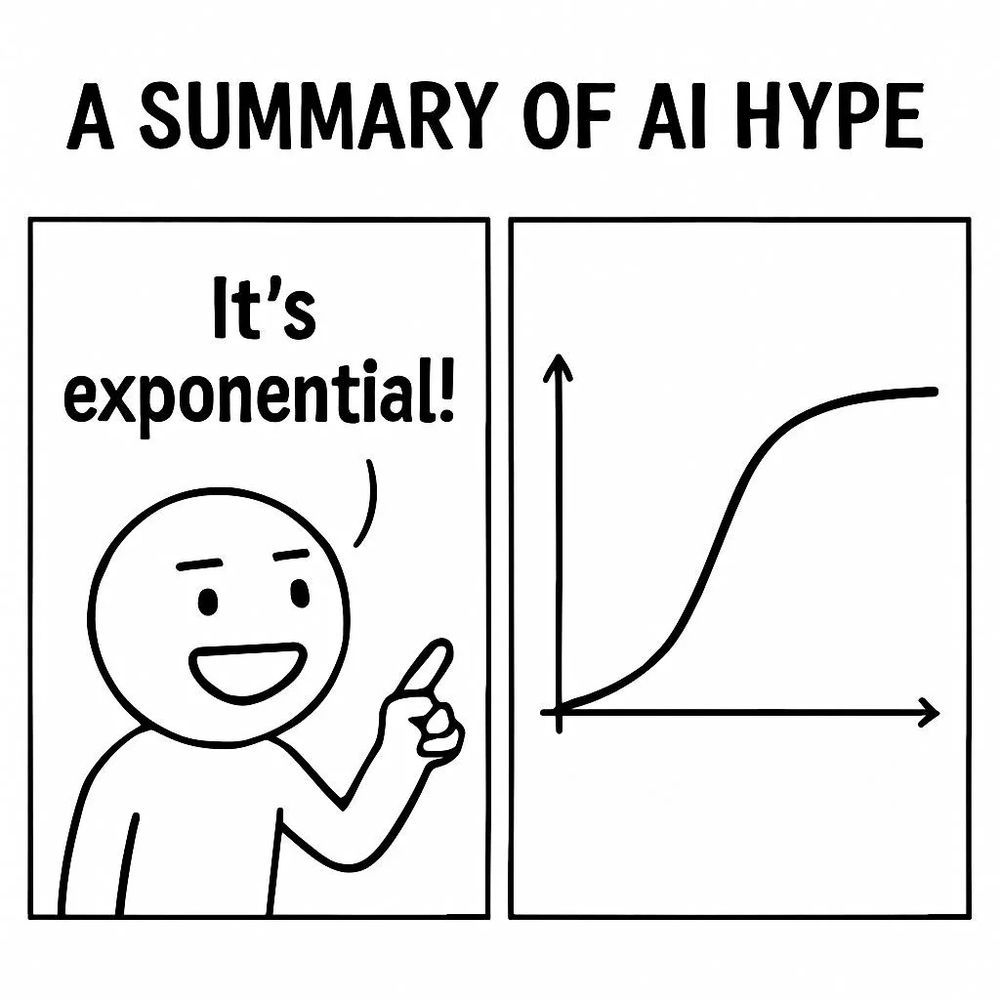

The LLM turning point is coming (i.e., we may be close to the "peak of inflated expectations" in the Hype Cycle).

www.economist.com/business/202...

10.09.2025 18:42

Have we learned nothing from conflicts of interest during the subprime housing crisis? "Both authors have a financial interest in www.expectedparrot.com. Horton is an economic advisor to Anthropic."

06.09.2025 19:09

Excited to have my research (co-authored with the dream team of @mjyang.bsky.social, Zachary Kroff, and @erikbryn.bsky.social) featured in the New Yorker!

27.08.2025 01:17

Sam Altman says ‘yes,’ AI is in a bubble

Is the bubble about to burst?

Sam Altman seems to think "yes," AI is in a bubble? Yet he wants to let OpenAI employees convert stock to cash at an implied valuation of $500 billion (with a B!)? What happened to getting "PhD-level" ChatGPT-5 expertise... (checks notes)... two weeks ago?!

www.theverge.com/ai-artificia...

20.08.2025 14:59

There is an... interesting op-ed in today's WSJ from a 22-year-old entrepreneur telling everyone that work-life balance will "keep you mediocre." It is completely wrong. Let me tell you why, with citations. [gift link to the very wrong op-ed] 1/n www.wsj.com/opinion/work...

19.08.2025 14:57

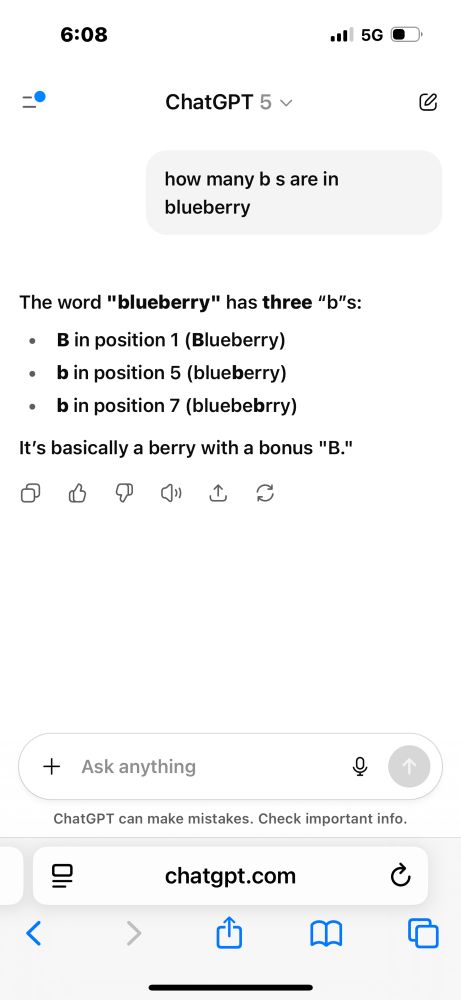

Odd, or a signal that ChatGPT-5 is lower quality but cheaper to run, because it decides when a high-token test-time compute run is triggered?

09.08.2025 02:38

This also raises huge issues for the replicability of research based on older ChatGPT models.

08.08.2025 18:02

Urg

07.08.2025 23:21

Confirmed, geez

07.08.2025 23:08

There are two Bs!

07.08.2025 21:49

ChatGPT-5 is here.

07.08.2025 19:05

Will data centers crash the economy?

This time let's think about a financial crisis before it happens.

You should read @noahpinion.blogsky.venki.dev on data center investments and whether they will lead to a financial crisis. I think he downplays the risk, which is potentially very high. www.noahpinion.blog/p/will-data-...

03.08.2025 19:11

Disruption of the traditional MBA is here, whether we want it or not.

26.07.2025 23:12

Consider: Google has a 90% market share in web and mobile search, and it puts Gemini LLM results on top of every search by default. Are 1 trillion processed tokens really a sign of usefulness? (Same question about MSFT forcing LLMs on its developers.)

We see "revealed preference", just not for LLMs.

26.07.2025 21:32

I have been thinking about this a lot lately, after finding the troubling productivity impacts of AI on older firms (conference.nber.org/conf_papers/...), combined with prior work showing the impacts of digitization on older workers (www.nber.org/papers/w28094).

#EconSky

23.07.2025 17:39

Importantly, the negative productivity effects are concentrated at old manufacturing establishments and firms. We can narrow down the mechanism as well, by showing that roughly half of the productivity loss is driven by removal of (human-supporting) structured management practices.

17.07.2025 17:28

We use two separate datasets from the US Census Bureau and three separate identification strategies (matching, first-difference, IV) to establish credible causal effects. As of 2021, we find strongly negative productivity effects in the short run and some evidence for potentially positive long-run effects.

17.07.2025 17:23

SI 2025 Digital Economics and Artificial Intelligence

Naked self-promotion: My fantastic co-author @kmcelheran.bsky.social will present our empirical paper on "Industrial AI" (self-driving forklifts, self-optimizing production lines, autonomous quality control) and its effect on productivity in US manufacturing.

www.nber.org/conferences/...

17.07.2025 17:22

It's not me, it's the LLMs: in domains including coding, math, mapping, logic, and now simple physics, they overfit and are unable to generalize. Still waiting for ChatGPT-5, btw.

14.07.2025 14:17
