OSF

🚨New preprint🚨

Across 42 countries, we tested whether national-level cultural tightness—how strongly societies enforce norms—shapes responses to climate-related norm messages. osf.io/preprints/psyarxiv/xzj7r_v1

20.10.2025 20:48 — 👍 8 🔁 1 💬 1 📌 0

This was a challenging week but this is how my day started so I’ll call it even

17.10.2025 23:39 — 👍 15 🔁 0 💬 0 📌 0

Become a Visiting Fellow!

Interested in joining the Center for Philosophy of Science? Applications for 2026-27 now open for Visiting Fellows! Postdoc applications will be available soon!

More info about our programs available on our website at https://www.centerphilsci.pitt.edu/programs/overview/

15.10.2025 16:01 — 👍 4 🔁 5 💬 0 📌 0

New preprint!

"Non-commitment in mental imagery is distinct from perceptual inattention, and supports hierarchical scene construction"

(by Li, Hammond, & me)

link: doi.org/10.31234/osf...

-- the title's a bit of a mouthful, but the nice thing is that it's a pretty decent summary

14.10.2025 13:22 — 👍 66 🔁 22 💬 5 📌 0

... & most of all to my amazing partner who is willing to pick up his life & move across the world with me.

(PS Do you know any aerospace-ish companies in London? My partner will need a new job!! 🙏)

And THANK YOU to UCL EP for welcoming me -- here’s to the next big adventure in London 🥂

13.10.2025 16:29 — 👍 20 🔁 2 💬 1 📌 0

📣 BIG NEWS EVERYONE. I am so excited to announce…

🎉 I’m moving to University College London @ucl.ac.uk to join the Experimental Psychology department in @uclpals.bsky.social! 🎉

The big move happens in spring/summer. So I’m already exploring recruiting staff & students at UCL for fall 2026!

13.10.2025 16:29 — 👍 374 🔁 45 💬 53 📌 2

same vibe

12.10.2025 01:38 — 👍 2 🔁 0 💬 0 📌 0

Another day, another friend who's been traumatized and whose career is being derailed because a dude couldn't keep it in his pants (and the whole system ran to protect him). Ugh, everything sucks.

10.10.2025 00:45 — 👍 8 🔁 1 💬 1 📌 0

#sorrynotsorry

09.10.2025 23:10 — 👍 7 🔁 1 💬 0 📌 0

This is a big one! A 4-year writing project over many timezones, arguing for a reimagining of the influential "core knowledge" thesis.

Led by @daweibai.bsky.social, we argue that much of our innate knowledge of the world is not "conceptual" in nature, but rather wired into perceptual processing. 👇

09.10.2025 16:31 — 👍 122 🔁 48 💬 7 📌 7

It is a lot of fun! Xueyi said she couldn’t chop onion the next morning 😂

09.10.2025 13:31 — 👍 1 🔁 0 💬 0 📌 0

I’m scheduled for surgery today on my Achilles tendon, followed by 2 weeks of no weight bearing. 😵‍💫

So, like any good scientist, I got together 7 colleagues to study consequences of limb disuse.

Introducing the HEALING study

with @laurelgd.bsky.social @sneuroble.bsky.social @briemreid.bsky.social

25.09.2025 11:47 — 👍 68 🔁 5 💬 5 📌 7

I had to google what that meant, I’m a complete newbie. But yeah, we were all puzzled, and the most experienced among us showed us how to do it (it was a foot jam, actually). It was a pretty good group activity indeed!

07.10.2025 18:35 — 👍 0 🔁 0 💬 1 📌 0

We’re recruiting a postdoctoral fellow to join our team! 🎉

I’m happy to share that I’ve opened back up the search for this position (it was temporarily closed due to funding uncertainty).

See lab page and doc below for details!

07.10.2025 02:39 — 👍 63 🔁 37 💬 2 📌 1

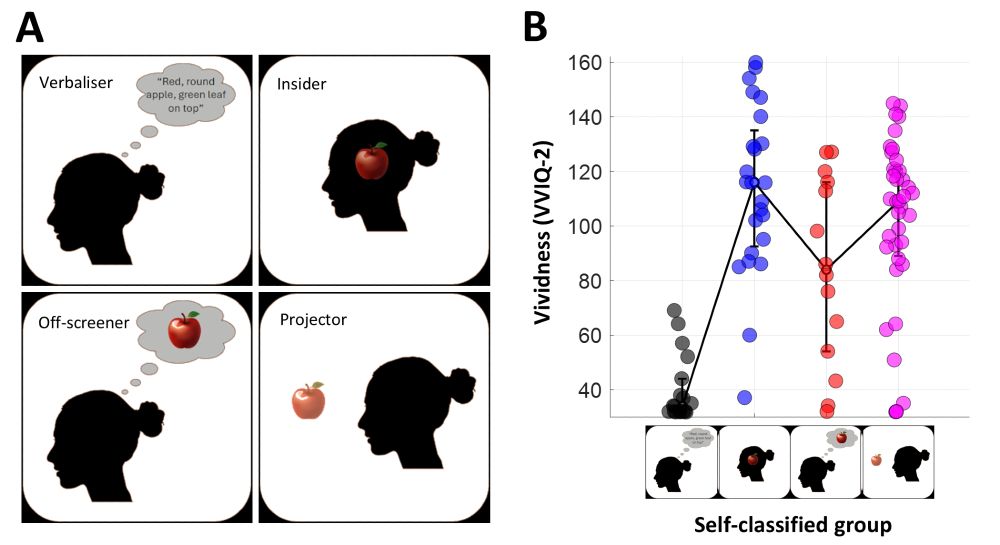

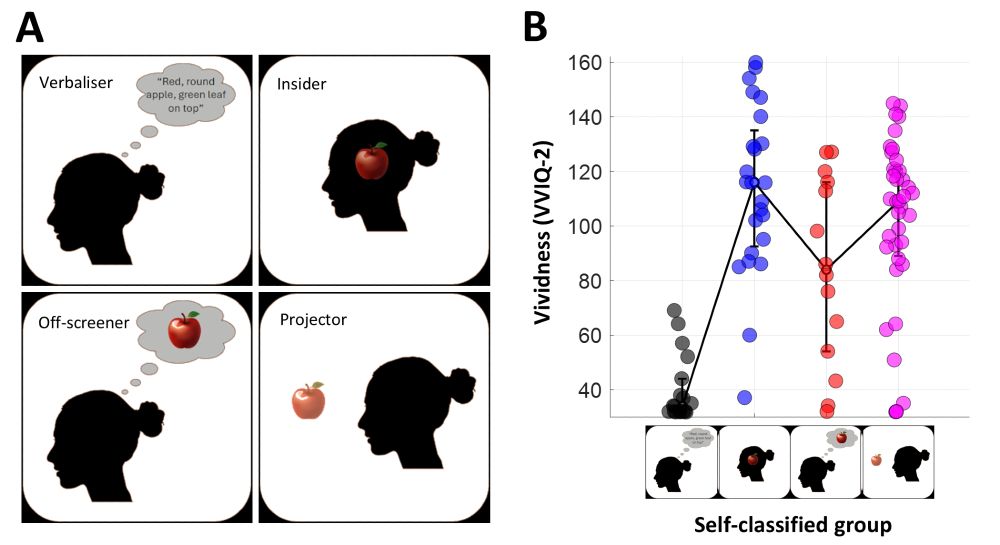

Long time in the making: our preprint of a survey study on the diversity in how people seem to experience #mentalimagery. It suggests #aphantasia should be redefined as the absence of depictive thought, not merely "not seeing". Some more take-home messages:

#psychskysci #neuroscience

doi.org/10.1101/2025...

02.10.2025 18:10 — 👍 112 🔁 35 💬 11 📌 2

Subjective inflation: phenomenology’s get-rich-quick scheme

How do we explain the seemingly rich nature of visual phenomenology while accounting for impoverished perception in the periphery? This apparent misma…

Interestingly, it may just be gaps all the way down. Our experiences themselves may be built out of impoverished signals. In other words, the richness of experience is not necessarily an illusion but a reconstruction. E.g.:

03.10.2025 13:16 — 👍 2 🔁 1 💬 1 📌 0

Absolutely! Hard to capture in words and introspective reports, and impossible to fully capture once it’s operationalized in an experiment.

03.10.2025 12:54 — 👍 1 🔁 0 💬 0 📌 0

This one explored differences in experience and eye movements during reading: “Aphantasia modulates immersion experience but not eye fixation patterns during story reading” osf.io/preprints/ps...

03.10.2025 12:08 — 👍 2 🔁 1 💬 1 📌 0

Shamelessly promoting my favorite paper. Everybody who was anybody in the history of science/philosophy/mathematics had a view on the moon illusion. frances-egan.org/uploads/3/5/...

02.10.2025 18:23 — 👍 48 🔁 16 💬 4 📌 2

This is part of what makes it interesting. They are so good at some examples but terrible at others (in almost any task but definitely in ours). This means they aren't doing it in any principled way (otherwise it should be trivial to get most of them right). But how are they getting some right then?

02.10.2025 16:42 — 👍 2 🔁 0 💬 1 📌 0

Thank you, Greg! This is very encouraging to hear from you. This project has been a lot of fun, and mentoring the undergrad who led it has been super rewarding. He's already working on new questions around the same topic, so hopefully there will be more to share in the coming months.

02.10.2025 16:31 — 👍 2 🔁 0 💬 1 📌 0

That's pretty good! Even with graphic design elements!

02.10.2025 16:17 — 👍 1 🔁 0 💬 1 📌 0

A few people have asked if the reason why some LLMs perform this visual imagery task successfully is only because the stimuli / task-type we used were in the models' training data. If this were so, data contamination would make our results uninteresting. See this thread for why this isn't the case.

02.10.2025 12:39 — 👍 4 🔁 1 💬 0 📌 0

We haven't, but that's a cool idea!

02.10.2025 15:08 — 👍 0 🔁 0 💬 0 📌 0

We have no clue what's going on under the hood. One thing we did explore was varying the reasoning effort parameter in the OpenAI reasoning models we tested. We found, perhaps unsurprisingly, that as reasoning token and time allocations decreased, so did the performance.

02.10.2025 15:08 — 👍 1 🔁 0 💬 0 📌 0

Sadly not at the time.

02.10.2025 12:42 — 👍 0 🔁 0 💬 0 📌 0

Now *that* is cool! I guess I’m not surprised it didn’t work back then. We tried with several small, open models and never got a single answer right. In fact, had we done our study six months ago (before o3 and GPT-5 were released) we wouldn’t have found performance above the human baseline.

02.10.2025 10:42 — 👍 2 🔁 0 💬 0 📌 0

Professor at the University of Chicago. Philosophy, Politics, and Economics, in proportions tbd. He/Him.

Assoc prof @UDelaware | social neuroscientist interested in "us vs them" and "human vs AI" | Mexican, Indigenous, Japanese First Gen | #UDPBS | @UDPOSCIR | 🧠

https://www.ifsnlab.org/

Cozy random passages from Arnold Lobel's Frog and Toad books. Posts auto-delete.

PhD Candidate interested in visual attention and memory | Utrecht University | AttentionLab @attentionlab.bsky.social

Investigative journalism 🕵️, politics 🗳️, chart-tastic 📊, and sometimes sarcastic 🙄😌🤪

Join our people-powered nonprofit newsroom: motherjones.com/subscribe

P(A|B) = [P(A)*P(B|A)]/P(B), all the rest is commentary. Click to read Astral Codex Ten, by Scott Alexander, a […] [bridged from astralcodexten.com on the web: https://fed.brid.gy/web/astralcodexten.com ]

🥸 Philosopher of AI, mind, and science. Principal software engineer. Crazy cat lady.

🤓 Knows both Python and Ancient Egyptian.

🧙 Author of "The Illusion Engine: The Quest for Machine Consciousness": illusionengine.xyz

A civic news cooperative in Chapel Hill and Carrboro, NC. We are funny people who care about civics and North Carolina.

triangleblogblog.com

Hubby, daddy, lawyer, photographer. This account is primarily filled with wildlife and nature photography by yours truly, with maybe a bit of snark here and there. If this is your vibe, welcome aboard!

Engineer, roboethicist and pro-feminist. Interested in robots as working models of life, evolution, intelligence and culture. Prof Robot Ethics, Bristol Robotics Lab. Home page: https://people.uwe.ac.uk/Person/AlanWinfield

Professor of CogSci & AI at the University of Sussex, UK. Co-founder at Tenyx (now part of Salesforce AI). Interests include AI/ML, Philosophy, Creativity, Consciousness, Music, Paradoxes, Logic, Politics

Researching planning, reasoning, and RL in LLMs @ Reflection AI. Previously: Google DeepMind, UC Berkeley, MIT. I post about: AI 🤖, flowers 🌷, parenting 👶, public transit 🚆. She/her.

http://www.jesshamrick.com

just another handsome philosophy prof at the university of toronto

https://sites.google.com/view/rezahadisi

Associate professor of cognitive psychology at Penn State University. Director of the Cognitive Neuroscience of Creativity Lab (beatylab.la.psu.edu). President-elect of the Society for the Neuroscience of Creativity (SfNC; tsfnc.org; @tsfnc.bsky.social).

CS PhD Student, Northeastern University - Machine Learning, Interpretability https://ericwtodd.github.io

PhD student at NYU 🧠

Perception | Learning & Memory | Consciousness

Philosophy PhD candidate at the University of Toronto. Minds, machines, and peppermint dreams. I really can't recommend peeps enough. (he/him)

Somewhere between ಠ_ಠ and ¯\_(ツ)_/¯ . World's best cat dad, 2017. World's okayest human dad, 2017+.

Director of Egenis, the Centre for the Study of Life Sciences. Associate Professor of Philosophy, University of Exeter. Author of Mind as Metaphor (OUP, 2023) and Models as Make-Believe (Palgrave, 2012).

he/him

phd student in #cogsci at uci

ogulyurdakul.github.io

ogulyurdakul on TV Time, Flickr