I am very excited to announce that over the holidays, my first ever paper (w/ @samiyousif.bsky.social) was published in Cognitive Science! Here, we describe a new illusion of *number*: The Crowd Size Illusion!

onlinelibrary.wiley.com/doi/10.1111/...

05.01.2026 17:04 —

👍 36

🔁 12

💬 0

📌 1

Well this is exciting!

The Department of Psychological & Brain Sciences at Johns Hopkins University (@jhu.edu) invites applications for a full-time tenured or tenure-track faculty member in Cognitive Psychology, in any area and at any rank!

Application + more info: apply.interfolio.com/178146

02.12.2025 03:18 —

👍 93

🔁 55

💬 1

📌 3

Congratulations (and thank you) to @talboger.bsky.social, who lectured in front of nearly 500 @jhu.edu undergraduates today on the psychology of music! They didn’t see it coming, and then they loved it :)

11.11.2025 22:09 —

👍 15

🔁 2

💬 1

📌 0

(from lapidow & @ebonawitz.bsky.social's awesome 2023 explore-exploit paper)

14.10.2025 21:45 —

👍 3

🔁 0

💬 0

📌 0

methods from lapidow & bonawitz, 2023. children are "dropped"

a falling child

can't believe the IRB approved this part — hope the children are ok!

14.10.2025 21:44 —

👍 67

🔁 7

💬 2

📌 2

What a lovely 'spotlight' of @talboger.bsky.social's work on style perception! Written by @aennebrielmann.bsky.social in @cp-trendscognsci.bsky.social.

See Aenne's paper below, as well as Tal's original work here: www.nature.com/articles/s41...

08.10.2025 17:27 —

👍 29

🔁 4

💬 0

📌 0

When a butterfly becomes a bear, perception takes center stage.

Research from @talboger.bsky.social, @chazfirestone.bsky.social and the Perception & Mind Lab.

06.10.2025 20:02 —

👍 35

🔁 8

💬 2

📌 2

Out today!

www.cell.com/current-biol...

06.10.2025 14:56 —

👍 39

🔁 11

💬 1

📌 1

important question for dev people: when reporting demographics for a paper involving both kids and adults, we want some consistency in how we report that information. so do you call the kids "men" and "women", or do you call the adults "boys" and "girls"?

01.10.2025 15:33 —

👍 4

🔁 0

💬 1

📌 0

sami is such a creative, thoughtful, and fun mentor. anyone who gets to work with him is so lucky!

15.09.2025 18:23 —

👍 2

🔁 0

💬 0

📌 1

Can we “see” value? Spatiotopic “visual” adaptation to an imperceptible dimension

In much recent philosophy of mind and cognitive science, repulsive adaptation effects are considered a litmus test — a crucial marker, that distinguis…

Visual adaptation is viewed as a test of whether a feature is represented by the visual system.

In a new paper, Sam Clarke and I push the limits of this test. We show spatially selective, putatively "visual" adaptation to a clearly non-visual dimension: Value!

www.sciencedirect.com/science/arti...

28.08.2025 20:18 —

👍 42

🔁 15

💬 2

📌 1

It's true: This is the first project from our lab that has a "Merch" page!

Get yours @ www.perceptionresearch.org/anagrams/mer...

19.08.2025 19:28 —

👍 33

🔁 4

💬 3

📌 1

The present work thus serves as a ‘case study’ of sorts. It yields concrete discoveries about real-world size, and it also validates a broadly applicable tool for psychology and neuroscience. We hope it catches on!

19.08.2025 16:39 —

👍 7

🔁 0

💬 1

📌 0

Though we manipulated real-world size, you could generate anagrams of happy faces and sad faces, tools and non-tools, or animate and inanimate objects, overcoming low-level confounds associated with such stimuli. Our approach is perfectly general.

19.08.2025 16:39 —

👍 5

🔁 0

💬 1

📌 0

Overall, our work confronts the longstanding challenge of disentangling high-level properties from their lower-level covariates. We found that, once you do so, most (but not all) of the relevant effects remain.

19.08.2025 16:39 —

👍 11

🔁 0

💬 1

📌 0

(Never fear, though: As we say in our paper, that last result is consistent with the original work, which suggested that mid-level features — the sort preserved in ‘texform’ stimuli — may well explain these search advantages.)

19.08.2025 16:39 —

👍 8

🔁 0

💬 1

📌 0

whereas previous work shows efficient visual search for real-world size, we did not find a similar effect with anagrams. our study included a successful replication of these previous findings with ordinary objects (i.e., non-anagram images).

Finally, visual search. Previous work shows targets are easier to find when they differ from distractors in their real-world size. However, in our experiments with anagrams, this was not the case (even though we easily replicated this effect with ordinary, non-anagram images).

19.08.2025 16:38 —

👍 11

🔁 0

💬 1

📌 0

people prefer to view real-world large objects as larger than real-world small objects, even with visual anagrams.

Next, aesthetic preferences. People think real-world large objects look better when displayed large, and vice versa for small objects. Our experiments show that this is true with anagrams too!

19.08.2025 16:37 —

👍 15

🔁 2

💬 1

📌 0

results from the real-world size Stroop effect with anagrams. performance is better when displayed size is congruent with real-world size.

First, the “real-world size Stroop effect”. If you have to say which of two images is larger (on the screen, not in real life), it’s easier if displayed size is congruent with real-world size. We found this to be true even when the images were perfect anagrams of one another!

19.08.2025 16:36 —

👍 16

🔁 0

💬 1

📌 0

Then, we placed these images in classic experiments on real-world size, to see if observed effects arise even under such highly controlled conditions.

(Spoiler: Most of these effects *did* arise with anagrams, confirming that real-world size per se drives many of these effects!)

19.08.2025 16:35 —

👍 13

🔁 0

💬 1

📌 0

anagrams we generated, where rotating the object changes its real-world size.

We generated images using this technique (see examples). The two images in each pair differ in real-world size but are otherwise identical* in lower-level features, because they’re the same image down to the last pixel.

(*avg orientation, aspect-ratio, etc, may still vary. ask me about this!)

19.08.2025 16:35 —

👍 31

🔁 2

💬 4

📌 1

depiction of the "visual anagrams" model by Geng et al.

This challenge may seem insurmountable. But maybe it isn’t! To overcome it, we used a new technique from Geng et al. called “visual anagrams”, which allows you to generate images whose interpretations vary as a function of orientation.

19.08.2025 16:34 —

👍 25

🔁 0

💬 1

📌 1

the mind encodes differences in real-world size. but differences in size also carry differences in shape, spatial frequency, and contrast.

Take real-world size. Tons of cool work shows that it’s encoded automatically, drives aesthetic judgments, and organizes neural responses. But there’s an interpretive challenge: Real-world size covaries with other features that may cause these effects independently.

19.08.2025 16:33 —

👍 18

🔁 0

💬 2

📌 1

The problem: We often study “high-level” image features (animacy, emotion, real-world size) and find cool effects. But high-level properties covary with lower-level features, like shape or spatial frequency. So what seem like high-level effects may have low-level explanations.

19.08.2025 16:33 —

👍 19

🔁 0

💬 2

📌 1

On the left is a rabbit. On the right is an elephant. But guess what: They’re the *same image*, rotated 90°!

In @currentbiology.bsky.social, @chazfirestone.bsky.social & I show how these images—known as “visual anagrams”—can help solve a longstanding problem in cognitive science. bit.ly/45BVnCZ

19.08.2025 16:32 —

👍 352

🔁 105

💬 19

📌 30

Out today! www.nature.com/articles/s41...

05.08.2025 21:58 —

👍 61

🔁 18

💬 3

📌 2

Lab-mate got my ass on the lab when2meet

24.07.2025 15:56 —

👍 199

🔁 10

💬 5

📌 1

Amazing new work from @gabrielwaterhouse.bsky.social and @samiyousif.bsky.social! I'm convinced the crowd size illusion is real, but the rooms full of people watching Gabe give awesome talks at @socphilpsych.bsky.social and @vssmtg.bsky.social were no illusion!

26.06.2025 16:37 —

👍 6

🔁 0

💬 0

📌 0

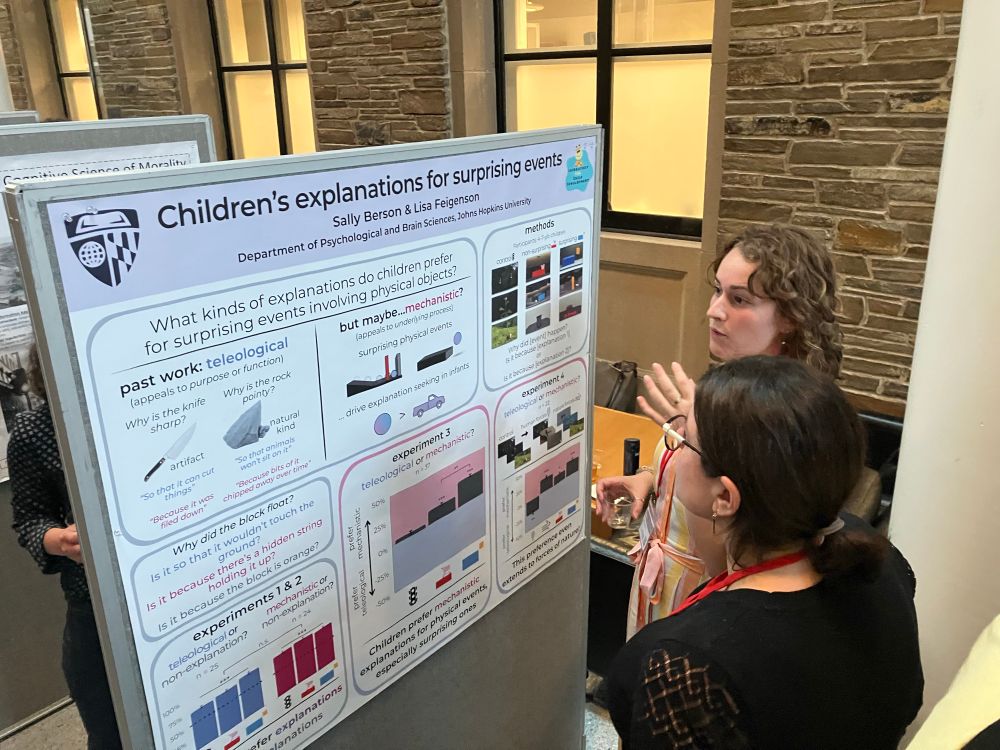

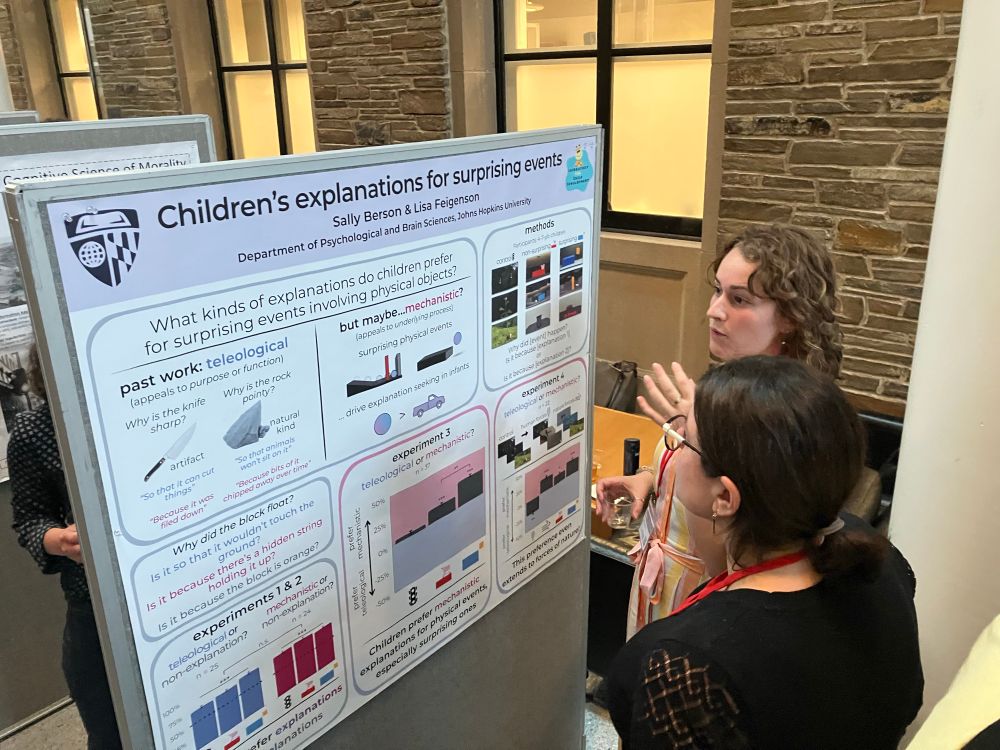

@sallyberson.bsky.social in action at @socphilpsych.bsky.social! #SPP2025

21.06.2025 00:10 —

👍 15

🔁 2

💬 0

📌 0

Susan Carey sitting in the front row of a grad student talk (by @talboger.bsky.social) and going back and forth during Q&A is what makes the @socphilpsych.bsky.social so special! Loved this interaction 🤗

19.06.2025 19:39 —

👍 35

🔁 1

💬 1

📌 1