Congratulations, Charley! I'm so excited to see how your lab will grow and evolve over the coming years. :)

01.10.2025 23:21 — 👍 1 🔁 0 💬 0 📌 0

I just had a lovely morning teaching memo with Lio Wong at #COSMOS2025 in Tokyo! Charley and Wataru have put together an absolutely *fantastic* summer school. Fascinating talks, delightful people… and an excellent location. I feel so lucky to be here. If you ever get a chance to attend COSMOS, take it!

01.10.2025 07:14 — 👍 10 🔁 3 💬 0 📌 0

The full, uncropped picture explains what is going on here: the sun is reflected by two buildings with different window tints. The reflection from the blue building casts the yellow shadow, and vice versa. (Studying graphics reminds me just how overwhelmingly beautiful the everyday visual world is…)

13.08.2025 05:36 — 👍 3 🔁 0 💬 0 📌 0

Three years ago, at SIGGRAPH '22 in Vancouver, I took this picture of a pole casting two shadows: one blue and one yellow, from yellow and blue streetlamps respectively.

Today, back in Vancouver for SIGGRAPH '25, I saw the same effect in sunlight! How can one sun cast two colored shadows? Hint in 🧵

13.08.2025 05:36 — 👍 4 🔁 0 💬 1 📌 0

While I was in Rotterdam for CogSci '24, I visited the Escher Museum and fell in love with his work all over again. Now, a year later, I'm delighted by this SIGGRAPH paper led by my friend Ana!

(Say what you will about the technical details, you must admit that we came up with the ~perfect~ title.)

05.08.2025 17:41 — 👍 15 🔁 5 💬 0 📌 1

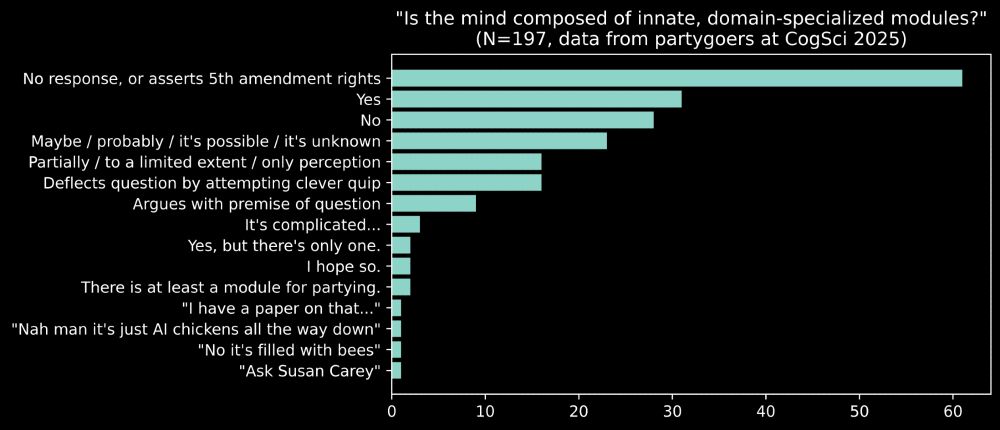

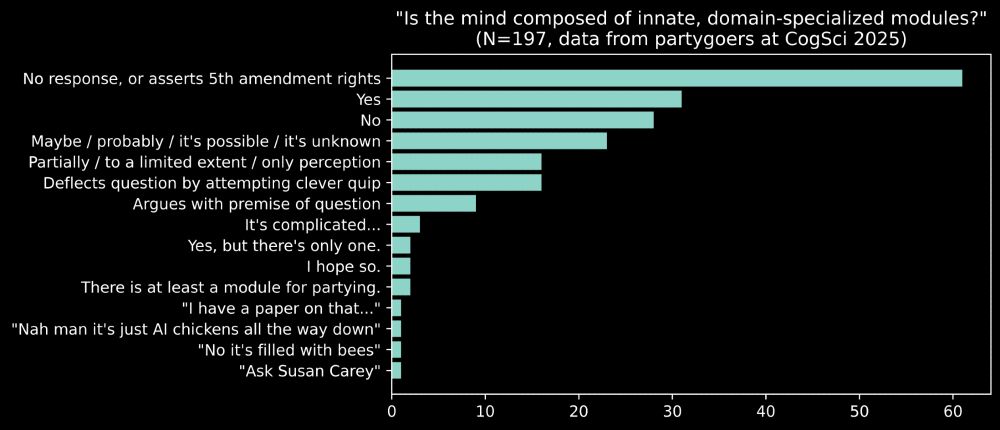

A bar chart showing frequencies of answers to the question "Is the mind composed of innate, domain-specialized modules?" with N=197. The most prominent bars are no response (~60), yes (~30), and no (~30). The rest of the entries are funny, e.g. "I hope so."

Thanks to a rogue Partiful RSVP form at #cogsci2025, I seem to have collected an unexpectedly large dataset (N=197) of whether cognitive scientists think the mind is composed of innate, domain-specialized modules…

02.08.2025 16:56 — 👍 34 🔁 6 💬 0 📌 2

I'm excited to give a ~mysterious new talk~ at this very special SIGGRAPH workshop on art & cognitive science! See you soon in Vancouver. :)

22.07.2025 18:00 — 👍 9 🔁 1 💬 0 📌 0

We've planned a fun, interactive session with something for everyone: whether you're a curious beginner looking to get started, or a seasoned expert looking to push the frontiers of what's possible. There will be games, live-coding, flash talks, and more. Max, Dae and I can't wait to see you! :)

18.07.2025 13:56 — 👍 1 🔁 0 💬 0 📌 0

If you're curious what memo is: it's a programming language specialized for social cognition and theory-of-mind. It lets you write models concisely, using special syntax like "Kartik knows" and "Max chooses," and it compiles to fast GPU code. Lots of CogSci '25 papers use memo! github.com/kach/memo

18.07.2025 13:56 — 👍 1 🔁 0 💬 1 📌 0

As always, CogSci has a fantastic lineup of workshops this year. An embarrassment of riches!

Still deciding which to pick? If you are interested in building computational models of social cognition, I hope you consider joining @maxkw.bsky.social, @dae.bsky.social, and me for a crash course on memo!

18.07.2025 13:56 — 👍 21 🔁 6 💬 1 📌 0

If you're here at RLDM today, you are invited to check out this exciting workshop on social cognition organized by Joe Barnby and Amrita Lamba! I'm giving a talk on programming languages for theory-of-mind at 11:30. Here's the schedule: sites.google.com/view/rldm202...

@rldmdublin2025.bsky.social

11.06.2025 22:29 — 👍 3 🔁 0 💬 0 📌 0

(The *real* puzzle: how come our visual systems make such incredible inferences from shadows, and yet we barely notice such glaring inconsistencies unless we go looking for them? I just read Casati and Cavanagh's book "The Visual World of Shadows," which has made me fall in love with this question…)

05.06.2025 14:27 — 👍 2 🔁 0 💬 0 📌 0

Visual hint #2

05.06.2025 14:27 — 👍 0 🔁 0 💬 1 📌 0

Visual hint #1

05.06.2025 14:27 — 👍 1 🔁 0 💬 1 📌 0

Here is a little inverse graphics puzzle: The no-parking sign on my side of Vassar Street casts its shadow in a dramatically different direction from the leafless tree on the far side of the street, almost 90° apart. How is that possible, given that the sun casts parallel rays? (Hints in thread.)

05.06.2025 14:27 — 👍 2 🔁 0 💬 2 📌 0

Hi there! Thanks for the kind words.

I think everything should be linked from my homepage here: cs.stanford.edu/~kach/

And you can still subscribe to the RSS feed for new posts.

03.02.2025 16:43 — 👍 1 🔁 0 💬 1 📌 0

I thought I would share these incantations in case anyone else is having the same realization today, and is up for an adventure. :)

See also: pandoc.org/MANUAL.html

31.01.2025 13:56 — 👍 3 🔁 0 💬 0 📌 0

(1) First, you can convert your LaTeX to plain text like this:

pandoc --wrap=none main.tex -o main.txt

(2) But this does not automatically format references. For that, you can run this additional command:

pandoc --wrap=none --bibliography=refs.bib --citeproc main.txt | pandoc -t plain --wrap=none

31.01.2025 13:55 — 👍 3 🔁 0 💬 1 📌 0

Last night, I realized that a workshop's submission portal only accepted plain text copy-pasted into a text box. Instead of manually de-TeX-ifying my beautifully-formatted PDF (5-minute job), I decided to work out how to do it automatically with pandoc (1-hour research project). Here's what I found…

31.01.2025 13:55 — 👍 9 🔁 0 💬 1 📌 0

Yes! As one example of the impact SGI sponsorships have, I got the opportunity to attend SGI 2024 in Boston thanks to a generous industry sponsorship. I really hope other students can have that same opportunity in future years!

18.12.2024 17:47 — 👍 1 🔁 0 💬 0 📌 0

(I don't think it was just that we students were hungry and/or easy to bribe. I think the professor's gesture made us feel like the class was a community of friends who were there for more than simply giving/getting grades. After that, showing up every day felt like the obvious natural thing to do!)

04.12.2024 11:39 — 👍 1 🔁 0 💬 1 📌 0

Possibly-helpful anecdote: my undergrad complexity theory professor once went shopping for a new carpet at the mall. Next to the carpet store was a bakery, so he spontaneously decided to buy cupcakes for everyone in class the next day. From then on, he got near-perfect attendance for every lecture…

04.12.2024 11:33 — 👍 1 🔁 0 💬 1 📌 0

There's much more to say about this—especially about why we think these ideas are important to graphics. For more, see our paper arxiv.org/abs/2409.13507 or Matt's upcoming talk at SIGGRAPH Asia.

In the meantime, enjoy a video where every sound effect is a vocal imitation produced by our method! :)

30.11.2024 20:29 — 👍 3 🔁 0 💬 0 📌 0

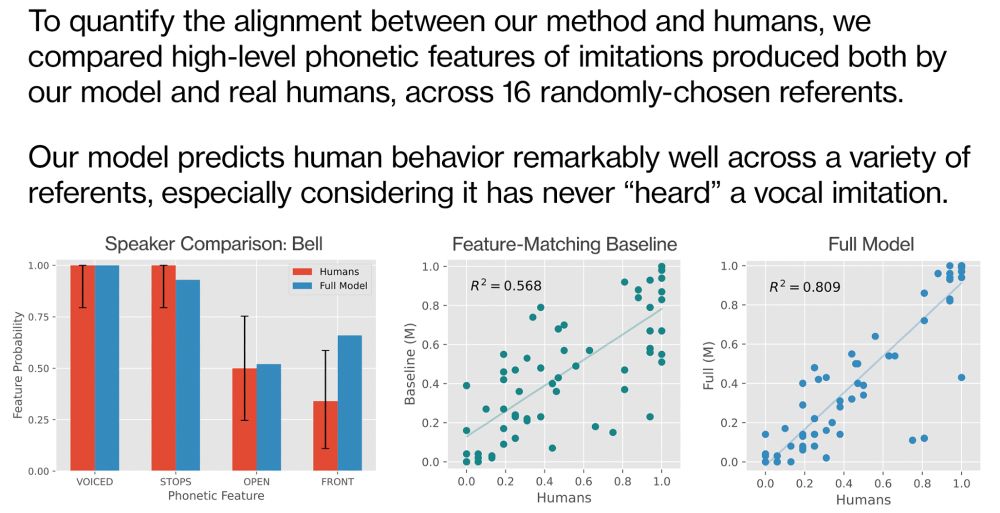

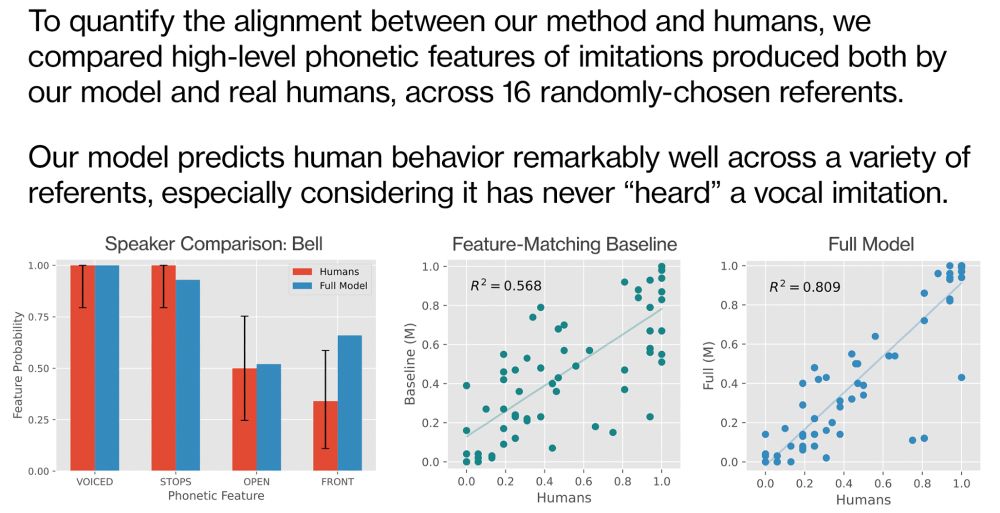

A bar plot and scatter plots showing a tight correlation between our method and humans.

When compared to actual vocal imitations produced by actual humans, our model predicts people's behavior quite well…

But we never "trained" our model on any dataset of human vocal imitations! Human-like imitations emerged simply from encoding basic principles of human communication into our model.

30.11.2024 20:29 — 👍 2 🔁 0 💬 1 📌 0

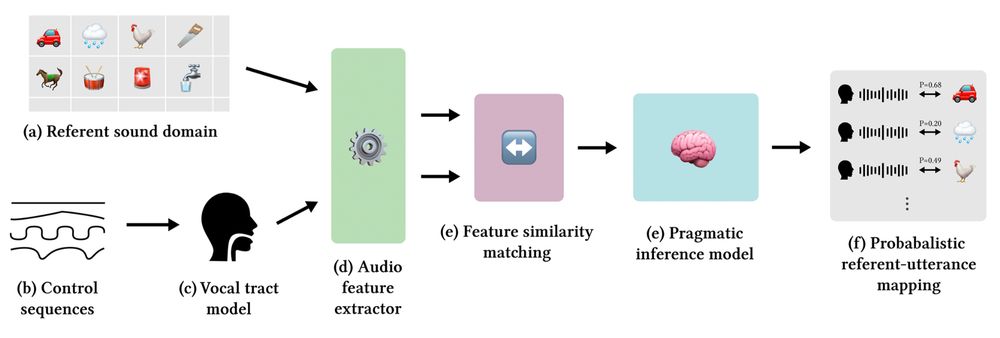

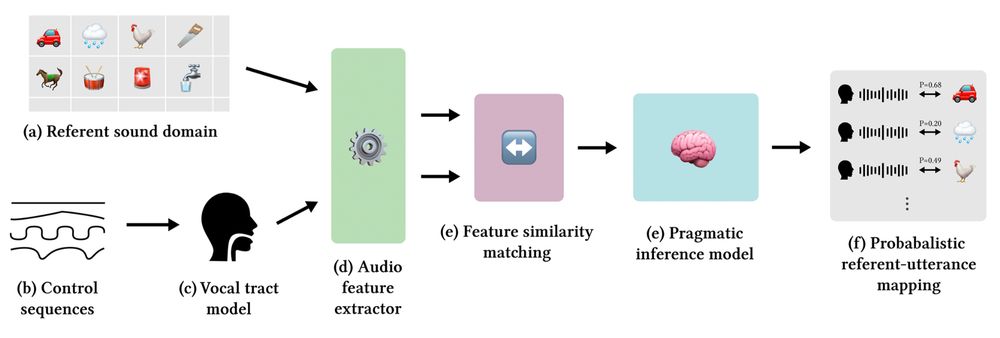

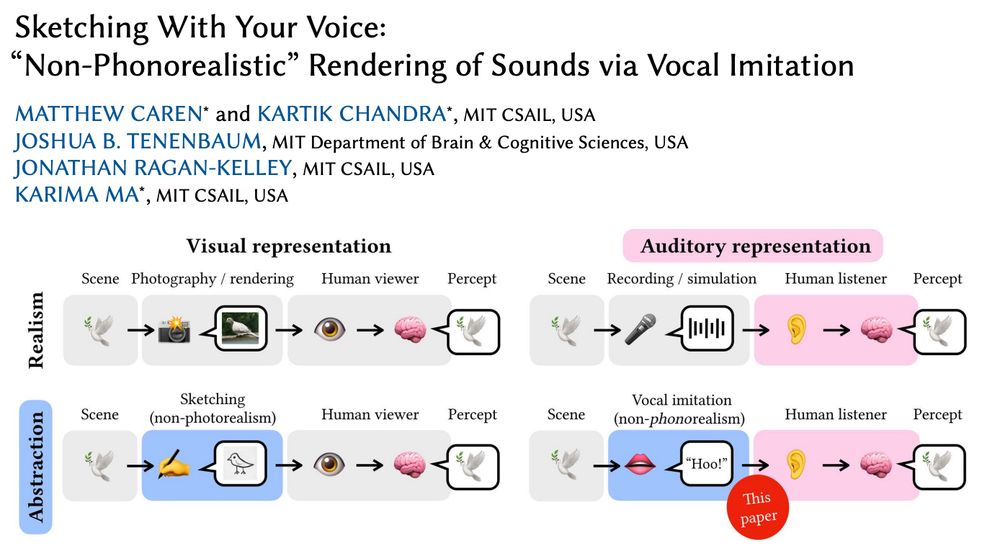

A system diagram showing how the components of our system connect.

We designed a method for producing human-like vocal imitations of real-world sounds. It works by combining models of the human vocal tract (like "Pink Trombone"), human hearing (via feature extraction), and human communicative reasoning (the "Rational Speech Acts" framework from cognitive science).

30.11.2024 20:29 — 👍 3 🔁 0 💬 1 📌 0

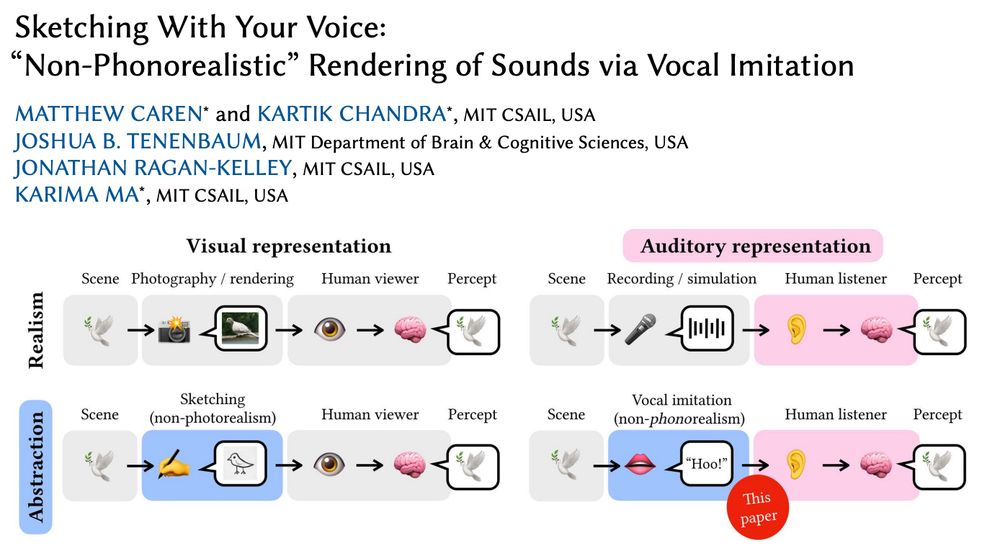

We are interested in *vocal imitation*: how we use our voices to "sketch" and communicate real-world sounds (e.g. crow → "caw," bell → "dong"). It's effortless, intuitive, and we do it all the time.

Some of my favorite examples are from callers describing engine sounds on the radio show "Car Talk"…

30.11.2024 20:29 — 👍 2 🔁 1 💬 1 📌 0

A screenshot of the first page of the paper.

Graphics has long studied how to make:

(1) realistic images 📷

(2) non-photorealistic images ✍️

(3) realistic sounds 🎤

What about (4) "non-phono-realistic" sounds?

What could that even mean?

Next week at SIGGRAPH Asia, MIT undergrad Matt Caren will present our proposal… 🧵

arxiv.org/abs/2409.13507

30.11.2024 20:29 — 👍 46 🔁 12 💬 2 📌 1

A screenshot of a Scratch project showing a janky 3D raycaster, made in 2010.

Oooh, that reminded me of my own initial foray into graphics research… 👀

26.11.2024 23:58 — 👍 1 🔁 0 💬 0 📌 0

Congratulations, Mitch! :) You've definitely brought a lot of joy and meaning to the past 5,387 days of *my* life. (That's the age of my Scratch account!)

22.11.2024 13:24 — 👍 3 🔁 0 💬 0 📌 0

Hi Amir! Could I please be added to this list? I'm a PhD student at MIT CSAIL. :)

18.11.2024 18:55 — 👍 1 🔁 0 💬 0 📌 0
