I'm still waiting for you to write that package that recovers pupil size from MRI recordings - gotta find a way to make all that fMRI data useful ;-)

25.09.2025 14:55 — 👍 4 🔁 0 💬 0 📌 0

Incredible study by Raut et al.: by tracking a single measure (pupil size), you can model slow, large-scale dynamics in neuronal calcium, metabolism, and brain blood oxygen through a shared latent space! www.nature.com/articles/s41...

25.09.2025 08:53 — 👍 66 🔁 17 💬 1 📌 1

I'll show some (I think) cool stuff about how we can measure the phenomenology of synesthesia in a physiological way at #ECVP - Color II, atrium maximum, 9:15, Thursday.

say hi and show your colleagues that you're one of the dedicated ones by getting up early on the last day!

27.08.2025 21:13 — 👍 9 🔁 1 💬 0 📌 0

A good reason to make it to the early #visualcognition session of today's #ecvp2025 👉 @anavili.bsky.social will talk about how attending to fuzzy bright/dark patches that have faded from awareness (through adaptation-like processes) still affects pupil size! ⚫👀⚪ Paper: dx.doi.org/10.1016/j.co...

27.08.2025 05:45 — 👍 6 🔁 2 💬 0 📌 0

#ECVP @ecvp.bsky.social will be so much fun!

24.08.2025 16:31 — 👍 2 🔁 0 💬 0 📌 0

#ECVP2025 starts with a fully packed room!

I'll show data demonstrating that synesthetic perception is perceptual, automatic, and effortless.

Join my talk (Thursday, early morning.., Color II) to learn how the qualia of synesthesia can be inferred from pupil size.

Join and (or) say hi!

24.08.2025 16:28 — 👍 18 🔁 3 💬 1 📌 1

A review of the costs of eye movements

Nature Reviews Psychology - Eye movements are the most frequent movements that humans make. In this Review, Schütz and Stewart integrate evidence regarding the costs of eye movements and...

Eye movements are cheap, right? Not necessarily! 💰 In our review just out in @natrevpsychol.nature.com, Alex Schütz and I discuss the different costs associated with making an eye movement, how these costs affect behaviour, and the challenges of measuring this… rdcu.be/eAm69 #visionscience #vision

12.08.2025 10:44 — 👍 23 🔁 10 💬 1 📌 0

I think there is a lot one doesn't think of intuitively. Lossy compression of audio files is directly built on psychophysics, for instance (no (hardcore experimental) psychology, no Spotify!). Or take all the foundational work for artificial neural networks that comes from cognitive psych & modeling.

01.08.2025 20:12 — 👍 1 🔁 0 💬 0 📌 0

Together with @ajhoogerbrugge.bsky.social, Roy Hessels and Ignace Hooge - thanks all!

29.07.2025 07:39 — 👍 3 🔁 0 💬 0 📌 0

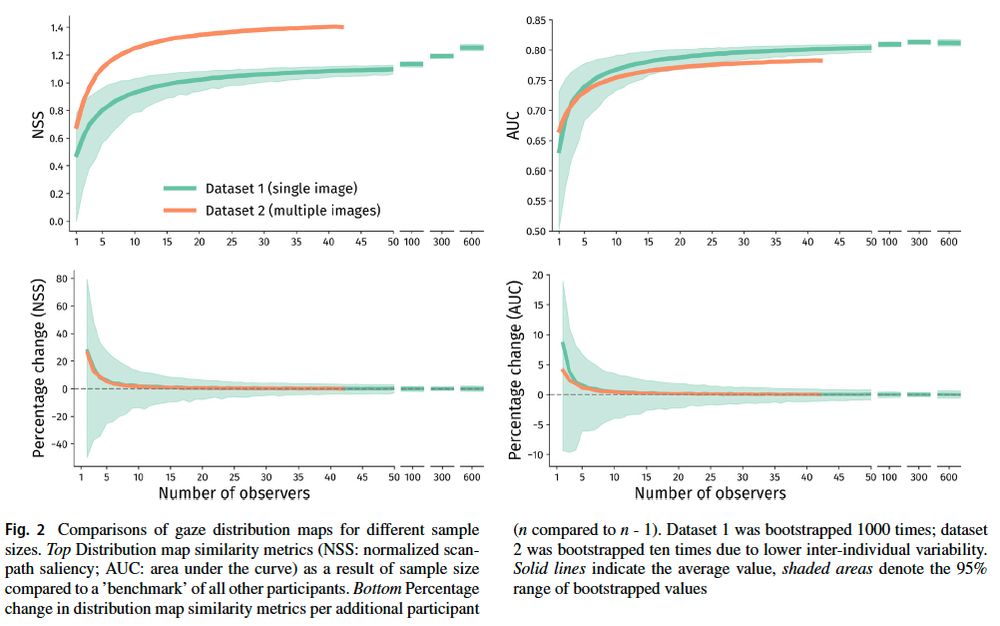

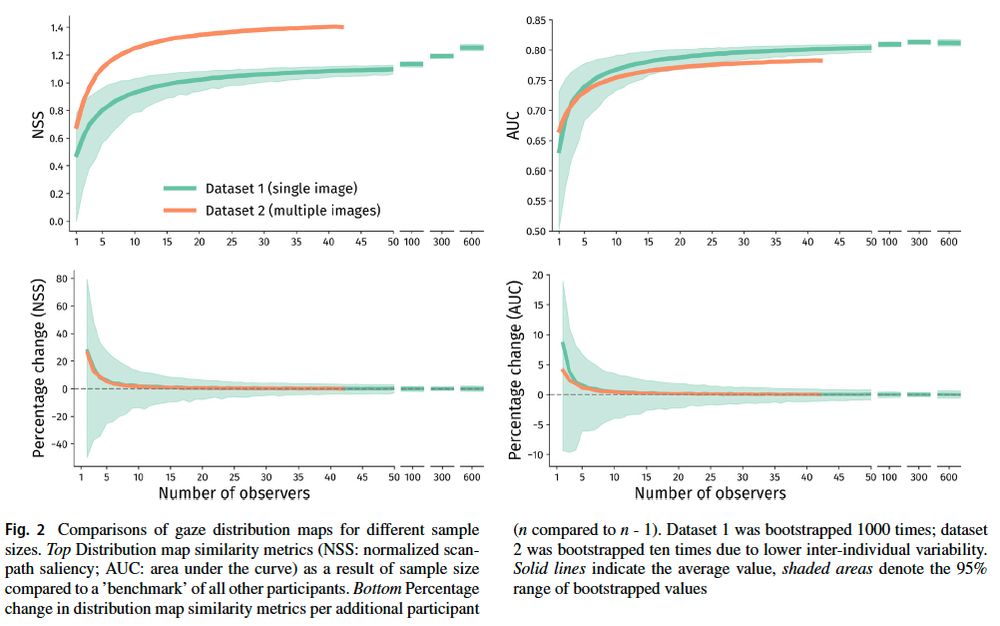

Data saturation for gaze heatmaps. Initially, each additional participant brings the NSS or AUC (measures of heatmap similarity) a lot closer to that of the full sample. However, the returns diminish at higher n.

Gaze heatmaps are popular, especially among eye-tracking beginners and in many applied domains. How many participants should be tested?

It depends, of course, but our guidelines help you navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

29.07.2025 07:37 — 👍 9 🔁 2 💬 1 📌 0

good god

23.07.2025 10:56 — 👍 1 🔁 0 💬 1 📌 0

Job openings at the RUG

Jelmer Borst and I are looking for a PhD candidate to build an EEG-based model of human working memory! This is a really cool project that I've wanted to kick off for a while, and I can't wait to see it happen. Please share and I'm happy to answer any Qs about the project!

www.rug.nl/about-ug/wor...

03.07.2025 13:29 — 👍 15 🔁 21 💬 1 📌 1

PhD position — Rademaker lab

Curious about the visual human brain, a vibrant and collaborative lab, and pursuing a PhD in the heart of Europe? My lab is recruiting for a 3-year PhD position. More details: www.rademakerlab.com/job-add

01.07.2025 06:43 — 👍 47 🔁 46 💬 1 📌 4

so nice, they are lucky to have you over there!

19.06.2025 10:57 — 👍 2 🔁 0 💬 1 📌 0

We had a splendid day: great weather, got to wear peculiar/special clothes, and then Alex even defended his PhD (and nailed it!).

Congratulations dr. Alex, super proud of your achievements!!!

18.06.2025 14:50 — 👍 4 🔁 0 💬 0 📌 0

Now published in Attention, Perception & Psychophysics @psychonomicsociety.bsky.social

Open Access link: doi.org/10.3758/s134...

12.06.2025 07:21 — 👍 14 🔁 8 💬 0 📌 0

@vssmtg.bsky.social

presentations today!

R2, 15:00

@chrispaffen.bsky.social:

Functional processing asymmetries between nasal and temporal hemifields during interocular conflict

R1, 17:15

@dkoevoet.bsky.social:

Sharper Spatially-Tuned Neural Activity in Preparatory Overt than in Covert Attention

18.05.2025 09:41 — 👍 6 🔁 4 💬 1 📌 0

Cool new preprint by Damian. Among other findings: eye pupillometry, EEG & IEMs show that the premotor theory of attention can't be the full story: eye movements are associated with an additional, separable, spatially tuned process compared to covert attention, hundreds of ms before shifts happen.

13.05.2025 08:17 — 👍 4 🔁 1 💬 1 📌 0

A move you can afford

Where a person will look next can be predicted based on how much it costs the brain to move the eyes in that direction.

04.05.2025 08:21 — 👍 15 🔁 1 💬 0 📌 0

Thanks!

26.04.2025 06:19 — 👍 0 🔁 0 💬 0 📌 0

Let me know if you're still unconvinced and, if so, why. I'm also happy to present it in more detail at a lab meeting or online.

Cheers!

25.04.2025 07:46 — 👍 0 🔁 0 💬 0 📌 0

Altogether, it's certainly correct that pupillometry requires care, as it's just two output systems and in many (but not all) ways just one with multiple inputs. But they are well understood (shameless plug for my TiNS papers here):

doi.org/10.1016/j.ti...

doi.org/10.1016/j.ti...

25.04.2025 07:34 — 👍 1 🔁 0 💬 1 📌 0

Lastly, are there other physiological measures that point to similar effects? Yes. Saccade latencies show similar effects, providing convergent evidence for our bottom line that effort drives saccade selection. Latencies are just not as nice, as they are not separate from the movement itself.

25.04.2025 07:28 — 👍 0 🔁 0 💬 1 📌 0

There are very systematic investigations into pupil size across fixation location for methodological reasons (the foreshortening of pupil size relative to the eyetracker). Fixation location itself does not show up/down differences on pupil size.

25.04.2025 07:00 — 👍 1 🔁 0 💬 1 📌 0

The difference in pupil size when preparing upward vs downward directed saccades (~0.1z) is absolutely meaningless relative to the effect of a PLR (~3-4 is not uncommon, depending on luminance change). If there were a hard-coded map built in, it wouldn't serve any meaningful protective role.

25.04.2025 06:55 — 👍 0 🔁 0 💬 1 📌 0

both the up/down and the cardinal/oblique differences are highly predictive of participants' free choices in the separate saccade selection task (no delay here, no pupil measurement here). But your point would then be that cardinal/oblique indeed reflects effort minimization, up/down doesn't?

25.04.2025 06:55 — 👍 1 🔁 0 💬 1 📌 0

It's important to realize that our pupil measures are taken when the eye is perfectly still in the center. The only difference is that participants know which direction they will have to make a saccade to later.

Are you on board with larger pupil size = more effort here when preparing diagonals?

25.04.2025 06:48 — 👍 2 🔁 0 💬 1 📌 0

the PLR has two primary functions, one of which is to protect the retina from excess luminance. The other is to optimize contrast perception. A hard-wired up/down map would jeopardize the latter in many cases. Given how important contrast perception is for vision, I highly doubt that would be smart.

25.04.2025 06:46 — 👍 1 🔁 0 💬 1 📌 0

Psychiatrist and neuroscientist @UniBasel | predictions, perception, psychosis | Translational Psychiatry Lab https://translat-psych.medizin.unibas.ch | https://www.upk.ch

Professor, Neuroscientist, Group Leader @lmumuenchen.bsky.social interested in how your brain 🧠 converts feelings into actions. 🇺🇸 in 🇩🇪. @erc.europa.eu Awardee. annaschroederlab.com

Assistant Professor of Cognitive Neuroscience | UConn Psych Sciences | attention, cognition, mental health, development, environment, personalized neuroscience

arielleskeller.wixsite.com/attention

appliedcognitionlab.psychology.uconn.edu

opinions my own

PhD Candidate, Neuroscience of Addiction Lab, Erasmus University Rotterdam - Social Cognition - Alcohol - Cannabis - Developmental Psychology

PhD Student at the University of Bath | Neuropsychology | Movement Perception and Understanding | Brain Stimulation | Open Science | https://tms-rat.org/

👨⚕️ PGY4 psychiatry resident @ Penn Med, ⚡️🧠 neuroscientist postdoc and 🌈 🏔 human person. Previously WashU MSTP, Haverford College, and Scribbles Pre-school. Views my own.

Prof at University of Glasgow, School of Psychology & Neuroscience. Trying to understand how the human brain reconstructs memories of past events. She/her

#Memory #Neuroscience #Cognition #LoveGlasgow

PhD student @neuroMADLAB studying vagus nerve stimulation, motivation and decision-making🧠 Based in Bonn 🇩🇪

Neuroscientist working on computational psychiatry @neuromadlab in Bonn

Incoming postdoc at Columbia/NYU. Sponsored by New York Academy of Sciences through Leon Levy Foundation. PhD from Princeton University, Yale '19

working in neuroscience / psychology / cognition @UChicago w/ Awh-Vogel Lab

The „Tagung experimentell arbeitender Psycholog*innen“ (TeaP) will take place at University of Tübingen in 2026 🧠💡✏️ Join us for pre-conference workshops on March 15 and for the main conference from March 16 through 18 🧑🎓👩🎓👨🎓 Website: https://coms.app/teap26/

PhD student in the Object Vision Group at CIMeC, University of Trento. Interested in neuroimaging and object perception. He/him 🏳️🌈

https://davidecortinovis-droid.github.io/

Senior Lecturer at University of Stirling. Interested in face perception, individual differences, and spaniels.

See https://tenuretracker.info/ for the most complete & worldwide overview of open postdoc, tenure track, (junior) professor, and lecturer positions.

Visit the site to search for specific positions and subscribe to email notifications

ELLIS PhD Student @ Centre for Cognitive Science, Technical University of Darmstadt | (Inverse) Computational Modeling of Sensorimotor Behavior | https://tobias-niehues.com/

PhD student @gestaltrevision.bsky.social @KU_Leuven | Interested in Aesthetics, Visual Perception, Perceptual Organization, Psychophysics and Multistability

SNSF Ambizione fellow & Research group leader «Cognitive & Developmental Neuroscience of Language» @ Developmental Psychology: Infancy and Childhood, Department of Psychology, University of Zurich | https://sites.google.com/site/sauppes

Neurogeneticist interested in the relations between genes, brains, and minds. Author of INNATE (2018) and FREE AGENTS (2023)