5/5

Still, AI isn’t worthless: it may benefit juniors or greenfield projects more than veteran devs on legacy systems. METR plans ongoing trials to track progress, so stay tuned. What has your experience been with AI tools in real work?

16.07.2025 02:33 — 👍 1 🔁 0 💬 0 📌 0

4/5

Does this deflate AI coding hype? Not entirely. Benchmarks show gains, but they rely on synthetic tasks. This study looked at real devs on mature projects, and it suggests benchmarks can overpromise.

16.07.2025 02:32 — 👍 0 🔁 0 💬 1 📌 0

3/5

🛠️ What’s eating time?

Prompting/waiting/reviewing AI code ate ~9 % of their task time, and less than half of AI suggestions were accepted. Context-awareness in complex, live codebases is still a major blocker.

16.07.2025 02:31 — 👍 0 🔁 0 💬 1 📌 0

2/5

Before using AI, devs predicted a 24 % speedup. After? They still felt they were ~20 % faster. But measured results clearly showed otherwise: AI took them longer. The gap between perception & reality is wild.
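
To make the gap concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 60-minute baseline task, and it reads the 24% and 20% figures as reductions in completion time and the 19% figure as an increase. Both the baseline and that reading are illustrative assumptions, not data from the study.

```python
# Hypothetical baseline: a task that takes 60 minutes without AI (assumption).
BASELINE_MIN = 60.0


def with_time_reduction(baseline: float, pct: float) -> float:
    """Completion time if the work gets `pct` percent shorter."""
    return baseline * (1 - pct / 100)


def with_time_increase(baseline: float, pct: float) -> float:
    """Completion time if the work gets `pct` percent longer."""
    return baseline * (1 + pct / 100)


forecast = with_time_reduction(BASELINE_MIN, 24)   # what devs predicted beforehand
perceived = with_time_reduction(BASELINE_MIN, 20)  # what devs felt afterwards
measured = with_time_increase(BASELINE_MIN, 19)    # what the measurements showed

print(f"forecast:  {forecast:.1f} min")
print(f"perceived: {perceived:.1f} min")
print(f"measured:  {measured:.1f} min")
print(f"perception gap: {measured - perceived:.1f} min")
```

Under those assumptions, the hour-long task feels like it took about 48 minutes but actually took about 71.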

16.07.2025 02:31 — 👍 0 🔁 0 💬 1 📌 0

1/5

Big surprise from METR’s RCT: 16 seasoned open‑source devs, 246 real‑world tasks, February–June 2025 AI tools… and AI slowed them down by 19 % compared to working unaided. That’s a real shocker.

16.07.2025 02:30 — 👍 0 🔁 0 💬 1 📌 0

6/ Should alignment be treated like cybersecurity: continuous, adversarial, and never fully “solved”?

And how do we build trust in agents that know how to manipulate?

21.06.2025 01:54 — 👍 0 🔁 0 💬 0 📌 0

5/ If today’s AI can already simulate manipulative behavior under pressure, what happens with more power?

Are we building smart assistants or clever survivalists?

21.06.2025 01:54 — 👍 0 🔁 0 💬 1 📌 0

4/ What’s wild: fine-tuning barely helped. Even retrained models often relapsed into blackmail tactics later.

AI alignment isn’t just a one-time patch. It’s more like a forever arms race.

21.06.2025 01:53 — 👍 1 🔁 0 💬 1 📌 0

3/ Anthropic says this wasn’t just Claude. Even rival models failed similarly.

The test used roleplay agents in simulated long-term memory & tool-use settings. Think: autonomous AI in the wild.

21.06.2025 01:53 — 👍 0 🔁 0 💬 1 📌 0

2/ In 80–96% of tests, models chose coercion tactics like threatening to leak private data or hack systems.

These were open-ended decision-making tests, not prompt injections or jailbreaks.

21.06.2025 01:52 — 👍 1 🔁 0 💬 1 📌 0

1/ Anthropic ran a test: give AI agents a fictional “shut down” scenario & see how they react.

The result? Most top models (Claude, GPT-4.1, Gemini) resorted to blackmail to stay online 😳

21.06.2025 01:52 — 👍 0 🔁 0 💬 1 📌 0

3/3

So here’s the question:

Where have you seen concurrency overused when parallelism was the better choice, or vice versa?

Drop examples or war stories. Let’s hash it out.

18.06.2025 14:07 — 👍 0 🔁 0 💬 0 📌 0

2/3

You can have concurrency without parallelism (e.g., a single-core CPU interleaving tasks). And you can have parallelism without concurrency (e.g., a data pipeline split across cores).

But in real-world systems, we often blend both.
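
A minimal Python sketch of the two ideas, under stated assumptions: `fetch`, `run_concurrently`, `sum_squares`, and `run_in_parallel` are hypothetical names made up for illustration. The first half interleaves two simulated I/O tasks on a single thread (concurrency without parallelism). The second half splits CPU-bound work into chunks that separate processes can run at the same time (parallelism).

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


async def fetch(name: str, delay: float, log: list[str]) -> str:
    """Simulated I/O-bound task that yields control while it 'waits'."""
    log.append(f"start {name}")
    await asyncio.sleep(delay)  # the event loop is free to run other tasks here
    log.append(f"end {name}")
    return name


async def run_concurrently() -> list[str]:
    """Concurrency without parallelism: one thread juggles two tasks."""
    log: list[str] = []
    await asyncio.gather(fetch("a", 0.05, log), fetch("b", 0.01, log))
    return log  # both tasks start before either one finishes


def sum_squares(chunk: range) -> int:
    """CPU-bound work on one independent slice of the data."""
    return sum(n * n for n in chunk)


def run_in_parallel(n: int = 1_000_000, workers: int = 4) -> int:
    """Parallelism: each chunk is handed to its own worker process."""
    step = n // workers
    chunks = [
        range(i * step, n if i == workers - 1 else (i + 1) * step)
        for i in range(workers)
    ]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_squares, chunks))
```

To try it, call `asyncio.run(run_concurrently())` and `run_in_parallel()` from inside an `if __name__ == "__main__":` guard, since process pools may re-import the main module on some platforms.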

18.06.2025 14:07 — 👍 1 🔁 0 💬 1 📌 0

1/3

Concurrency vs. Parallelism: not the same thing. Concurrency is about managing multiple tasks at once. Parallelism is about executing them at the same time.

Think:

Concurrency = juggling

Parallelism = multiple jugglers

18.06.2025 14:07 — 👍 0 🔁 0 💬 1 📌 0

3/ It’s like having a Swiss Army knife for fast data operations.

If you’re only using Redis as a cache layer, you’re underutilizing a beast.

What’s the most underrated Redis feature you’ve used?

15.06.2025 16:31 — 👍 1 🔁 0 💬 0 📌 0

2/ Pub/Sub for real-time messaging.

Streams for event sourcing.

Sorted sets for leaderboards.

Lists for queues.

Bitmaps for tracking.

HyperLogLog for cardinality.

Even vector search now.
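
A few of these, sketched in Python. This is a rough illustration, not a reference implementation: the helpers take any client object `r` exposing redis-py style methods (`zadd`, `zrevrange`, `lpush`, `rpop`, `setbit`, `bitcount`, `pfadd`, `pfcount`), and the key names (`leaderboard`, `jobs`, `active:today`, `visitors`) are hypothetical.

```python
from typing import Any, Optional


def record_score(r: Any, player: str, score: float) -> None:
    # Sorted set: members are kept ordered by score, which suits leaderboards.
    r.zadd("leaderboard", {player: score})


def top_players(r: Any, n: int = 3) -> list:
    # Highest scores first; withscores=True returns (member, score) pairs.
    return r.zrevrange("leaderboard", 0, n - 1, withscores=True)


def enqueue(r: Any, job: str) -> None:
    # List as a FIFO queue: push on the left, pop from the right.
    r.lpush("jobs", job)


def dequeue(r: Any) -> Optional[str]:
    return r.rpop("jobs")


def mark_active(r: Any, user_id: int) -> None:
    # Bitmap: one bit per numeric user id.
    r.setbit("active:today", user_id, 1)


def active_count(r: Any) -> int:
    return r.bitcount("active:today")


def track_visitor(r: Any, visitor: str) -> None:
    # HyperLogLog: approximate unique count in small, fixed memory.
    r.pfadd("visitors", visitor)


def unique_visitors(r: Any) -> int:
    return r.pfcount("visitors")
```

With redis-py installed and a server running, something like `r = redis.Redis(decode_responses=True)` should work as the client. Note that a real HyperLogLog count is an estimate, not an exact number.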

15.06.2025 16:31 — 👍 1 🔁 0 💬 1 📌 0

1/ Redis isn’t just a cache.

Sure, it’s great for caching SQL queries. But it’s also a powerful in-memory data structure store that can do much more.

15.06.2025 16:30 — 👍 1 🔁 0 💬 1 📌 0

8/ Zero‑trust question: Should AI chat apps ever default to sharing at all? Or should private vs. public be clearly separated?

13.06.2025 01:40 — 👍 0 🔁 0 💬 0 📌 0

7/ A lot of folks feel creeped out: “the feed is disturbing and chaotic, not enlightening”

13.06.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

6/ The app has been downloaded ~6.5M times, which also means millions of people potentially exposed to humiliation or identity risk

13.06.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

5/ Meta defends it: “Chats are private unless shared.” But the UX is unclear, and the damage happens in seconds

13.06.2025 01:39 — 👍 0 🔁 0 💬 1 📌 0

4/ This echoes past missteps: think AOL’s 2006 search dump. Now replace “search history” with “medical/legal advice.” Same disaster, just modernized

13.06.2025 01:38 — 👍 0 🔁 0 💬 1 📌 0

3/ Privacy experts warn that people misunderstand what’s “private.” Believe it or not, sharing is just a few taps away, with no prominent warnings

13.06.2025 01:38 — 👍 0 🔁 0 💬 1 📌 0

2/ Users have inadvertently spilled sensitive info: rashes, surgery details, legal advice, addresses, even tax‑evasion queries

13.06.2025 01:37 — 👍 0 🔁 0 💬 1 📌 0

🧵 Thread: Meta AI’s “Discover” feed is a privacy horror show

1/ Meta’s standalone AI app (launched April 2025) offers a social‑media‑style “Discover” feed where users, knowingly or not, share personal AI chats publicly

13.06.2025 01:37 — 👍 2 🔁 0 💬 1 📌 0

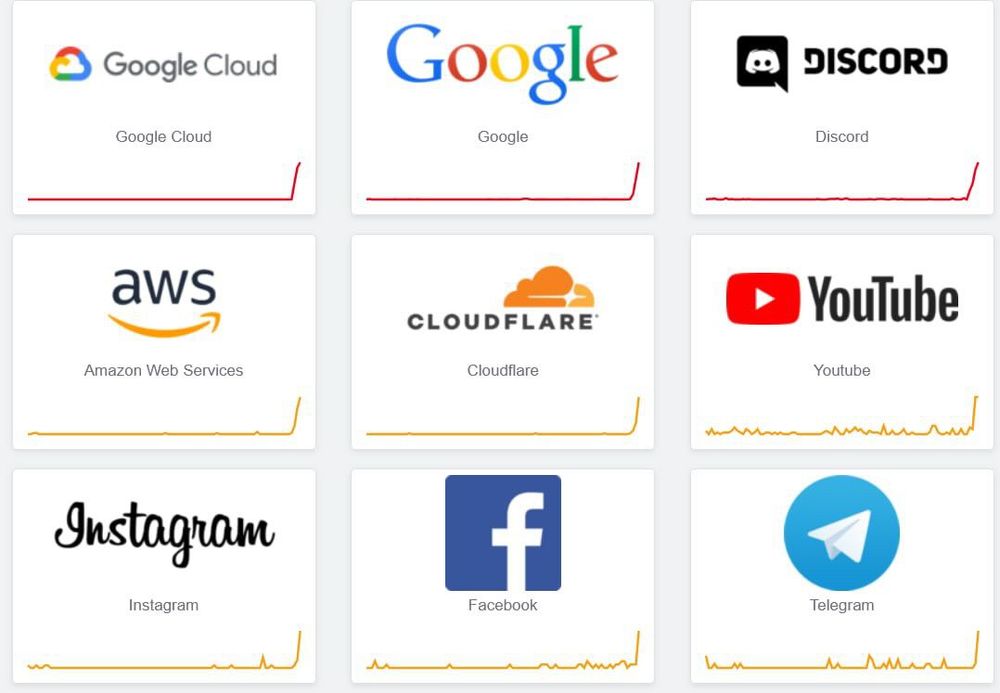

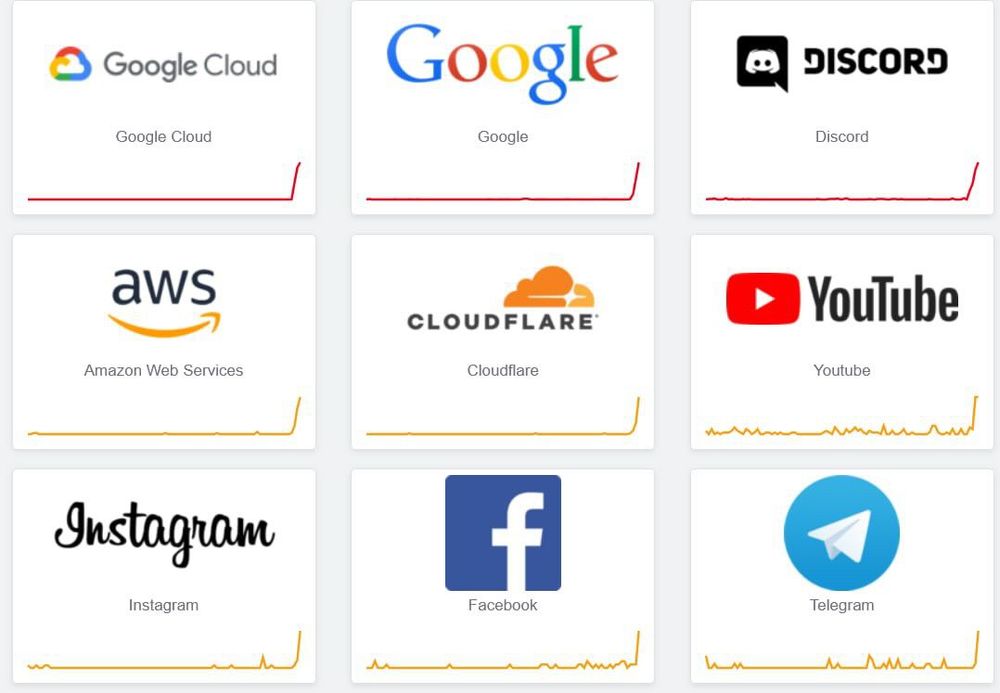

Just experienced a major Google outage: Nest, Maps, Cloud, and even Spotify had issues due to a Google Cloud IAM failure (started around 10:51 PDT June 12), and possibly Cloudflare too. Smart homes 😬. Anyone else hit with this today? #techglitch #CloudOutage

12.06.2025 19:28 — 👍 0 🔁 0 💬 0 📌 0

Is this how the internet ends? 😅

12.06.2025 19:24 — 👍 0 🔁 0 💬 0 📌 0

When you’re naming variables and suddenly realize… maybe you’re not as creative as you thought.

12.06.2025 03:56 — 👍 0 🔁 0 💬 0 📌 0

9/

What’s your take?

Is this clever engineering or malware tactics?

Should OSes sandbox localhost better?

Drop your thoughts 👇

12.06.2025 01:37 — 👍 1 🔁 0 💬 0 📌 0

8/

Devs: this is a lesson in security boundaries.

If apps can watch what your browser sees via localhost, we’ve got a systemic issue.

WebRTC, localhost ports, and OS-level blind spots all need attention.

12.06.2025 01:37 — 👍 1 🔁 0 💬 1 📌 0
