Paper title and authors

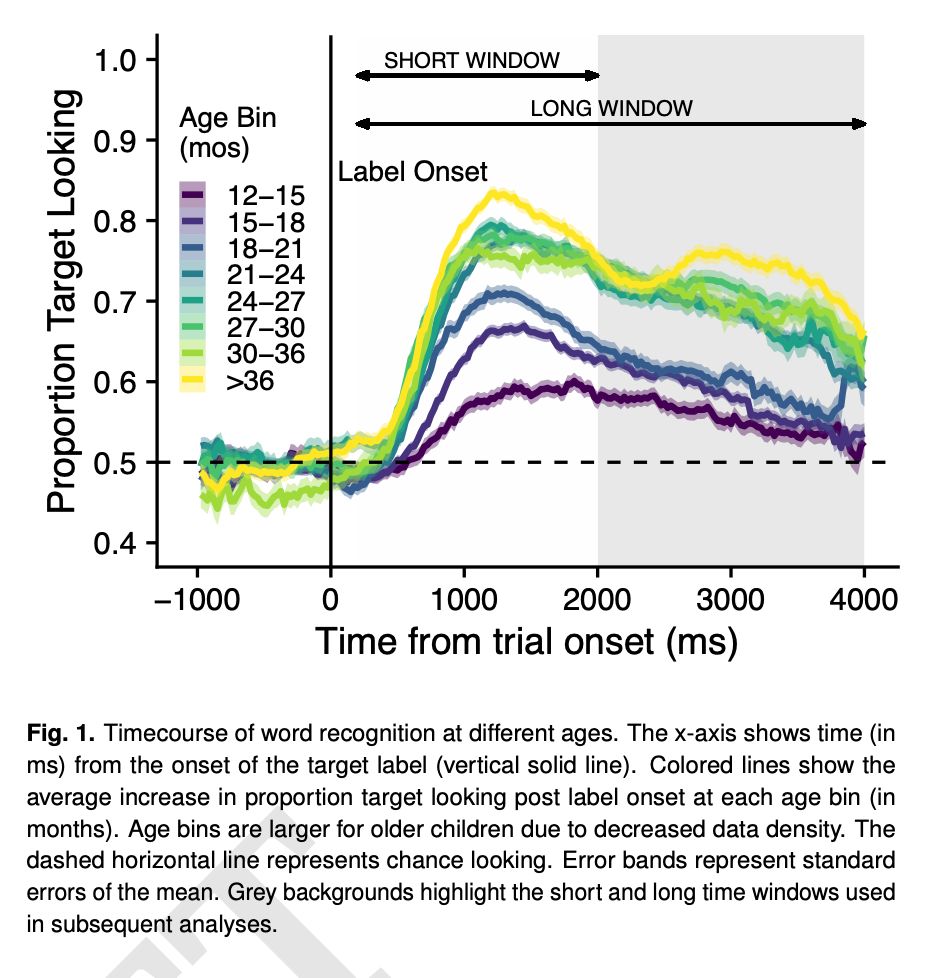

Now up as a reviewed @elife.bsky.social preprint: "Continuous developmental changes in word recognition support language learning across early childhood" elifesciences.org/reviewed-pre...

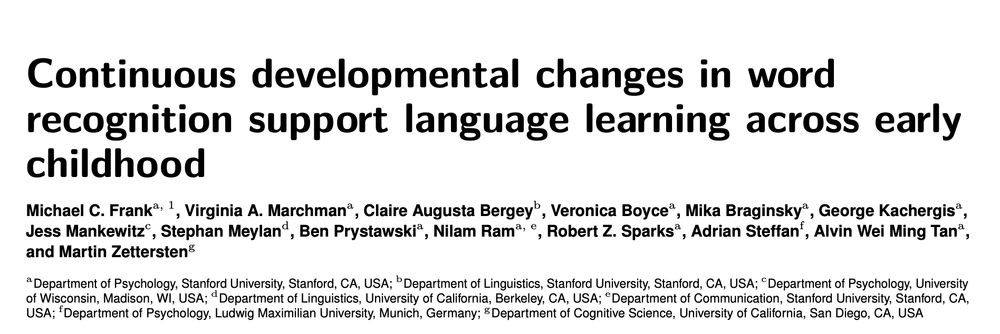

Using data from ~2000 kids ages 1-6, we quantify links between word recognition and early vocabulary growth!

05.01.2026 21:39

Now out in Cognition, work with the great @gershbrain.bsky.social @tobigerstenberg.bsky.social on formalizing self-handicapping as rational signaling!

📃 authors.elsevier.com/a/1lo8f2Hx2-...

19.09.2025 03:46

How do we predict what others will do next? 🤔

We look for patterns. But what are the limits of this ability?

In our new paper at CCN 2025 (@cogcompneuro.bsky.social), we explore the computational constraints of human pattern recognition using the classic game of Rock, Paper, Scissors 🗿📄✂️

12.08.2025 22:55


My final project from grad school is out now in Dev Psych! Mombasa County preschoolers were more accurate on object-based than picture-based vocabulary assessments, whereas Bay Area preschoolers were equally accurate on object-based and picture-based assessments.

psycnet.apa.org/doiLanding?d...

06.08.2025 23:54

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)


In neuroscience, we often try to understand systems by analyzing their representations — using tools like regression or RSA. But are these analyses biased towards discovering a subset of what a system represents? If you're interested in this question, check out our new commentary! Thread:

05.08.2025 14:36

🚨New paper! We know models learn distinct in-context learning strategies, but *why*? Why generalize instead of memorize to lower loss? And why is generalization transient?

Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵

1/

28.06.2025 02:35

How can we combine the process-level insight that think-aloud studies give us with the large scale that modern online experiments permit? In our new CogSci paper, we show that speech-to-text models and LLMs enable us to scale up the think-aloud method to large experiments!

25.06.2025 05:32

Delighted to announce our CogSci '25 workshop at the interface between cognitive science and design 🧠🖌️!

We're calling it: 🏺Minds in the Making🏺

🔗 minds-making.github.io

June – July 2024, free & open to the public

(all career stages, all disciplines)

06.06.2025 00:30

figure 2 from our preprint, reporting the results from two experiments

we measure moral judgments about dividing money between two parties and manipulate the degree of asymmetry in the outside options each party has

we find that moral judgments track predictions from rational bargaining models like the nash bargaining solution and the kalai-smorodinsky solution in a negotiation context

by contrast, in a donation context, moral intuitions completely reverse, instead tracking redistributive and egalitarian principles

preprint link: https://osf.io/preprints/psyarxiv/3uqks_v1

the functional form of moral judgment is (sometimes) the nash bargaining solution

new preprint👇

20.05.2025 15:08
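A minimal sketch of what the Nash bargaining solution predicts for the simplest money-splitting case, assuming a fixed total divided along a straight line (x1 + x2 = total) and outside options d1, d2 (what each party gets if no deal is reached). The function name `nash_bargaining_split` is hypothetical and this is not the preprint's model code; it only illustrates how asymmetric outside options shift the predicted split.

```python
def nash_bargaining_split(total: float, d1: float, d2: float) -> tuple[float, float]:
    """Split `total` between two parties with outside options d1 and d2.

    The Nash bargaining solution maximizes the product of gains
    (x1 - d1) * (x2 - d2) subject to x1 + x2 == total. On this
    linear frontier the maximum is an equal split of the surplus.
    """
    surplus = total - d1 - d2
    if surplus < 0:
        raise ValueError("outside options exceed the amount being split")
    # Each party receives its outside option plus half of the surplus.
    return d1 + surplus / 2, d2 + surplus / 2


# Asymmetric outside options: party 1 can walk away with 60, party 2 with 0.
print(nash_bargaining_split(100, 60, 0))  # (80.0, 20.0)
```

Under the same linear-frontier assumption, the Kalai-Smorodinsky solution gives the identical split: each party's largest possible gain over its outside option is the whole surplus, so equalizing proportional gains amounts to equalizing absolute gains. The two solutions can diverge when the feasible set is not a straight line.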

Despite the world being on fire, I can't help but be thrilled to announce that I'll be starting as an Assistant Professor in the Cognitive Science Program at Dartmouth in Fall '26. I'll be recruiting grad students this upcoming cycle—get in touch if you're interested!

07.05.2025 22:08

title of paper (in text) plus author list

Time course of word recognition for kids at different ages.

Super excited to submit a big sabbatical project this year: "Continuous developmental changes in word recognition support language learning across early childhood": osf.io/preprints/ps...

14.04.2025 21:58


Hello bluesky world :) excited to share a new paper on data visualization literacy 📈 🧠 w/ @judithfan.bsky.social, @arnavverma.bsky.social, Holly Huey, Hannah Lloyd, @lacepadilla.bsky.social!

📝 preprint: osf.io/preprints/ps...

💻 code: github.com/cogtoolslab/...

07.03.2025 17:05

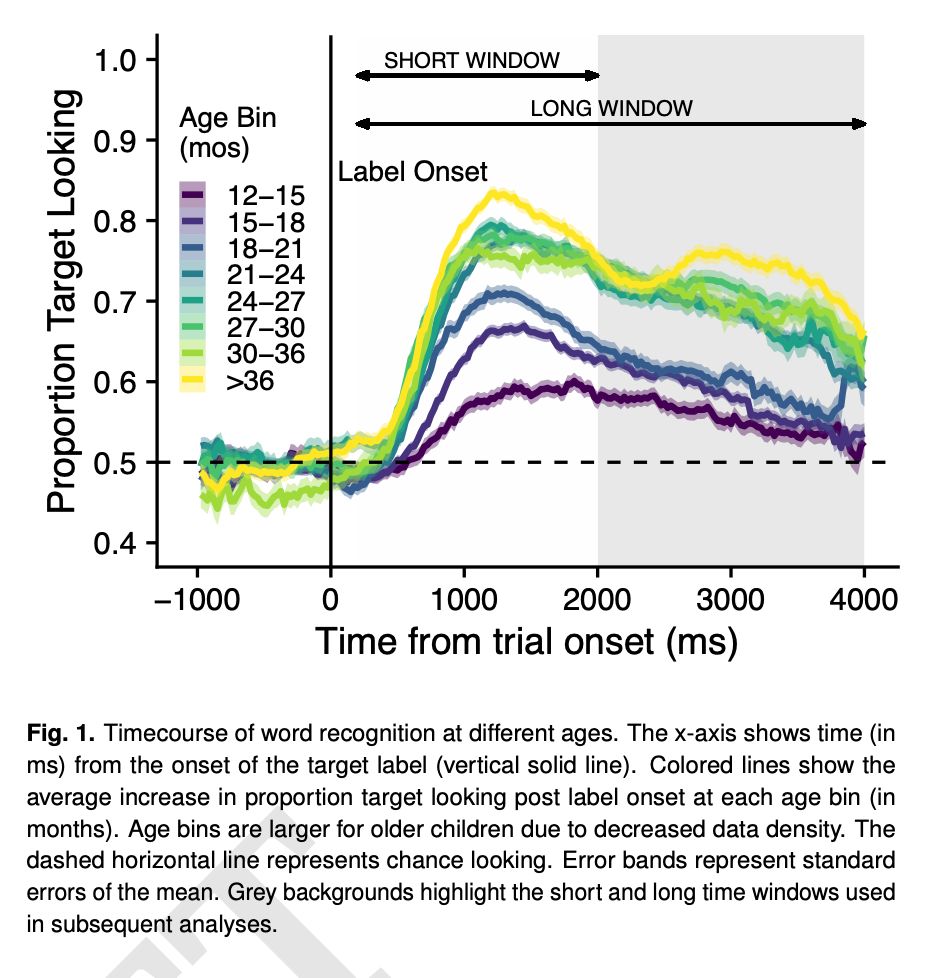

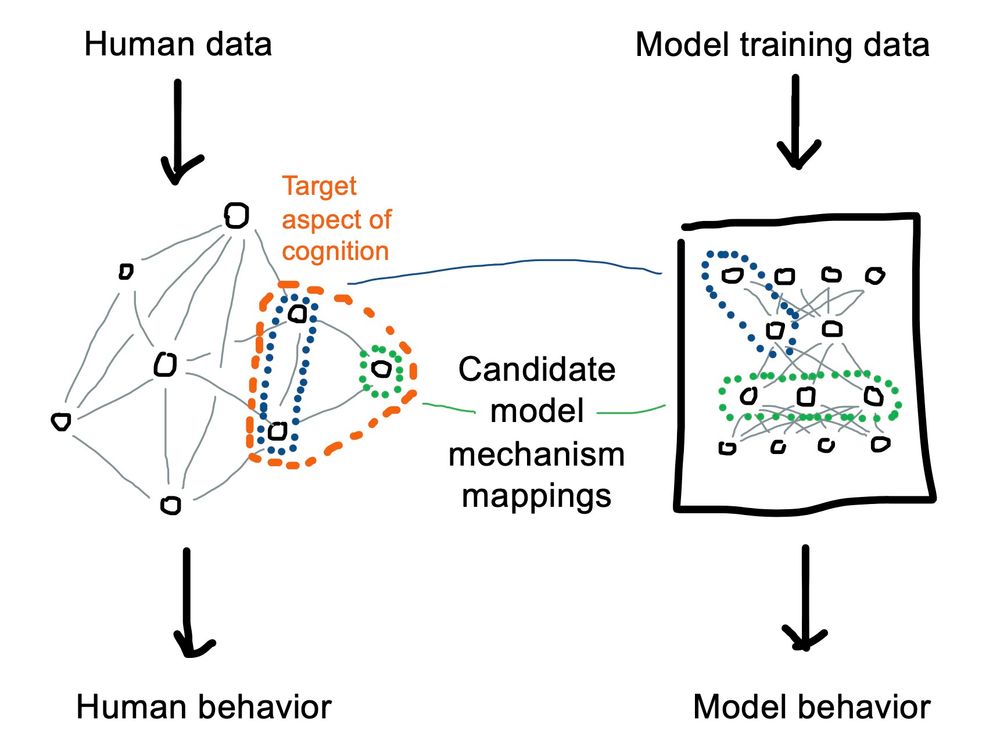

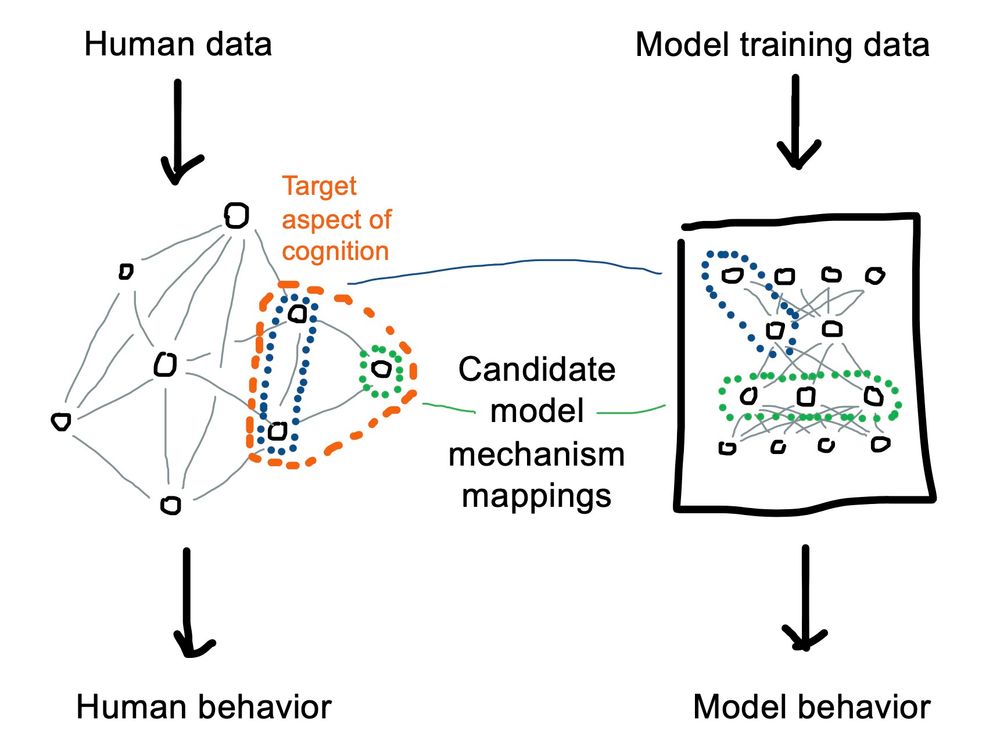

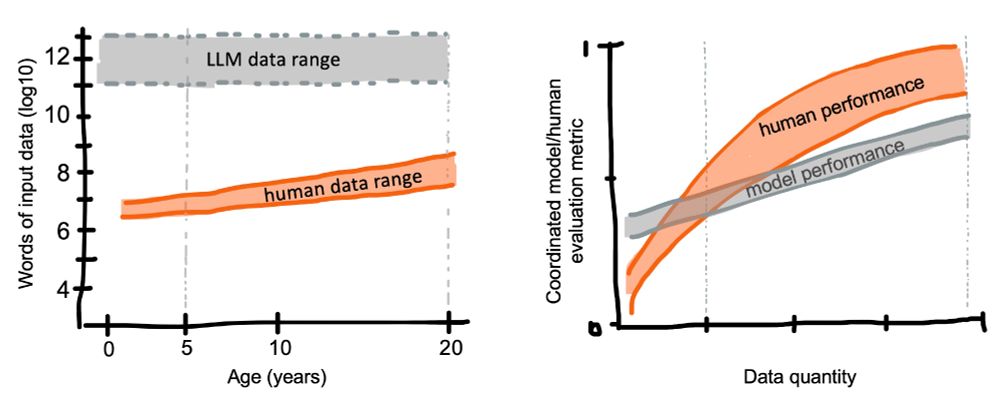

Figure 1. A schematic depiction of a model-mechanism mapping between a human learning system (left side) and a cognitive model (right side). Candidate model-mechanism mappings are pictured as mappings between representations, but they can also be defined in terms of input data, architecture, or learning objective.

Figure 2. Data efficiency in human learning. (left) Order of magnitude of LLM vs. human training data, plotted by human age. Ranges are approximated from Frank (2023a). (right) A schematic depiction of evaluation scaling curves for human learners vs. models, plotted by training data quantity.

Paper abstract

AI models are fascinating, impressive, and sometimes problematic. But what can they tell us about the human mind?

In a new review paper, @noahdgoodman.bsky.social and I discuss how modern AI can be used for cognitive modeling: osf.io/preprints/ps...

06.03.2025 17:39

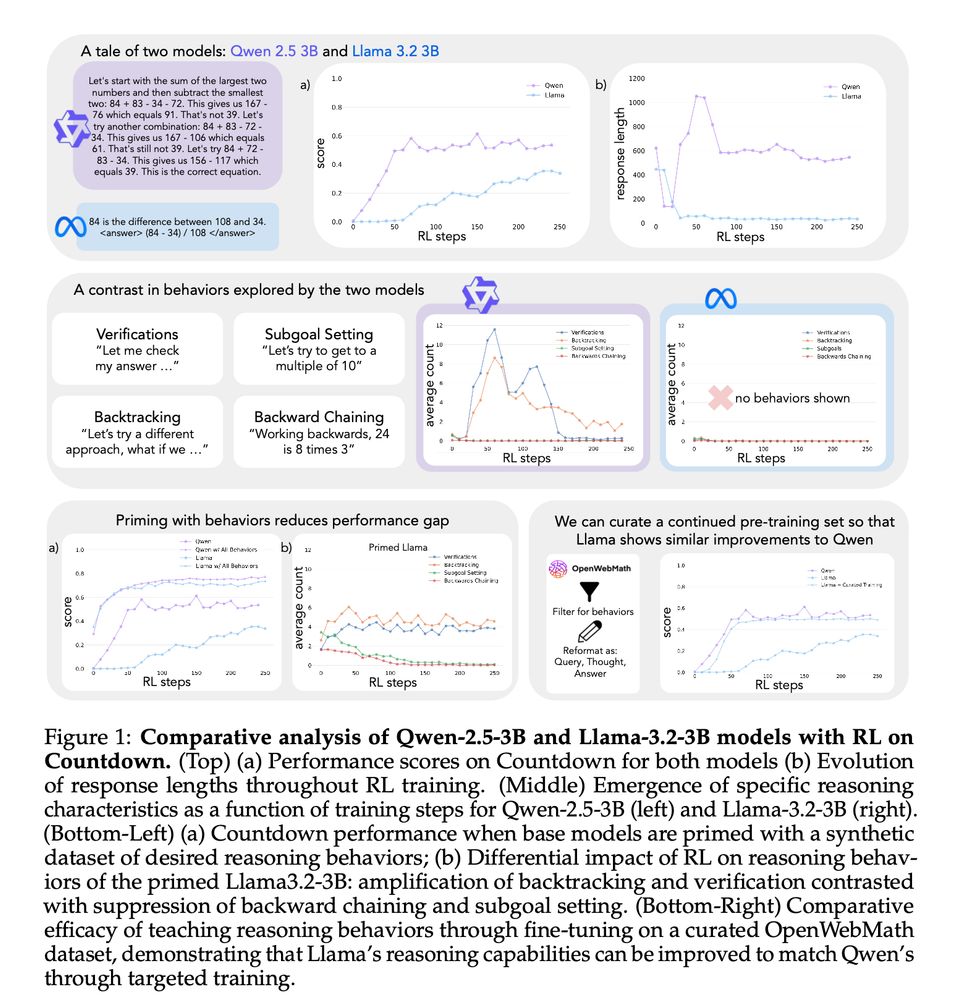

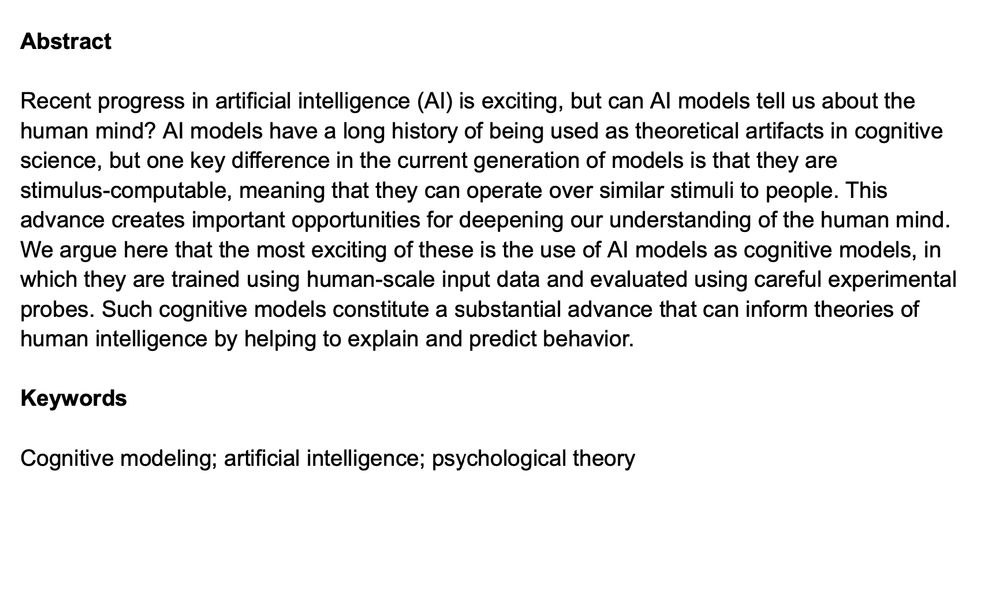

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

04.03.2025 18:15

New paper in Psychological Review!

In "Causation, Meaning, and Communication" Ari Beller (cicl.stanford.edu/member/ari_b...) develops a computational model of how people use & understand expressions like "caused", "enabled", and "affected".

📃 osf.io/preprints/ps...

📎 github.com/cicl-stanfor...

🧵

12.02.2025 18:25

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

10.12.2024 18:17

Hey! Could you add me?

23.11.2024 23:16

Aerial picture of the UBC campus, with an arrow pointing to a building and text asking "Your PhD lab?"

Do you want to understand how language models work, and how they can change language science? I'm recruiting PhD students at UBC Linguistics! The research will be fun, and Vancouver is lovely. So much cool NLP happening at UBC across both Ling and CS! linguistics.ubc.ca/graduate/adm...

18.11.2024 19:43


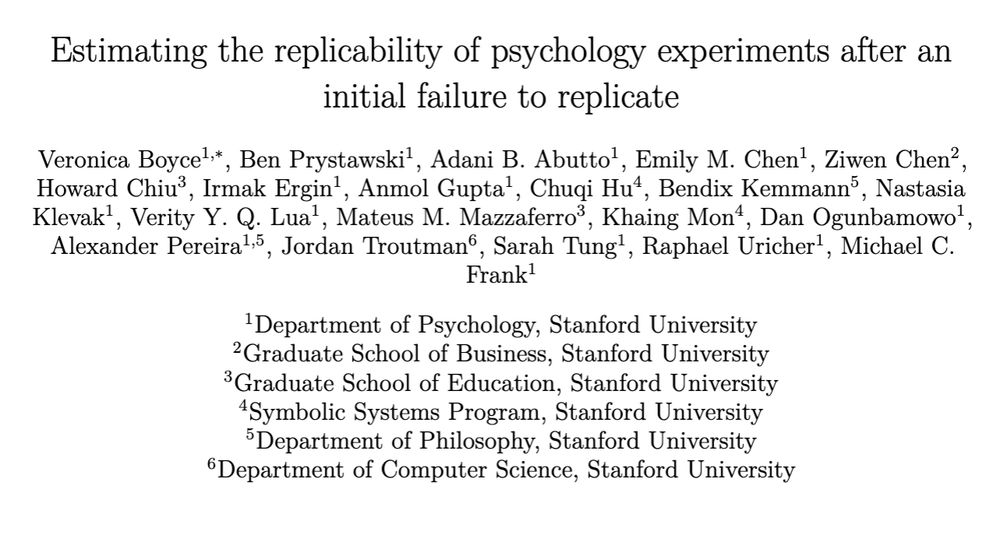

If you try to replicate a finding so you can build on it, but your study fails, what should you do? Should you follow up and try to "rescue" the failed rep, or should you move on? Boyce et al. tried to answer this question; in our sample, 5 of 17 rescue projects succeeded.

osf.io/preprints/ps...

18.10.2024 15:51

A promotional image of One Hour One Life, showing a character growing up from a baby, to a child, to an adult, to an old woman, to a pile of bones. This work is not affiliated with One Hour One Life; we are grateful to Jason Rohrer, the game's developer, for making the game open data and open source.

Preprint alert! After 4 years, I’m super excited to share work with @thecharleywu.bsky.social @gershbrain.bsky.social and Eric Schulz on the rise and fall of technological development in virtual communities in #OneHourOneLife #ohol

doi.org/10.31234/osf...

13.09.2024 19:29

Clear clusters in model representations are driven by some features (plotted as colors), while other, more complex features (plotted as shapes) are mixed within the color clusters.

How well can we understand an LLM by interpreting its representations? What can we learn by comparing brain and model representations? Our new paper highlights intriguing biases in learned feature representations that make interpreting them more challenging! 1/

23.05.2024 18:58

When a replication fails, researchers have to decide whether to make another attempt or move on. How should we think about this decision? Here's a new paper trying to answer this question, led by Veronica Boyce and featuring student authors from my class!

osf.io/preprints/ps...

06.05.2024 19:23

Cog Neuro PhD student @StanfordPsych studying brain development, neuroanatomy, & education 🧠 | she/her

pedestrian and straphanger, reluctantly in california, natural language processor, contentedly irrational, humanist | no kings, no masters, no ghosts

fiat veritas, et pereat mundus

phd student at stanford. developmental cognitive neuroscience. visual perception. infant fmri. she/her.

Getting a PhD in humanities+data. Evolutionary perspectives on language, culture, sign systems.

+ http://mastodon.green/@ptinits

+ twitter handle @yrgsupp

https://peetertinits.github.io/

Methodology prof in psychology at the University of British Columbia working on Schmeasurement. Into big team science, open science, and latent variables. Flake lab does not study flakes.

PhD student at MIT Brain and Cognitive Sciences, Tedlab. I study psycholinguistics. immigrant 🏳️‍🌈 he/him ex-STEM-phobic

website: mpoliak.notion.site

Cognitive scientist working at the intersection of moral cognition and AI safety. Currently: Google DeepMind. Soon: Assistant Prof at NYU Psychology. More at sites.google.com/site/sydneymlevine.

Associate Professor, Dept of Psychology, Stanford University

Social Learning, Cognition, Development | sll.stanford.edu

Blog: https://argmin.substack.com/

Webpage: https://people.eecs.berkeley.edu/~brecht/

Waiting on a robot body. All opinions are universal and held by both employers and family. ML/NLP professor.

nsaphra.net

CompNeuro M.Sc @ École Normale Supérieure - Paris

Cognitive scientist studying how morality, happiness, and other subjective magnitudes can be quantified.

Postdoctoral fellow at Yale University

https://www.vladchituc.com/

Psych PhD student @Harvard

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

We are a lab studying how children learn and what they understand about the world. Our lab is at Brown University and we also have researchers at the University of Toronto.

PhD student @Princeton Psych under Drs. Natalia Vélez & Tom Griffiths, studying the computational cognition of human aggregate minds. Before @Penn @Cal

👩🏻‍🏫 asst teaching prof @ uc san diego

🧠 cognitive scientist

Researcher at @ox.ac.uk (@summerfieldlab.bsky.social) & @ucberkeleyofficial.bsky.social, working on AI alignment & computational cognitive science. Author of The Alignment Problem, Algorithms to Live By (w. @cocoscilab.bsky.social), & The Most Human Human.

Kempner Institute research fellow @Harvard interested in scaling up (deep) reinforcement learning theories of human cognition

prev: deepmind, umich, msr

https://cogscikid.com/
