Choudhury and Kim et al., "Accelerating Vision Transformers With Adaptive Patch Sizes"

Transformer patches don't need to be of uniform size: choose sizes based on entropy for faster training/inference. Are scale-spaces gonna make a comeback?

22.10.2025 20:08 — 👍 16 🔁 4 💬 3 📌 1
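The post above doesn't spell out the paper's method. What follows is only a generic sketch of the idea, not the authors' implementation. It builds a quadtree that keeps a large patch where the image is flat (low entropy) and splits it into four smaller patches where the image is detailed (high entropy). The histogram bin count, entropy threshold, and minimum patch size are hypothetical choices.

```python
import math
from typing import List, Tuple

Patch = Tuple[int, int, int]  # (row, col, side length) of a square patch


def patch_entropy(img: List[List[int]], r: int, c: int, size: int, bins: int = 16) -> float:
    """Shannon entropy (bits) of the 8-bit intensities in a size x size patch, histogrammed into `bins`."""
    counts = [0] * bins
    for i in range(r, r + size):
        for j in range(c, c + size):
            counts[img[i][j] * bins // 256] += 1
    n = size * size
    return -sum((k / n) * math.log2(k / n) for k in counts if k)


def adaptive_patches(img: List[List[int]], r: int, c: int, size: int,
                     min_size: int, threshold: float) -> List[Patch]:
    """Quadtree split: keep a patch whole if it is low-entropy (or already minimal), else split into 4."""
    if size <= min_size or patch_entropy(img, r, c, size) <= threshold:
        return [(r, c, size)]
    half = size // 2
    patches: List[Patch] = []
    for dr in (0, half):
        for dc in (0, half):
            patches += adaptive_patches(img, r + dr, c + dc, half, min_size, threshold)
    return patches


# Toy 8x8 grayscale image: flat (all zeros) except a detailed 4x4 top-left corner.
img = [[0] * 8 for _ in range(8)]
for i in range(4):
    for j in range(4):
        img[i][j] = (i * 4 + j) * 16

print(adaptive_patches(img, 0, 0, 8, min_size=2, threshold=1.0))
```

On this toy image, the three flat quadrants stay as 4x4 patches and only the detailed corner is split. That gives 7 patches, `[(0, 0, 2), (0, 2, 2), (2, 0, 2), (2, 2, 2), (0, 4, 4), (4, 0, 4), (4, 4, 4)]`, instead of the 16 a uniform 2x2 grid would produce. Fewer tokens is where a speedup would come from.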

![AIME25: Total Memory vs. Accuracy (Qwen3)](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:ckaz32jwl6t2cno6fmuw2nhn/bafkreiatqdqcrxckrhsruoka6llaggjdsr3cpgspql5lnn7kttwrx3q7ga@jpeg)

A scatter plot compares AIME25 accuracy (%) against total memory (weights + KV cache, in GB) for Qwen3 models at several sizes and quantization levels.

• X-axis: total memory (weights + KV cache) in GB, log scale, roughly 1 to 100

• Y-axis: accuracy (%), 0 to 75

• Color (model size): 0.6B yellow, 1.7B orange, 4B salmon, 8B pink, 14B purple, 32B blue

• Shape (precision): circle 16-bit, triangle 8-bit, square 4-bit

• Marker size (context length): small 2k tokens, large 30k tokens

Main trend: larger models (further right, darker colors) reach higher accuracy but need significantly more memory, while smaller models (on the left, yellow/orange) stay below 30% accuracy. Compressing to 8-bit or 4-bit lowers memory use but can reduce accuracy slightly. An inset (upper center) zooms in on the 8B 8-bit and 14B 4-bit points, which are close in accuracy despite different memory footprints. Overall, accuracy grows with total memory and model size, with diminishing returns beyond the 14B range.

Is 32B-4bit equal to 16B-8bit? Depends on the task:

* math: precision matters

* knowledge: effective param count matters more

* 4B-8bit threshold: above it, prefer quantization; below it, prefer more params

* parallel test-time compute (TTC) only works above 4B-8bit

arxiv.org/abs/2510.10964

15.10.2025 11:10 — 👍 31 🔁 8 💬 3 📌 0
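Here is a back-of-the-envelope sketch of the memory accounting behind this question and the figure's x-axis (weights + KV cache). It rests on several assumptions: 1 GB = 1e9 bytes, the KV cache stays at 16-bit regardless of weight precision, and the layer/head numbers are a hypothetical grouped-query-attention config. Those numbers are not taken from the paper or from a specific Qwen3 checkpoint.

```python
def weight_gb(params_b: float, bits: int) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes): parameters x bits / 8.
    Ignores quantization scales/zero-points and any layers kept at higher precision."""
    return params_b * bits / 8


def kv_cache_gb(layers: int, kv_heads: int, head_dim: int, tokens: int,
                bytes_per_elem: int = 2, sequences: int = 1) -> float:
    """KV cache in GB: one key and one value vector per layer, KV head, token, and sequence."""
    return 2 * layers * kv_heads * head_dim * tokens * sequences * bytes_per_elem / 1e9


# Same weight budget: 32B at 4-bit vs. 16B at 8-bit.
print(weight_gb(32, 4), weight_gb(16, 8))  # 16.0 16.0

# Hypothetical GQA config (illustrative, not from the paper): 16-bit KV cache, 30k tokens,
# one sequence vs. 4 parallel samples.
cfg = dict(layers=36, kv_heads=8, head_dim=128, tokens=30_000)
print(round(kv_cache_gb(**cfg), 2), round(kv_cache_gb(sequences=4, **cfg), 2))  # 4.42 17.69
```

With these assumed numbers, four parallel 30k-token samples need more KV cache memory than the model's 16 GB of weights. That hints at why parallel TTC only pays off once a model is big enough to use that memory well.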

YouTube video by Monsieur Phi

Luc Julia at the Senate: autopsy of a huge load of NONSENSE

NEW VIDEO! I dissect the case of Luc Julia, the reputed co-creator of Siri and world AI expert, lauded in the media and recently heard by the Senate. The result is harsh, but it was necessary.

youtu.be/e5kDHL-nnh4

11.08.2025 14:38 — 👍 395 🔁 163 💬 44 📌 54

Why would you ride in a car driven by a human? Do you have some sort of death wish?

28.06.2025 20:16 — 👍 44 🔁 5 💬 6 📌 1

Last month I did a little experiment.

I wanted to see how the exact same post would perform on both X (Twitter) and Bluesky.

The results were...interesting...

[Thread]

16.03.2025 18:08 — 👍 2509 🔁 1414 💬 128 📌 352

New LinkedIn wall background, thanks

15.03.2025 09:41 — 👍 0 🔁 0 💬 0 📌 0

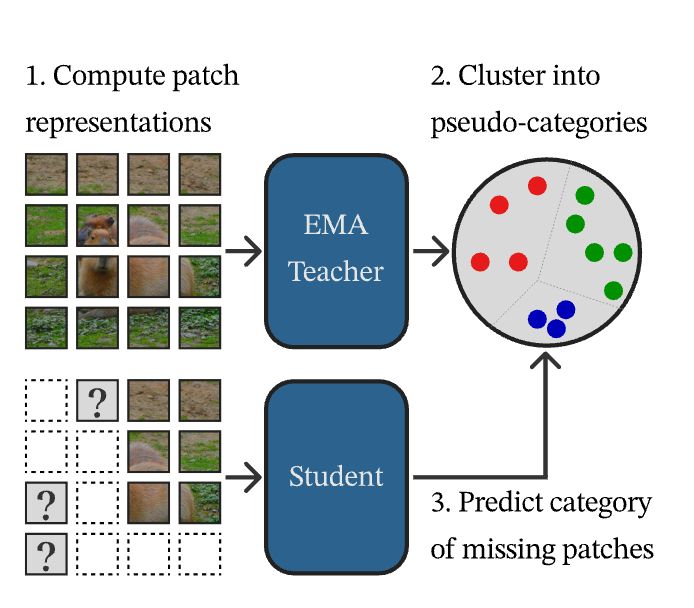

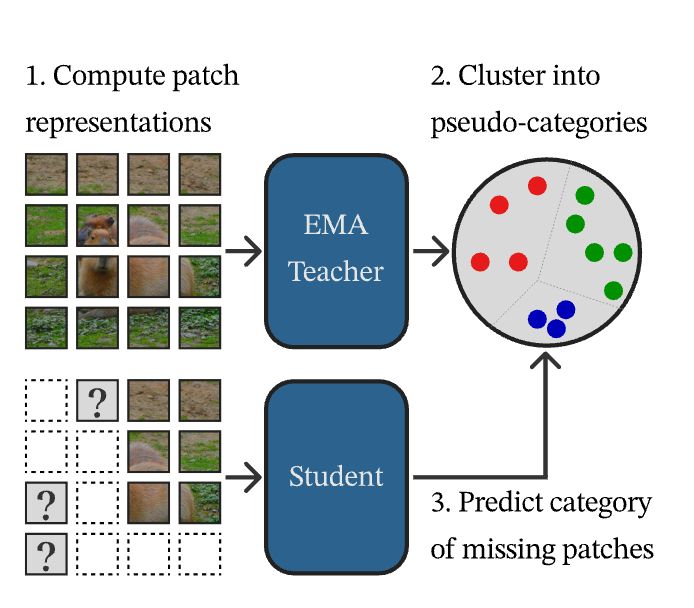

Want strong SSL, but not the complexity of DINOv2?

CAPI: Cluster and Predict Latent Patches for Improved Masked Image Modeling.

14.02.2025 18:04 — 👍 49 🔁 10 💬 1 📌 1

What for?

09.01.2025 05:36 — 👍 0 🔁 0 💬 1 📌 0

I get the feeling the chart actually tells us the opposite, no?

10.12.2024 14:11 — 👍 0 🔁 0 💬 0 📌 0

Originally the default wallpaper of Microsoft's Windows XP, this photo shows green rolling hills with a vibrant blue sky and white clouds in the background. Charles O'Rear took the photo in California, USA.

We've always been a fan of blueskies.

04.04.1975 12:00 — 👍 11865 🔁 2119 💬 652 📌 656

Free speech on twitter:

01.12.2024 02:30 — 👍 109 🔁 5 💬 5 📌 0

GitHub - davidgasquez/docs-to-llmstxt: 🤖 Compile docs into text files for LLMs

Inspired by @simonwillison.net's llm-docs repo, I made a similar one that compiles project docs into single TXT files that can be fed to LLMs.

Right now it only has the atproto docs, but it has already been useful to me for answering random questions about the project.

github.com/davidgasquez...

19.11.2024 09:48 — 👍 64 🔁 6 💬 4 📌 0
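Below is a minimal sketch of the general idea described in the post: walk a docs folder and concatenate every doc file into one TXT file, with a header line naming each source path. This is not the docs-to-llmstxt repo's actual code. The extension set, header format, and function names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, Tuple

DOC_EXTS = {".md", ".mdx", ".rst", ".txt"}  # assumption: which files count as "docs"


def render(docs: Iterable[Tuple[str, str]]) -> str:
    """Join (relative_path, text) pairs into one string, each file under a header line."""
    parts = [f"# File: {path}\n\n{text.strip()}\n" for path, text in docs]
    return "\n".join(parts)


def compile_docs(docs_dir: str, out_file: str) -> int:
    """Write every doc file under docs_dir (sorted by path) into one TXT file; return the file count."""
    root = Path(docs_dir)
    files = sorted(p for p in root.rglob("*") if p.is_file() and p.suffix in DOC_EXTS)
    docs = [(p.relative_to(root).as_posix(), p.read_text(encoding="utf-8")) for p in files]
    Path(out_file).write_text(render(docs), encoding="utf-8")
    return len(files)
```

Usage would look like `compile_docs("atproto-docs/", "atproto.txt")`, where both paths are placeholders. Keeping `render` separate from the filesystem walk makes the output format easy to test and to swap, for example for a stricter llms.txt layout.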

Assistant Professor of Computer Science at the University of British Columbia. I also post my daily finds on arxiv.

Assistant Professor at UW and Staff Research Scientist at Google DeepMind. Social Reinforcement Learning in multi-agent and human-AI interactions. PhD from MIT. Check out https://socialrl.cs.washington.edu/ and https://natashajaques.ai/.

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Writing The Pragmatic Engineer (@pragmaticengineer.com), the #1 technology newsletter on Substack. Author of The Software Engineer's Guidebook (engguidebook.com). Formerly at Uber, Skype, Skyscanner. More at pragmaticengineer.com

A business analyst at heart who enjoys delving into AI, ML, data engineering, data science, data analytics, and modeling. My views are my own.

You can also find me at threads: @sung.kim.mw

AI Architect | North Carolina | AI/ML, IoT, science

WARNING: I talk about kids sometimes

Lead Engineer at AIPRM.com and LinkResearchTools.com, building AIPRM for ChatGPT & Claude

Singular ideas in the service of progress.

lel.media

A latent space odyssey

gracekind.net

🧪 Data science, survey science, social science

💻 Director of Data Science @ Microsoft Garage

[Posts do not represent my employer]

🧮 Stats, R, python

📝 Science, Research: measurement, social biases, emotion. Ex-academic but scientist at heart

Competitive programming, cybersecurity & AI.

Co-founder & CTO @agoratlas.com, a large-scale social media analysis agency.

CNRS & ENS-PSL researcher

#Psychology, #neuroscience, #medicine, #education, #rationalism

Anti #fakemed and #fakescience

#Pastafarian minister

https://linktr.ee/FranckRamus

Sakana AI is an AI R&D company based in Tokyo, Japan. 🗼🧠

https://sakana.ai/careers

Computer Vision research group @ox.ac.uk

Deep Learning researcher | professor for Artificial Intelligence in Life Sciences | inventor of self-normalizing neural networks | ELLIS program Director

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

We measure the attention that research outputs receive from policy documents, mainstream news outlets, Wikipedia, social media and online reference managers. We detect sentiment of Bluesky/X posts.

Come for the attention to research. Stay for the memes.

Breakthrough AI to solve the world's biggest problems.

› Join us: http://allenai.org/careers

› Get our newsletter: https://share.hsforms.com/1uJkWs5aDRHWhiky3aHooIg3ioxm
