Virtual information session for Georgetown’s 2-year Master’s in Computational Linguistics! Learn about our courses in NLP, psycholinguistics, low-resource languages, digital humanities, and LLMs, plus phonology, syntax, & semantics. DM for registration link. Friday Nov. 21 | 10–11 AM #linguistics

14.11.2025 17:52 — 👍 3 🔁 2 💬 1 📌 0

Screenshot of a figure with two panels, labeled (a) and (b). The caption reads: "Figure 1: (a) Illustration of messages (left) and strings (right) in toy domain. Blue = grammatical strings. Red = ungrammatical strings. (b) Surprisal (negative log probability) assigned to toy strings by GPT-2."

New work to appear @ TACL!

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

10.11.2025 22:11 — 👍 91 🔁 20 💬 2 📌 3

I did not! Yikes! Another reason to include "pickle" and/or pickle-related emoji in any lab communication!

23.10.2025 01:04 — 👍 1 🔁 0 💬 0 📌 0

GUCL: Computation and Language @ Georgetown

Georgetown Linguistics has a dedicated Computational Linguistics PhD track, and a lively CL community on campus (gucl.georgetown.edu), including my faculty colleagues @complingy.bsky.social and Amir Zeldes.

21.10.2025 21:52 — 👍 0 🔁 0 💬 0 📌 0

PICoL stands for “Psycholinguistics, Information, and Computational Linguistics,” and I encourage applications from anyone whose research interests connect with these topics!

21.10.2025 21:52 — 👍 0 🔁 0 💬 1 📌 0

I will be recruiting PhD students via Georgetown Linguistics this application cycle! Come join us in the PICoL (pronounced “pickle”) lab. We focus on psycholinguistics and cognitive modeling using LLMs. See the linked flyer for more details: bit.ly/3L3vcyA

21.10.2025 21:52 — 👍 27 🔁 14 💬 2 📌 0

🌟🌟This paper will appear at ACL 2025 (@aclmeeting.bsky.social)! New updated version is on arXiv: arxiv.org/pdf/2505.07659 🌟🌟

03.06.2025 13:45 — 👍 9 🔁 0 💬 0 📌 0

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models.

New work with @kmahowald.bsky.social and @cgpotts.bsky.social!

🧵👇!

27.05.2025 14:32 — 👍 30 🔁 6 💬 1 📌 3

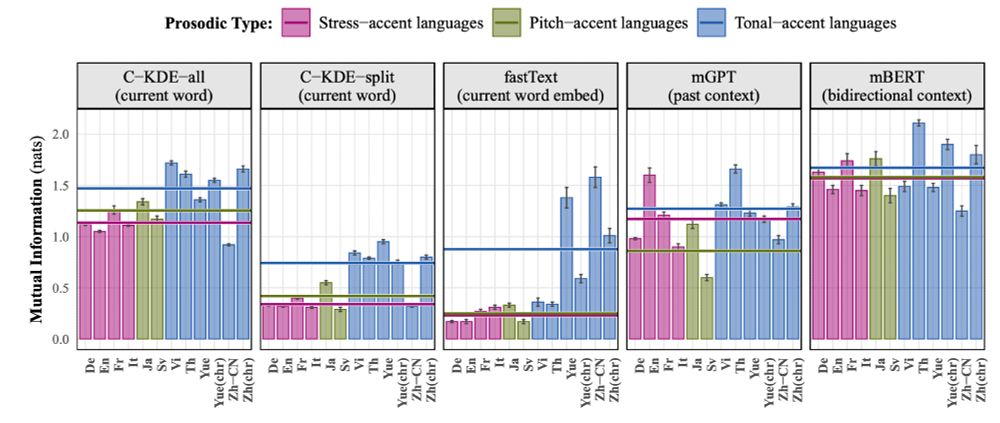

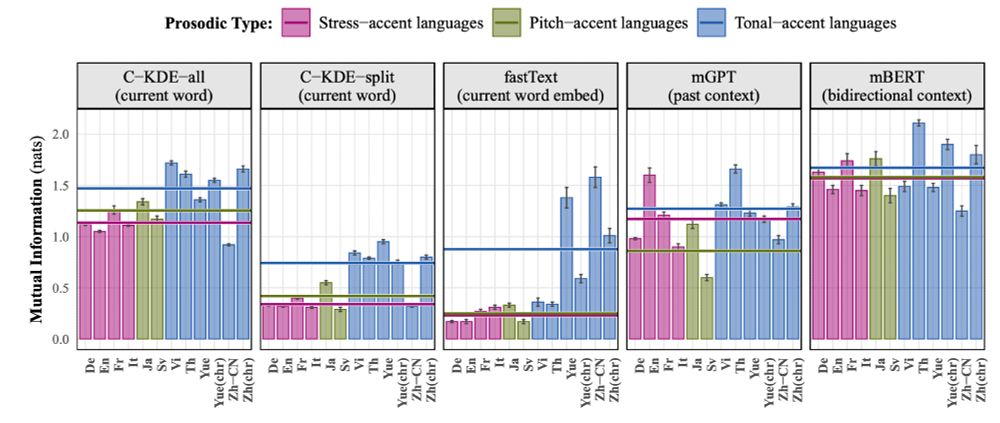

✅In line with our prediction, we find that mutual information is higher in tonal languages than in non-tonal languages. BUT, the way one represents context is important. When full sentential context is taken into account (mBERT and mGPT), the distinction collapses.

13.05.2025 13:21 — 👍 1 🔁 0 💬 1 📌 0

🌏🌍We test this prediction by estimating mutual information in an audio dataset of 10 different languages across 6 language families. 🌏🌍

13.05.2025 13:21 — 👍 0 🔁 0 💬 1 📌 0

We propose a way to do so using …📡information theory.📡 In tonal languages, pitch reduces uncertainty about lexical identity; therefore, the mutual information between pitch and words should be higher.

13.05.2025 13:21 — 👍 2 🔁 0 💬 1 📌 0
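
To make the prediction above concrete, here is a minimal sketch of mutual information between a discretized pitch category and a word, assuming a simple plug-in (count-based) estimator. It is not the estimation procedure used in the paper, which works from audio data; the function name `mutual_information` and the toy observations are invented purely for illustration.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Plug-in estimate of I(X; Y) in bits from a list of (x, y) observations."""
    n = len(pairs)
    joint = Counter(pairs)                 # counts of each (x, y) pair
    px = Counter(x for x, _ in pairs)      # marginal counts of x
    py = Counter(y for _, y in pairs)      # marginal counts of y
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), expressed with raw counts
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi


# Hypothetical toy data: (pitch category, word).
# "Tonal" toy language: pitch fully determines which word was said.
tonal = [("high", "ma1"), ("high", "ma1"), ("low", "ma2"), ("low", "ma2")]
# "Non-tonal" toy language: pitch is independent of the word.
non_tonal = [("high", "cat"), ("low", "cat"), ("high", "dog"), ("low", "dog")]

print(mutual_information(tonal))      # pitch is informative about the word
print(mutual_information(non_tonal))  # pitch carries no lexical information
```

In the toy tonal case, knowing the pitch removes all uncertainty about the word, so the estimate is one bit; in the toy non-tonal case, pitch and word are independent and the estimate is zero. Real speech falls somewhere in between, which is what makes mutual information usable as a graded measure.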

🌐But there are intermediate languages, which have lexically contrastive tone, but only sporadically, making some linguists doubt the tonal/non-tonal dichotomy. So, how can we measure how “tonal” a language is? 🧐🧐

13.05.2025 13:21 — 👍 0 🔁 0 💬 1 📌 0

🌏 Different languages use pitch in different ways. 🌏 “Tonal” languages, like Cantonese, use it to make lexical distinctions. 📖 While others, like English, use it for other functions, like marking whether or not a sentence is a question. ❓

13.05.2025 13:21 — 👍 0 🔁 0 💬 1 📌 0

GitHub - babylm/evaluation-pipeline-2025


I’ll also use this as a way to plug human-scale language modeling in the wild: This year’s BabyLM eval pipeline was just released last week at github.com/babylm/evalu.... For more info on BabyLM head to babylm.github.io

12.05.2025 15:48 — 👍 3 🔁 0 💬 0 📌 0

Couldn’t be happier to have co-authored this with a stellar team, including: Michael Hu, @amuuueller.bsky.social, @alexwarstadt.bsky.social, @lchoshen.bsky.social, Chengxu Zhuang, @adinawilliams.bsky.social, Ryan Cotterell, @tallinzen.bsky.social

12.05.2025 15:48 — 👍 3 🔁 1 💬 1 📌 0

This version includes 😱New analyses 😱new arguments 😱 and a whole new “Looking Forward” section! If you’re interested in what a team of (psycho) computational linguists thinks the future will hold, check out our brand new Section 8!

12.05.2025 15:48 — 👍 1 🔁 0 💬 1 📌 0

OSF

📣Paper Update 📣It’s bigger! It’s better! Even if the language models aren’t. 🤖New version of “Bigger is not always Better: The importance of human-scale language modeling for psycholinguistics” osf.io/preprints/ps...

12.05.2025 15:48 — 👍 18 🔁 3 💬 1 📌 2

OSF

Excited to share our preprint "Using MoTR to probe agreement errors in Russian"! w/ Metehan Oğuz, @wegotlieb.bsky.social, Zuzanna Fuchs Link: osf.io/preprints/ps...

1- We provide moderate evidence that processing of agreement errors is modulated by agreement type (internal vs external agr.)

07.03.2025 22:21 — 👍 3 🔁 1 💬 1 📌 0

Looking forward: Linguistic theory and methods

This chapter examines current developments in linguistic theory and methods, focusing on the increasing integration of computational, cognitive, and evolutionary perspectives. We highlight four major ...

Me and @wegotlieb.bsky.social were recently invited to write a wide-ranging reflection on the current state of linguistic theory and methodology.

A draft is up here. For anyone interested in thinking big about linguistics, we'd be happy to hear your thoughts!

arxiv.org/abs/2502.18313

#linguistics

27.02.2025 14:47 — 👍 14 🔁 2 💬 0 📌 0

⚖️📣This paper was a big departure from my typical cognitive science fare, and so much fun to write! 📣⚖️ Thank you to @bwal.bsky.social and especially to @kevintobia.bsky.social for their legal expertise on this project!

19.02.2025 14:25 — 👍 1 🔁 0 💬 0 📌 0

On the positive side, we suggest that LLMs can serve a role as “dialectic” partners 🗣️❔🗣️ helping judges and clerks strengthen their arguments, as long as judicial sovereignty is maintained 👩‍⚖️👑👩‍⚖️

19.02.2025 14:25 — 👍 2 🔁 0 💬 1 📌 0

⚖️ We also show, through demonstration, that it’s very easy to engineer prompts that steer models toward one’s desired interpretation of a word or phrase. 📖Prompting is the new “dictionary shopping” 😬 📖 😬

19.02.2025 14:25 — 👍 1 🔁 0 💬 1 📌 0

🏛️We identify five “myths” about LLMs which, when dispelled, reveal their limitations as legal tools for textual interpretation. To take one example, during instruction tuning, LLMs are trained on highly structured, non-natural inputs.

19.02.2025 14:25 — 👍 1 🔁 0 💬 1 📌 0

We argue no! 🙅‍♂️ While LLMs appear to possess excellent language capabilities, they should not be used as references for “ordinary language use,” at least in the legal setting. ⚖️ The reasons are manifold.

19.02.2025 14:25 — 👍 0 🔁 0 💬 1 📌 0

🏛️Last year a U.S. judge queried ChatGPT to help with their interpretation of “ordinary meaning,” in the same way one might use a dictionary to look up the ordinary definition of a word 📖 … But is it the same?

19.02.2025 14:25 — 👍 0 🔁 0 💬 1 📌 0

Professor at the University of Kassel, https://hessian.AI, and https://ScaDS.AI. Member of @webis.de

Research in information retrieval #IR, natural language processing #NLP, and artificial intelligence.

Working on dynamic signs and signals in communication at Tilburg University at the Department of Computational Cognitive Science. Interested in language & movement science, complex systems & 4E, Open Science (envisionbox.org) & other things (wimpouw.com)

Solitary, poor, nasty, brutish and short.

Linguistics and cognitive science at Northwestern. Opinions are my own. he/him/his

Asst Prof @UMD Info College. NLP, computational social science, political communication, linguistics. Past: Data Science Postdoc @UChicago, Info PhD @UMich, CS + Lx @Stanford. Interests: cats, Yiddish, talking to my cats in Yiddish.

Digital Humanities | NLP | Computational Theology

Now: Institute for Digital Humanities, University of Göttingen.

Before: Digital Academy, Göttingen Academy of Sciences and Humanities.

Before Before: The list is long...

Economic historian w broad interests including population health, First Nations, mobility, inequality & lives of the incarcerated. Directing https://thecanadianpeoples.com & editing Asia-Pacific EcHR https://onlinelibrary.wiley.com/journal/2832157x

🇨🇦🇦🇺🇳🇿🏴

Researcher in Cognitive Science | Laboratoire de Psychologie Cognitive, AMU & Institut Jean Nicod, ENS-PSL | Interested in how communication shapes languages

https://alexeykosh.github.io

Assistant Professor of Cognitive AI @UvA Amsterdam

language and vision in brains & machines

cognitive science 🤝 AI 🤝 cognitive neuroscience

michaheilbron.github.io

neuroscience and behavior in parrots and songbirds

Simons junior fellow and post-doc at NYU Langone studying vocal communication, PhD MIT brain and cognitive sciences

PhD student @mainlp.bsky.social (@cislmu.bsky.social, LMU Munich). Interested in language variation & change, currently working on NLP for dialects and low-resource languages.

verenablaschke.github.io

Democracy Skies in Blueness

asst prof of computer science at cu boulder

nlp, cultural analytics, narratives, communities

books, bikes, games, art

https://maria-antoniak.github.io

🔎 distributional information and syntactic structure in the 🧠 | 💼 postdoc @ Université de Genève | 🎓 MPI for Psycholinguistics, BCBL, Utrecht University | 🎨 | she/her

Research Fellow, University of Oxford

Theology, philosophy, ethics, politics, environmental humanities

Associate Director @LSRIOxford

Anglican Priest

https://www.theology.ox.ac.uk/people/revd-dr-timothy-howles

U.S. Senator, Massachusetts. She/her/hers. Official Senate account.

https://substack.com/@senatorwarren

assistant professor in computer science / data science at NYU. studying natural language processing and machine learning.

Stanford Linguistics and Computer Science. Director, Stanford AI Lab. Founder of @stanfordnlp.bsky.social . #NLP https://nlp.stanford.edu/~manning/

Speech • Language • Learning

https://grzegorz.chrupala.me

@ Tilburg University