www.npr.org/2026/02/14/n... I wasn’t expecting “whether you want it or not” to feature so prominently so soon in using AI in health care…

15.02.2026 00:00 — 👍 0 🔁 0 💬 0 📌 0

What do clinicians do when trust runs thin? open.substack.com/pub/familyme...

14.02.2026 13:55 — 👍 1 🔁 0 💬 0 📌 0

Cartoon. One person says to another, “We invented a robot that answers questions,” adding, “We just have to feed it 10 baby giraffes a day.” The other person asks, “But it answers the questions correctly?” The first person responds, “Oh my goodness, no. No no no no no.” By Aram J. French. Appropriated due to missing alt text.

12.02.2026 13:44 — 👍 12664 🔁 4123 💬 3 📌 61

We had no way of anticipating how the car would revolutionize the landscape. With it came highways, streets, drunk driving, day trips to the sea, road rage, etc., etc. We weren't intentional in how we adopted either the car or the EMR. What culture do we intend to create with AI? 10/10

05.02.2026 13:15 — 👍 0 🔁 1 💬 0 📌 0

(It is both more mundane and more laborious to double-check the output of someone/something else than to just do it yourself, as any attending earnestly reading over a trainee's notes can testify) 9/10

05.02.2026 13:15 — 👍 1 🔁 0 💬 1 📌 0

So, too, with the rest of medicine: all standardized technique. What we want is to make the red numbers black; to dispel the shadows on the CT scan. Humans are in the loop - for now - practicing a form of medicine that is itself a shadow of what it once was. 8/10

05.02.2026 13:15 — 👍 0 🔁 0 💬 1 📌 0

We're prepared for it because the practice of medicine is already a desiccated technical endeavor. A healthcare leader can discuss doing away with medical interpreters as if they were parts in a machine that offer no human value to the encounter itself. Translation is all technique and no culture. 7/10

05.02.2026 13:15 — 👍 1 🔁 0 💬 1 📌 0

I would suggest that we both are and are not prepared for this. We're prepared for it in the same way a turkey is prepared for Thanksgiving; the culture of medicine has fashioned the clinical encounter into one that is ripe for harvesting by various stakeholders. 6/10

05.02.2026 13:15 — 👍 1 🔁 0 💬 1 📌 0

There's also growing interest in AI surrogate decision-makers. For years this was a theoretical and nuanced debate relegated to ethics journals. Now proof-of-concept trials have been published in NEJM AI. Are we prepared for this? familymeetingnotes.substack.com/p/a-machine-... 5/10

05.02.2026 13:15 — 👍 0 🔁 0 💬 1 📌 0

What I didn't hear discussed in this convo was how AI scribes will almost certainly increase the degree of surveillance within the clinical encounter. Nothing from recent decades would suggest otherwise: the EMR was used by various stakeholders to pry into that space, so why not AI scribes? 4/10

05.02.2026 13:15 — 👍 2 🔁 0 💬 1 📌 0

But the burdens of modern technopoly - the challenges and admin oversight of the EMR, the "always on" access of in-basket messages, etc. - have driven clinicians to embrace the promises of AI. 3/10

05.02.2026 13:15 — 👍 0 🔁 0 💬 1 📌 0

Clinicians are desperate for "faster horses" - a phrase that reflects early-20th-century expectations of what the next advance after the horse-drawn carriage might offer. The automobile wasn't a faster horse; it was something altogether different. 2/10

05.02.2026 13:15 — 👍 0 🔁 0 💬 1 📌 0

It probably shouldn't be surprising to me anymore that clinicians are absolutely enamored with AI, just as they're usually enamored with technology more broadly (as evidenced by, e.g., the allure of interventional specialties over primary care). 1/10

05.02.2026 13:15 — 👍 0 🔁 0 💬 1 📌 0

Full disclosure: I haven't read the book they discuss in this episode of @geripal.bsky.social so my comments are just related to the convo. geripal.org/ai-and-healt... #medsky

05.02.2026 13:15 — 👍 2 🔁 1 💬 1 📌 0

My book has a cover! I wish I could share it with you right now, but unfortunately you'll have to wait until August 18, 2026. But you can preorder it now if you want.

www.amazon.com/Resisting-Th...

Clinicians talking with admin in several years:

“What would you say you do here?”

“I told you! I take the clinical recommendations from the AI so the patient doesn’t have to. I have people skills! I HAVE PEOPLE SKILLS!”

Sure would be nice to have a functional federal regulatory agency techcrunch.com/2026/02/03/l...

03.02.2026 18:48 — 👍 12 🔁 4 💬 2 📌 1

Really troubled by this question in a popular surgical critical care study book…

Surgical Critical Care and Emergency Surgery

The topic is early vs. late dialysis. So much is known on this topic, and this question is just disappointing… The keyed answer was…

The consent form Louis Washkansky signed before undergoing the first-ever human heart transplant, in 1967.

20.01.2026 14:44 — 👍 5 🔁 1 💬 1 📌 0

Media outlets sharpened their pens by telling us that cars and trucks, not drivers, hit pedestrians and cyclists. Now they rinse and repeat with AI.

02.01.2026 21:38 — 👍 5 🔁 1 💬 0 📌 0

![[Scene is a kitchen - a middle-aged woman called JANET is boiling peas at the stove. A younger, more colourfully dressed woman named LIZ approaches her.]

JANET:

Ugh...

LIZ:

What's up?

JANET:

I am so bored of cooking peas!

LIZ:

Have you tried...

AI peas?

JANET:

AI peas?

LIZ:

They're peas with AI!

[Liz holds up to us a packet of peas labelled: Pea-i AI - Peas with AI].

LIZ:

AI-powered peas harness the potential of your peas

JANET:

What

LIZ [Now a voiceover as we cut to a whizzy technology diagram of peas all connected by meaningless dotted lines]

Why not take your peas to the next level with AI Peas' new AI tools to power your peas?

[Show a techno diagram of a pea with a label reading 'AI' pointing to a random zone in it]

LIZ:

Each pea has AI in a way we haven't quite worked out yet but it's fine

[Show Janet and Liz now in a Matrix-style world of peas]

LIZ:

With AI peas you can supercharge productivity and make AI work for your peas!

JANET:

What

LIZ:

Shut up

LIZ:

Our game-changing Pea-AI gives you the freedom to unlock the potential of the power of the future of your peas workflow

From opening the bag of peas

to boiling the peas

to eating the peas

To spending millions on adding AI to the peas and then having to work out what that even means.

JANET:

Is it really necessary to-

LIZ [Grabbing Janet by the collar]:

THE PEAS HAVE GOT AI, JANET

[Cut to an advert ending screen, with the bag of peas and the slogan:

AI PEAS: Just 'Peas' for god's sake buy the AI peas.

[Ends]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:4m3we4apbjayclzcnhwe3pfp/bafkreiayqa37f7kik7l7s7fbtfp2sir5ckb662ry2lylinyjrfa2rkvvka@jpeg)

Every ad now

13.11.2025 17:38 — 👍 5835 🔁 2557 💬 70 📌 111

I think Derek Thompson is right to claim Alasdair MacIntyre was only half right when he argued our society had lost moral cohesion. We're still bound by a moral framework: the market. www.derekthompson.org/p/the-26-mos...

22.12.2025 10:20 — 👍 1 🔁 0 💬 0 📌 0

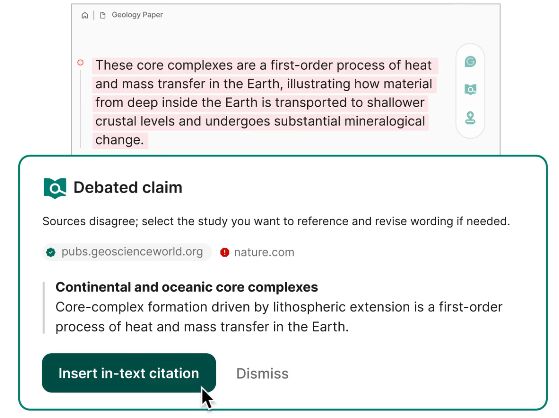

For help with attribution and sourcing, Grammarly is releasing a citation finder agent that automatically generates correctly formatted citations backing up claims in a piece of writing, and an expert review agent that provides personalized, topic-specific feedback.

Screenshot from Grammarly's demo of inserting a post-hoc citation. https://www.grammarly.com/ai-agents/citation-finder

It is not "attribution and sourcing" to generate post-hoc citations that have not been read and did not inform the student's writing. Those should be regarded as fraudulent: artifacts testifying to human actions and thought that did not occur.

www.theverge.com/news/760508/...

Coming back to this, I'm still struck by the implication that academics should care if the AI pushers "trust" all our work that's done without AI.

I don't know, man, you all seemed to trust our work enough to steal it from us wholesale.

To what extent is it our job as consultants to manage or attend to clinician distress? What happens when that clinician distress leads to conflict? #medsky

Guests Sara Johnson, Yael Schenker, & Anne Kelly.

👉 Post: bit.ly/GeriPalEp385

😀 Hosts: @alexsmithmd.bsky.social | @ewidera.bsky.social

I took up this question in the latest issue of Notes: open.substack.com/pub/familyme...

19.12.2025 11:00 — 👍 4 🔁 1 💬 0 📌 0

Dr. Paisley’s brow furrowed. “I will say, it looks like you’re spending about an average of three minutes per encounter on non-clinical chatter. Hmm. The weather, patient vacations. Of course, of course we want our patients to feel welcome, but…” A pie chart grew on the wall. “If you reduce that to just one minute, you’ll be able to see seven more patients in a day! Goodness, look at that. Three minutes of non-clinical puts you in the bottom 10th percentile. Friendly, but inefficient!”

familymeetingnotes.substack.com/p/where-the-...

18.12.2025 11:19 — 👍 1 🔁 0 💬 0 📌 0

It will maximize the oversight and intrusiveness of the EMR by placing admin ears in every clinical encounter.

18.12.2025 11:17 — 👍 2 🔁 0 💬 1 📌 0

They wonder what’s driving hospital investment in ambient AI for charting, given that the evidence for improved productivity isn’t there. Maybe it’s earnest care for clinician well-being? I have another suggestion: surveillance.

18.12.2025 11:17 — 👍 4 🔁 0 💬 1 📌 0

Screenshot of a post on Facebook from Alex Lamoreaux, depicting two versions of the "same" photograph of a bobcat in front of a pine plantation, one labeled "original game cam photo" and one labeled "AI processed." The markings have been altered in the AI photo, and the head has been changed and resized. Text says: Oh! A neat goose hybrid posted to ‘What’s this Bird?’ but when you zoom in on the photos the bill details have been too manipulated by AI photo editing to be accurately assessed for which species may be involved. Scroll on… A cool-looking Red-tailed Hawk that someone is wondering which subspecies it might be - right up my alley! But when you zoom in on the photos literally every feather group has been so heavily manipulated and altered by AI editing that it’s impossible to determine subspecies because the photo is essentially fake now; just a mashup of whatever AI thinks parts of a Red-tail should look like. Scroll on… WOW! That’s a remarkable trail cam photo of a Bobcat! It almost looks like a painting!… but the head looks oddly small…. hmmmm. After some prodding the original photo is produced and sure enough it had been so heavily altered by AI ‘post processing’ that the photo posted was essentially a digital mock-up with totally different proportions and fur markings. No longer the photo off the trail cam; it may as well have just been ‘created’ by AI from scratch. This stuff is killing me. My days on social media are becoming very limited. We can’t even trust simple, everyday photos of animals to be real. The wildlife community needs to quit editing with AI, and we need to start calling out photographers that are using it. Our reality is being destroyed all around us.

this field biologist does a great job highlighting the insidiousness of AI editing

these one-click AI edit buttons are incredibly appealing to many photographers, amateurs and professionals alike, and are framed as innocuous

this is worth sharing and educating people about