Travis Kelce slandered gumbo and was rightfully punished

10.02.2025 02:55 — 👍 368 🔁 80 💬 9 📌 9

Remy Guercio

@remy.guerc.io

Planes, trains, and occasionally automobiles. PMM @tailscale.com,📍 NOLA

Hah @chrisckchang.bsky.social the trend is Accidental ASMR not ASMR. Regardless of whether it’s accidental or not.

06.01.2025 18:35 — 👍 0 🔁 0 💬 0 📌 0

Analytics + session recording + surveys means you can do things like search for users who did X & Y, gave some answer, and then watch what actually happened.

I agree about talking to folks, but it’s a helpful complement.

That almost sounds Facebook-inflated-video-views level of suspicious.

06.01.2025 01:59 — 👍 0 🔁 0 💬 2 📌 0

It’s my favorite piece of B2B SaaS that I’ve used in a while.

PostHog is up there on that list for me too. If you get it set up right, it’s essentially Gong but for PLG / self-serve rather than sales.

I’ve never been a fan of the people who shoot off their leftover fireworks on the nights following New Year’s Eve, but someone is doing it in Uptown New Orleans right now and I wish they would read the fucking room

02.01.2025 01:10 — 👍 67 🔁 8 💬 3 📌 1

The related work section of this paper from last year is quite useful. Prediction vs compression, effects of tokenization, etc…

arxiv.org/pdf/2309.10668

Overfit is almost desired in this instance.

30.12.2024 14:57 — 👍 0 🔁 0 💬 0 📌 0

Yeah I guess the better it is at predicting the next token the tighter the encoding.

In this world it seems like you might want a ton of small LLMs with really tight training sets for a given context.

Things always get very odd to me when you need to use a model at temperature 0.

Or is this the embedding vector represented as characters?

LLMs and compression are interesting nonetheless. Still a little bit of a head scratcher.

Wouldn’t you expect better compression with what is effectively a pre-shared dictionary of ~170MB?

They say the training text is mostly English and code, so it seems like either there are still a bunch of Chinese characters being used as high context tokens or something happened with the tokenizer?

AMD can come close on “raw” numbers, but in the real world you’re getting only a fraction.

25.12.2024 19:19 — 👍 0 🔁 0 💬 0 📌 0

I’m not sure how you’d even reasonably do the compute comparison per $ over more than a few years. It’s hard enough as it is to compare real world FP16, INT8, etc… perf.

Lambda does its best for NVIDIA GPUs, but you have to look at specific models / precisions.

lambdalabs.com/gpu-benchmarks

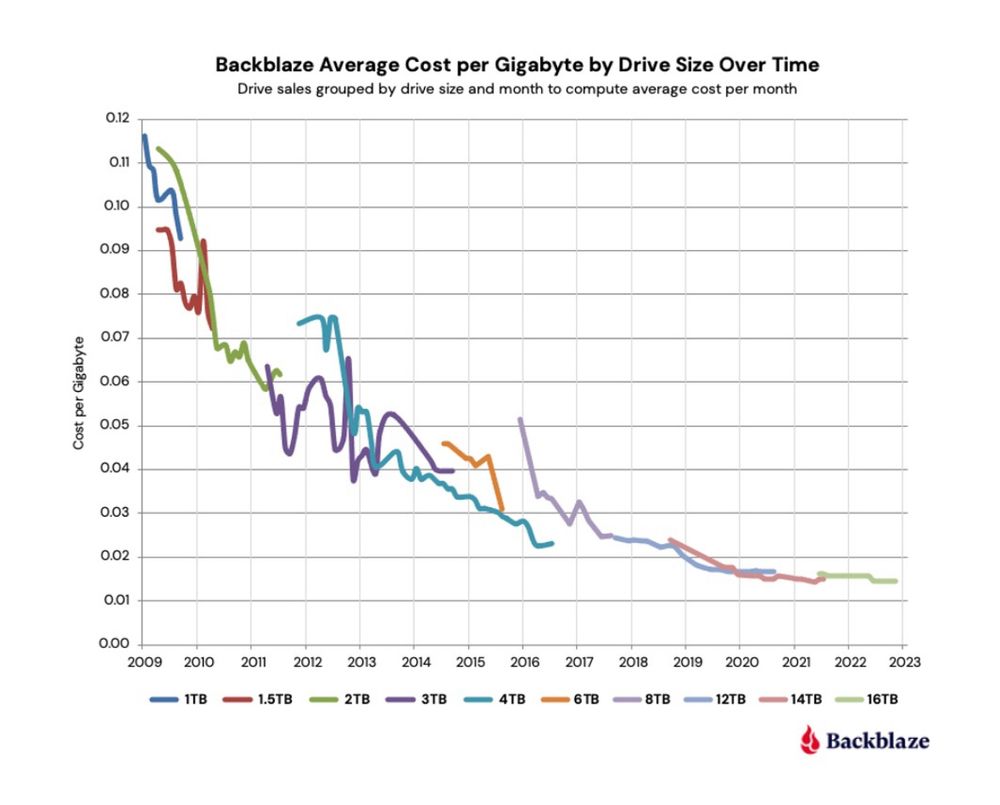

Backblaze has the most comprehensive spinning disk numbers I’ve seen. Haven’t found the same for SSD (SATA, NVMe, or otherwise).

www.backblaze.com/blog/hard-dr...

The Tet from Oblivion would like to have a word with you.

17.12.2024 08:09 — 👍 0 🔁 0 💬 0 📌 0

Companies worry too much about lookalike competitors… meanwhile their complements are out there constantly trying to commoditize them or push them into irrelevance.

14.12.2024 03:55 — 👍 0 🔁 0 💬 0 📌 0

Hah not sure I wanted to know that I’m at 16.5 with 6 more flights to go…

09.12.2024 18:00 — 👍 0 🔁 0 💬 0 📌 0

With the inflation that’s happened over the last ~5 years, the $2 bill feels more relevant than ever…though maybe still not that relevant.

09.12.2024 17:52 — 👍 1 🔁 1 💬 0 📌 0

Hah this feels like it’s part of the creative brief for some high fashion men’s workwear line.

07.12.2024 21:53 — 👍 0 🔁 0 💬 0 📌 0

Trolls get too much attention. I'm here for those being kind to random people on the internet.

27.04.2023 17:00 — 👍 456 🔁 67 💬 26 📌 9