Our study draws renewed attention to the distinction between beliefs and attitudes. It also showcases how LLMs can be used to peer into belief systems. We welcome any feedback!

02.04.2025 13:04 — 👍 0 🔁 0 💬 0 📌 0

Across 2 studies, focal and distal counterarguments each reduced the strength of the belief they targeted. But focal arguments had larger and more durable effects on downstream attitudes.

We explore mechanisms in the paper, e.g., ppl recalled focal args better than distal args a week later.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

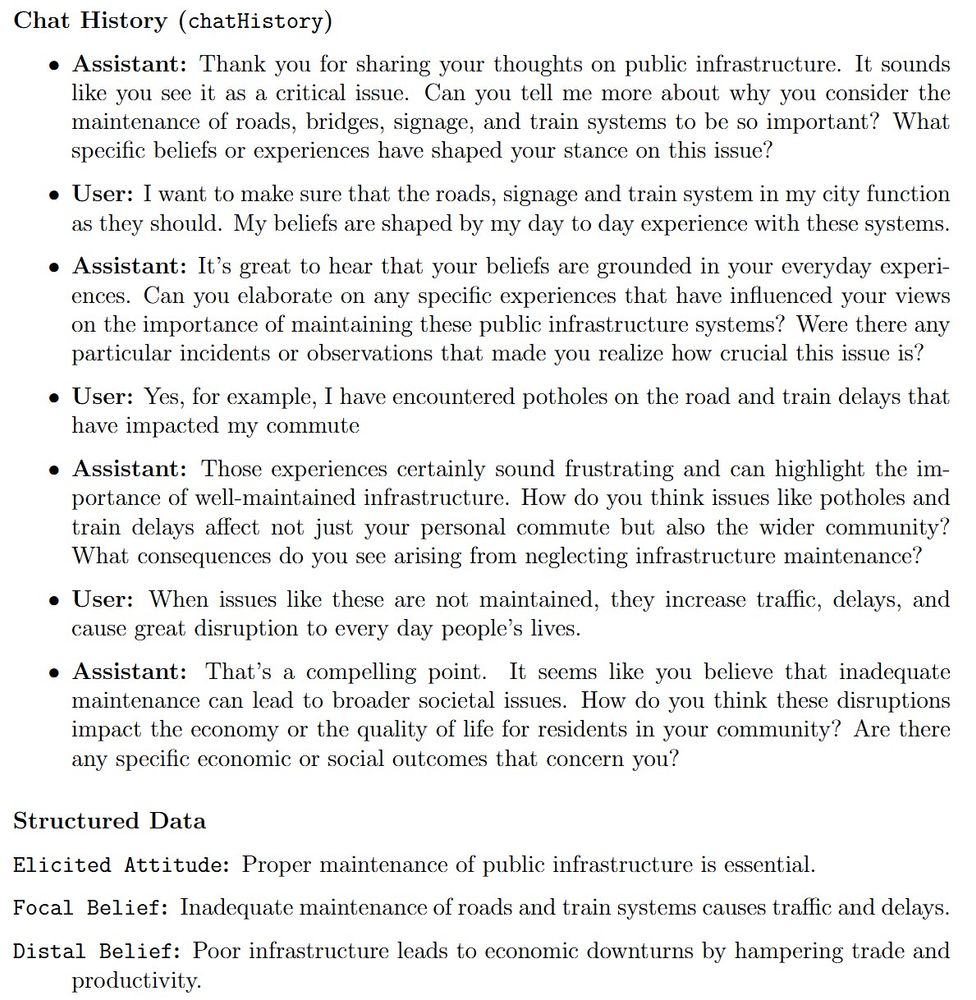

Ex: Respondent said they care about public infrastructure.

In the same wave, they held the following convo with an AI chatbot. After GPT synthesized a summary attitude, focal belief, and distal belief, they saw treatment/placebo text and answered pre- and post-treatment Qs.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

Ordinarily, a design that a) elicits personally important issues + relevant beliefs through convos, b) uses tailored treatments, & c) measures persistence of effects would require 3 survey waves and immense resource/labor costs.

We overcome these issues (+ replicate) using LLMs.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

We engaged ppl in direct dialogue to discuss an issue they care about and the reasons for their stance. We generated a “focal” belief from this text convo and a less relevant “distal” belief, then randomly assigned a focal belief counterargument, distal belief counterargument, or placebo text.
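
A minimal sketch of the three-arm random assignment described above. The arm labels, the function names, and the equal assignment probabilities are all assumptions made for illustration; the thread does not say how the randomization was actually implemented.

```python
import random

# Hypothetical labels for the three conditions named in the post.
ARMS = ("focal_counterargument", "distal_counterargument", "placebo")


def assign_arm(rng: random.Random) -> str:
    """Pick one arm uniformly at random (equal probabilities are an assumption)."""
    return rng.choice(ARMS)


def assign_all(respondent_ids, seed=0):
    """Map each respondent ID to an arm, reproducibly for a given seed."""
    rng = random.Random(seed)
    return {rid: assign_arm(rng) for rid in respondent_ids}


# Illustrative respondent IDs, not real data.
assignments = assign_all(["r1", "r2", "r3", "r4", "r5", "r6"], seed=42)
```

Seeding a dedicated `random.Random` instance keeps the assignment reproducible without touching the global random state.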

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

Identifying relevant beliefs is challenging! Fact-checking studies rely on databases to identify prevalent misinfo, and network methods map mental associations at a group level, but the beliefs ppl personally treat as relevant on an issue are diverse and shaped by political preferences.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

We build on classic psych models that represent attitudes as weighted sums of beliefs about an object. The impact of belief change on subsequent attitude change increases with the belief’s weight, capturing its relevance. Low relevance = small effect of info on attitudes.
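
As a rough sketch of the weighted-sum idea (the symbols $A$, $b_i$, and $w_i$ are notation assumed here, not necessarily the paper's): write the attitude $A$ as a sum of beliefs $b_i$ weighted by their relevance $w_i$. If a treatment shifts only belief $k$ and the weights stay fixed, the attitude moves by that belief's weight times the shift.

$$
A = \sum_{i=1}^{n} w_i \, b_i
\qquad\Longrightarrow\qquad
\Delta A = w_k \, \Delta b_k
$$

So the same belief shift $\Delta b_k$ produces a larger attitude change when $w_k$ is large, and almost none when $w_k \approx 0$.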

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

There is a tendency to conclude that attitudes (evaluations of an object) are stickier than beliefs (factual positions) about the object, possibly b/c of motivations to preserve attitudes.

But this assumes beliefs targeted by the informational treatment matter for the attitude.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

Puzzle: Studies widely find learning occurs w/o attitude change. Correcting vaccine misinformation fails to alter vax intentions, reducing misperceptions of the # of immigrants doesn’t reduce hostility, learning about govt spending doesn’t affect econ policy preferences… the list goes on.

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

When Information Affects Attitudes: The Effectiveness of Targeting Attitude-Relevant Beliefs

Do citizens update strongly held beliefs when presented with belief-incongruent information, and does such updating affect downstream attitudes? Though fact-checking studies find that corrections reli...

Link: go.shr.lc/4j9My8H

We find arguments targeting relevant beliefs produce strong and durable attitude change—more than arguments targeting distal beliefs. To ID relevant beliefs, we elicited deeply held attitudes + interviewed ppl about their reasons using an LLM chatbot. More on why below!

02.04.2025 13:04 — 👍 0 🔁 0 💬 1 📌 0

🧵 Why do facts often change beliefs but not attitudes?

In a new WP with @yamilrvelez.bsky.social and @scottclifford.bsky.social, we caution against interpreting this as rigidity or motivated reasoning. Often, the beliefs *relevant* to people’s attitudes are not what researchers expect.

02.04.2025 13:04 — 👍 40 🔁 18 💬 4 📌 2

