PhD Position for Deep Learning for Optogenetic Sensory Restoration

How to apply: Who to contact? ekfz@sinzlab.org. Email subject: Start with...

🚨 We’re hiring! 🚨

Together with Marcus Jeschke and Emilie Mace, we are looking for a PhD student to join us in developing AI tools for optogenetic sensory restoration.

Apply now: sinzlab.org/positions/20...

#PhDposition #AI #Neuroprosthetics #ML #NeuroAI #Hiring

12.05.2025 08:37 — 👍 4 🔁 2 💬 1 📌 0

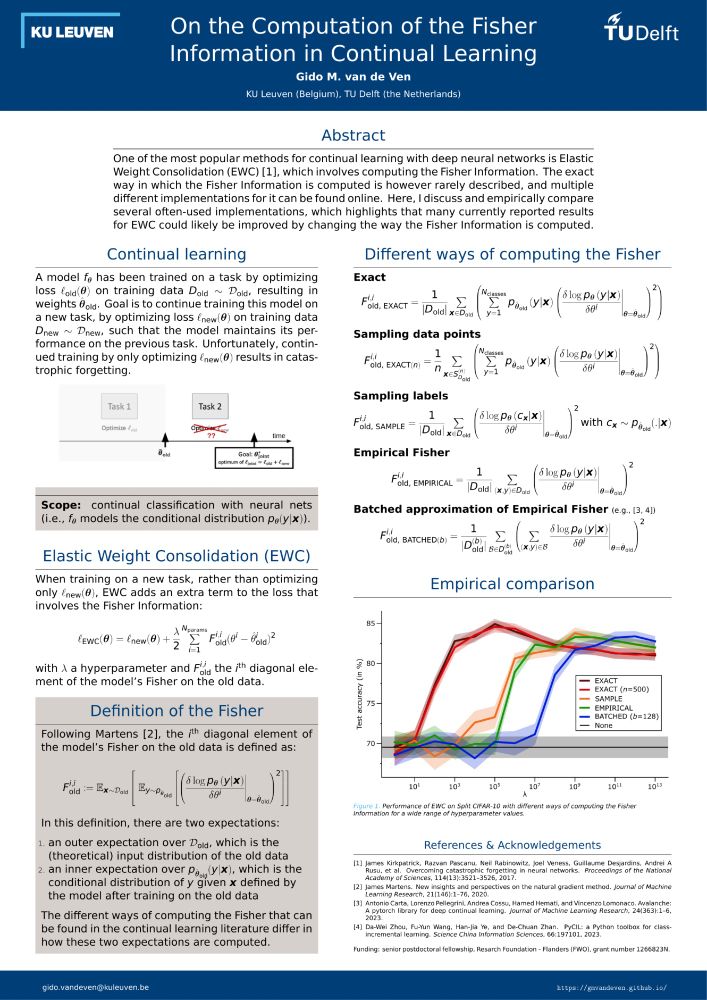

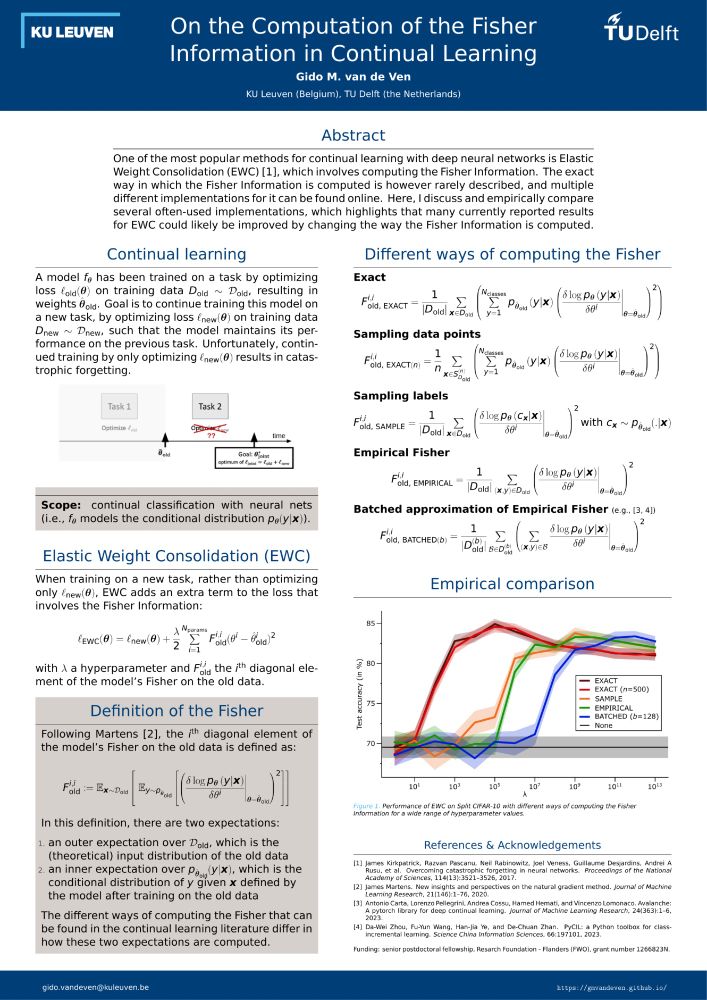

It has been claimed that how the Fisher is computed doesn’t really matter for performance. While this holds to some extent for Split MNIST, significant differences in performance already emerge with Split CIFAR-10.

At ICLR? Come and hear more at poster #483 on Saturday morning!

25.04.2025 17:13 — 👍 1 🔁 0 💬 0 📌 0

On the Computation of the Fisher Information in Continual Learning

One of the most popular methods for continual learning with deep neural networks is Elastic Weight Consolidation (EWC), which involves computing the Fisher Information. The exact way in which the Fish...

How do you compute the Fisher when using EWC?

Different ways can be found in the continual learning literature, with the most-used one making rather crude approximations.

This has bothered me (and others!) for a long time, and I finally take this on in an ICLR blogpost: arxiv.org/abs/2502.11756

25.04.2025 17:13 — 👍 4 🔁 1 💬 1 📌 0
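To make the question above concrete, here is a minimal sketch of two commonly discussed ways to estimate the diagonal of the Fisher Information, shown for a plain linear softmax classifier in NumPy. It is an illustration under stated assumptions, not the method from the blog post: the model, the function names (`exact_fisher_diag`, `empirical_fisher_diag`) and the random data are all hypothetical. The "exact" variant takes the expectation over the model's own predictive distribution, while the "empirical" variant plugs in the observed labels instead.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def grad_log_lik(X, P, labels):
    """Per-example gradient of log p(label | x) w.r.t. W, shape (n, d, k).

    Assumes a linear softmax model with logits X @ W, for which the
    gradient is the outer product of x and (onehot(label) - p).
    """
    onehot = np.eye(P.shape[1])[labels]
    return X[:, :, None] * (onehot - P)[:, None, :]


def exact_fisher_diag(W, X):
    """Diagonal Fisher with the label expectation taken under the model."""
    P = softmax(X @ W)
    n, k = P.shape
    fisher = np.zeros_like(W)
    for c in range(k):
        # Squared gradient for class c, weighted by the model's p(c | x).
        g = grad_log_lik(X, P, np.full(n, c))
        fisher += (P[:, c][:, None, None] * g**2).sum(axis=0)
    return fisher / n


def empirical_fisher_diag(W, X, y):
    """Diagonal 'empirical' Fisher: squared gradients at the observed labels."""
    P = softmax(X @ W)
    g = grad_log_lik(X, P, y)
    return (g**2).mean(axis=0)


# Toy data (random, for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))       # 200 inputs with 5 features
W = rng.normal(size=(5, 3))         # weights of a 3-class linear model
y = rng.integers(0, 3, size=200)    # observed labels

F_exact = exact_fisher_diag(W, X)
F_emp = empirical_fisher_diag(W, X, y)
print("largest absolute difference:", np.abs(F_exact - F_emp).max())
```

In an EWC-style penalty, each weight's squared change is scaled by its Fisher entry, so the two estimates can weight parameters differently. For deep networks the exact variant is usually approximated further, for example by sampling labels from the model rather than summing over all classes.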

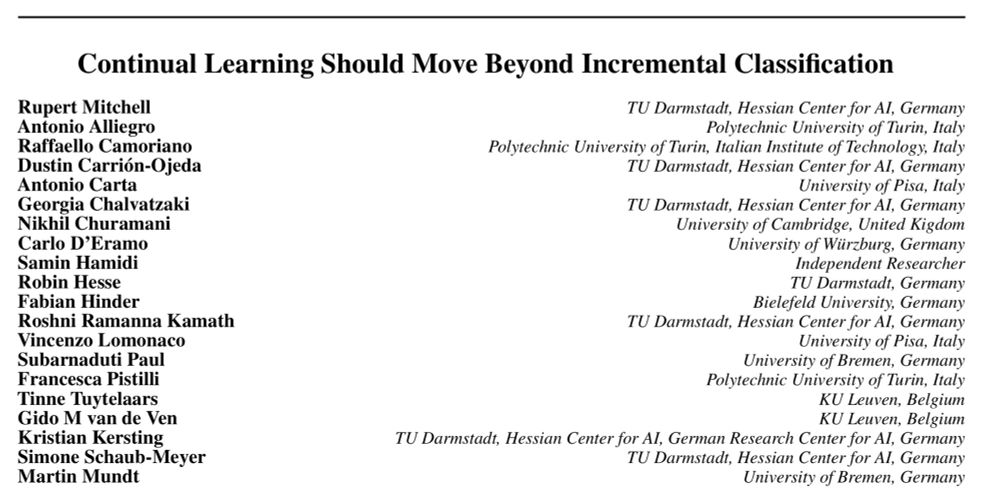

Why has continual ML not had its breakthrough yet?

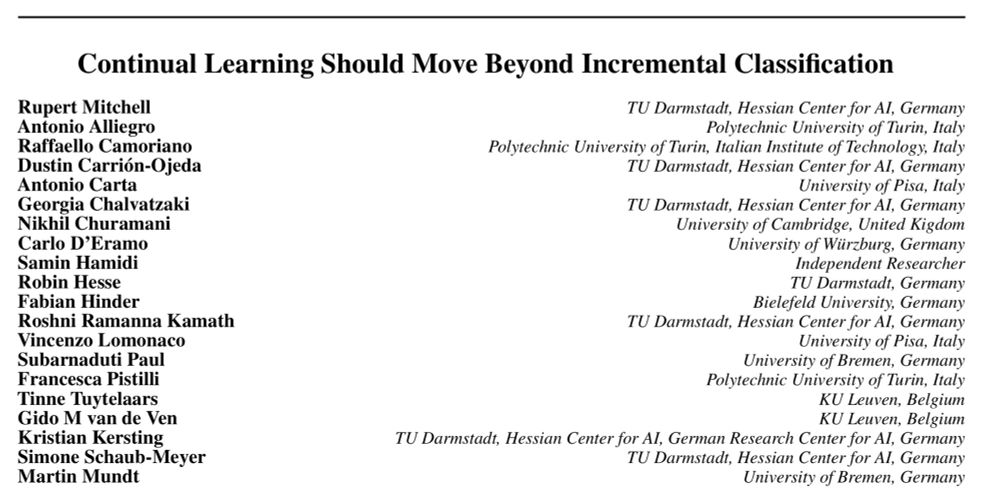

In our new collaborative paper w/ many amazing authors, we argue that “Continual Learning Should Move Beyond Incremental Classification”!

We highlight 5 examples to show where CL algos can fail & pinpoint 3 key challenges

arxiv.org/abs/2502.11927

18.02.2025 13:33 — 👍 10 🔁 3 💬 0 📌 0

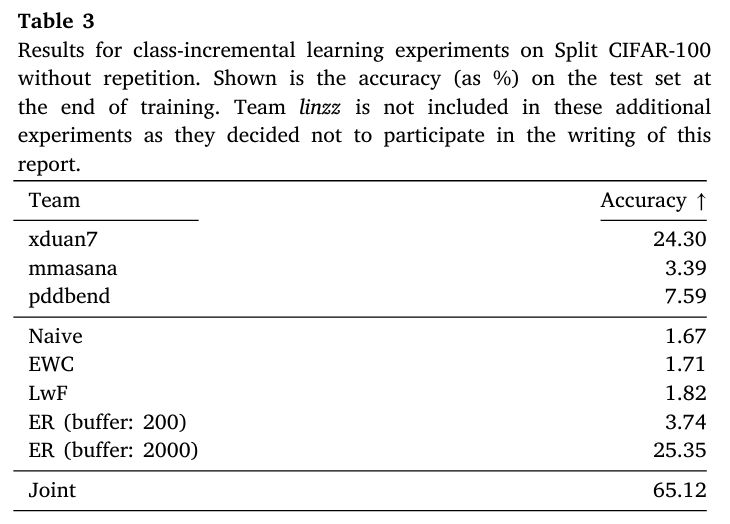

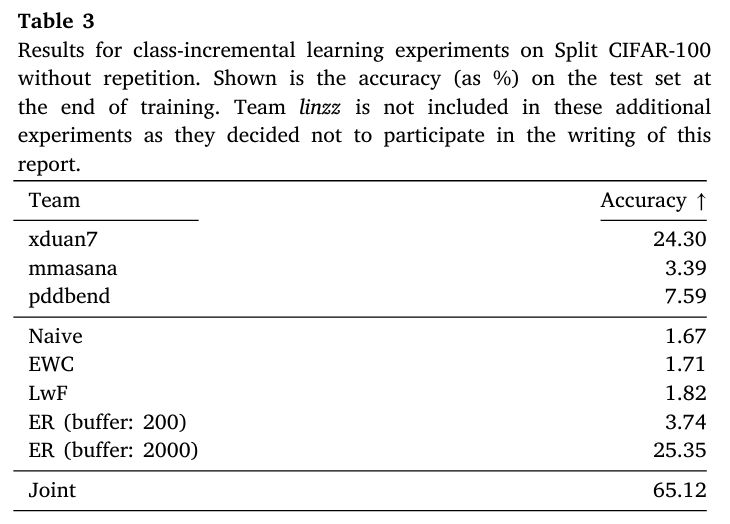

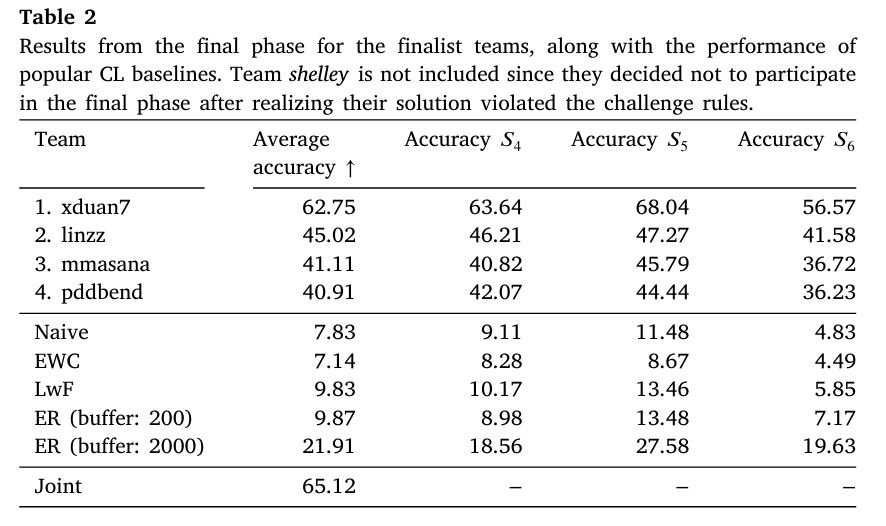

Results without repetition

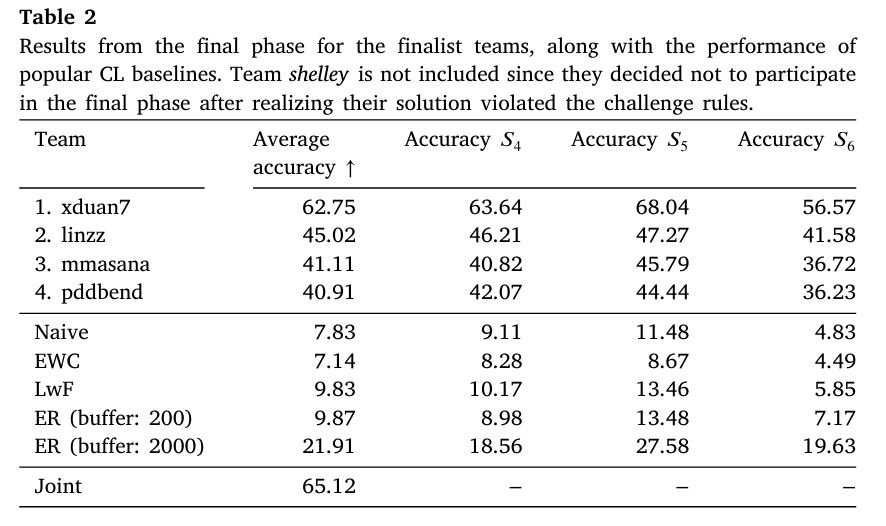

Results with repetition

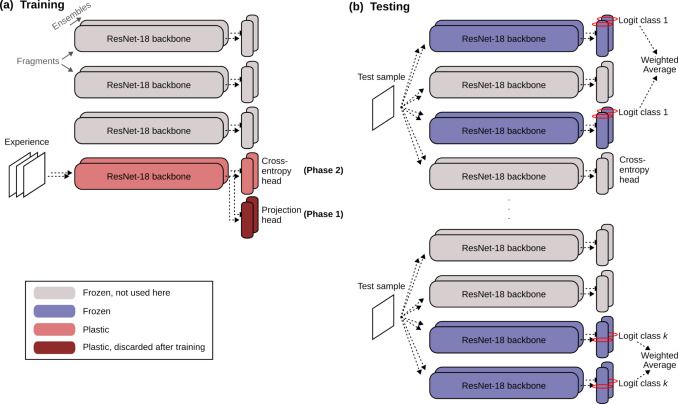

These winning strategies clearly outperform experience replay on data streams *with* repetition, but on a “standard” task-based continual learning stream *without* repetition, experience replay performs better.

02.12.2024 13:02 — 👍 2 🔁 0 💬 1 📌 0

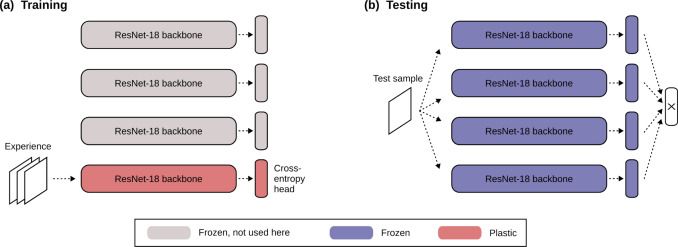

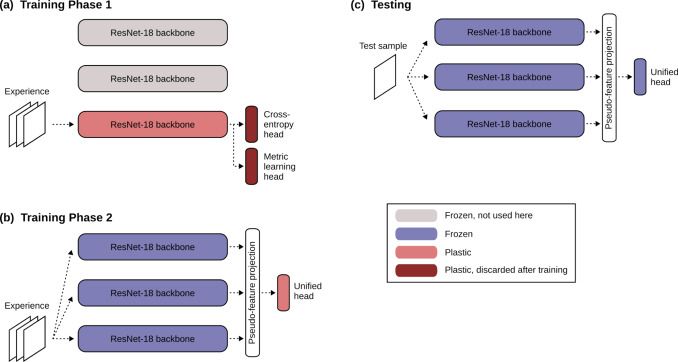

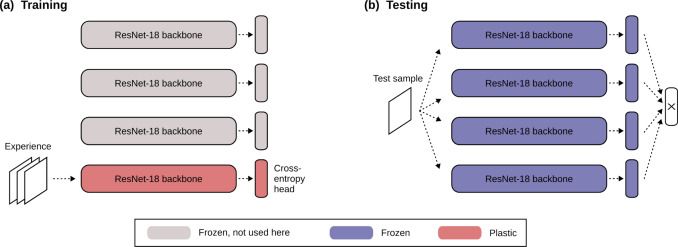

Schematic of DWGRNet

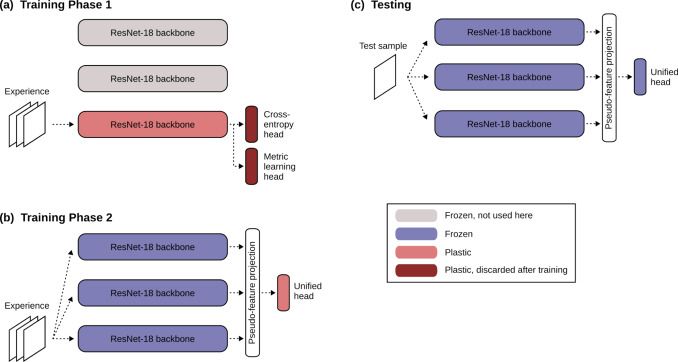

Schematic of Horde

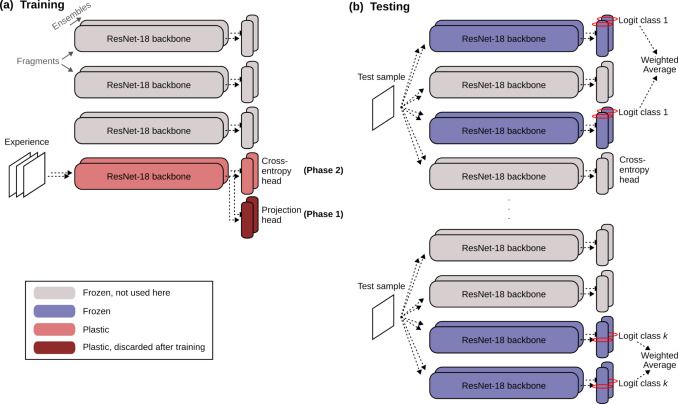

Schematic of HAT-CIR

A striking outcome of the challenge was that all winning teams used some kind of ensemble-based approach, in which separate sub-networks per task/experience are learned and later combined for making predictions.

02.12.2024 13:02 — 👍 1 🔁 0 💬 1 📌 0

Continual learning in the presence of repetition

Continual learning (CL) provides a framework for training models in ever-evolving environments. Although re-occurrence of previously seen objects or t…

Does continual learning change when there is repetition in the data stream?

The report of the #CVPR2023 CLVision challenge on **Continual learning in the presence of repetition** is out in Neural Networks. #OpenAccess

www.sciencedirect.com/science/arti...

02.12.2024 13:02 — 👍 6 🔁 1 💬 1 📌 0

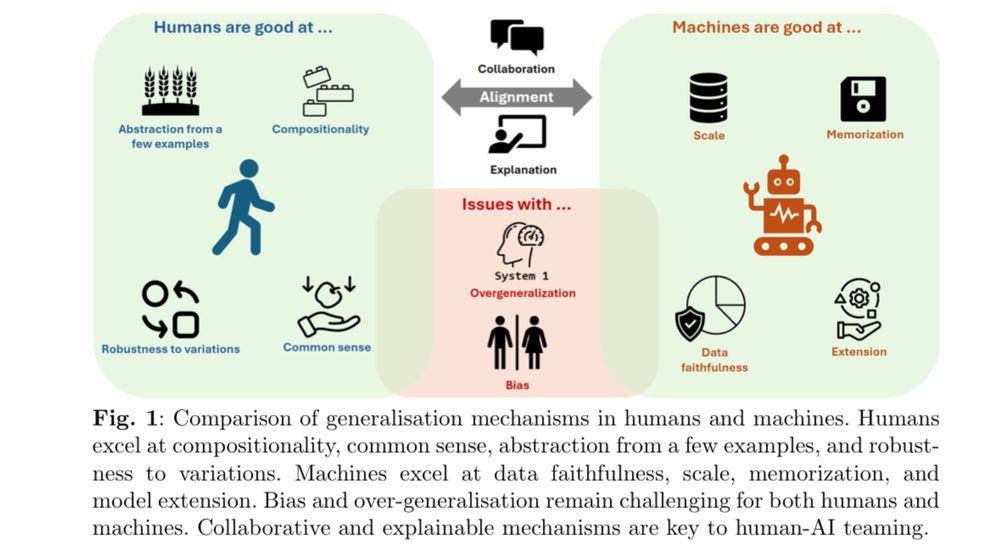

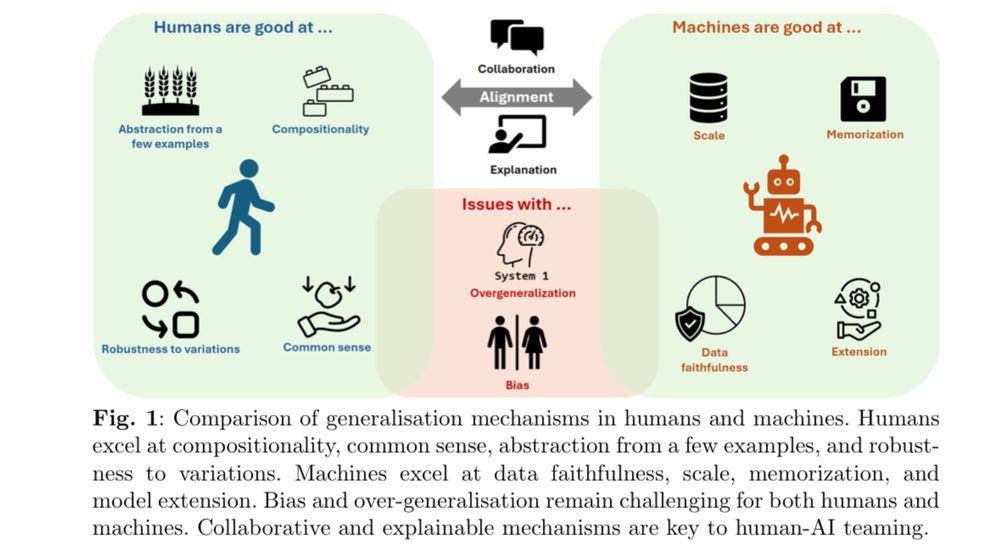

Aligning Generalisation Between Humans and Machines

Recent advances in AI -- including generative approaches -- have resulted in technology that can support humans in scientific discovery and decision support but may also disrupt democracies and target...

This is one of the most important arguments in the AI discourse, I think: With a large group of experts we explain why generalization of humans and machines works very differently. This is a fundamental point that has crucial implications for language models, as well: arxiv.org/abs/2411.15626

27.11.2024 12:46 — 👍 9 🔁 2 💬 2 📌 0

Is generalisation a process, an operation, or a product? 🤨

Read about the different ways generalisation is defined, parallels between humans & machines, methods & evaluation in our new paper: arxiv.org/abs/2411.15626

co-authored with many smart minds as a product of Dagstuhl 🙏🎉

27.11.2024 13:30 — 👍 9 🔁 2 💬 0 📌 0

Thanks Simon! I’d be keen to be added as well ☺️

13.11.2024 20:11 — 👍 1 🔁 0 💬 0 📌 0

Professor at ISTA (Institute of Science and Technology Austria), heading the Machine Learning and Computer Vision group. We work on Trustworthy ML (robustness, fairness, privacy) and transfer learning (continual, meta, lifelong). 🔗 https://cvml.ist.ac.at

Senior Research Scientist.

Prev Postdoc @ Mila, PhD @ Polytechnique Paris.

Interested in large-scale continual learning, representation learning, SSL and LLMs.

Postdoc at Visual Inference Lab, TU Darmstadt

predoc @NERF (KU Leuven, VIB, imec)

comp neuro and machine learning

goncalveslab.sites.vib.be/en

Manchester Centre for AI FUNdamentals | UoM | Alumn UCL, DeepMind, U Alberta, PUCP | Deep Thinker | Posts/reposts might be non-deep | Carpe espresso ☕

in love with memory and sleep research, puts rats, mice and humans into complex, multi-trial tasks

DPhil candidate in the Dupret Lab at the MRC BNDU - University of Oxford

PhD candidate @ColumbiaNeuro @cu_neurotheory with Ashok Litwin-Kumar modeling learning in drosophila. syncrostone.github.io

Formerly with @johndmurray @YaleCompsci and @GJEHennequin @Cambridge_Uni. Migrating from @syncrostone on the other place.

Systems neuroscientist. Assistant Professor at Cornell. Studying the computational and circuit mechanisms of learning, memory and natural behaviors in rodents

Postdoctoral research fellow @ Mount Sinai, department of AI and human health. Computational cognitive neuroscience, NeuroAI, neural representations

The Society for Philosophy and Neuroscience (SPAN) is a new philosophical and scientific society dedicated to providing a forum for the collaboration between philosophers and neuroscientists. philandneuro.com | thefeedback.blog

Neural correlates of learning and memory.

Postdoc @ Moser lab, NTNU

PhD @ McNaughton lab, UCIrvine

(Prev: IISc Bangalore, BITS Pilani)

NeuroAI, Deep Learning for neuroscience, visual system in mice and monkeys, computational lab based in Göttingen (Germany), https://sinzlab.org

Neuroscience, Machine Learning, Computer Vision • Scientist at @unigoettingen.bsky.social & MPI-DS • https://eckerlab.org • Co-founder of https://maddox.ai • Dad of three • All things outdoor

Computational Cognitive Neuroscientist at INS Marseille. I'm interested in speech, information theory and network modelling.

Computational neuroscientist at the FMI.

www.zenkelab.org

Postdoc in the Hayden lab at Baylor College of Medicine studying neural computations of natural language & communication in humans. Sister to someone with autism. she/her. melissafranch.com