Our analysis shows that it is natural to take the polar decomposition as the defining viewpoint. This gives rise to nuclear norm scaling: the update vanishes automatically as the gradient becomes small! In contrast, Muon has to manually tune the scaling factor of the orthogonalized matrix to achieve this.

29.05.2025 17:13
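A minimal NumPy sketch of the contrast described in the post, under our own reading of it: we assume the polar-decomposition update is −lr·‖G‖_*·UVᵀ, where G = UΣVᵀ is the (momentum) gradient matrix, UVᵀ is its orthogonal polar factor, and ‖G‖_* is its nuclear norm (sum of singular values). A Muon-style step is modeled as −lr·UVᵀ with a fixed lr. The helper names are hypothetical, and the exact SVD stands in for the Newton-Schulz iteration Muon uses in practice; this is an illustration, not the authors' implementation.

```python
import numpy as np

def polar_factor(G):
    """Return the orthogonal polar factor U V^T of G and its nuclear norm."""
    # Thin SVD: G = U diag(s) Vt; the nuclear norm is the sum of singular values
    U, s, Vt = np.linalg.svd(G, full_matrices=False)
    return U @ Vt, s.sum()

def muon_style_update(G, lr):
    # Orthogonalized direction only: its size does not depend on how large G is
    Q, _ = polar_factor(G)
    return -lr * Q

def nuclear_scaled_update(G, lr):
    # Polar factor scaled by ||G||_*, which shrinks to zero along with G
    Q, nuc = polar_factor(G)
    return -lr * nuc * Q

rng = np.random.default_rng(0)
G = rng.standard_normal((4, 3))
for scale in [1.0, 1e-2, 1e-4]:
    g = scale * G
    print(scale,
          np.linalg.norm(muon_style_update(g, 0.1)),      # Frobenius norm
          np.linalg.norm(nuclear_scaled_update(g, 0.1)))
```

As `scale` shrinks, the Muon-style norm stays at lr·sqrt(rank G), while the nuclear-scaled norm shrinks in proportion to `scale`, which is the "vanishes automatically" behavior the post refers to.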

How to Prevent a Tragedy of the Commons for AI Research?

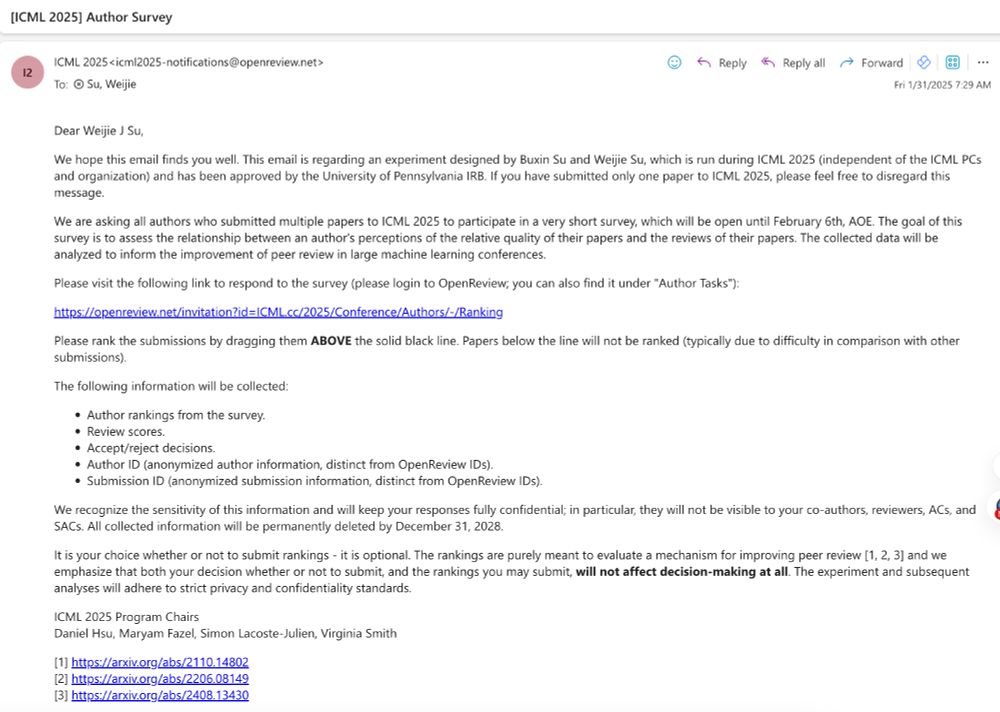

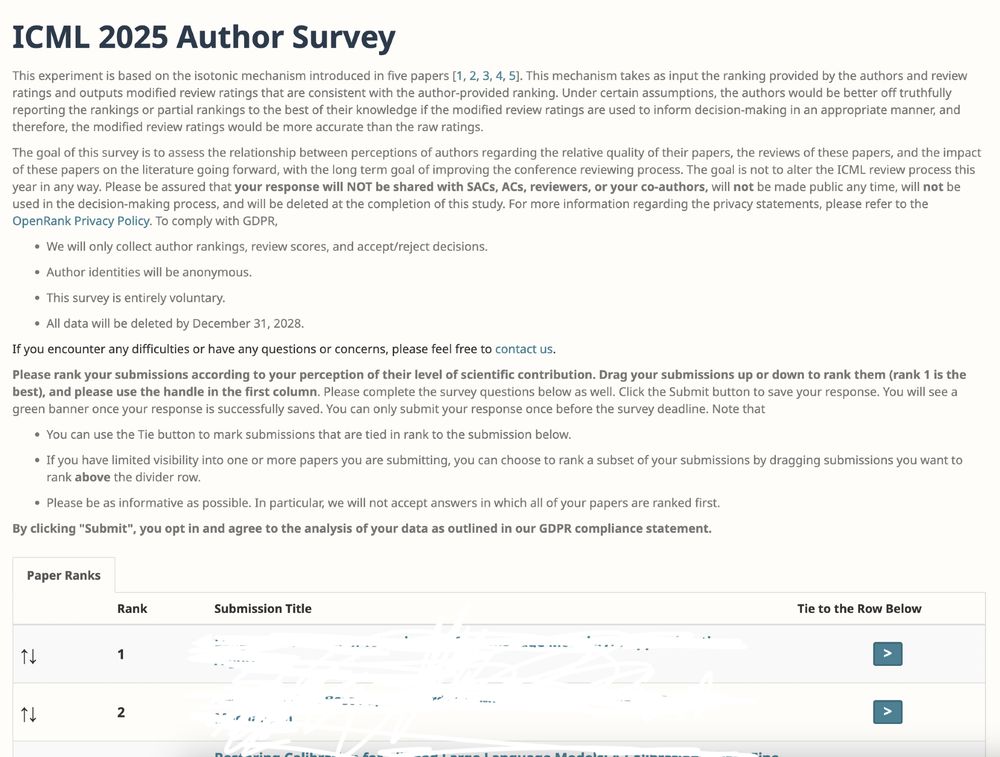

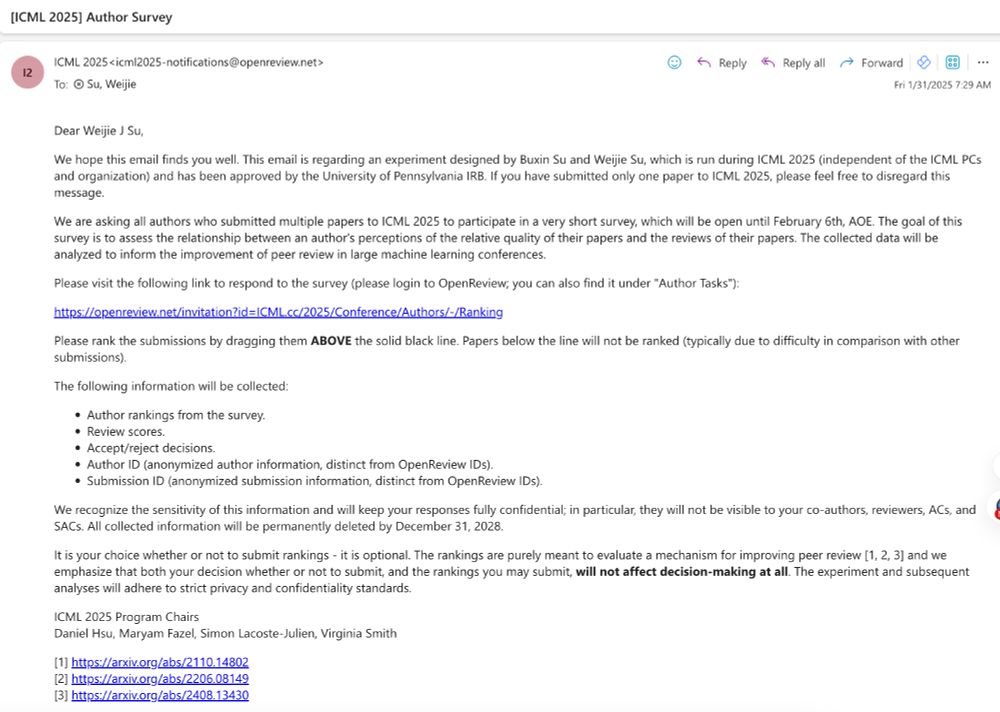

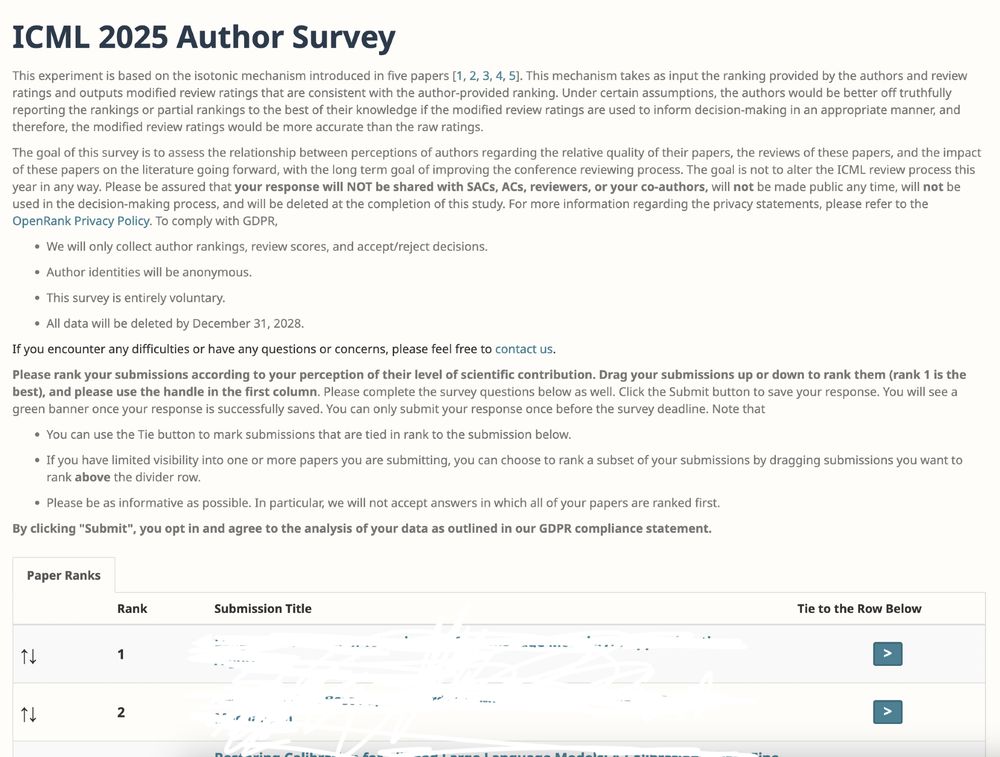

The ranking method was tested at ICML in 2023, 2024, and 2025. I hope we'll finally use it to improve ML/AI review processes soon. Here's an article about the method, from its conception to experimentation:

www.weijie-su.com/openrank/

27.05.2025 17:08

Statistical Foundations of Large Language Models

We're hiring a postdoc focused on the statistical foundations of large language models, starting this fall. Join our team exploring the theoretical and statistical underpinnings of LLMs. If interested, check out our work at weijie-su.com/llm/ and drop me an email. #AIResearch #PostdocPosition

13.05.2025 00:51

The #ICML2025 @icmlconf.bsky.social deadline has just passed!

Peer review is vital to advancing AI research. We've been conducting a survey experiment at ICML since 2023. Pls take a few minutes to participate; the survey was sent via email with the subject "[ICML 2025] Author Survey". Thx!

31.01.2025 16:04

Stat


Stat is running a special issue on large language models (LLMs) and statistics (onlinelibrary.wiley.com/journal/2049...). We're seeking submissions examining LLMs' impact on statistical methods, practice, education, and more @amstatnews.bsky.social

19.12.2024 10:25

Heading to Vancouver tomorrow for #NeurIPS2024, Dec 10-14! Excited to reconnect with colleagues and enjoy Vancouver's seafood! 🦐

09.12.2024 19:46

Add me plz. Thx!

28.11.2024 01:29

How Is AI Changing the Science of Prediction?

Podcast Episode · The Joy of Why · 11/07/2024 · 37m

Machine learning has led to predictive algorithms so obscure that they resist analysis. Where does the field of traditional statistics fit into all of this? Emmanuel Candès asks the question, “Can I trust this?” Tune in to this week’s episode of “The Joy of Why” listen.quantamagazine.org/jow-321-s

07.11.2024 16:49

Knew nothing about bluesky until today. Immediately stop using X or gradually migrate to bluesky? Is there an optimal switching strategy?

28.11.2024 01:22

We make free, open-source software for data scientists like the RStudio IDE.

We're formerly known as RStudio. You can always download our open-source IDE here. https://posit.co/download/rstudio-desktop/

he/him - writing statistical software at Posit, PBC (née RStudio)🥑

simonpcouch.com, @simonpcouch elsewhere

A research center at Penn Engineering, working to foster research and innovation in interconnected social, economic and technological systems.

A business analyst at heart who enjoys delving into AI, ML, data engineering, data science, data analytics, and modeling. My views are my own.

You can also find me at threads: @sung.kim.mw

R, data, 🐕, 🍸, 🌈. He/him.

Postdoc at Simons at UC Berkeley; alumnus of Johns Hopkins & Peking University; deep learning theory.

https://uuujf.github.io

full-time ML theory nerd, part-time AI-non enthusiast

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Senior Lecturer #USydCompSci at the University of Sydney. Postdocs IBM Research and Stanford; PhD at Columbia. Converts ☕ into puns: sometimes theorems. He/him.

Lecturer in Maths & Stats at Bristol. Interested in probabilistic + numerical computation, statistical modelling + inference. (he / him).

Homepage: https://sites.google.com/view/sp-monte-carlo

Seminar: https://sites.google.com/view/monte-carlo-semina

Recently a principal scientist at Google DeepMind. Joining Anthropic. Most (in)famous for inventing diffusion models. AI + physics + neuroscience + dynamical systems.

Professor @UCLA, Research Scientist @ByteDance | Recent work: SPIN, SPPO, DPLM 1/2, GPM, MARS | Opinions are my own

Professor at University of Toronto. Research on machine learning, optimization, and statistics.

I work on AI at OpenAI.

Former VP AI and Distinguished Scientist at Microsoft.

Director, Princeton Language and Intelligence. Professor of CS.

Professor, Stanford University, Statistics and Mathematics. Opinions are my own.

Blog: https://argmin.substack.com/

Webpage: https://people.eecs.berkeley.edu/~brecht/

web: http://maxim.ece.illinois.edu

substack: https://realizable.substack.com

Research Director, Founding Faculty, Canada CIFAR AI Chair @VectorInst.

Full Prof @UofT - Statistics and Computer Sci. (x-appt) danroy.org

I study assumption-free prediction and decision making under uncertainty, with inference emerging from optimality.