An idea to post an expanded version on biorxiv.org?

10.09.2025 12:52 — 👍 0 🔁 0 💬 0 📌 0

Steven Scholte

@neurosteven.bsky.social

Happy dad | Fascinated by perception | Anti-realist | Chair CCN2025 | #UvA #MidLevelVision #CognitiveAI

That is: deal with the statistical structure of the world on the basis of learning experience (sampling + evolution). To jump to human behaviour would be, I think, mixing up two, or potentially three, levels of observation.

10.09.2025 12:50 — 👍 1 🔁 0 💬 0 📌 0DCNNs here confirm our ideas of vision at a neuroscience level and potentially expand these with a broader view of what these filters are and how they can emerge. Beyond that, it suggests a theory of what vision does at this stage.

10.09.2025 12:50 — 👍 1 🔁 0 💬 1 📌 0

I guess the theory that you would abstract from results such as these is that feedforward vision is dominated by features that naturally emerge through hierarchical processing, and that these features can emerge in a convolutional tree.

10.09.2025 12:50 — 👍 0 🔁 0 💬 1 📌 0

A substantial amount of the neural activity that can be explained relates to these processes, and this part is (my 5 cents, based on these data) the bulk of what is explained by DCNNs. But it is a necessary part of processing for vision outside the lab.

09.09.2025 08:02 — 👍 0 🔁 0 💬 1 📌 0

For the model to classify objects, it does a lot of 'stuff'. For instance, without a background a shallow network suffices (Seijdel et al., 2020, scholar.google.com/citations?vi...). Natural images force these networks to do a lot more than what we would label object recognition.

09.09.2025 08:02 — 👍 0 🔁 0 💬 1 📌 0

It has been surprising to me over the last 7 years how easy it is to find signatures of texture processing (Loke et al., 2024) and scene segmentation (Seijdel et al., 2020, 2021) in DCNNs, and how little of it seems to relate to subsequent steps. We have really been trying.

08.09.2025 20:13 — 👍 1 🔁 0 💬 0 📌 0

DNNs still capture an impressive amount of variance. The most parsimonious account, I think, is that DNNs model the initial encoding well but miss, or perform differently, the subsequent steps of object recognition.

08.09.2025 20:13 — 👍 1 🔁 1 💬 2 📌 0

Great work from my PhD student Jessica Loke, together with @lynnkasorensen.bsky.social, @irisgroen.bsky.social and Nathalie Cappaert.

08.09.2025 18:32 — 👍 4 🔁 0 💬 0 📌 0

Take-home: High DNN-brain correlations may reflect shared texture processing rather than object understanding. This challenges how we interpret brain-AI alignment studies and suggests new directions for computational models. www.biorxiv.org/content/10.1...

08.09.2025 18:32 — 👍 11 🔁 1 💬 1 📌 0

Trajectories make it visual:

🔵 Texture path → high alignment, low object info (upper-left quadrant)

🔴/🟢 Natural & object-only paths → more object info but no extra alignment.

This explains why better object recognition ≠ better brain prediction.

Cross-prediction: texture features predict brain responses to natural scenes almost as well as features from the originals themselves.

Local image statistics = the common representational currency between artificial and biological vision.

The key dissociation:

• EEG encodes object category across all conditions.

• But object info does not drive DNN–brain alignment.

• Peak alignment occurs when object info is minimal (texture condition).

Three versions of each image:

🔴Natural scenes

🔵Texture-synthesized (global summaries of local stats only; no recognizable objects)

🟢Object-only (objects without backgrounds)

Counterintuitive result: strongest DNN–brain alignment for texture-only images!

🧠 New preprint: Why do deep neural networks predict brain responses so well?

We find a striking dissociation: it’s not shared object recognition. Alignment is driven by sensitivity to texture-like local statistics.

📊 Study: n=57, 624k trials, 5 models doi.org/10.1101/2025...

Our response is due in 3 weeks. Pondering.

07.09.2025 17:18 — 👍 3 🔁 0 💬 0 📌 0

Datasets like NSD & THINGS offer rich stimuli but often test a single task.

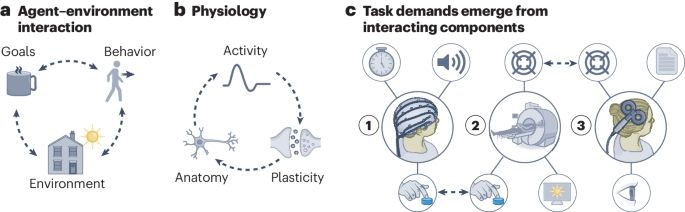

After great conversations at #CCN2025 on multi-task studies & generalization in brains & models, I thought I would repost our perspective for those interested in this topic. We need multiple tasks!👉 doi.org/10.1038/s415...

Thank you!!!!

17.08.2025 15:06 — 👍 9 🔁 0 💬 0 📌 0

Lynn Flannery, Kerry Miller, Jeff Wilson, Kevin Koenrades, Brenda Klappe and our volunteers: Nina Fitzmaurice, Ole Jürgensen, Denise Kittelmann, Elif Ayten

Maithe van Noort, Mohanna Hoveyda, Caroline Harbison, Yamil Vidal, Sotirios Panagiotou, Sofie Wahlberg, Danting Meng, Mobina Tousian.

@claires012345.bsky.social @mheilbron.bsky.social Angela Radulescu @neuroprinciplist.bsky.social @dotadotadota.bsky.social Tyler Bonnen Sneha Aenugu @hannesmehrer.bsky.social @debyee.bsky.social Julian Kosciessa @anne-urai.bsky.social @mdhk.net @tdado.bsky.social Shauney Wilson, Shawna Lampkin,

17.08.2025 15:06 — 👍 13 🔁 0 💬 1 📌 0

But could not have run without @lauragwilliams.bsky.social @jaspervdb.bsky.social @achterbrain.bsky.social @niklasmuller.bsky.social @eringrant.me @pebenjamters.bsky.social @shahabbakht.bsky.social @judithfan.bsky.social @jfeather.bsky.social Jiahui Guo @tknapen.bsky.social @lampinen.bsky.social

17.08.2025 15:06 — 👍 11 🔁 0 💬 1 📌 0

a reception in Hotel Arena, an epic party in Ijver (with about 40% of attendees on the dance floor), and above all 929 community members who brought a lot of energy and hopefully had, on multiple dimensions, a fantastic conference.

So proud to be chair, together with @irisgroen.bsky.social.

#CCN2025 is over. Over 5 days there were 6 fantastic keynotes, 550 posters, 3 community events, 3 keynote & tutorials, 3 generative adversarial collaborations, 8 satellite events, 1 community lunch meeting, 1 cross-conference hackathon, 1 competition, coffee all day, stroopwafels on day 1,

17.08.2025 15:06 — 👍 62 🔁 7 💬 1 📌 2

That's a wrap for CCN2025 -- and so planning for CCN2026 in New York is starting today! Safe travels to all participants and remember to fill out the feedback survey sent via email!

15.08.2025 16:29 — 👍 59 🔁 7 💬 1 📌 0

The moment we've all been waiting for: #CCN2025 is HERE! Today we start with satellite events, and tomorrow the main conference begins in Amsterdam! We'll be sharing daily updates about each day's program, so follow the CCN account to stay in the loop. Can't wait to see everyone!

11.08.2025 08:29 — 👍 35 🔁 4 💬 1 📌 0

After preparing for a full year together with @neurosteven.bsky.social and all the other amazing organizers of @cogcompneuro.bsky.social, #CCN2025 is finally here!

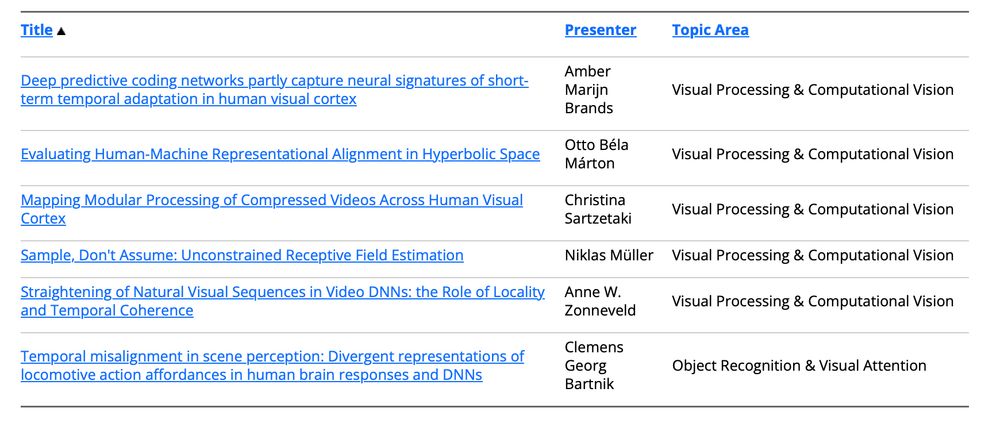

While I'm proud of the entire program we put together, I'd now like to highlight my own lab's contributions, 6 posters total:

CCN2025 kicks off this Tuesday in Amsterdam (with satellite events Monday)! Fun fact: We might be getting the best conference weather Amsterdam has ever seen ☀️

Can't wait to meet everyone and dive into the exciting program ahead. See you there!

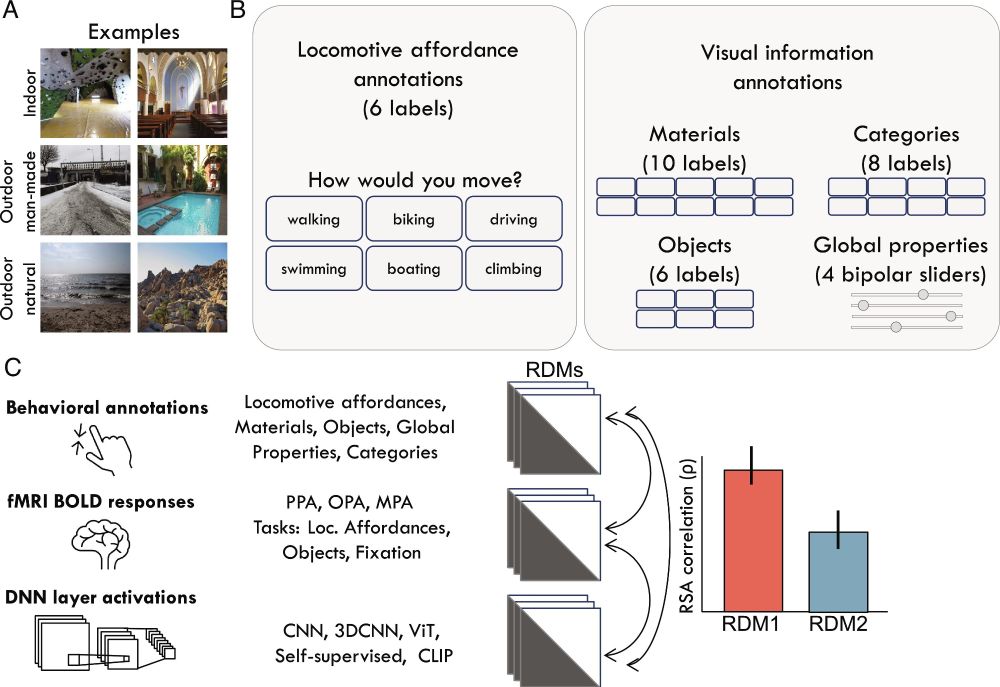

In these tumultuous times, still happy to report a scientific achievement: our preprint on affordance perception was just published in PNAS!

www.pnas.org/doi/10.1073/...

Using behavior, fMRI and deep network analyses, we report two key findings. To recap (the preprint 🧵 was lost on the other platform):

There are 2 PhD positions in my lab in Amsterdam (collaboration with Sander Bohte, @tessamdekker.bsky.social and Ingmar Visser) on NeuroAI of developmental vision.

academicpositions.nl/ad/centrum-w...

Phew decisions are out..!! Congratulations to the authors of the 26 papers selected for the first edition of the CCN Proceedings 📜 @eringrant.bsky.social @neurosteven.bsky.social @cogcompneuro.bsky.social 1/2

16.05.2025 13:33 — 👍 9 🔁 3 💬 2 📌 0