Microsoft Research Lab - New York City - Microsoft Research

Apply for a research position at Microsoft Research New York & collaborate with academia to advance economics research, prediction markets & ML.

🚨Microsoft Research NYC is hiring🚨

We're hiring postdocs and senior researchers in AI/ML broadly, and in specific areas like test-time scaling and science of DL. Postdoc applications due Oct 22, 2025. Senior researcher applications considered on a rolling basis.

Links to apply: aka.ms/msrnyc-jobs

18.09.2025 14:37 — 👍 18 🔁 7 💬 0 📌 1

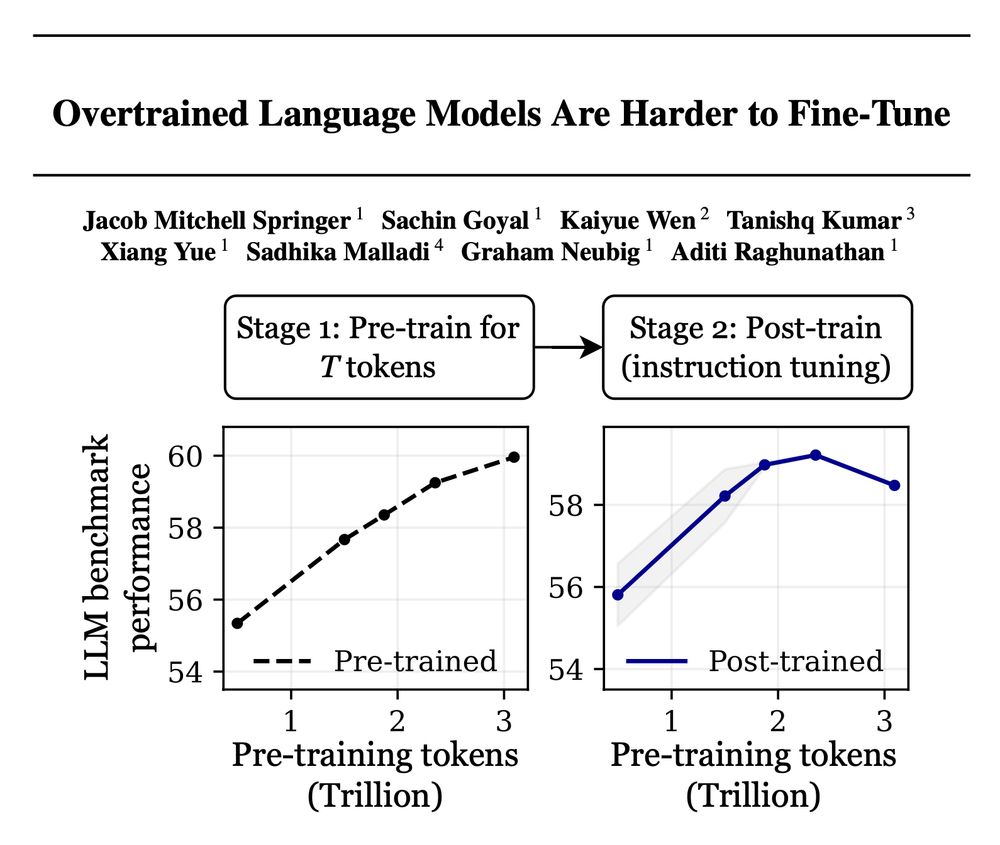

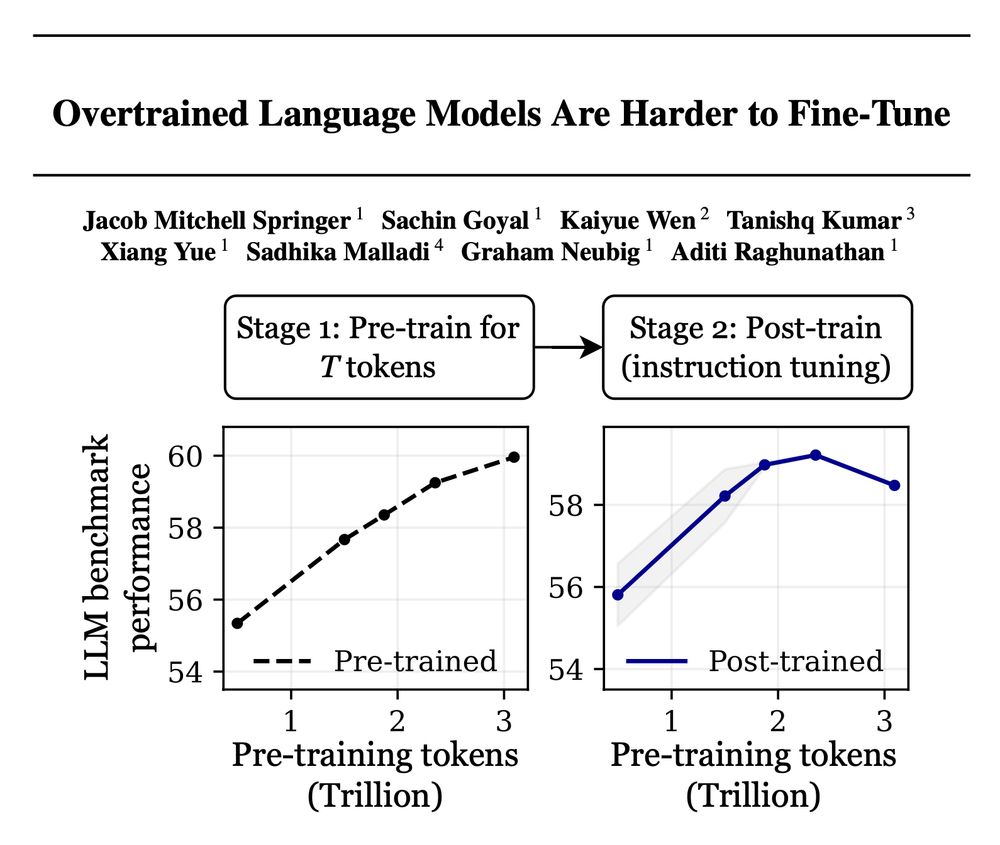

Training with more data = better LLMs, right? 🚨

False! Scaling language models by adding more pre-training data can decrease your performance after post-training!

Introducing "catastrophic overtraining." 🥁🧵👇

arxiv.org/abs/2503.19206

1/10

26.03.2025 18:35 — 👍 33 🔁 14 💬 1 📌 1

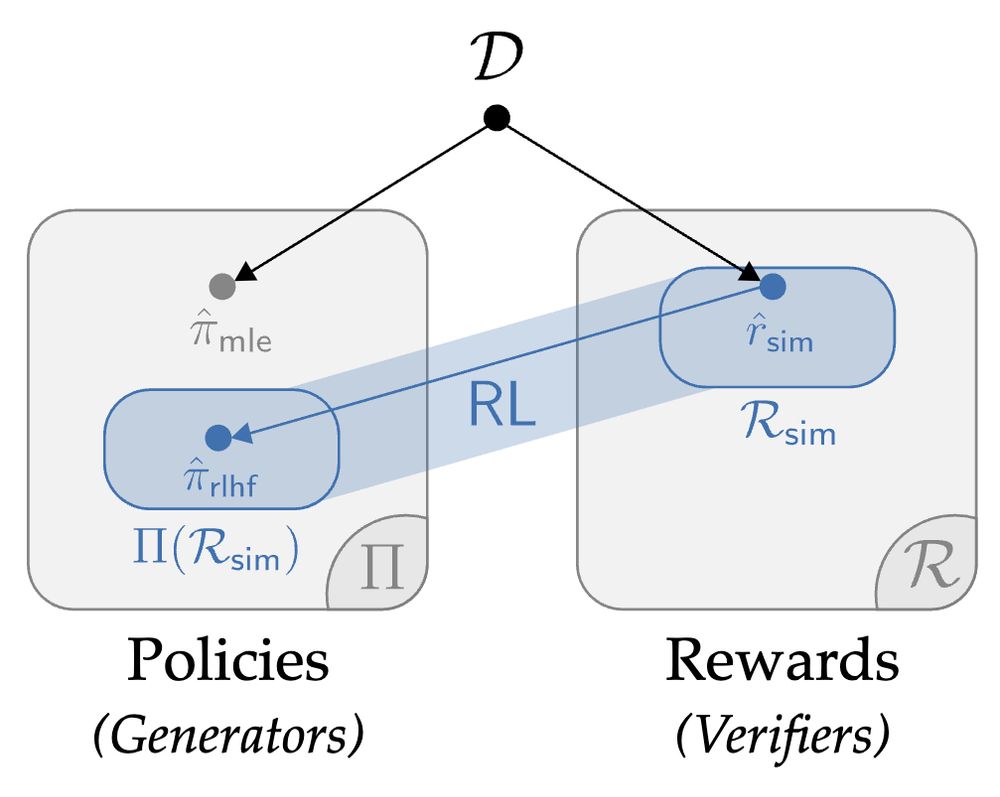

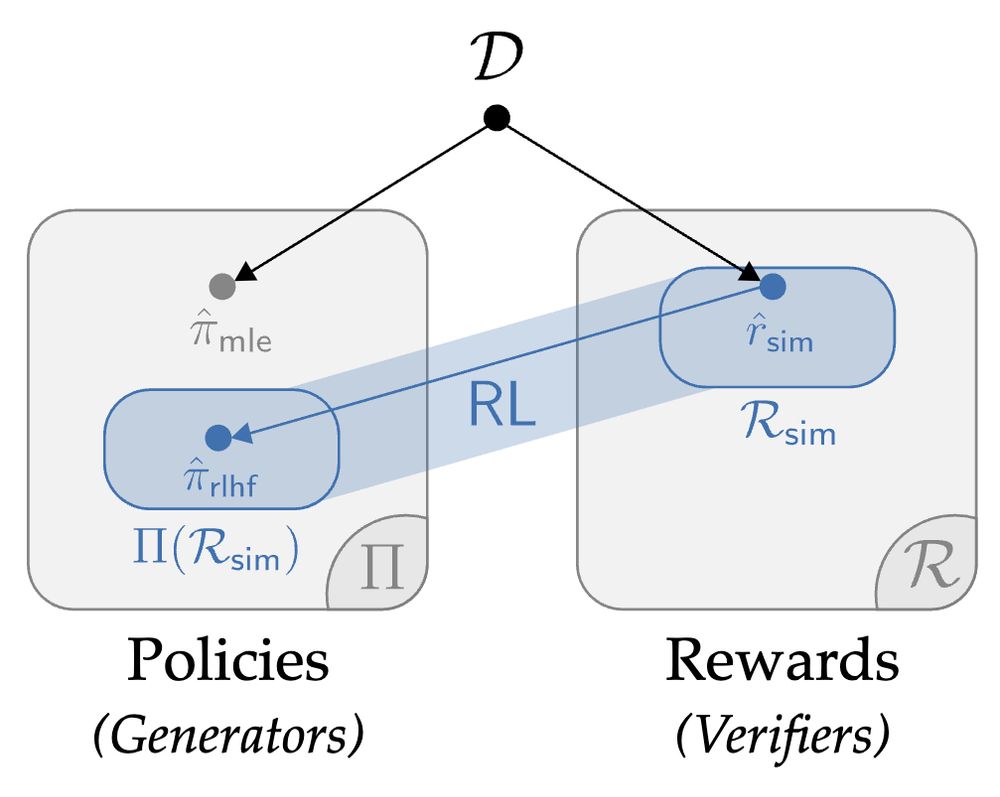

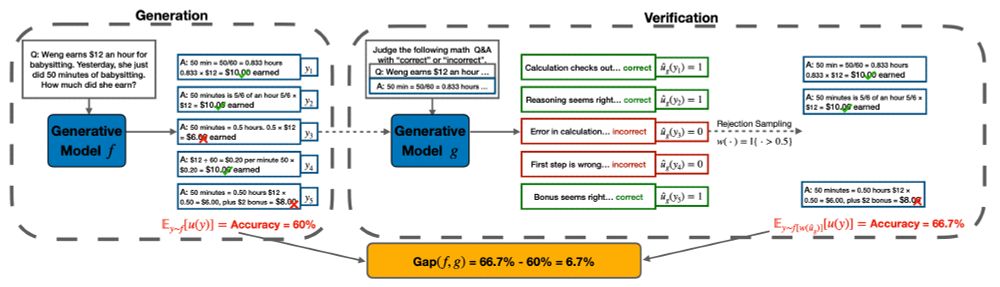

1.5 yrs ago, we set out to answer a seemingly simple question: what are we *actually* getting out of RL in fine-tuning? I'm thrilled to share a pearl we found on the deepest dive of my PhD: the value of RL in RLHF seems to come from *generation-verification gaps*. Get ready to 🤿:

04.03.2025 20:59 — 👍 59 🔁 11 💬 1 📌 3

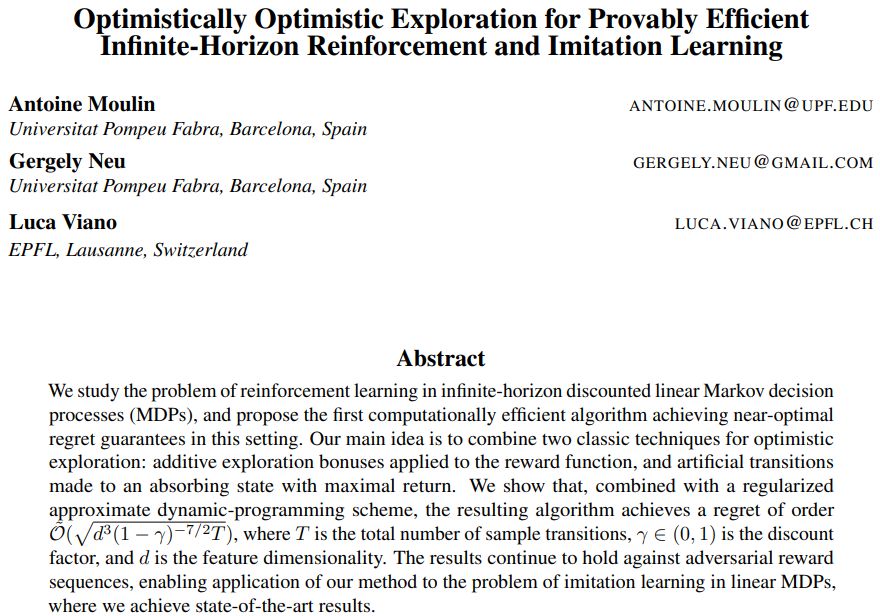

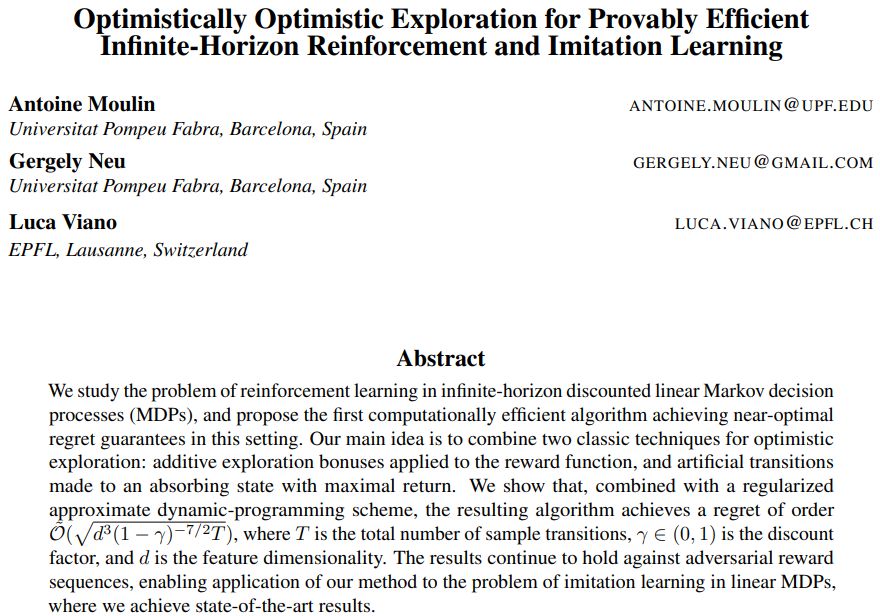

super happy about this preprint! we can *finally* perform efficient exploration and find near-optimal stationary policies in infinite-horizon linear MDPs, and even use it for imitation learning :) working with @neu-rips.bsky.social and @lviano.bsky.social on this was so much fun!!

20.02.2025 17:45 — 👍 23 🔁 2 💬 2 📌 1

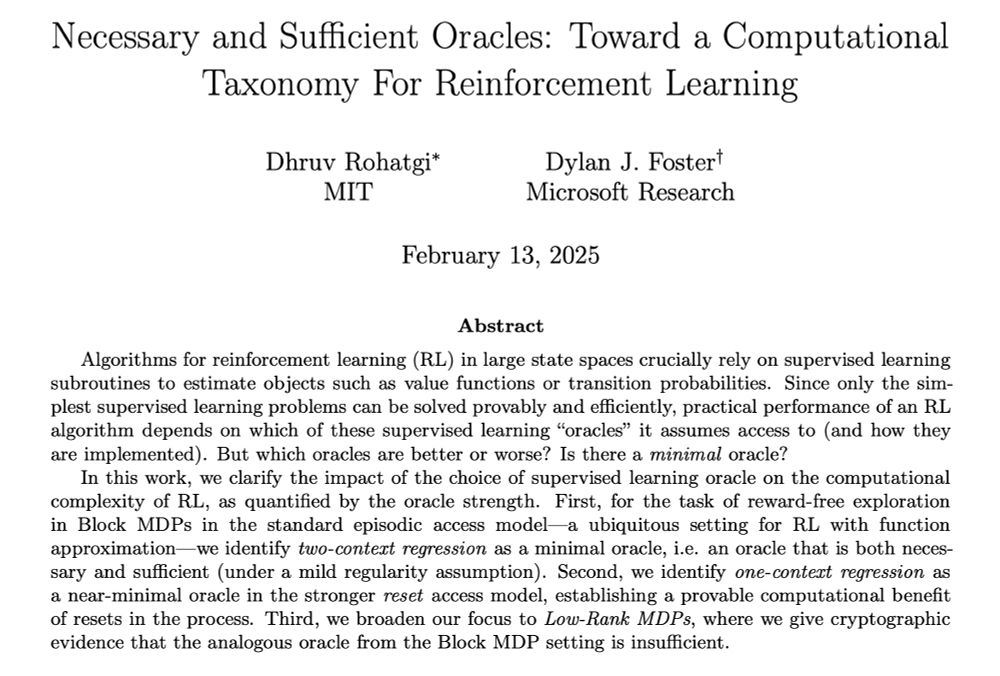

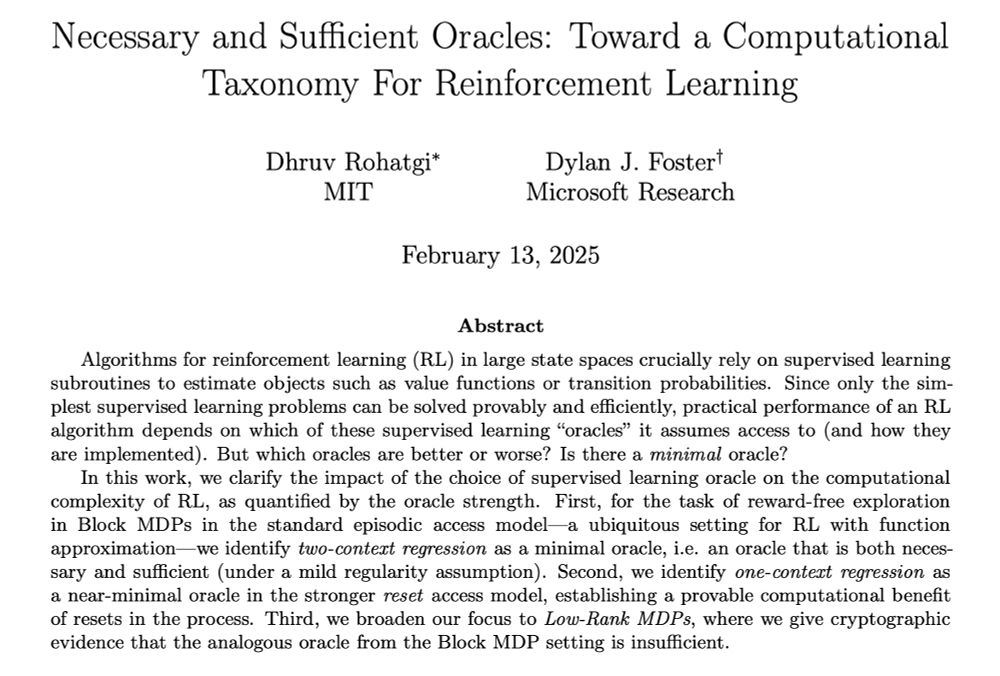

What are the minimal supervised learning primitives required to perform RL efficiently?

New paper led by my amazing intern Dhruv Rohatgi:

Necessary and Sufficient Oracles: Toward a Computational Taxonomy for Reinforcement Learning

arxiv.org/abs/2502.08632

1/

20.02.2025 23:39 — 👍 25 🔁 4 💬 1 📌 0

Mind the Gap: Examining the Self-Improvement Capabilities of Large Language Models | alphaXiv

Models can self-improve🥷 by knowing they were wrong🧘‍♀️ but when can they do it?

Across LLM families, tasks and mechanisms

This ability scales with pretraining and favors CoT and non-QA tasks; more in the 🧵

alphaxiv.org/abs/2412.02674

@yus167.bsky.social @shamkakade.bsky.social

📈🤖

#NLP #ML

13.12.2024 23:55 — 👍 24 🔁 3 💬 2 📌 0

On Saturday I will present our LLM self-improvement paper at the workshop on Mathematics of Modern Machine Learning (M3L) and at the workshop on Statistical Foundations of LLMs and Foundation Models (SFLLM).

bsky.app/profile/yus1...

09.12.2024 19:48 — 👍 2 🔁 0 💬 0 📌 0

NeurIPS Poster: The Importance of Online Data: Understanding Preference Fine-tuning via Coverage (NeurIPS 2024)

I will present two papers at #NeurIPS2024!

Happy to meet old and new friends and talk about all aspects of RL: data, environment structure, and reward! 😀

In the Wed 11am-2pm poster session I will present HyPO, the best of both worlds of offline and online RLHF: neurips.cc/virtual/2024...

09.12.2024 19:48 — 👍 9 🔁 2 💬 1 📌 0

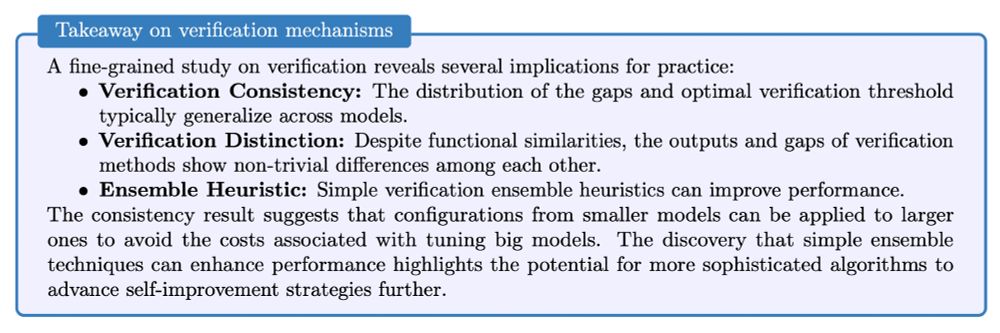

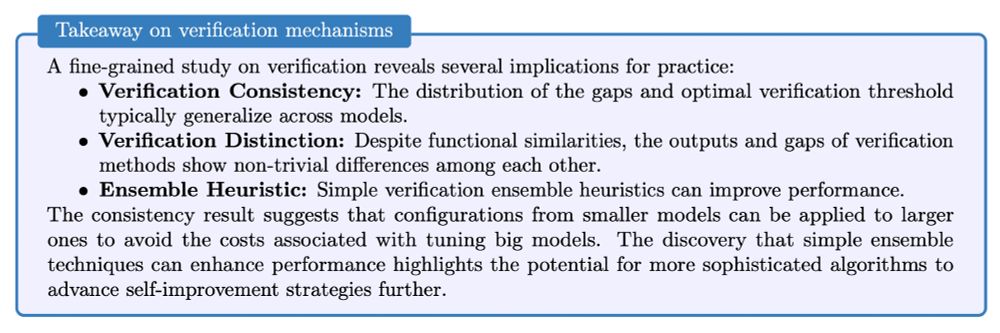

We also dive deep into the similarities and differences between verification mechanisms. We observed consistency, distinction and ensemble properties of the verification methods (see the summary image). (8/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0

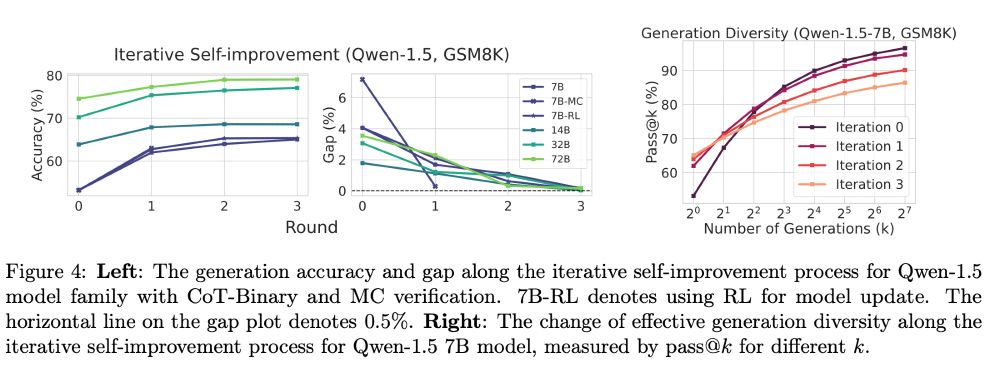

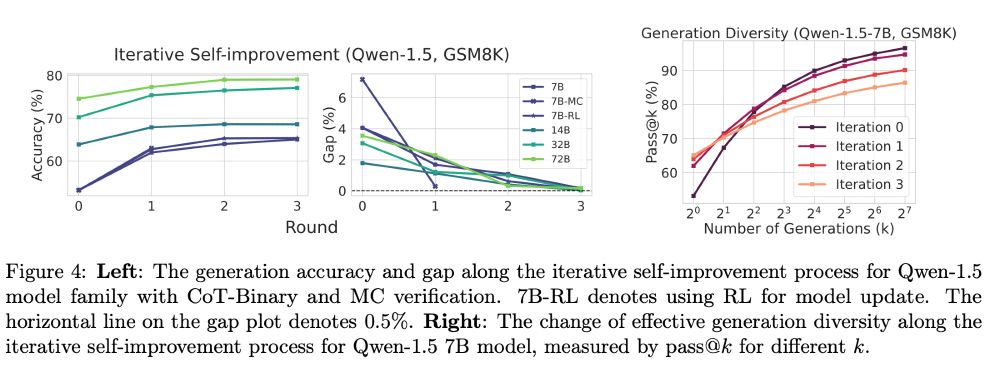

In iterative self-improvement, we observe that the gap diminishes to 0 within a few iterations, consistent with many previous findings. We discovered that one cause of this saturation is the degradation of the "effective diversity" of the generations due to the imperfect verifier. (7/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0

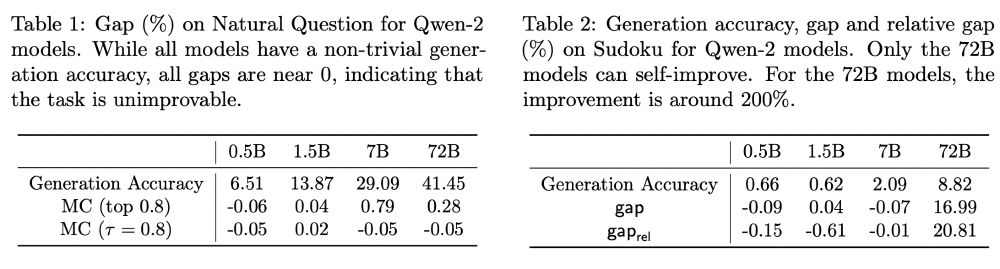

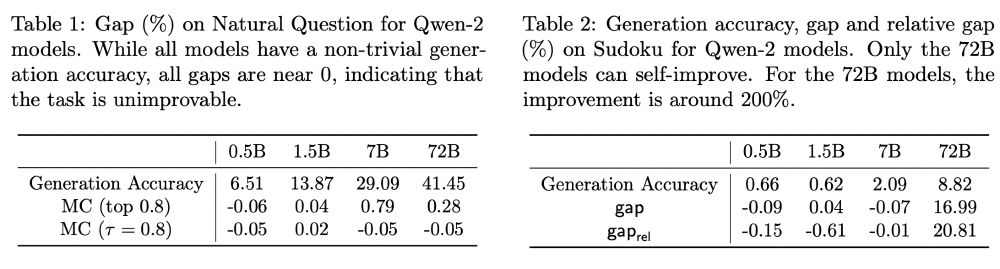

However, self-improvement is not possible on every task. We do not observe a significant self-improvement signal on QA tasks like Natural Questions. Also, not all models can self-improve on Sudoku, a canonical example of "verification is easier than generation". (6/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0

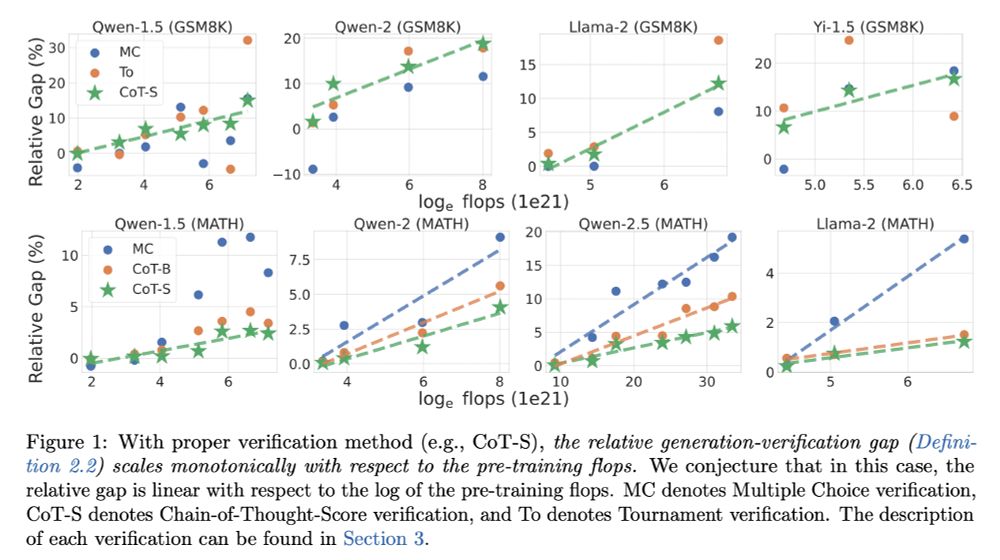

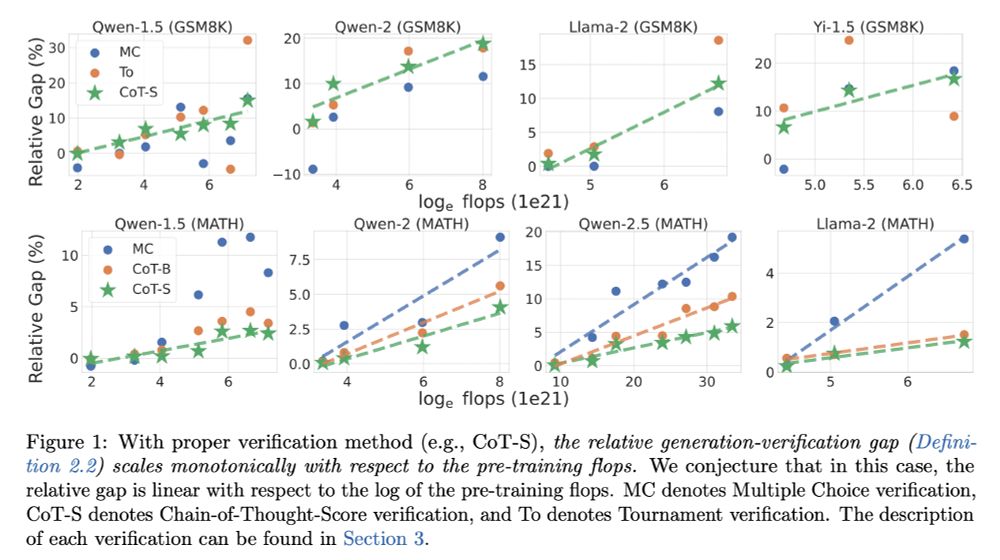

Our first major result is an observational scaling law: with certain verification methods, the relative gap increases monotonically (almost linearly) with the log of pretraining FLOPs, on tasks like GSM8K and MATH. (5/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0

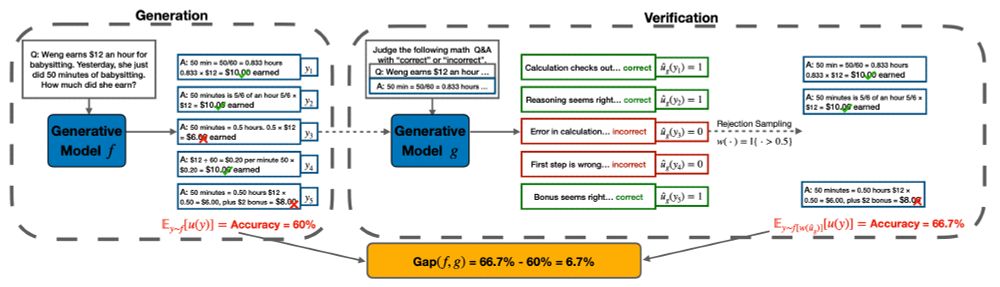

We propose using the performance difference between the reweighted and original responses (2-1): the "generation-verification gap". We also study the relative gap: the gap weighted by the error rate. Intuitively, improvement is harder if the model makes fewer mistakes. (4/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0
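
As a rough illustration of the two quantities in the post above, the sketch below computes the gap and a relative gap from two accuracies. The function names are hypothetical, and dividing by the error rate (1 minus the original accuracy) is an assumed reading of "weighted by the error rate"; the paper's exact definition may differ.

```python
def generation_verification_gap(original_acc: float, reweighted_acc: float) -> float:
    """Accuracy of the verifier-reweighted responses minus accuracy of the original ones."""
    return reweighted_acc - original_acc


def relative_gap(original_acc: float, reweighted_acc: float) -> float:
    """Gap normalised by the original error rate (an assumed normalisation)."""
    error_rate = 1.0 - original_acc
    if error_rate <= 0.0:
        raise ValueError("relative gap is undefined when the model makes no mistakes")
    return generation_verification_gap(original_acc, reweighted_acc) / error_rate
```

Under this reading, the same 10-point gain counts for more when the model already makes few errors: moving from 60% to 70% closes a quarter of the remaining errors, whereas moving from 85% to 95% closes two-thirds.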

While previous works measure self-improvement using the performance difference between the models (3-1), we found that step 3 (distillation) introduces confounders (for example, the models can just be better at following certain formats). (3/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0

We study self-improvement as the following process:

1. Model generates many candidate responses.

2. Model filters/reweights responses based on its verifications.

3. Distill the reweighted responses into a new model.

(2/9)

06.12.2024 18:02 — 👍 1 🔁 0 💬 1 📌 0
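
Steps 1 and 2 of the process above can be sketched on a toy task. Everything here is an assumption made for illustration: the "model" is a random answerer for `a + b`, the verifier is a noisy correctness check, and the function names and probabilities are hypothetical. Step 3 (distillation) is left out.

```python
import random


def generate_candidates(a, b, n, rng, p_correct=0.5):
    """Step 1: a toy 'model' answers a + b, correctly with probability p_correct."""
    return [
        a + b if rng.random() < p_correct else a + b + rng.choice([-2, -1, 1, 2])
        for _ in range(n)
    ]


def verify(a, b, answer, rng, p_verifier=0.9):
    """Toy verifier: judges correctness, and is itself right with probability p_verifier."""
    truth = answer == a + b
    return truth if rng.random() < p_verifier else not truth


def filter_candidates(a, b, candidates, rng, p_verifier=0.9):
    """Step 2: keep the candidates the verifier accepts (fall back to all if none pass)."""
    kept = [c for c in candidates if verify(a, b, c, rng, p_verifier)]
    return kept or candidates


def accuracy(a, b, answers):
    """Fraction of answers equal to a + b."""
    return sum(ans == a + b for ans in answers) / len(answers)


if __name__ == "__main__":
    rng = random.Random(0)
    candidates = generate_candidates(3, 4, 64, rng)
    kept = filter_candidates(3, 4, candidates, rng)
    print("original accuracy:", accuracy(3, 4, candidates))
    print("filtered accuracy:", accuracy(3, 4, kept))
```

Comparing the two printed accuracies measures the effect of verification alone, before any distillation step is involved.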

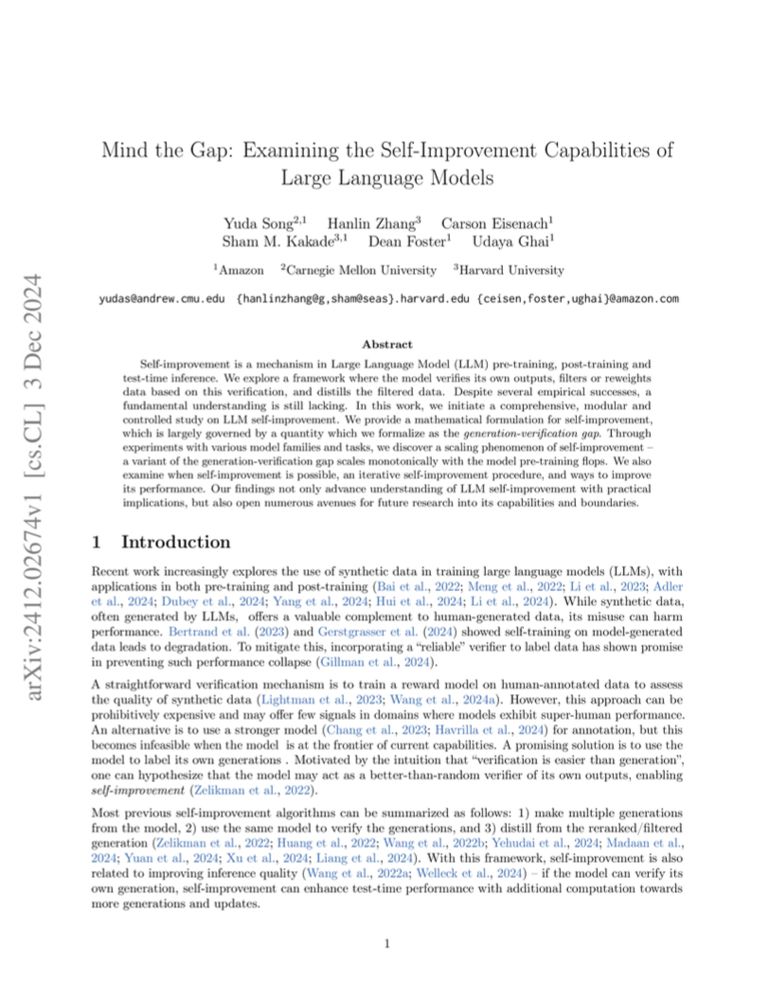

LLM self-improvement has critical implications for synthetic data, post-training and test-time inference. To understand LLMs' true capability for self-improvement, we perform large-scale experiments with multiple families of LLMs, tasks and mechanisms. Here is what we found: (1/9)

06.12.2024 18:02 — 👍 12 🔁 4 💬 1 📌 1

Yuda Song, Hanlin Zhang, Carson Eisenach, Sham Kakade, Dean Foster, Udaya Ghai

Mind the Gap: Examining the Self-Improvement Capabilities of Large Language Models

https://arxiv.org/abs/2412.02674

04.12.2024 09:09 — 👍 1 🔁 1 💬 0 📌 0

I think the main difference in terms of interpolation / extrapolation between DPO and RLHF is that the former only guarantees closeness to the reference policy on the training data, while RLHF usually tacks on an on-policy KL penalty. We explored this point in arxiv.org/abs/2406.01462.

22.11.2024 15:38 — 👍 4 🔁 1 💬 1 📌 0
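
For reference, the standard textbook forms of the two objectives make the contrast concrete. The notation is an assumption, not taken from the post: $\pi_{\mathrm{ref}}$ is the reference policy, $r$ a reward model, $\beta$ the regularization strength, and $\mathcal{D}$ the preference dataset with chosen and rejected responses $y_w$ and $y_l$.

```latex
% KL-regularized RLHF: the KL term is evaluated on samples y drawn from the current policy \pi
\max_{\pi}\;
\mathbb{E}_{x \sim \mathcal{D},\, y \sim \pi(\cdot \mid x)}\big[ r(x, y) \big]
\;-\; \beta\, \mathbb{E}_{x \sim \mathcal{D}}\Big[ \mathrm{KL}\big( \pi(\cdot \mid x) \,\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \big) \Big]

% DPO: the log-ratios to \pi_ref are evaluated only at responses present in the dataset
\min_{\pi}\;
-\,\mathbb{E}_{(x,\, y_w,\, y_l) \sim \mathcal{D}}
\left[ \log \sigma\!\left(
\beta \log \frac{\pi(y_w \mid x)}{\pi_{\mathrm{ref}}(y_w \mid x)}
- \beta \log \frac{\pi(y_l \mid x)}{\pi_{\mathrm{ref}}(y_l \mid x)}
\right) \right]
```

In the first objective the expectation inside the KL term is taken over responses sampled from $\pi$ itself, which is what makes the penalty on-policy; the second only ever touches the responses stored in $\mathcal{D}$.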

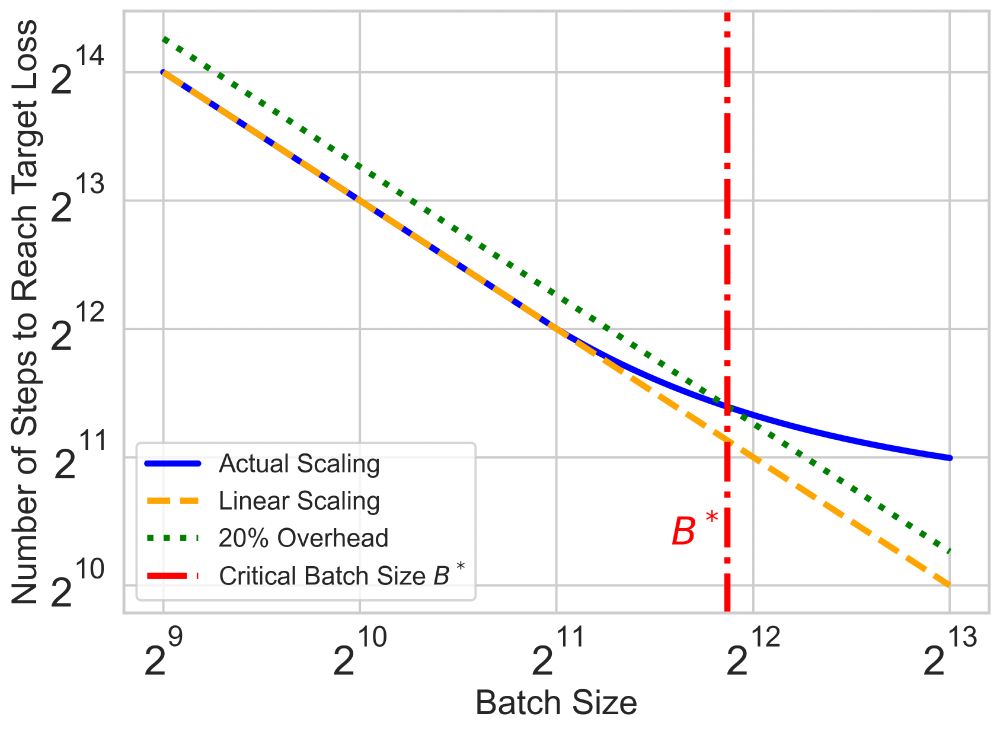

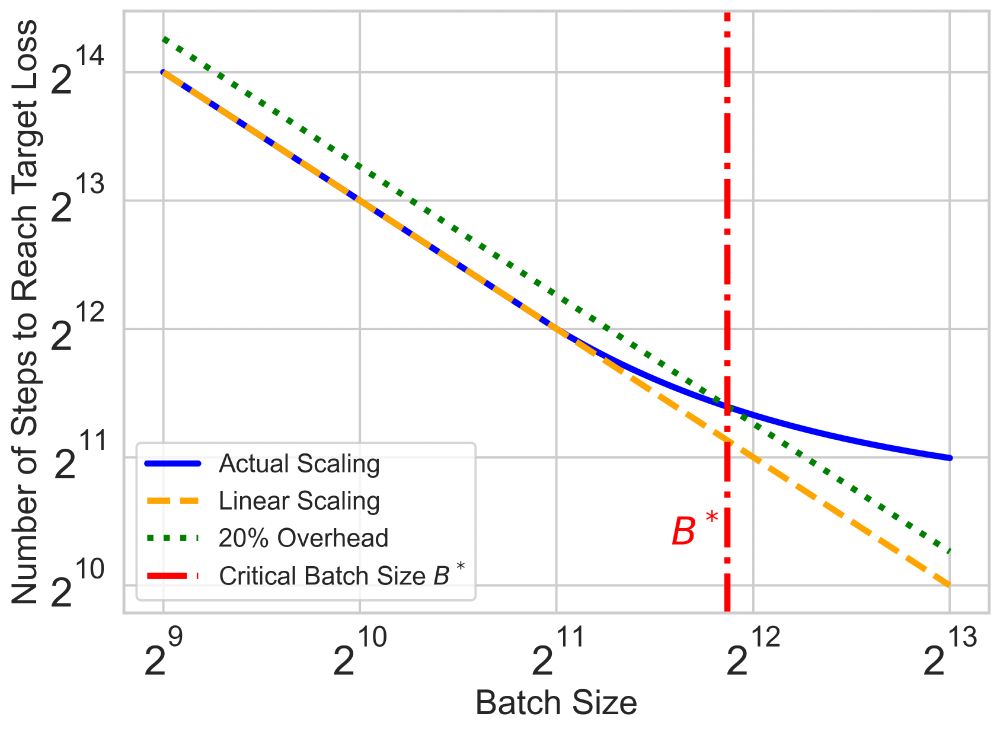

(1/n) 💡How can we speed up the serial runtime of long pre-training runs? Enter Critical Batch Size (CBS): the tipping point where the gains of data parallelism balance with diminishing efficiency. Doubling batch size halves the optimization steps—until we hit CBS, beyond which returns diminish.

22.11.2024 20:19 — 👍 16 🔁 4 💬 2 📌 0
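
One common way to picture this tradeoff is the simple model from McCandlish et al., "An Empirical Model of Large-Batch Training", in which the number of steps to reach a target loss is `min_steps * (1 + critical_batch_size / batch_size)`. The sketch below uses that model as a stand-in; it is not necessarily the definition of CBS used in this thread, and the function names and numbers are hypothetical.

```python
def steps_to_target(batch_size: float, min_steps: float, critical_batch_size: float) -> float:
    """Optimization steps needed to reach a target loss under the assumed model."""
    return min_steps * (1.0 + critical_batch_size / batch_size)


def speedup_from_doubling(batch_size: float, min_steps: float, critical_batch_size: float) -> float:
    """Factor by which the step count shrinks when the batch size is doubled."""
    before = steps_to_target(batch_size, min_steps, critical_batch_size)
    after = steps_to_target(2.0 * batch_size, min_steps, critical_batch_size)
    return before / after


if __name__ == "__main__":
    # Far below the critical batch size, doubling nearly halves the steps;
    # far above it, doubling barely helps.
    for b in (1, 64, 1024, 8192):
        print(b, round(speedup_from_doubling(b, min_steps=1000, critical_batch_size=1024), 3))
```

In this model the speedup from doubling approaches 2 for small batches and approaches 1 for very large ones, which matches the qualitative picture in the post.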

I created a starter pack for people who are or have been affiliated with the Machine Learning Department at CMU. Let me know if I missed someone!

go.bsky.app/QLTVEph

#AcademicSky

18.11.2024 15:46 — 👍 4 🔁 4 💬 0 📌 0

Ojash Neopane, Aaditya Ramdas, Aarti Singh

Logarithmic Neyman Regret for Adaptive Estimation of the Average Treatment Effect

https://arxiv.org/abs/2411.14341

22.11.2024 05:01 — 👍 5 🔁 1 💬 0 📌 0

Intro 🦋

I am a final-year PhD student from CMU Robotics. I work on humanoid control, perception, and behavior in both simulation and real life, using mostly RL:

🏃🏻PHC: zhengyiluo.com/PHC

💫PULSE: zhengyiluo.com/PULSE

🔩Omnigrasp: zhengyiluo.com/Omnigrasp

🤖OmniH2O: omni.human2humanoid.com

19.11.2024 20:34 — 👍 22 🔁 3 💬 2 📌 0

Hi Bsky people 👋 I'm a PhD candidate in Machine Learning at Carnegie Mellon University.

My research focuses on interactive AI, involving:

🤖 reinforcement learning,

🧠 foundation models, and

👩‍💻 human-centered AI.

Also a founding co-organizer of the MineRL competitions 🖤 Follow me for ML updates!

18.11.2024 15:05 — 👍 70 🔁 6 💬 2 📌 0

Machine Learning Researcher | PhD Candidate @ucsd_cse | @trustworthy_ml

chhaviyadav.org

Chief Models Officer @ Stealth Startup; Inria & MVA - Ex: Llama @AIatMeta & Gemini and BYOL @GoogleDeepMind

Stupid #robotics guy at ETHz

Twitter: https://x.com/ChongZitaZhang

Research Website: https://zita-ch.github.io/

cs phd student @cornelluniversity.bsky.social. previously @harveymuddcollege.bsky.social. working on reinforcement learning & generative models. https://nico-espinosadice.github.io/

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

PhD candidate at UCSD. Prev: NVIDIA, Meta AI, UC Berkeley, DTU. I like robots 🤖, plants 🪴, books 📚, and they/them pronouns 🏳️‍🌈

https://www.nicklashansen.com

Research Scientist Meta, Adjunct Professor at University of Pittsburgh. Interested in reinforcement learning, approximate DP, adaptive experimentation, Bayesian optimization, & operations research. http://danielrjiang.github.io

📍Chicago, IL

Assistant Prof at University of Utah Fall 2025. NLP+CV+RL. RS at Google DeepMind. PhD from CMU MLD, undergrad Georgia Tech. Sometimes researcher, frequent shitposter.

Researching planning, reasoning, and RL in LLMs @ Reflection AI. Previously: Google DeepMind, UC Berkeley, MIT. I post about: AI 🤖, flowers 🌷, parenting 👶, public transit 🚆. She/her.

http://www.jesshamrick.com

PhD Candidate @ CMU Robotics Institute

Research scientist @ Google DeepMind. AI to help humans.

Transactions on Machine Learning Research (TMLR) is a new venue for dissemination of machine learning research

https://jmlr.org/tmlr/

Research Scientist GoogleDeepMind

Ex @UniofOxford, AIatMeta, GoogleAI

PhD Candidate at Cambridge | ex Meta, Amazon | Studying diversity in multi-agent and multi-robot learning

https://matteobettini.com/

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Faculty at the University of Pennsylvania. Lifelong machine learning and AI for robotics and precision medicine: continual learning, transfer & multi-task learning, deep RL, multimodal ML, and human-AI collaboration. seas.upenn.edu/~eeaton