When RAG systems hallucinate, is the LLM misusing available information or is the retrieved context insufficient? In our #ICLR2025 paper, we introduce "sufficient context" to disentangle these failure modes. Work w Jianyi Zhang, Chun-Sung Ferng, Da-Cheng Juan, Ankur Taly, @cyroid.bsky.social

24.04.2025 18:18

Hey AI folks - stop using SHAP! It won't help you debug [1], won't catch discrimination [2], and makes no sense for feature importance [3].

Plus - as we show - it also won't give recourse.

In a paper at #ICLR we introduce feature responsiveness scores... 1/

arxiv.org/pdf/2410.22598

24.04.2025 16:37

Many ML models predict labels that don’t reflect what we care about, e.g.:

– Diagnoses from unreliable tests

– Outcomes from noisy electronic health records

In a new paper w/@berkustun, we study how this subjects individuals to a lottery of mistakes.

Paper: bit.ly/3Y673uZ

🧵👇

19.04.2025 23:04

We'll be @ ICLR!

Poster: Sat 26 Apr 10AM–12:30PM SGT

Paper: tinyurl.com/2deek4wx

Code: tinyurl.com/2rb6zc28

24.04.2025 06:19

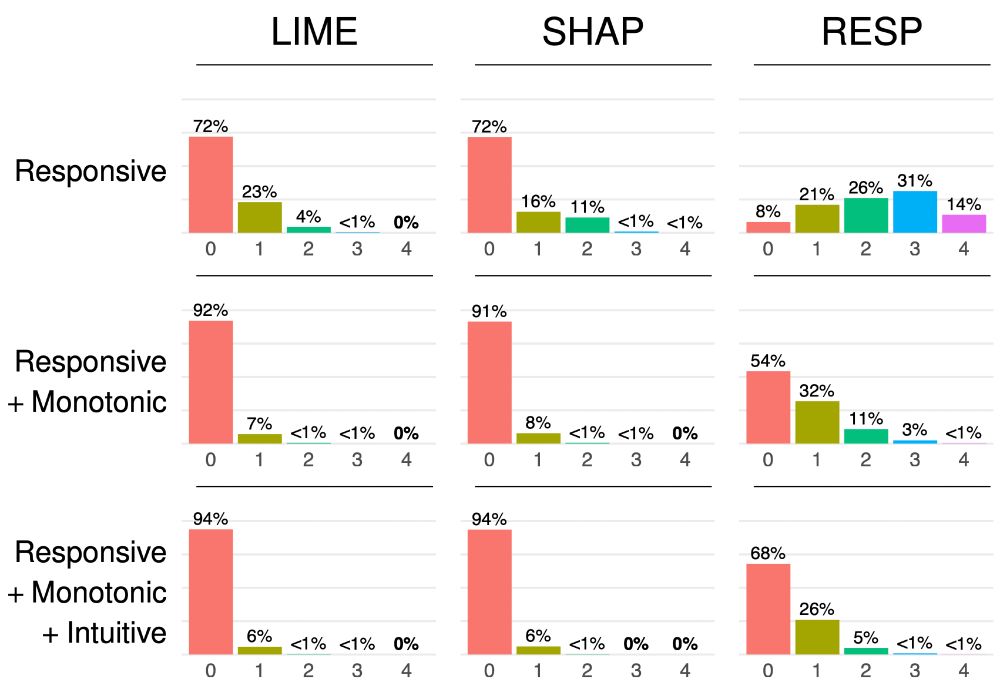

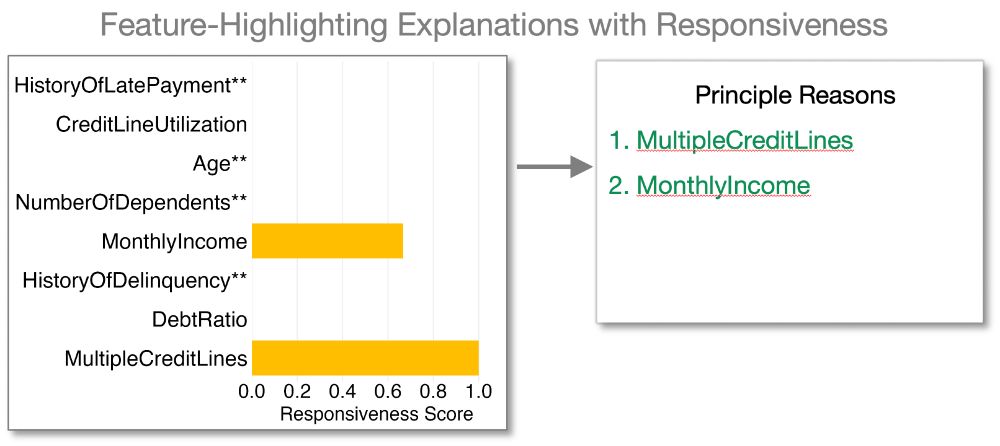

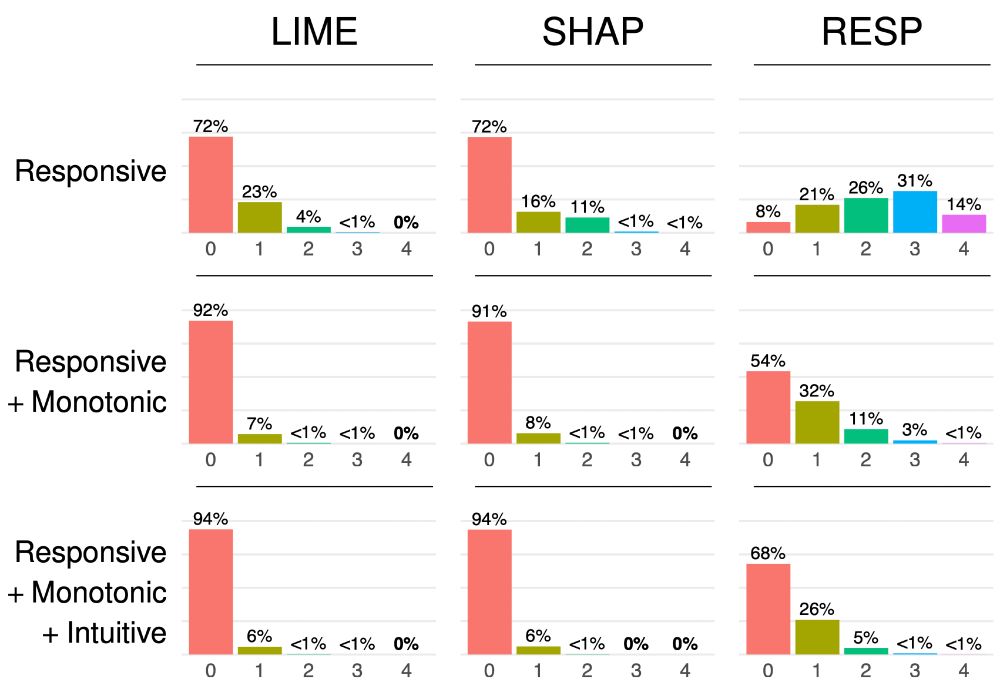

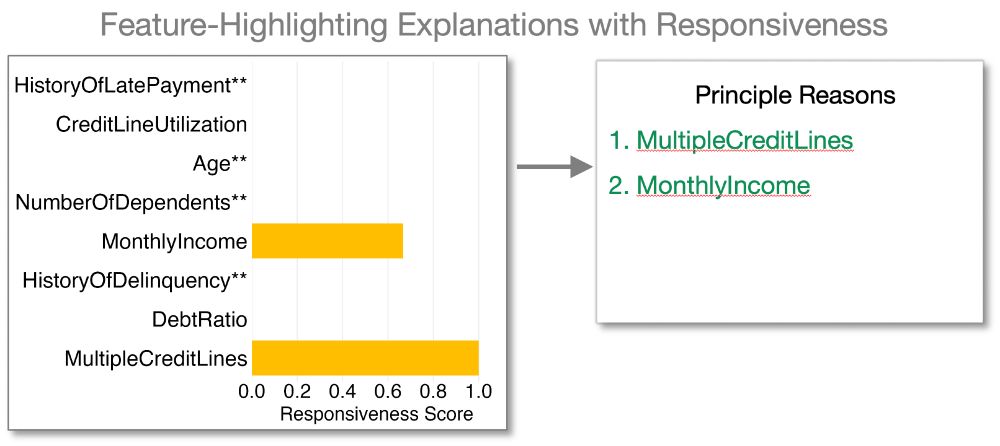

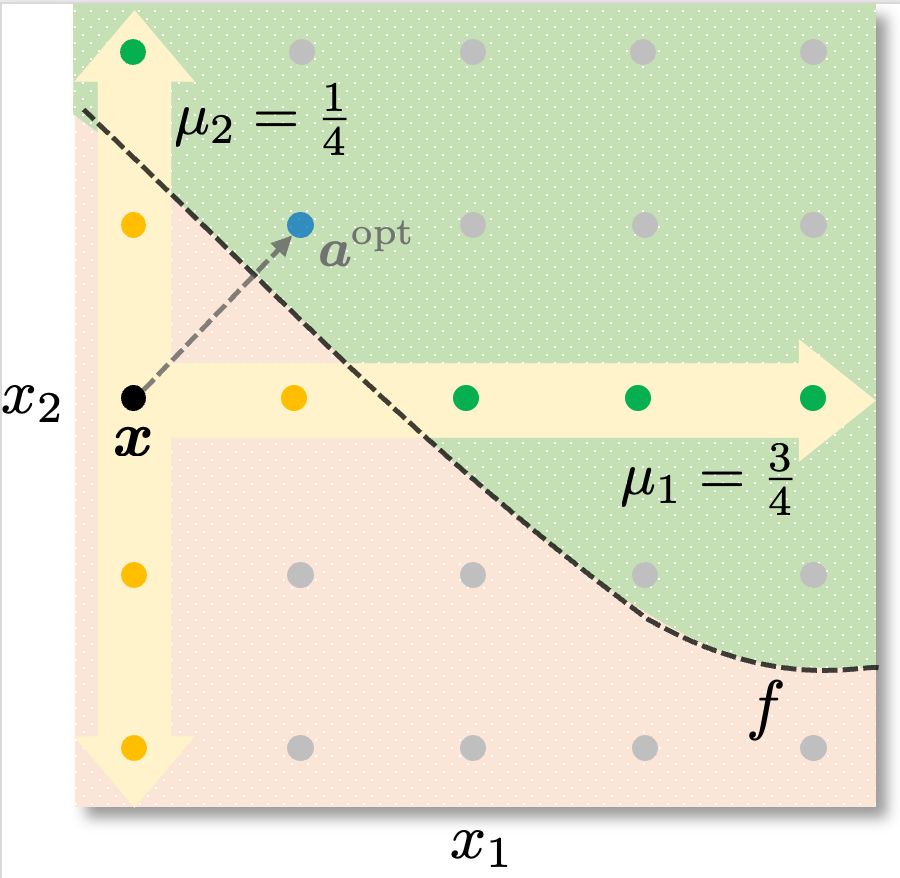

We develop methods to compute responsiveness scores for any dataset and model. Three main advantages:

1. Can be swapped in place of existing methods

2. Highlight responsive features

3. Flag instances where such features don't exist!
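A minimal Python sketch of the idea, under simplifying assumptions of our own: a feature's responsiveness score for one person is the fraction of its feasible alternative values (listed in a hypothetical `action_set`) that yield the desired prediction when only that feature changes. The function names, the toy model, and the uniform weighting over values are illustrative, not the paper's implementation, which may handle joint or downstream changes differently. If no feature scores above zero, the sketch flags the instance as having no responsive feature (advantage 3).

```python
from typing import Callable, Dict, List

Features = Dict[str, float]
Model = Callable[[Features], int]


def responsiveness_scores(
    model: Model,
    x: Features,
    action_set: Dict[str, List[float]],
    target: int = 1,
) -> Dict[str, float]:
    """Share of feasible single-feature changes that produce `target`.

    Hypothetical format: `action_set` maps each actionable feature to the
    values it could take. Features missing from it are treated as
    immutable and score 0.
    """
    scores: Dict[str, float] = {}
    for name, current in x.items():
        options = [v for v in action_set.get(name, []) if v != current]
        if not options:
            scores[name] = 0.0
            continue
        # Change only this feature and count how often the target is reached.
        hits = sum(model({**x, name: v}) == target for v in options)
        scores[name] = hits / len(options)
    return scores


def has_responsive_feature(scores: Dict[str, float]) -> bool:
    """False flags an instance where no single feature can flip the outcome."""
    return any(s > 0 for s in scores.values())


# Toy model (hypothetical): approve only if income >= 50 and no late payments.
def toy_model(x: Features) -> int:
    return int(x["income"] >= 50 and x["late_payment"] == 0)


actions = {"income": [40.0, 45.0, 50.0, 60.0]}  # late_payment, age: immutable here
alice = {"income": 40.0, "late_payment": 0.0, "age": 30.0}
bob = {"income": 40.0, "late_payment": 1.0, "age": 30.0}

print(responsiveness_scores(toy_model, alice, actions))
print(has_responsive_feature(responsiveness_scores(toy_model, bob, actions)))
```

With this toy model, `alice` gets an income score of 2/3 (two of the three alternative incomes flip the decision), while every score for `bob` is 0 because his late-payment history cannot change, so he is flagged.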

24.04.2025 06:19

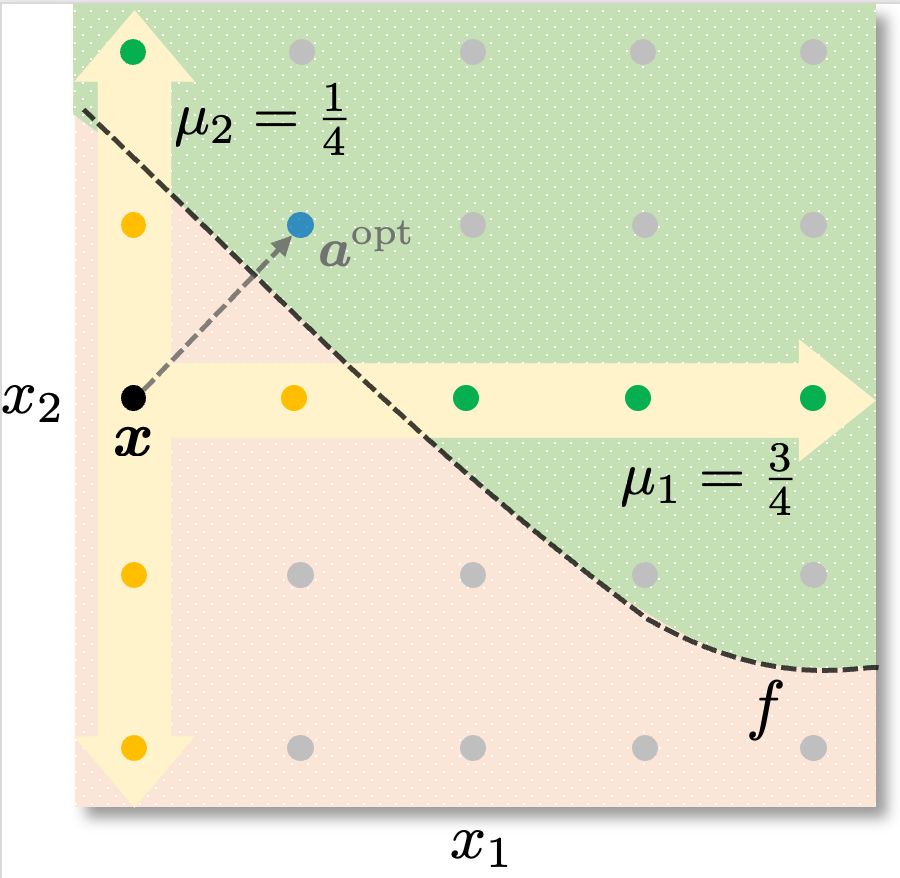

Current approaches are unable to inform consumers when:

1. features are not responsive

2. features are not monotonically responsive (e.g., can't increase income "too much")

3. features must change in counterintuitive ways (e.g., decrease income) to obtain the desired prediction
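To make the three cases concrete, here is a hedged Python sketch that sweeps one feature over a grid of values while holding the others fixed, then labels the response pattern. The toy models, the grid, and the labels are hypothetical and chosen only to reproduce each case; a real tool would also need to respect actionability constraints and joint changes across features.

```python
from typing import Callable, Dict, List

Features = Dict[str, float]


def describe_response(
    model: Callable[[Features], int],
    x: Features,
    feature: str,
    grid: List[float],
    target: int = 1,
) -> List[str]:
    """Label how the prediction responds as `feature` sweeps over `grid`."""
    values = sorted(grid)
    hits = [model({**x, feature: v}) == target for v in values]
    wins = [v for v, hit in zip(values, hits) if hit]
    if not wins:
        return ["not responsive"]
    labels = []
    if all(v < x[feature] for v in wins):
        labels.append("must decrease")  # counterintuitive direction
    elif all(v > x[feature] for v in wins):
        labels.append("must increase")
    # More than one switch along the sorted grid: changing "too much" undoes the gain.
    switches = sum(a != b for a, b in zip(hits, hits[1:]))
    if switches > 1:
        labels.append("non-monotone")
    return labels


# Hypothetical toy models, one per case.
def ignores_income(x: Features) -> int:
    return 0


def income_band(x: Features) -> int:
    return int(50 <= x["income"] <= 70)


def low_income_only(x: Features) -> int:
    return int(x["income"] <= 30)


applicant = {"income": 40.0}
grid = [20.0, 30.0, 40.0, 50.0, 60.0, 70.0, 80.0, 90.0]

for m in (ignores_income, income_band, low_income_only):
    print(m.__name__, describe_response(m, applicant, "income", grid))
```

Here `income_band` needs an increase but is non-monotone (raising income past 70 undoes the approval), and `low_income_only` approves only if income decreases.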

24.04.2025 06:19

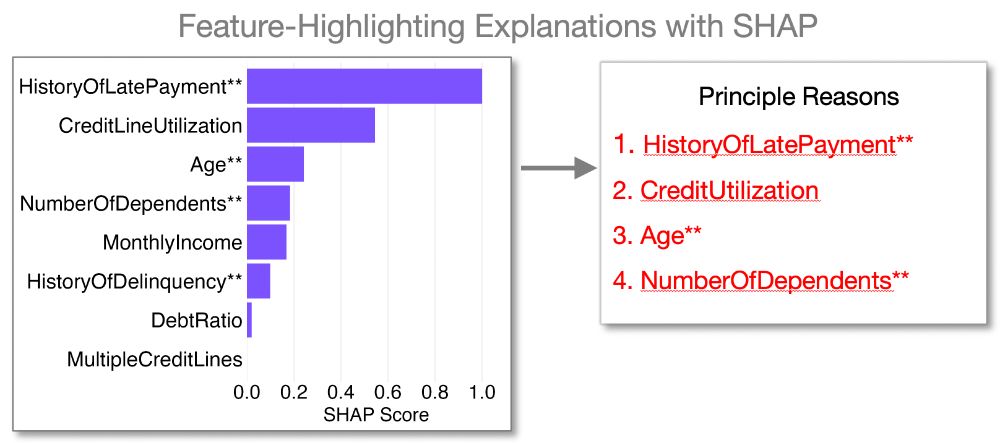

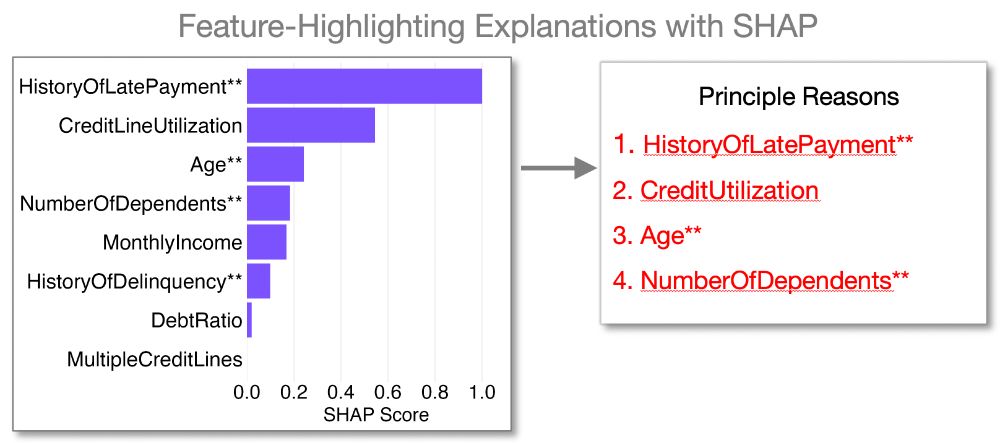

But, SHAP highlights features that are:

1. Immutable: HistoryOfLatePayment

2. Mutable but not actionable: Age, NumberOfDependents

3. Actionable but not responsive: CreditUtilization

24.04.2025 06:19

Hence, we designed responsiveness scores to highlight features that are actionable and responsive (i.e., that lead to the desired prediction when changed).
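One way to read this design goal, sketched in Python: sort each feature into the categories from the SHAP critique above (immutable; mutable but not actionable; actionable but not responsive) and list as reasons only features that are actionable with a positive responsiveness score. The `FeatureInfo` fields and all scores below are hypothetical placeholders, not values from the paper; `Income` is an illustrative responsive feature added for contrast.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FeatureInfo:
    name: str
    mutable: bool          # can the value change at all?
    actionable: bool       # can the person change it through their own actions?
    responsiveness: float  # share of feasible changes that yield the desired prediction


def category(f: FeatureInfo) -> str:
    if not f.mutable:
        return "immutable"
    if not f.actionable:
        return "mutable but not actionable"
    if f.responsiveness <= 0:
        return "actionable but not responsive"
    return "actionable and responsive"


def reasons(features: List[FeatureInfo]) -> List[str]:
    """Actionable, responsive features, most responsive first."""
    keep = [f for f in features if category(f) == "actionable and responsive"]
    keep.sort(key=lambda f: f.responsiveness, reverse=True)
    return [f.name for f in keep]


# Hypothetical inputs: categories follow the post; the scores are made up
# and only matter for actionable features.
features = [
    FeatureInfo("HistoryOfLatePayment", mutable=False, actionable=False, responsiveness=0.0),
    FeatureInfo("Age", mutable=True, actionable=False, responsiveness=0.0),
    FeatureInfo("NumberOfDependents", mutable=True, actionable=False, responsiveness=0.0),
    FeatureInfo("CreditUtilization", mutable=True, actionable=True, responsiveness=0.0),
    FeatureInfo("Income", mutable=True, actionable=True, responsiveness=0.4),
]

for f in features:
    print(f.name, "->", category(f))
print("reasons:", reasons(features))
```

Only the hypothetical `Income` feature survives as a reason; the other four fall into the categories the post criticizes SHAP for highlighting.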

24.04.2025 06:19

Many countries seek to protect consumers in applications like lending and hiring by requiring explanations for adverse outcomes. But,

- Many provide companies with substantial flexibility

- The standard approach is to use methods like SHAP and LIME to highlight important features

24.04.2025 06:19

Denied a loan, an interview, or an insurance claim by machine learning models? You may be entitled to a list of reasons.

In our latest w @anniewernerfelt.bsky.social @berkustun.bsky.social @friedler.net, we show how existing explanation frameworks fail and present an alternative for recourse

24.04.2025 06:19

PhD Student @ UC San Diego

Researching reliable, interpretable, and human-aligned ML/AI
