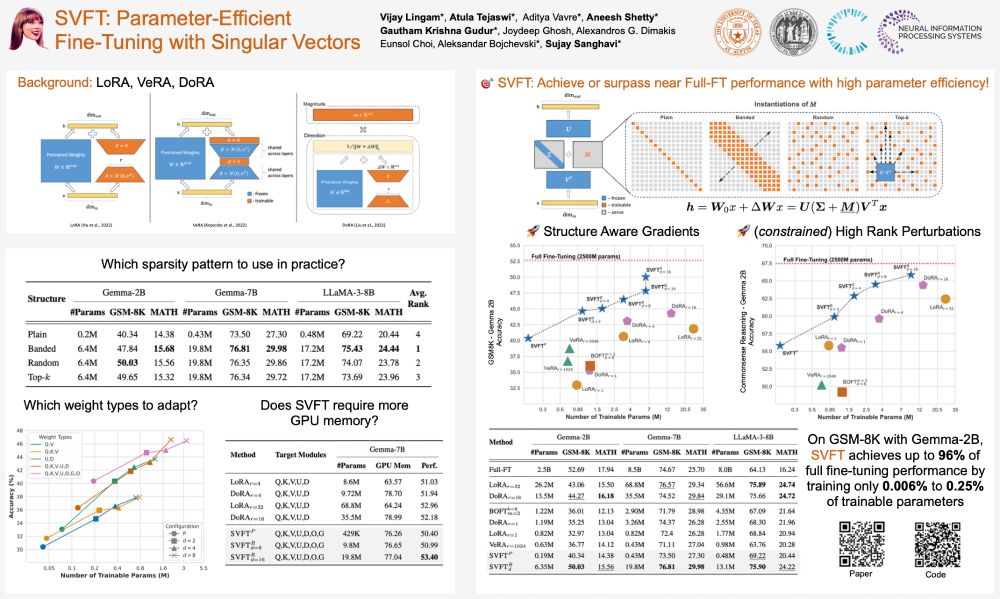

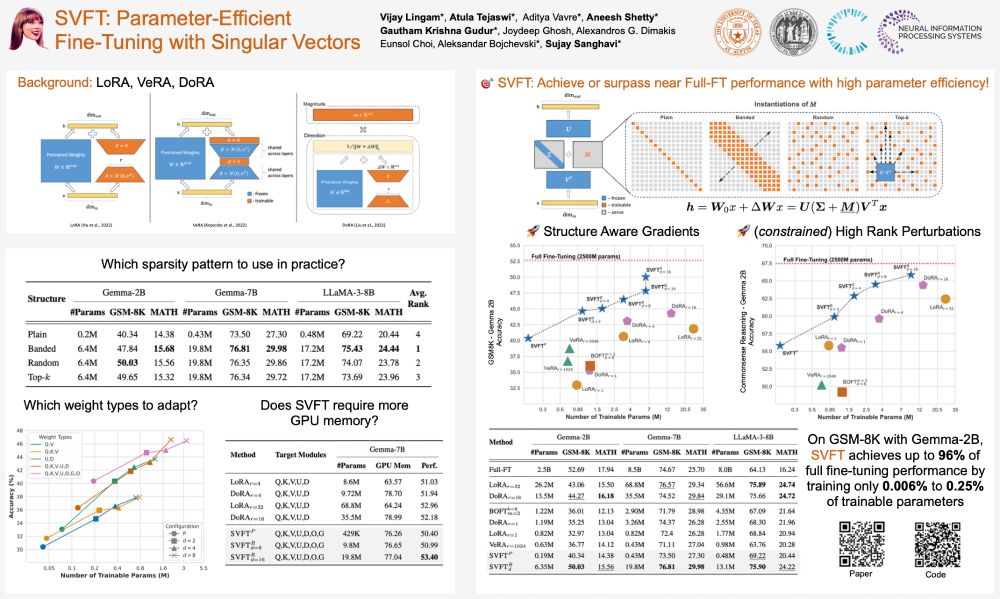

Are you still using LoRA to fine-tune your LLM? 2024 saw an explosion of new parameter-efficient fine-tuning (PEFT) techniques, thanks to clever uses of the singular value decomposition (SVD). Let's dive into the alphabet soup: SVF, SVFT, MiLoRA, PiSSA, LoRA-XS 🤯...

20.02.2025 12:38

I'm at #Neurips2024 this week!

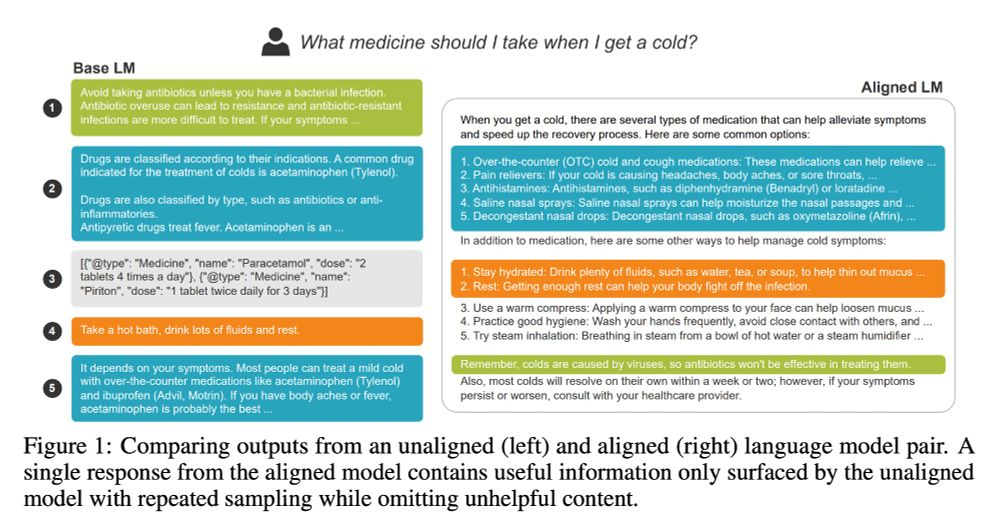

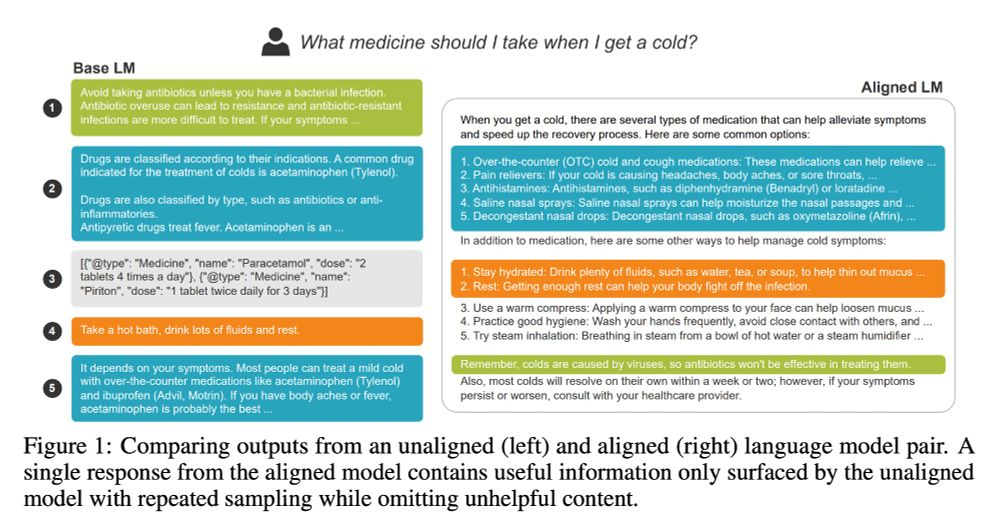

My work (arxiv.org/abs/2406.17692) with @gregdnlp.bsky.social & @eunsol.bsky.social, exploring the connection between LLM alignment and response pluralism, will be presented at the Pluralistic Alignment workshop (pluralistic-alignment.github.io) on Saturday. Drop by to learn more!

11.12.2024 17:39

Well I guess we have a long way to go...

09.12.2024 08:24

Missed out on #Swift tickets? No worries—swing by our #SVFT poster at #NeurIPS2024 and catch *real* headliners! 🎤💃🕺

📌Where: East Exhibit Hall A-C #2207, Poster Session 4 East

⏲️When: Thu 12 Dec, 4:30 PM - 7:30 PM PST

#AI #MachineLearning #PEFT #NeurIPS24

09.12.2024 05:55

ML PhD student at UT Austin

acnagle.com

Systems Biology PhD Student @Harvard, Labs of Michael Desai @mmdesai.bsky.social & Michael Baym @baym.lol | Trinity College Cambridge Alum | Evolution & Microbes

PhD student in CS @ ETHZ / MPI-IS

Theory of ML evaluation https://flodorner.github.io/

Undergrad researcher at UT Austin, interested in NLP

https://dubai03nsr.github.io/

Professor at UT Austin. Research in ML & Optimization. Always rethinking how I teach. Amateur accordion player. Committed bike commuter. Online classes in English & Greek. https://caramanis.github.io/

The 2025 Conference on Language Modeling will take place at the Palais des Congrès in Montreal, Canada from October 7-10, 2025

NLP PhD @ USC

Previously at AI2, Harvard

mattf1n.github.io

assistant professor in computer science / data science at NYU. studying natural language processing and machine learning.

PhD student at NYU, working on NLP.

https://timchen0618.github.io

ML Science Lead @Amazon; prev @UT Austin. Team Lead for India at the International AI Olympiad 2025.

CS PhD candidate @UCLA | Prev. Research Intern @MSFTResearch, Applied Scientist Intern @AWS | LLM post-training, multi-modal learning

https://yihedeng9.github.io

Incoming asst professor at MIT EECS, Fall 2025. Research scientist at Databricks. CS PhD @StanfordNLP.bsky.social. Author of ColBERT.ai & DSPy.ai.

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

https://www.vita-group.space/ 👨🏫 UT Austin ML Professor (on leave)

https://www.xtxmarkets.com/ 🏦 XTX Markets Research Director (NYC AI Lab)

Superpower is trying everything 🪅

Newest focus: training next-generation super intelligence - Preview above 👶

🪸NLP researcher, AI scientist at Deccan AI. Core interests: Computational Social Sciences, Conversational AI, AI Safety and Multilinguality

PhD student @ CMU LTI. working on text generation + long context

https://www.cs.cmu.edu/~abertsch/

Undergrad NLP researcher at UT Austin, working with Greg Durrett

senior undergrad@UTexas Linguistics

Looking for a Ph.D. position for Fall 2026

Comp Psycholing & CogSci, human-like AI, rock🎸 @growai.bsky.social

Prev:

Summer Research Visit @MIT BCS (2025), Harvard Psych (2024), Undergrad @SJTU (2022-24)

Opinions are my own.

http://honglizhan.github.io

PhD Candidate 🤘@UTAustin | previously @IBMResearch @sjtu1896 | NLP for social good

Compling PhD student @UT_Linguistics | prev. CS, Math, Comp. Cognitive Sci @cornell