![Gaussian integration by parts. Let z be a standard Gaussian random variable. Then E[zf(z)] = E[f'(z)].](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:choq7wzmsf7n3t6drq3ys27h/bafkreies7hqoujoycvwectbrxkkx4hzkpc6use4tngnlrtwxn7duh6k44y@jpeg)

Gaussian integration by parts. Let $z$ be a standard Gaussian random variable. Then $\mathbb{E}[z f(z)] = \mathbb{E}[f'(z)]$.

New blog post up about the amazingly useful Gaussian integration by parts formula! As an application, we use it to analyze power iteration from a random start www.ethanepperly.com/index.php/20...

05.08.2025 17:29
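
A quick Monte Carlo sanity check of the formula, as a minimal sketch assuming NumPy. The test function $f(z) = \sin z$ is an illustrative choice; for it, both sides equal $e^{-1/2} \approx 0.607$. (The identity needs $f$ absolutely continuous with $\mathbb{E}|f'(z)| < \infty$.) `gaussian_ibp_sides` is a name introduced here.

```python
import numpy as np

def gaussian_ibp_sides(f, fprime, num_samples=1_000_000, seed=0):
    """Monte Carlo estimates of E[z f(z)] and E[f'(z)] for z ~ N(0, 1)."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(num_samples)
    return np.mean(z * f(z)), np.mean(fprime(z))

# f(z) = sin z, f'(z) = cos z; both expectations equal exp(-1/2).
lhs, rhs = gaussian_ibp_sides(np.sin, np.cos)
print(lhs, rhs, np.exp(-0.5))
```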

2025 July Prize Spotlight | SIAM

Congratulations to the SIAM prize recipients who will be recognized at AN25, ACDA25, CT25, and GD25!

Very excited to share that I’ve been awarded a SIAM student paper prize! I look forward to seeing any of you who will be at #SIAMAN25 in Montréal. Thanks to the committee for selecting me for this honor www.siam.org/publications...

11.07.2025 18:28

Ethan Epperly

Ethan Epperly Email Forms

Also, if you never want to miss a blog post, you can sign up to receive email notifications here! eepurl.com/i5M2P2

16.06.2025 17:25

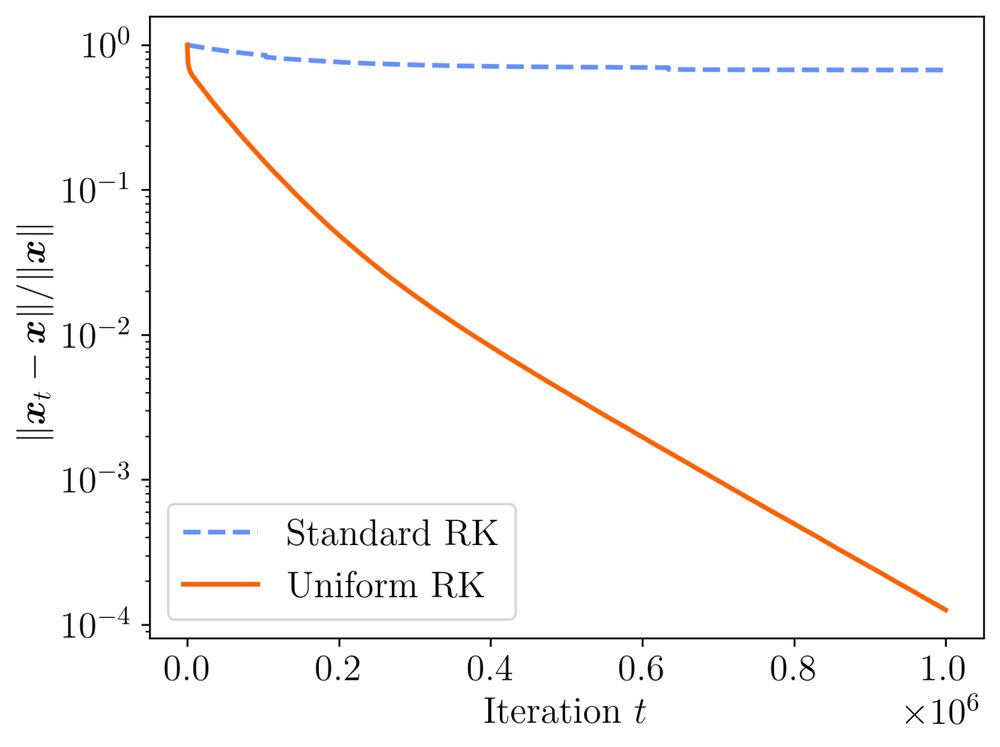

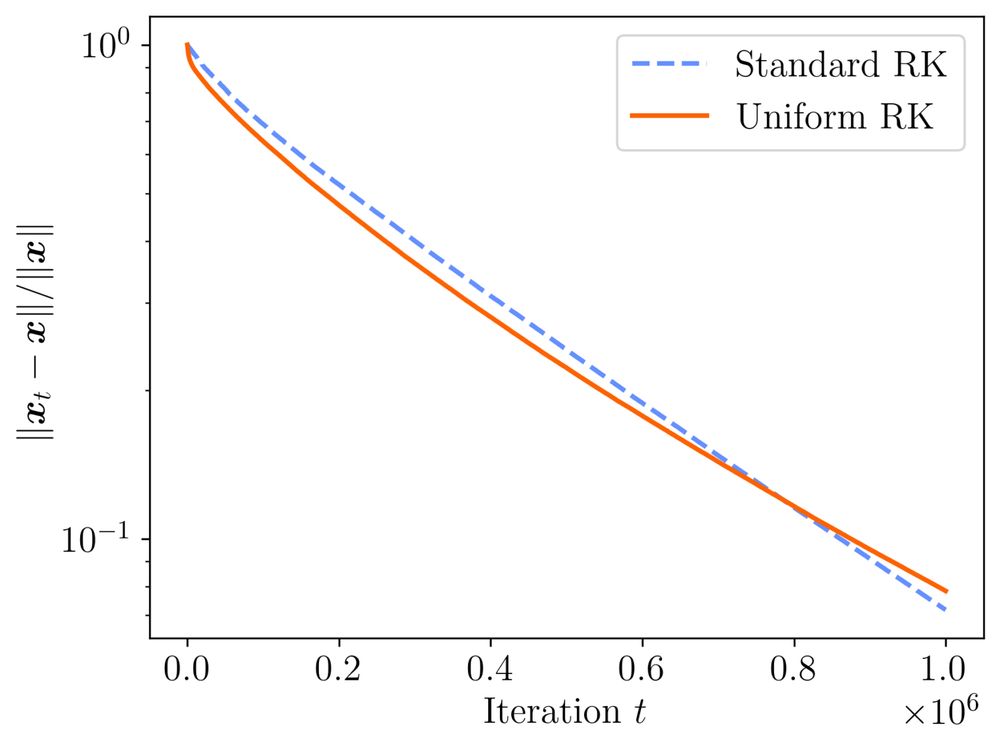

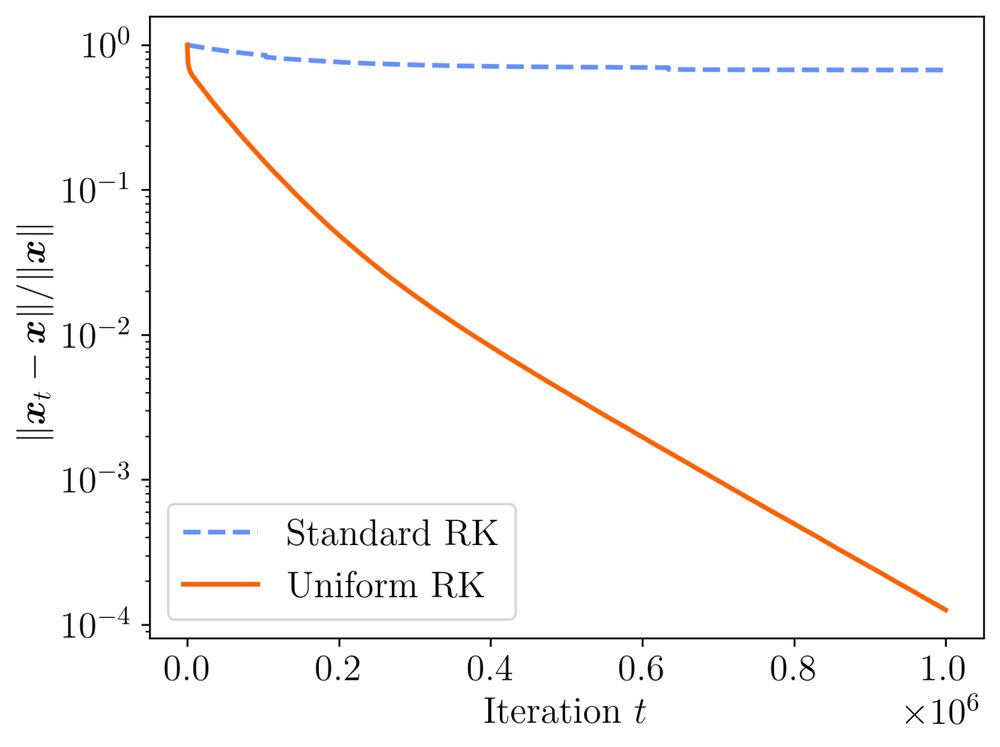

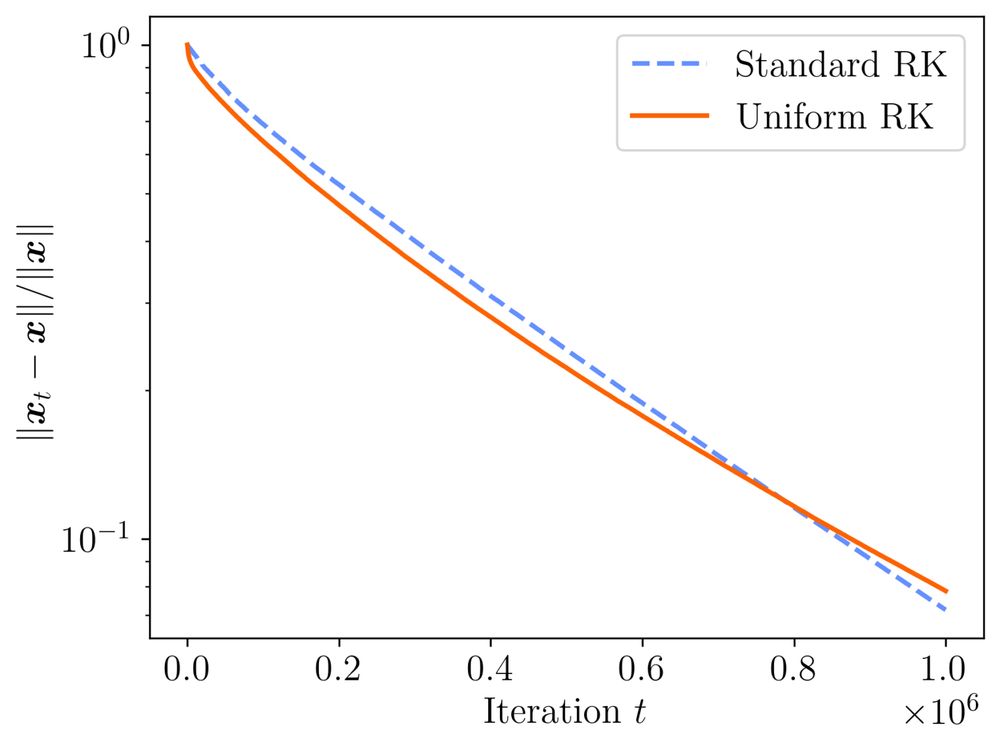

New blog post up about the randomized Kaczmarz algorithm. The classic RK algorithm samples rows according to their squared norms, but what happens if you sample them uniformly? The answer surprised me: uniform sampling is often just as good or even better www.ethanepperly.com/index.php/20...

16.06.2025 17:25
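
A minimal sketch of randomized Kaczmarz with either sampling rule, assuming NumPy and a consistent system. The test problem (Gaussian rows rescaled to have very different norms) is an illustrative choice, and results on it say nothing definitive about which rule is better in general. `randomized_kaczmarz` and its `sampling` parameter are names introduced here.

```python
import numpy as np

def randomized_kaczmarz(A, b, num_iters, sampling="norm", seed=0):
    """Randomized Kaczmarz for Ax = b with squared-row-norm or uniform sampling."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    row_norms_sq = np.sum(A**2, axis=1)
    if sampling == "norm":
        probs = row_norms_sq / row_norms_sq.sum()  # classic rule: P(i) ∝ ||a_i||^2
    elif sampling == "uniform":
        probs = np.full(m, 1.0 / m)
    else:
        raise ValueError("sampling must be 'norm' or 'uniform'")
    x = np.zeros(n)
    for i in rng.choice(m, size=num_iters, p=probs):
        # Project x onto the hyperplane {y : a_i^T y = b_i}.
        x += (b[i] - A[i] @ x) / row_norms_sq[i] * A[i]
    return x

rng = np.random.default_rng(1)
A = rng.standard_normal((200, 10)) * rng.uniform(0.1, 10.0, size=(200, 1))
x_true = rng.standard_normal(10)
b = A @ x_true  # consistent system

errors = {}
for scheme in ("norm", "uniform"):
    x = randomized_kaczmarz(A, b, num_iters=2000, sampling=scheme)
    errors[scheme] = np.linalg.norm(x - x_true) / np.linalg.norm(x_true)
print(errors)
```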

A Neat Not-Randomized Algorithm: Polar Express – Ethan N. Epperly

New blog post out about the new Polar Express algorithm of Amsel, Persson, Musco, and Gower for computing the matrix sign function with applications to the Muon optimizer www.ethanepperly.com/index.php/20...

07.06.2025 02:03
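
The post doesn't give the Polar Express coefficients, so this is not that algorithm. As a point of reference, here is a minimal sketch of the classical cubic Newton–Schulz iteration, which also approximates the polar factor $UV^\top$ of $A = U\Sigma V^\top$ by applying the same odd polynomial $p(\sigma) = \tfrac{3}{2}\sigma - \tfrac{1}{2}\sigma^3$ to every singular value at each step (for a nonsingular symmetric matrix, $UV^\top$ is the matrix sign function). My understanding is that Polar Express instead optimizes the polynomial used at each step; see the post for the actual method. `newton_schulz_polar` is a name introduced here.

```python
import numpy as np

def newton_schulz_polar(A, num_iters=50):
    """Approximate the polar factor U V^T of A = U diag(s) V^T.

    Classical cubic Newton–Schulz: X <- 1.5 X - 0.5 X X^T X.
    (Polar Express is understood to use optimized, step-dependent
    polynomials instead; that is not implemented here.)
    """
    X = A / np.linalg.norm(A)  # Frobenius scaling puts singular values in (0, 1]
    for _ in range(num_iters):
        X = 1.5 * X - 0.5 * X @ (X.T @ X)
    return X

rng = np.random.default_rng(0)
U, _ = np.linalg.qr(rng.standard_normal((6, 4)))  # 6 x 4, orthonormal columns
V, _ = np.linalg.qr(rng.standard_normal((4, 4)))  # 4 x 4 orthogonal
A = U @ np.diag([2.0, 1.5, 1.0, 0.5]) @ V.T
Q = newton_schulz_polar(A)
print(np.linalg.norm(Q - U @ V.T))
```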

Markov Musings 5: Poincaré Inequalities – Ethan N. Epperly

New blog post out in my series on Markov chains! In this post, I discuss Poincaré inequalities and their connection to mixing of Markov chains www.ethanepperly.com/index.php/20...

24.05.2025 19:17
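
A small numerical check of the Poincaré inequality $\operatorname{Var}_\pi(f) \le \mathcal{E}(f,f)/\gamma$, where $\mathcal{E}(f,f) = \tfrac12\sum_{i,j}\pi_i P_{ij}(f_i - f_j)^2$ is the Dirichlet form and $\gamma = 1 - \lambda_2$ is the spectral gap of a reversible chain $P$. The sketch assumes NumPy and a chain built from a hypothetical symmetric weight matrix $W$ via $P = D^{-1}W$, which is reversible with $\pi$ proportional to the row sums of $W$. `poincare_check` is a name introduced here.

```python
import numpy as np

def poincare_check(W, f):
    """Return (Var_pi(f), Dirichlet form E(f, f), spectral gap) for the
    reversible chain P = D^{-1} W built from symmetric nonnegative W."""
    d = W.sum(axis=1)
    pi = d / d.sum()                      # stationary distribution
    P = W / d[:, None]
    # P is similar to the symmetric matrix D^{-1/2} W D^{-1/2}, so its eigenvalues are real.
    S = W / np.sqrt(np.outer(d, d))
    eigs = np.linalg.eigvalsh(S)          # ascending; the largest is 1
    gap = 1.0 - eigs[-2]
    var = pi @ (f - pi @ f) ** 2
    dirichlet = 0.5 * np.sum(pi[:, None] * P * (f[:, None] - f[None, :]) ** 2)
    return var, dirichlet, gap

rng = np.random.default_rng(0)
W = rng.uniform(size=(8, 8))
W = W + W.T                               # symmetric positive weights
f = rng.standard_normal(8)
var, dirichlet, gap = poincare_check(W, f)
print(var, dirichlet / gap)               # Poincaré: var <= dirichlet / gap
```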

a bunch of red and white balls in the sky

Alt: A lot of red and white Pokeballs falling from the sky

🧩 New week, time for our weₐᵉkly quiz! Today, another thing a bit random: Pokémon! Ash and Barry want to catch 'em all: all of them. You know, Pikachu, Jigglypuff, err... Charmander? It's been a while.

So, n Pokémon to catch, and no idea how long it'll take. Gotta help them out! #WeaeklyQuiz

1/

10.03.2025 09:28

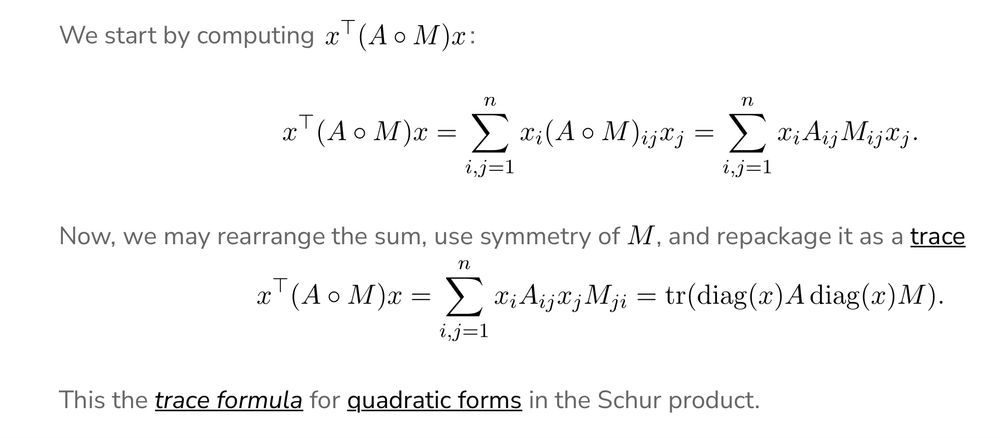

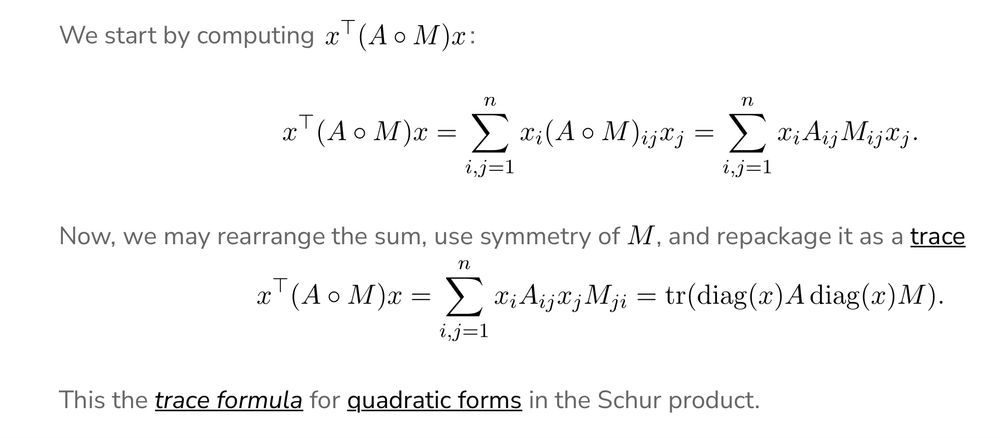

We start by computing $x^\top (A\circ M)x$: $$x^\top (A\circ M)x = \sum_{i,j=1}^n x_i (A\circ M)_{ij} x_j = \sum_{i,j=1}^n x_i A_{ij} M_{ij} x_j.$$ Now, we may rearrange the sum, use the symmetry of $M$, and repackage it as a trace: $$x^\top (A\circ M)x = \sum_{i,j=1}^n x_i A_{ij} x_j M_{ji} = \operatorname{tr}(\operatorname{diag}(x) A \operatorname{diag}(x) M).$$ This is the trace formula for quadratic forms in the Schur product.

Ack! Typesetting glitch. It was meant to be diag(x) A diag(x) M

25.02.2025 15:45
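
A quick numerical check of the trace formula, as a sketch assuming NumPy. $A$ can be any square matrix, but $M$ must be symmetric for the rearrangement step.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
A = rng.standard_normal((n, n))
B = rng.standard_normal((n, n))
M = B + B.T                       # the formula uses the symmetry of M
x = rng.standard_normal(n)

lhs = x @ (A * M) @ x             # A * M is the entrywise (Schur) product
rhs = np.trace(np.diag(x) @ A @ np.diag(x) @ M)
print(lhs, rhs)
```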

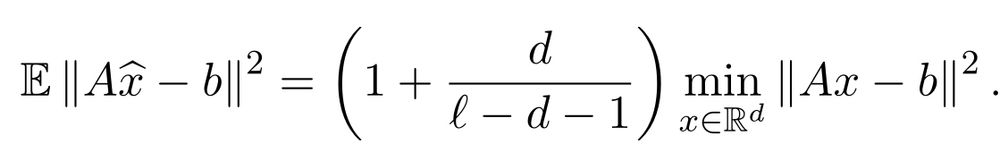

Proof of the Schur product theorem: The Kronecker product $A\otimes M$ of two $n\times n$ psd matrices is psd. The entrywise product $A\circ M$ is the principal submatrix of $A\otimes M$ whose rows and columns are indexed by $i + n(i-1)$ for $i = 1,\ldots,n$: $$(A\circ M)_{ij} = (A\otimes M)_{i+n(i-1),\, j+n(j-1)} \quad \text{for } i,j = 1,\ldots,n.$$ All principal submatrices of a psd matrix are psd, so $A\circ M$ is psd.

New blog post with four proofs of the Schur product theorem. Do you know a fifth? www.ethanepperly.com/index.php/20...

25.02.2025 03:23
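
A numerical illustration of the submatrix identity, assuming NumPy and 0-based indexing, under which the index $i + n(i-1)$ becomes $i(n+1)$ for $i = 0, \ldots, n-1$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4
G = rng.standard_normal((n, n))
H = rng.standard_normal((n, n))
A, M = G @ G.T, H @ H.T               # two psd matrices

K = np.kron(A, M)                     # n^2 x n^2 Kronecker product, psd
idx = np.arange(n) * (n + 1)          # 0-based form of the indices i + n(i - 1)
sub = K[np.ix_(idx, idx)]             # principal submatrix on those rows/columns
print(np.allclose(sub, A * M))        # True: it is the Schur product A ∘ M
print(np.linalg.eigvalsh(A * M).min() > -1e-10)  # True: A ∘ M is psd
```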

Note to Self: How Accurate is Sketch and Solve? – Ethan N. Epperly

New blog post up! In it, I look at the question: how accurate is the sketch-and-solve method for least squares? A standard bound suggests the residual is within a 1 + O(η) factor of optimal for an embedding of distortion η. But this isn't the correct answer! www.ethanepperly.com/index.php/20...

14.02.2025 19:22
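
A minimal sketch for experimenting with the question, assuming NumPy, a Gaussian embedding with $\mathcal{N}(0, 1/d)$ entries, and arbitrary problem sizes; `sketch_and_solve` is a name introduced here. It reports the ratio $\|A\hat{x} - b\|/\|Ax_\star - b\|$ between the sketch-and-solve residual and the optimal one.

```python
import numpy as np

def sketch_and_solve(A, b, d, rng):
    """Solve min ||S(Ax - b)|| for a d x m Gaussian embedding S with N(0, 1/d) entries."""
    m = A.shape[0]
    S = rng.standard_normal((d, m)) / np.sqrt(d)
    x_hat, *_ = np.linalg.lstsq(S @ A, S @ b, rcond=None)
    return x_hat

rng = np.random.default_rng(0)
m, n, d = 2000, 20, 200
A = rng.standard_normal((m, n))
b = rng.standard_normal(m)
x_opt, *_ = np.linalg.lstsq(A, b, rcond=None)
x_hat = sketch_and_solve(A, b, d, rng)
ratio = np.linalg.norm(A @ x_hat - b) / np.linalg.norm(A @ x_opt - b)
print(ratio)  # residual suboptimality factor (never below 1)
```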

Oooo can you share?

31.12.2024 03:34

Delightful little tale by Nick Trefethen: people.maths.ox.ac.uk/trefethen/ba...

16.12.2024 06:25

My Favorite Proof of the Cauchy–Schwarz Inequality – Ethan N. Epperly

What is your favorite proof of the Cauchy–Schwarz inequality? I wrote about my favorite proof, which uses matrix theory, in a new blog post. Check it out! Also included: a matrix-theoretic proof of Jensen’s inequality for 1/x www.ethanepperly.com/index.php/20...

12.12.2024 15:40
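
For orientation, here is one standard matrix-theoretic argument (not necessarily the one in the post). For vectors $x, y$, the $2\times 2$ Gram matrix is psd, and a psd matrix has nonnegative determinant because the determinant is the product of its nonnegative eigenvalues:

$$
G = \begin{bmatrix} x^\top x & x^\top y \\ y^\top x & y^\top y \end{bmatrix} = \begin{bmatrix} x & y \end{bmatrix}^\top \begin{bmatrix} x & y \end{bmatrix} \succeq 0
\quad\Longrightarrow\quad
\det G = \|x\|^2 \|y\|^2 - (x^\top y)^2 \ge 0 .
$$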

Low-Rank Approximation Toolbox – Ethan N. Epperly

Sorry! This is definitely one of the most "advanced" posts I've written on my blog so far. The earlier posts in my "low-rank approximation toolbox" series might be some help, at least! www.ethanepperly.com/index.php/ca...

09.12.2024 17:58

Low-Rank Approximation Toolbox: The Gram Correspondence – Ethan N. Epperly

Did you know that randomized Nyström approximation of a psd matrix A is equivalent to running the randomized SVD on $A^{1/2}$? This and other surprising facts in this week's blog post on the "Gram correspondence" www.ethanepperly.com/index.php/20...

09.12.2024 17:15
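
A small numerical check of the claim, as a sketch assuming NumPy and a Gaussian test matrix $\Omega$ shared by both methods. The Nyström approximation is $A\Omega(\Omega^\top A\Omega)^\dagger\Omega^\top A$; the randomized SVD of $B = A^{1/2}$ gives $\hat{B} = QQ^\top B$, with $Q$ an orthonormal basis for $\operatorname{range}(B\Omega)$. Here "equivalent" is checked in the sense that $\hat{B}^\top\hat{B}$ equals the Nyström approximation.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 8, 3
G = rng.standard_normal((n, n))
A = G @ G.T                                  # psd test matrix
Omega = rng.standard_normal((n, k))          # test matrix shared by both methods

# Randomized Nyström approximation of A.
Y = A @ Omega
nystrom = Y @ np.linalg.pinv(Omega.T @ Y) @ Y.T

# Randomized SVD (range finder) applied to B = A^{1/2} with the same Omega.
w, V = np.linalg.eigh(A)
B = V @ np.diag(np.sqrt(np.clip(w, 0, None))) @ V.T
Q, _ = np.linalg.qr(B @ Omega)
B_hat = Q @ (Q.T @ B)                        # rank-k approximation of B

# The Gram matrix of the RSVD approximation reproduces Nyström.
print(np.allclose(B_hat.T @ B_hat, nystrom))
```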

This whole “advent of research” series of posts by David is really excellent, but I love this one in particular

08.12.2024 01:10

Randomized Kaczmarz is Asymptotically Unbiased for Least Squares – Ethan N. Epperly

New blog post up! The randomized Kaczmarz algorithm doesn’t converge for inconsistent systems of linear equations, but—as an estimator for the least-squares solution—it does have an exponentially decreasing bias www.ethanepperly.com/index.php/20...

05.12.2024 17:22
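
A short calculation showing where an exponentially decaying bias can come from, assuming the classic squared-row-norm sampling and $A$ with full column rank (a sketch, not necessarily the post's argument). With rows $a_i^\top$, one step picks row $i$ with probability $\|a_i\|^2/\|A\|_F^2$ and sets $x_{t+1} = x_t + \frac{b_i - a_i^\top x_t}{\|a_i\|^2} a_i$. Averaging over $i$:

$$
\mathbb{E}[x_{t+1} \mid x_t] = x_t + \sum_i \frac{\|a_i\|^2}{\|A\|_F^2} \cdot \frac{b_i - a_i^\top x_t}{\|a_i\|^2}\, a_i = x_t + \frac{A^\top (b - A x_t)}{\|A\|_F^2}.
$$

So the mean iterates follow gradient descent on $\tfrac12\|Ax-b\|^2$ with step size $1/\|A\|_F^2$. Using the normal equations $A^\top b = A^\top A x_\star$ for the least-squares solution $x_\star$:

$$
\mathbb{E}[x_t] - x_\star = \Bigl(I - \frac{A^\top A}{\|A\|_F^2}\Bigr)^{t} (x_0 - x_\star),
\qquad
\|\mathbb{E}[x_t] - x_\star\| \le \Bigl(1 - \frac{\sigma_{\min}(A)^2}{\|A\|_F^2}\Bigr)^{t} \|x_0 - x_\star\|.
$$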

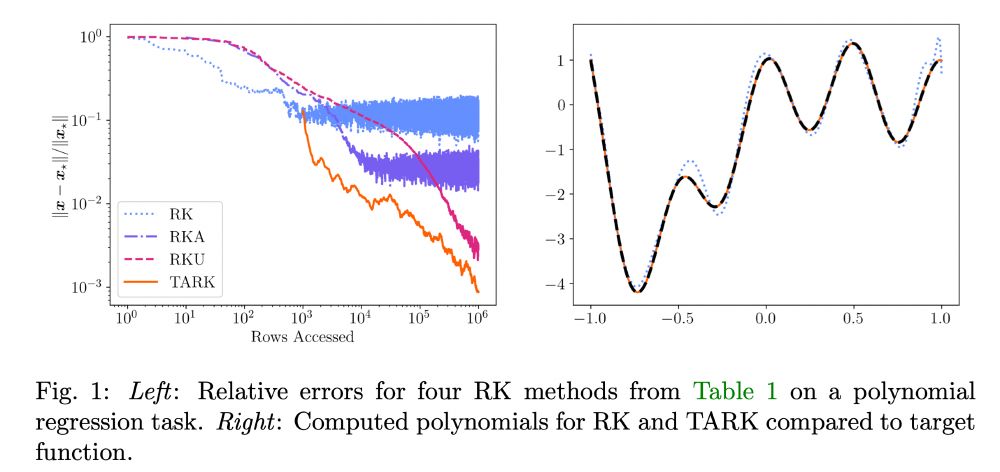

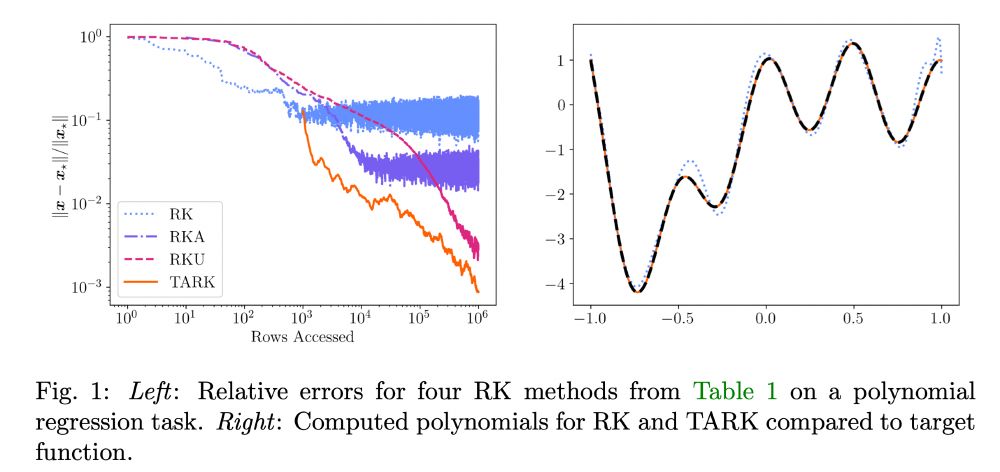

Error for different randomized Kaczmarz methods applied to a least-squares problem. Tail-averaged randomized Kaczmarz (TARK) outcompetes the existing methods

New paper out with Gil Goldshlager and Rob Webber! In it, we show that *tail averaging* can be used to improve the accuracy of the randomized Kaczmarz method for solving least-squares problems. The resulting method, TARK, outcompetes other row-access methods for least squares

02.12.2024 17:05
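
The post doesn't spell out TARK's details (for instance, how many initial iterates to discard), so here is only a minimal sketch of the generic tail-averaging idea, assuming NumPy and squared-row-norm sampling: run randomized Kaczmarz and average the iterates after a burn-in period. `tail_averaged_rk` and `burn_in` are hypothetical names, not the paper's code.

```python
import numpy as np

def tail_averaged_rk(A, b, num_iters, burn_in, seed=0):
    """Randomized Kaczmarz (squared-row-norm sampling) returning
    (average of iterates after burn_in steps, last iterate)."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    row_norms_sq = np.sum(A**2, axis=1)
    rows = rng.choice(m, size=num_iters, p=row_norms_sq / row_norms_sq.sum())
    x = np.zeros(n)
    x_sum = np.zeros(n)
    for t, i in enumerate(rows):
        x += (b[i] - A[i] @ x) / row_norms_sq[i] * A[i]
        if t >= burn_in:
            x_sum += x                        # accumulate the tail iterates
    return x_sum / (num_iters - burn_in), x

rng = np.random.default_rng(1)
A = rng.standard_normal((500, 20))
b = A @ rng.standard_normal(20) + 0.1 * rng.standard_normal(500)  # inconsistent system
x_ls, *_ = np.linalg.lstsq(A, b, rcond=None)
x_avg, x_last = tail_averaged_rk(A, b, num_iters=20000, burn_in=5000)
print("tail average error:", np.linalg.norm(x_avg - x_ls))
print("last iterate error:", np.linalg.norm(x_last - x_ls))
```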

Cross-posting this - please join us!

Mailing List link: groups.google.com/g/internatio...

YouTube Channel link: www.youtube.com/@MonteCarloS...

30.11.2024 20:51

The New York Theory Day is a workshop aimed to bring together the theoretical computer science community in the New York metropolitan area for a day of interaction and discussion. The Theory Da...

A reminder about NY Theory Day in a week! Fri Dec 6th! Talks by Amir Abboud, Sanjeev Khanna, Rotem Oshman, and Ron Rothblum! At NYU Tandon!

sites.google.com/view/nyctheo...

Registration is free, but please register for building access.

See you all there!

30.11.2024 17:04

Just created the Starter Pack for Optimization Researchers to help you on your journey into optimization! 🚀

Did I miss anyone? Tag them or let me know what to add!

go.bsky.app/VjpyyRw

23.11.2024 23:59

The first d columns of a Haar random matrix from the orthogonal group O(n), yes

23.11.2024 18:07

A uniformly random matrix with orthonormal rows would be another example

23.11.2024 17:31
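
One standard way to sample such matrices, sketched with NumPy: take the QR factorization of a Gaussian matrix and fix the signs of $Q$'s columns using the diagonal of $R$, which makes $Q$ exactly Haar distributed on $O(n)$. Its first $d$ columns (or first $d$ rows) then give a uniformly random matrix with orthonormal columns (or rows). `haar_orthogonal` is a name introduced here.

```python
import numpy as np

def haar_orthogonal(n, rng):
    """Sample a Haar-distributed matrix from O(n) via QR of a Gaussian matrix."""
    G = rng.standard_normal((n, n))
    Q, R = np.linalg.qr(G)
    # Multiply column j by sign(R_jj) to remove the sign ambiguity of QR.
    return Q * np.sign(np.diag(R))

rng = np.random.default_rng(0)
n, d = 6, 3
Q = haar_orthogonal(n, rng)
cols = Q[:, :d]   # n x d, uniformly random orthonormal columns
rows = Q[:d, :]   # d x n, uniformly random orthonormal rows
print(np.allclose(cols.T @ cols, np.eye(d)), np.allclose(rows @ rows.T, np.eye(d)))
```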

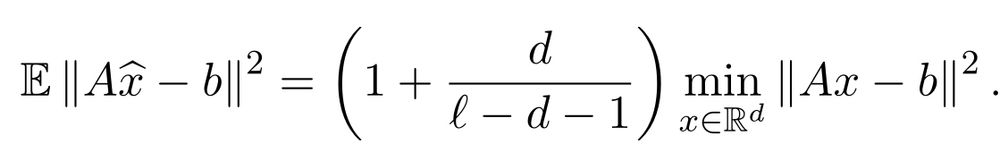

New blog post up presenting some beautiful *exact formulas* for sketched least squares with a Gaussian embedding. These formulas appear to have been published only as recently as 2020; see the post for details! www.ethanepperly.com/index.php/20...

21.11.2024 16:59
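
For flavor, here is one exact identity of this type, derived under assumptions stated here (the post may state its formulas differently): $A \in \mathbb{R}^{m\times n}$ has full column rank, $S \in \mathbb{R}^{d\times m}$ has i.i.d. $\mathcal{N}(0,1/d)$ entries with $d \ge n+2$, $x_\star$ is the least-squares solution with residual $r = b - Ax_\star$, and $\hat{x}$ minimizes $\|S(Ax-b)\|$. Because $r \perp \operatorname{range}(A)$ and $S$ is Gaussian, $Sr$ is independent of $SA$; combining this with $\mathbb{E}[W^{-1}] = \Sigma^{-1}/(d-n-1)$ for a Wishart matrix $W \sim \mathcal{W}_n(d, \Sigma)$ gives

$$
\hat{x} - x_\star = (SA)^{\dagger} S r,
\qquad
\mathbb{E}\,\|A(\hat{x} - x_\star)\|^2 = \frac{n}{d-n-1}\,\|r\|^2,
\qquad
\mathbb{E}\,\|A\hat{x} - b\|^2 = \frac{d-1}{d-n-1}\,\|r\|^2 .
$$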

Five Interpretations of Kernel Quadrature – Ethan N. Epperly

Very excited to be attending #NeurIPS2023 next week where I’ll be presenting my work “Kernel quadrature with randomly pivoted Cholesky” with Elvira Moreno. I’ve written a little blog post to explain what kernel quadrature is and what our approach is to it!

05.12.2023 22:19
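
A minimal sketch, assuming NumPy, of the randomly pivoted Cholesky step used to select quadrature nodes from a finite candidate set, followed by one common choice of kernel quadrature weights for the empirical measure on those candidates ($K_{SS}\,w = z_S$, with $z$ the kernel mean embedding). The kernel, bandwidth, candidate points, and integrand are illustrative assumptions, and `rp_cholesky` is a name introduced here; see the paper for the actual method.

```python
import numpy as np

def rp_cholesky(K, k, rng):
    """Randomly pivoted Cholesky: returns F (n x k) with K ≈ F F^T, and the pivots."""
    n = K.shape[0]
    F = np.zeros((n, k))
    diag = np.diag(K).astype(float)            # diagonal of the residual K - F F^T
    pivots = []
    for j in range(k):
        probs = np.clip(diag, 0, None)
        s = rng.choice(n, p=probs / probs.sum())  # pivot ∝ residual diagonal
        pivots.append(s)
        g = K[:, s] - F[:, :j] @ F[s, :j]      # residual column s
        F[:, j] = g / np.sqrt(g[s])
        diag -= F[:, j] ** 2
    return F, np.array(pivots)

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(500, 2))          # candidate points (hypothetical)
sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
bandwidth = 0.5                                # Gaussian kernel bandwidth (assumption)
K = np.exp(-sq_dists / (2 * bandwidth**2))
F, S = rp_cholesky(K, k=20, rng=rng)

# Weights for integrating against the empirical measure on X.
z = K[S, :].mean(axis=1)                       # mean embedding at the nodes
w = np.linalg.solve(K[np.ix_(S, S)], z)

def integrand(pts):                            # hypothetical test function
    return np.cos(pts[:, 0]) * np.sin(pts[:, 1] + 1.0)

print("quadrature:", w @ integrand(X[S]), " empirical mean:", integrand(X).mean())
```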
