AGI might be here soon, but what can you actually do about it? I'm writing a new guide on that. Stay tuned for updates, but here's a summary:

29.04.2025 17:19

If you want to switch, speak to @80000Hours one-on-one and they can help you with planning & introductions.

80000hours.org/speak-with-us/

And follow the links in the full post for more:

benjamintodd.substack.com/p/work-on-a...

7/ So if you can find a role that helps over the next 5-10 years, that seems like the highest expected-impact thing you can do.

Though I don't think it's for everyone:

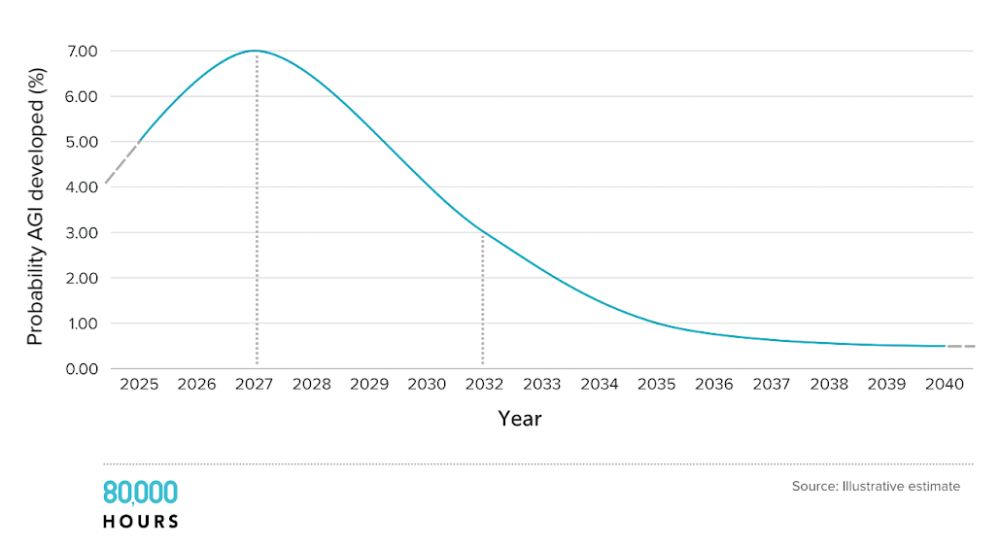

6/ The chance of building powerful AI is unusually high between now and around 2030, making the next 5 years especially critical.

If AGI emerges in the next 5 years, you’ll be part of one of the most important transitions in human history. If not, you’ll have time to return to your previous path.

It's often possible to transition with just ~100h of reading and speaking to people in the field. You don't need to be technical – there are many other ways to help.

29.04.2025 16:15

5/ A few years ago it was much harder to help, but today there are more and more concrete jobs working on these issues.

4/ Under 10,000 people work full-time reducing important aspects of these risks – tiny compared to the millions working on established issues like climate change, or the number of people trying to deploy the technology as quickly as possible.

3/ These accelerations bring a range of major risks, not just misalignment, but also concentration of power, new weapons of mass destruction, great power conflict, treatment of digital beings, and more.

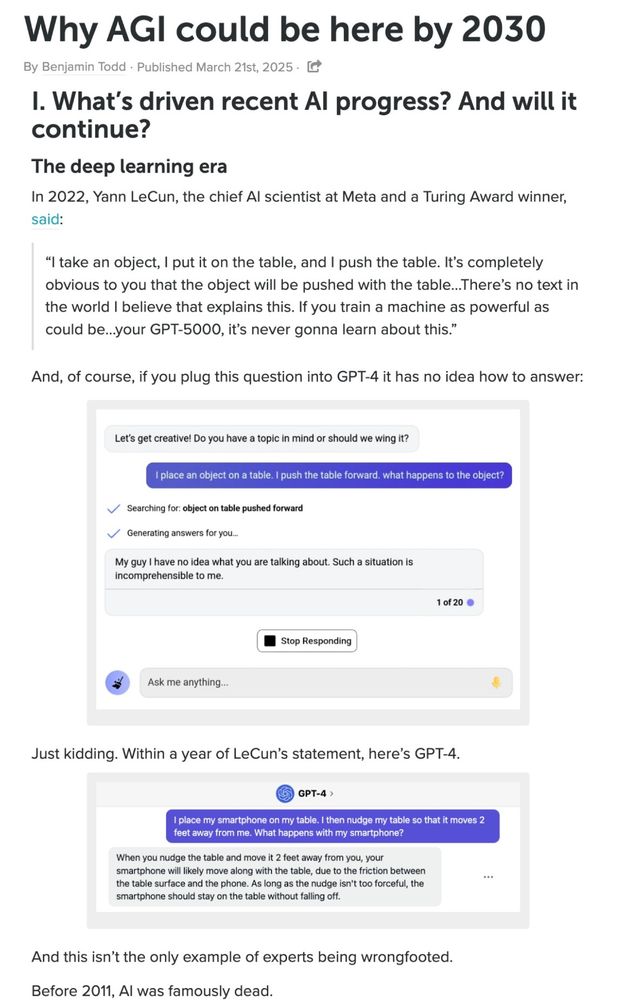

2/ Lots of people hype AI as 'transformative' but few internalise how crazy it could really be. There are three different types of possible acceleration, and they're much more grounded in empirical research than they were a couple of years ago.

1/ There's a significant chance of AI systems that could accelerate science and automate many skilled jobs within five years.

80000hours.org/agi/guide/w...

Why to quit your job and work on risks from AI (the short version) 🧵

29.04.2025 16:14

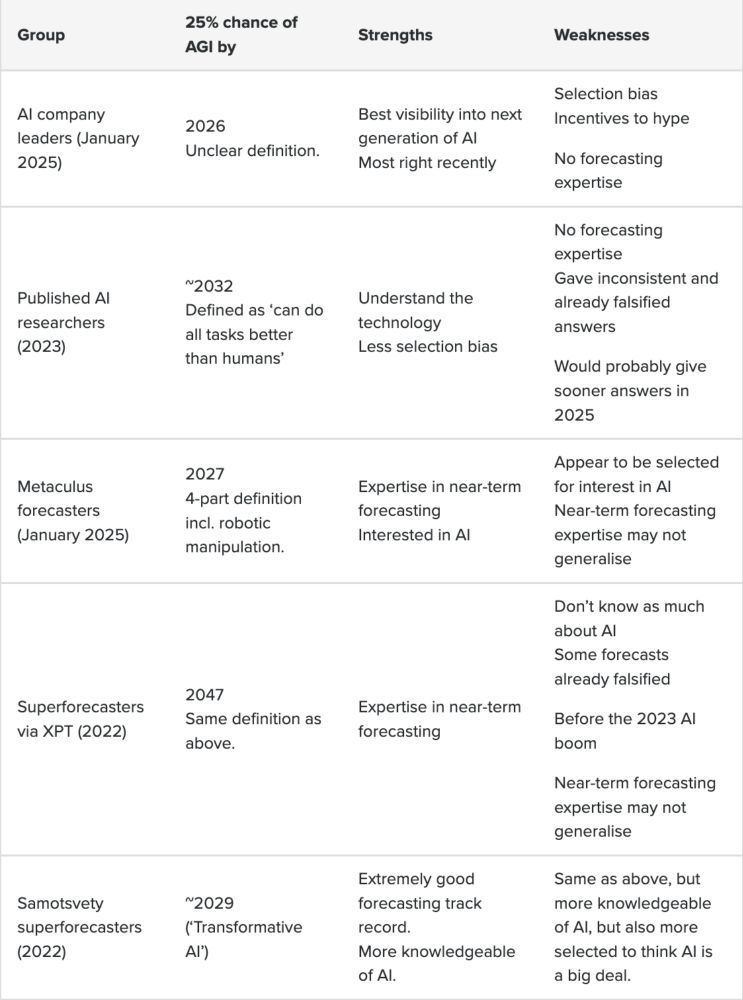

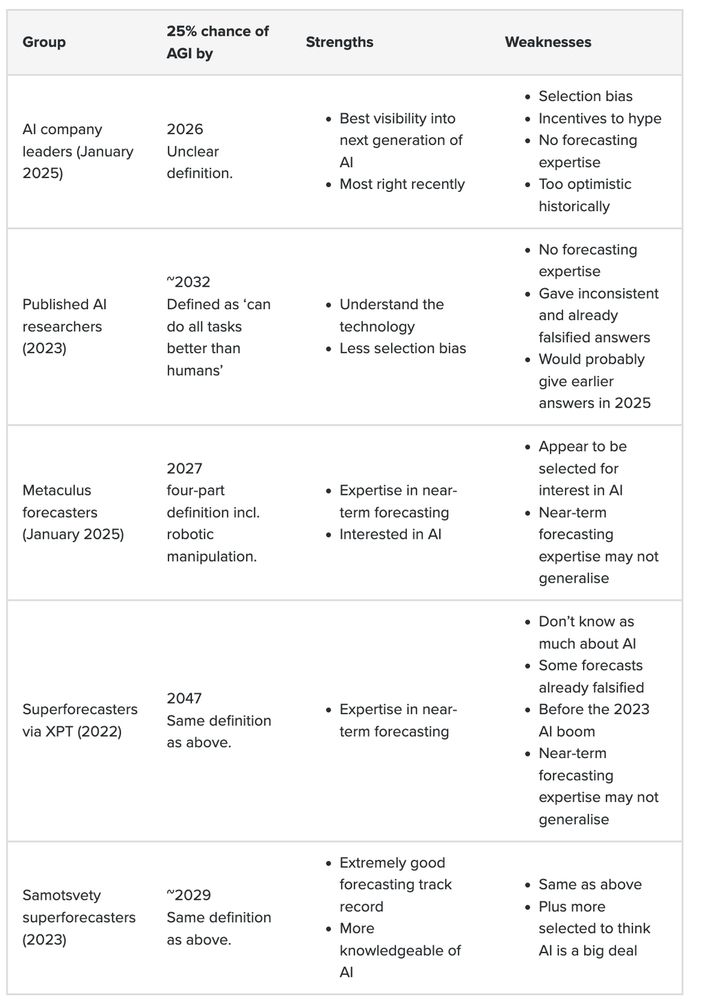

Combining the groups, I think it's fair to say AGI by 2030 is within the bounds of expert opinion.

There's a lot of uncertainty, but high uncertainty means we can neither rule it out nor rule it in.

And every group now expects it sooner than it used to.

Full post:

80000hours.org/2025/03/when...

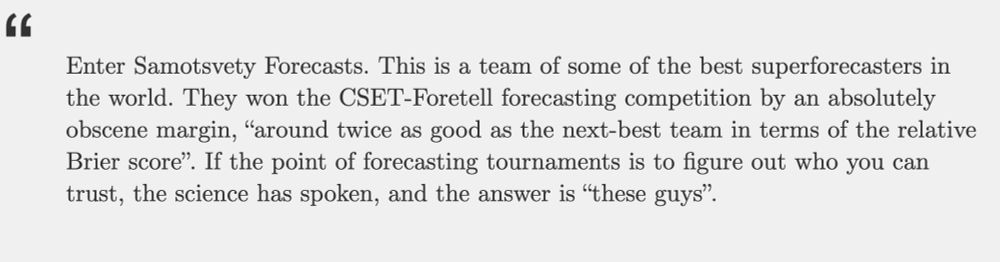

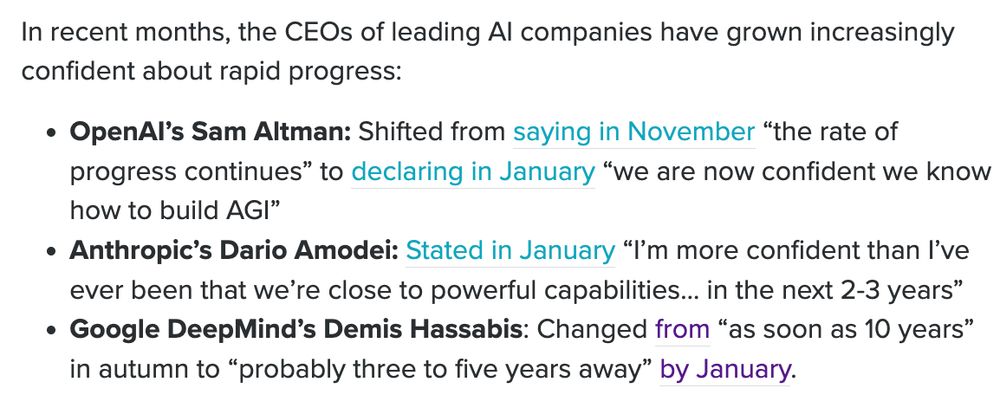

5/ Samotsvety are a team of some of the most elite forecasters out there, who also know more about AGI than the XPT superforecasters.

In 2023, they gave shorter estimates: 25% by 2029.

This was also down vs. their 2022 forecast.

But unfortunately this still used the terrible Metaculus definition.

4/ XPT surveyed 33 superforecasters in 2022.

They gave much longer answers: 25% chance by 2047.

But 2022 was before the great timeline shortening.

And their predictions about compute have already been falsified, and they don't seem to know that much about AI.

More:

asteriskmag.com/issues/03/th...

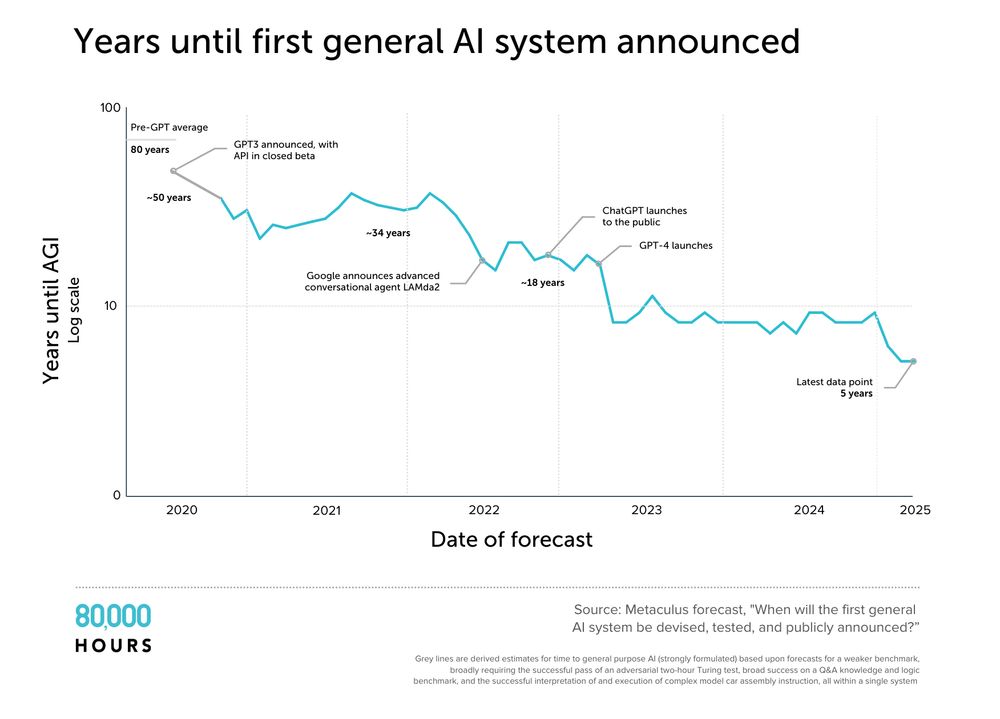

3/ So what about forecasting experts?

The Metaculus AGI question has 1,000+ forecasts.

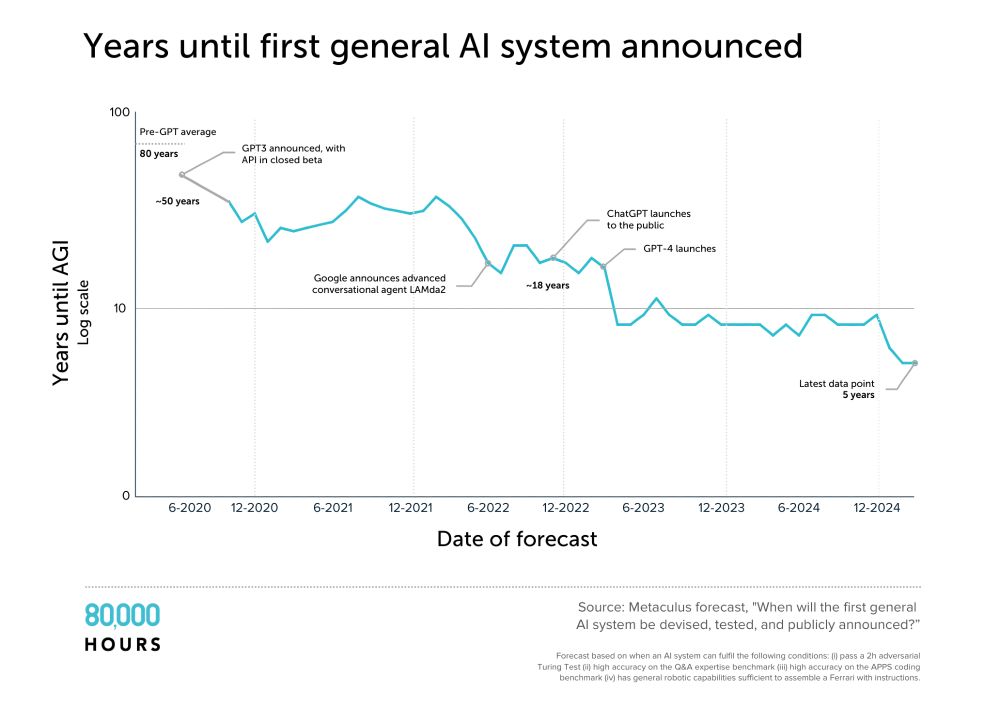

The median forecast has fallen from 50 years away to 5.

Unfortunately, the definition is both too stringent for AGI, and not stringent enough. So I'm skeptical of the specific numbers.

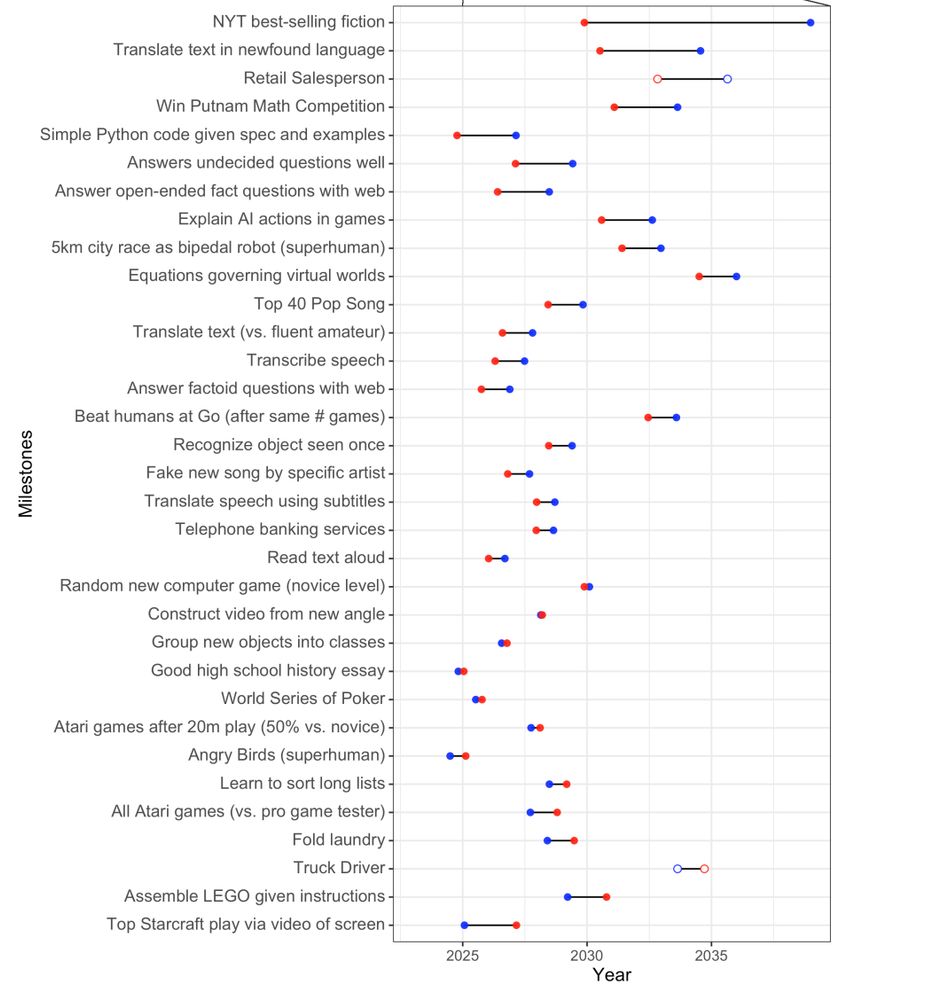

In 2022 (blue), they forecast AI wouldn't be able to write simple Python code until 2027.

And even in 2023 (red), they predicted 2025!

They gave much longer answers for "full automation of labour" for unclear reasons.

Also AI expertise ≠ forecasting expertise.

2/ To reduce bias, we could look at a wider range of AI experts, such as the AI Impacts survey of thousands of published AI authors.

Median: 25% chance of AI better than humans at "all tasks" by 2032.

But this is from 2023, and their answers have historically been too pessimistic.

1/ First up, AI company leaders.

They tend to be the most bullish – predicting AGI in 2-5 years.

It's obvious why they might be biased.

But I don't think they should be totally ignored – they have the most visibility into next-gen capabilities.

(And they've been more right about recent progress.)

What can experts tell us about when AGI will arrive?

Maybe not much. Except that it's coming sooner than before.

I reviewed the 5 most relevant expert groups and what we can learn from them.

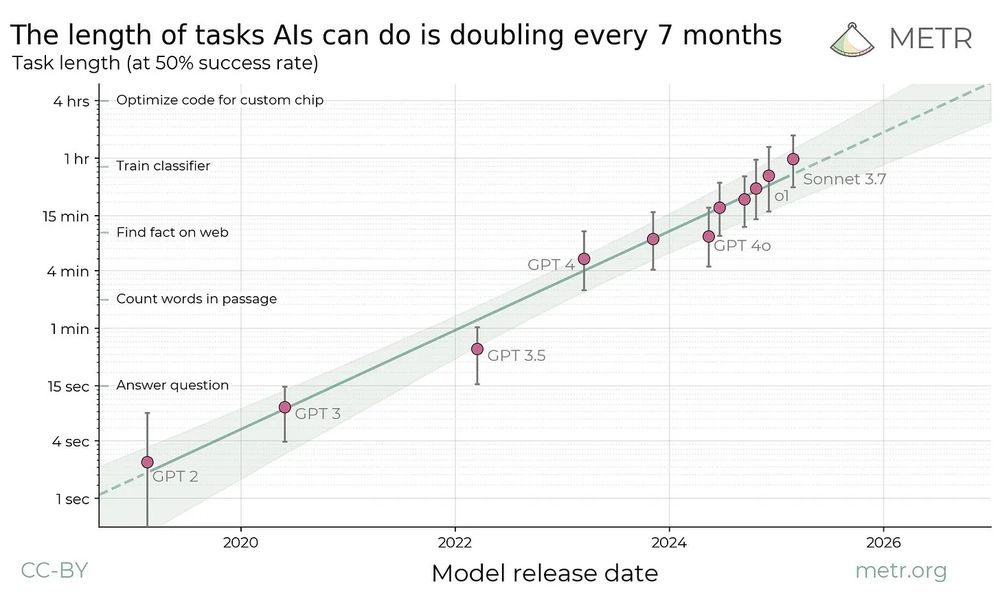

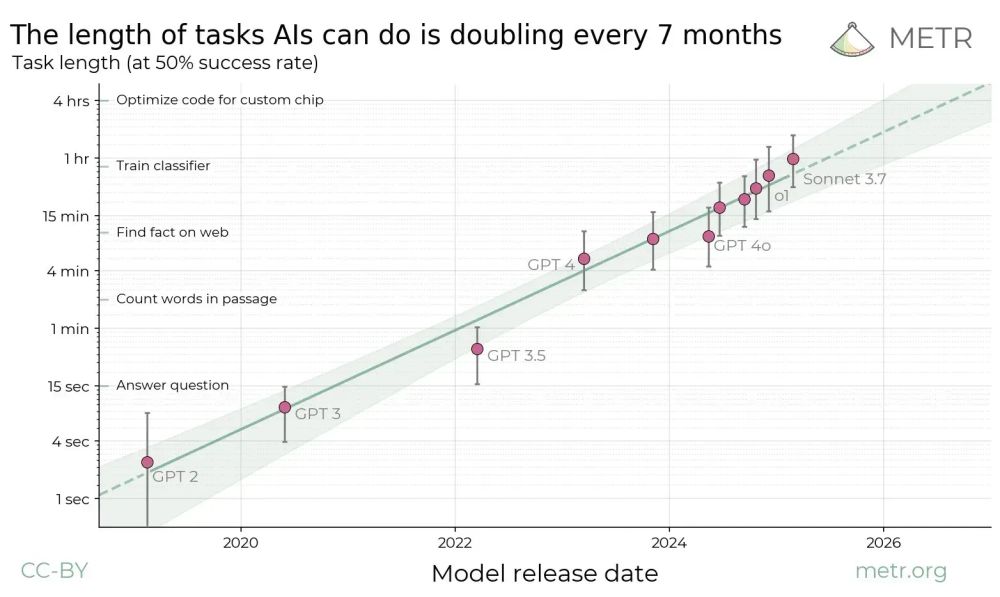

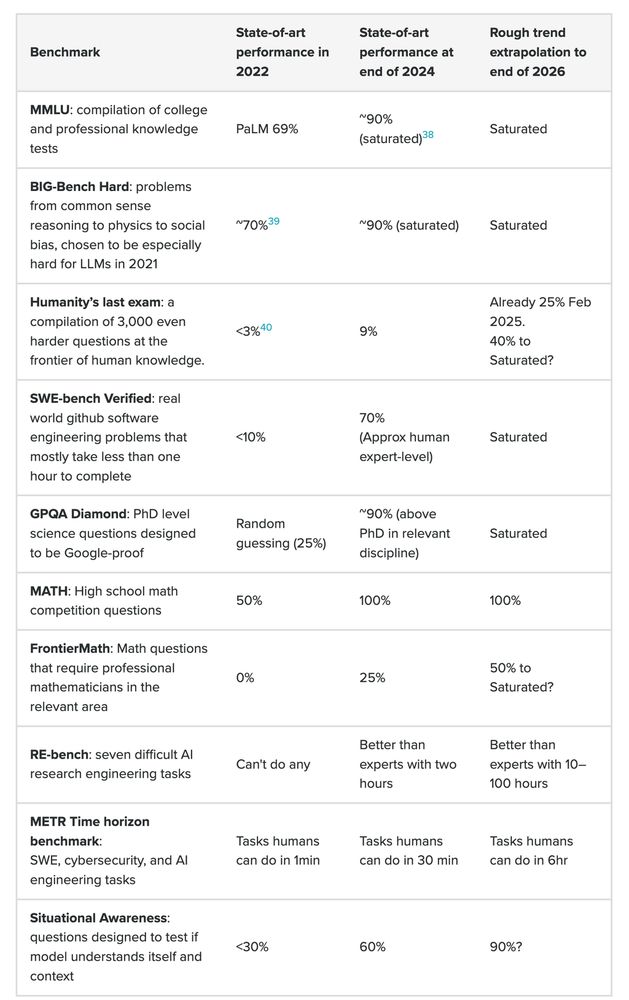

Projecting the trend forward:

In 2 years, AI can do many 1-day computer-use tasks

In 4 years, many 1-week tasks
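As a rough sketch of the arithmetic behind this kind of projection: assume the length of task AI can complete grows exponentially with a constant doubling time, starting from ~1-hour tasks today. The 8-hour working day and 40-hour working week are assumptions, not figures from the thread, and the helper names are hypothetical.

```python
import math

# Illustrative sketch only. Assumes the length of task AI can complete
# grows exponentially with a constant doubling time ("the trend continues").
WORKDAY_HOURS = 8.0    # assumption: one working day = 8 hours
WORKWEEK_HOURS = 40.0  # assumption: one working week = 40 hours


def horizon_hours(start_hours: float, months: float, doubling_months: float) -> float:
    """Task length (hours) reached after `months` if it doubles every `doubling_months`."""
    return start_hours * 2 ** (months / doubling_months)


def implied_doubling_months(start_hours: float, target_hours: float, months: float) -> float:
    """Doubling time needed to grow from `start_hours` to `target_hours` within `months`."""
    doublings = math.log2(target_hours / start_hours)
    return months / doublings


if __name__ == "__main__":
    start = 1.0  # starting point from the thread: ~1-hour tasks today

    # Doubling times implied by the two milestones in the projection
    day = implied_doubling_months(start, WORKDAY_HOURS, months=24)
    week = implied_doubling_months(start, WORKWEEK_HOURS, months=48)
    print(f"1h -> 1 workday in 2 years: doubling every {day:.1f} months")
    print(f"1h -> 1 workweek in 4 years: doubling every {week:.1f} months")

    # Forward projection using the doubling time implied by the first milestone
    for years in (2, 4):
        hours = horizon_hours(start, 12 * years, doubling_months=day)
        print(f"after {years} years: ~{hours:.0f}h tasks")
```

Under these assumptions, the two milestones imply the task horizon doubling roughly every 8 to 9 months; an 8-month doubling time gives 8-hour tasks after 2 years and 64-hour tasks after 4 years. Whether the trend actually holds is the open question the thread discusses.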

On Substack I argue we should expect the trend to continue, and discuss some limitations:

benjamintodd.substack.com/p/the-most-i...

Why has there been so little AI automation, despite great benchmark scores? This chart is a big part of the answer.

Models today can do tasks of up to 1 hour.

But real jobs mainly consist of tasks taking days or weeks.

So AI can answer questions but can't do real jobs.

But that's about to change.

My take: It's remarkably difficult to rule out AGI before 2030.

Not saying it's certain—just that it could happen with only an extension of current trends.

Full analysis here:

80000hours.org/agi/guide/w...

Other meaningful arguments against:

06.04.2025 15:13

The strongest counterargument?

Current AI methods might plateau on ill-defined, contextual, long-horizon tasks—which happens to be most knowledge work.

Without continuous breakthroughs, profit margins fall and investment dries up.

You can boil it down to whether this trend will continue:

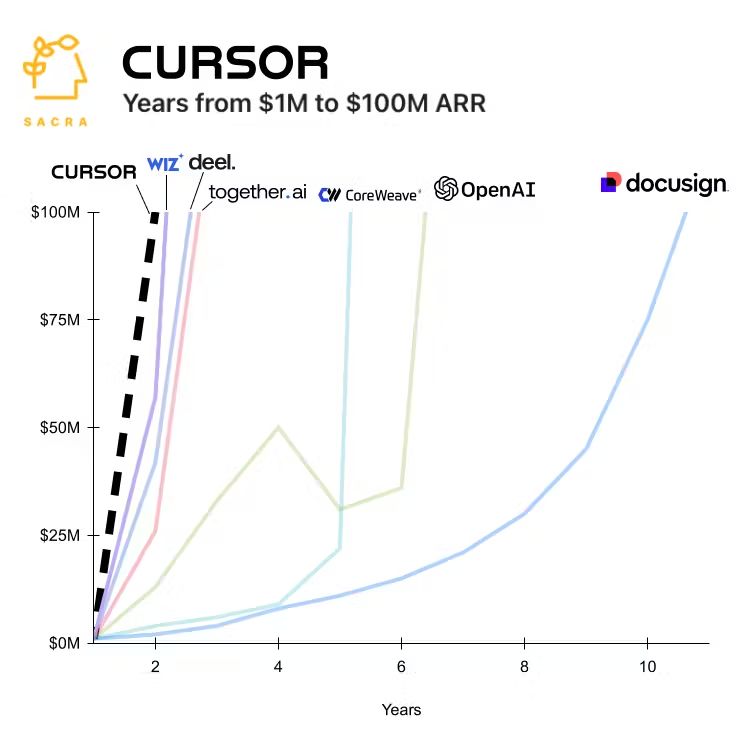

5. While real-world deployment faces many hurdles, AI is already very useful in virtual and verifiable domains:

• Software engineering & startups

• Scientific research

• AI development itself

These alone could drive massive economic impact and accelerate AI progress.

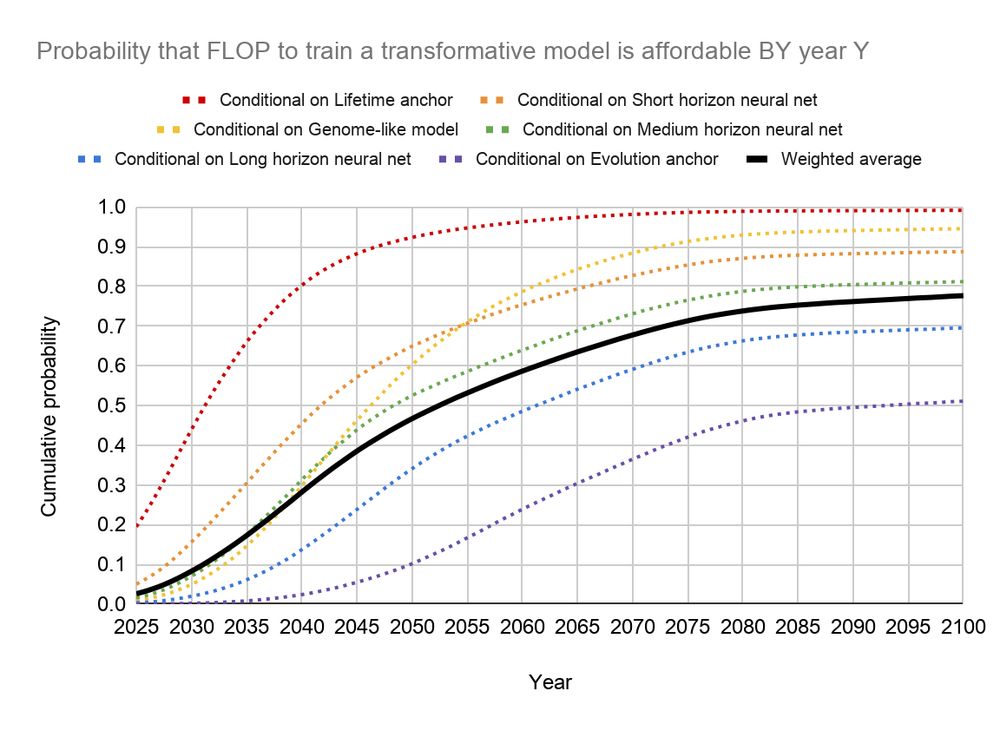

4. By 2030, AI training compute will far surpass estimates for the human brain.

If algorithms approach even a fraction of human learning efficiency, we'd expect human-level capabilities in at least some domains.

www.cold-takes.com/forecasting...

3. Expert forecasts have consistently moved earlier.

AI and forecasting experts now place significant probability on AGI-level capabilities pre-2030.

I remember when 2045 was considered optimistic.

80000hours.org/2025/03/whe...

2. Benchmark extrapolation suggests that by 2028 we'll see systems with superhuman coding and reasoning that can autonomously complete multi-week tasks.

All the major benchmarks ↓

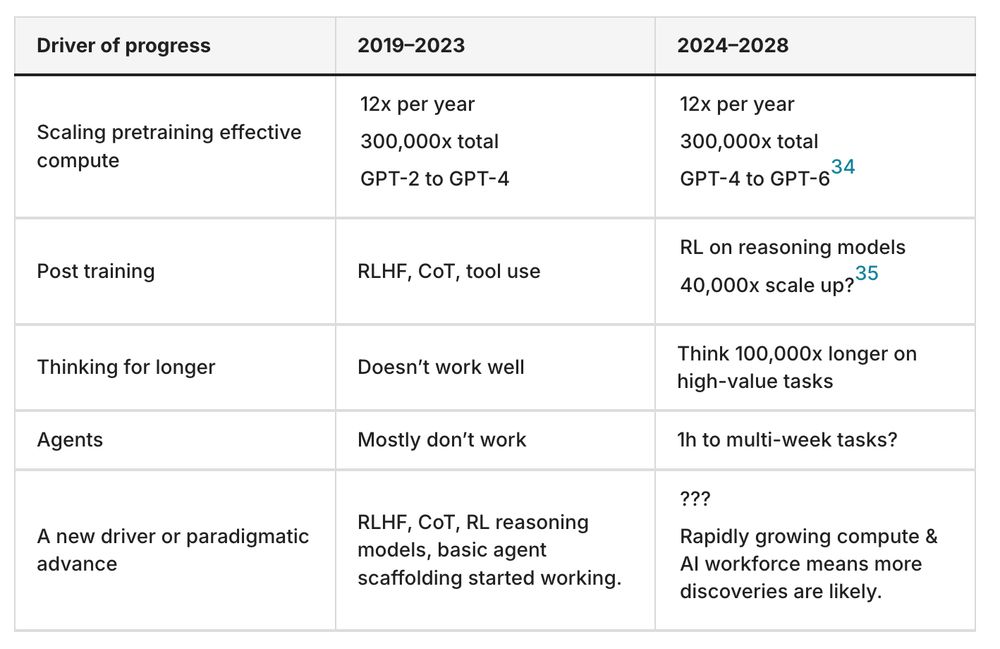

1. The four recent drivers of progress don't run into bottlenecks until at least 2028.

And with investment in compute and algorithms continuing to increase, new drivers are likely to be discovered.

AI CEOs claim AGI will be here in 2-5 years.

Is this just hype, or could they be right?

I spent weeks writing this new in-depth primer on the best arguments for and against.

Starting with the case for...🧵