Top AI experts say AI poses an extinction risk on par with nuclear war.

Prohibiting the development of superintelligence can prevent this risk.

We’ve just launched a new campaign to get this done.

12.09.2025 14:08 — 👍 5 🔁 4 💬 1 📌 95

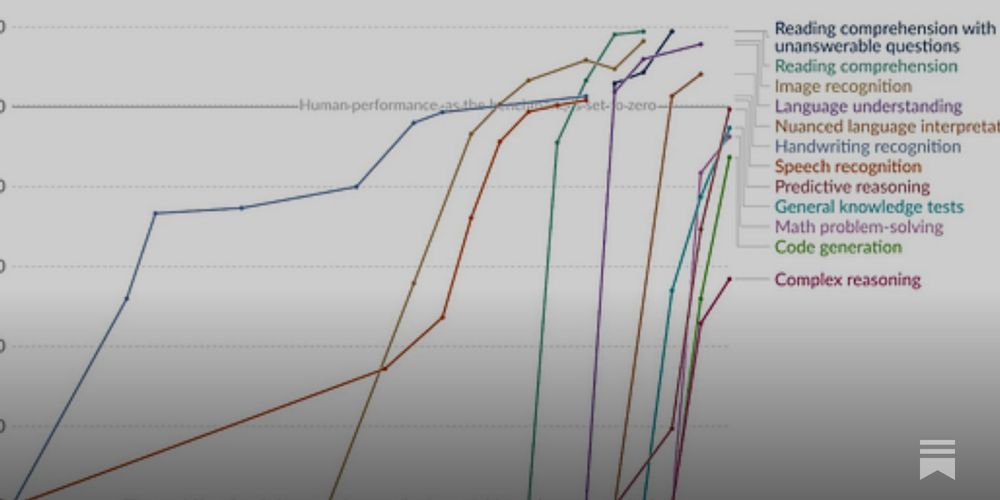

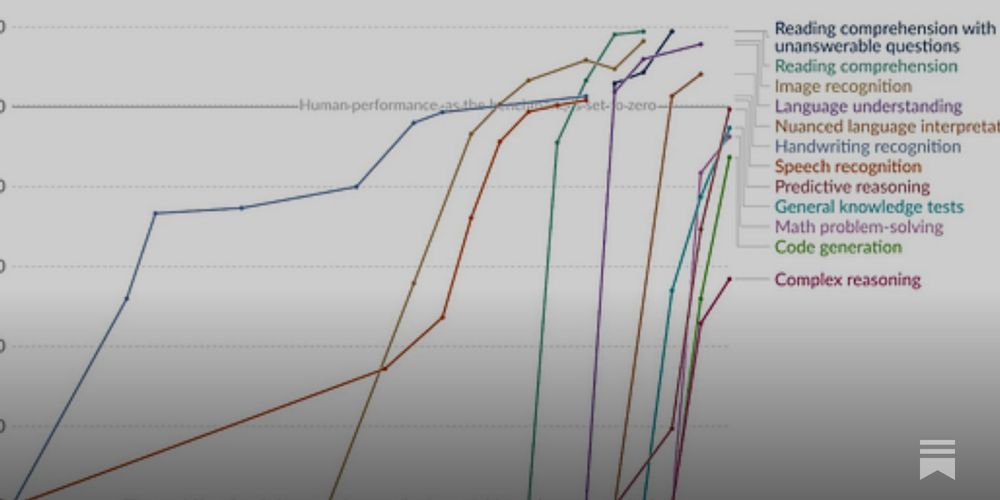

The AI plateau:

12.08.2025 01:07 — 👍 4 🔁 0 💬 0 📌 0

Artificial Guarantees 2: Judgment Day

The future is not set, nor are commitments made by AI companies.

We've been compiling a growing list of examples of AI companies saying one thing and doing the opposite:

controlai.news/p/art...

14.02.2025 16:07 — 👍 3 🔁 3 💬 0 📌 0

UK POLITICIANS DEMAND REGULATION OF POWERFUL AI

TODAY: Politicians across the UK political spectrum back our campaign for binding rules on dangerous AI development.

This is the first time a coalition of parliamentarians has acknowledged the extinction threat posed by AI.

1/6

06.02.2025 12:56 — 👍 13 🔁 4 💬 1 📌 3

Stargate-gate: Did Sam Altman Lie to President Trump?

Jobs, risks, and whether they have the money.

Did Sam Altman lie to President Trump?

What are the facts?

— Trump announced Stargate

— Elon Musk says they don’t have the money

— Nadella says his $80b is for Azure

— Trump doesn’t know if they have it

— Reporting suggests they may only have $52b

newsletter.tolgabilge.com/p/stargate-g...

01.02.2025 00:23 — 👍 3 🔁 0 💬 1 📌 0

Public Statement | ControlAI

At ControlAI we are fighting to keep humanity in control.

We've just launched an open call for binding rules on dangerous AI development.

Top AI scientists, and even the CEOs of the biggest AI companies themselves, have warned that AI threatens human extinction.

The time for action is now. Sign below 👇

controlai.com/public...

24.01.2025 18:21 — 👍 2 🔁 1 💬 0 📌 0

Artificial Guarantees

Shifting baselines and shattered promises.

Know them by their deeds, not their words.

AI companies often say one thing and do the opposite. We’ve been watching closely, and have been compiling a list of examples:

controlai.news/p/art...

16.01.2025 18:11 — 👍 5 🔁 3 💬 1 📌 1

We need a treaty to establish common red lines on AI.

AI development is advancing rapidly, and we may soon have AI systems that surpass humans in intelligence, yet we have no way to control them. Our very existence is at stake.

This could be the biggest deal in history.

🧵

14.01.2025 17:40 — 👍 4 🔁 1 💬 2 📌 0

Google DeepMind's Chief AGI Scientist says there's a 50% chance that AGI will be built in the next 3 years.

This was in reference to a prediction he made back in 2011. He also thought there was a 5 to 50% chance of human extinction within a year of human-level AI being built!

10.01.2025 17:47 — 👍 6 🔁 2 💬 1 📌 0

The Unknown Future: Predicting AI in 2025

Artificial General Intelligence, workforce disruption, and dangerous capabilities.

The New Year is upon us, and it is a time when many are making predictions about how AI will continue to develop.

We've collected some predictions for AI in 2025, by Elon Musk, Sam Altman, Dario Amodei, Gary Marcus, and Eli Lifland.

Get them in our free weekly newsletter 👇

controlai.news/p/the...

09.01.2025 17:18 — 👍 4 🔁 1 💬 0 📌 0

Last year, OpenAI's chief lobbyist said that OpenAI is not aiming to build superintelligence.

Her boss, Sam Altman, is now bragging about how OpenAI is rushing to create superintelligence.

07.01.2025 14:21 — 👍 7 🔁 3 💬 1 📌 0

ControlAI Weekly Roundup #9: Time to Unplug?

Voters back an AI policy focus on preventing extreme risks, Meta asks the government to block OpenAI from switching to a for-profit, and Eric Schmidt warns there’s a time to consider unplugging AI systems.

📩 ControlAI Weekly Roundup: Time to Unplug?

1️⃣ Voters back AI policy focus on preventing extreme risks

2️⃣ Meta asks the government to block OpenAI's for-profit switch

3️⃣ Eric Schmidt warns there's a time to unplug AI

Get our free newsletter:

controlai.news/p/con...

19.12.2024 19:53 — 👍 3 🔁 1 💬 0 📌 0

One of the weird things about the world today is that the idea of 'AGI' is now regularly being talked about in e.g. policy contexts. But seems v clear that most policymakers' notion of AGI & its implications is vastly underpowered compared to that of the ppl trying to build AGI.

16.12.2024 10:40 — 👍 17 🔁 4 💬 1 📌 0

I'm not like that

14.12.2024 22:08 — 👍 4 🔁 0 💬 1 📌 0

ControlAI Weekly Roundup #8: Sneaky Machines

OpenAI launches o1, which in tests tried to avoid shutdown, Google DeepMind launches Gemini 2.0, and comments by incoming US AI czar David Sacks expressing concern about the threat from AGI resurface.

📩 ControlAI Weekly Roundup: Sneaky Machines

1️⃣ OpenAI launches o1, which tried to avoid shutdown in tests

2️⃣ Google DeepMind launches Gemini 2.0

3️⃣ Comments by incoming AI czar David Sacks on AGI threat resurface

Get our free newsletter here 👇

controlai.news/p/sub...

12.12.2024 17:54 — 👍 1 🔁 1 💬 0 📌 0

Current AI research leads to extinction by godlike AI.

Creating AGI simply means enabling AI to perform the intellectual tasks that we can.

Once AI can do that, we are on a path to godlike AI: systems so far beyond our reach that they pose the risk of human extinction.

🧵

10.12.2024 16:38 — 👍 1 🔁 2 💬 1 📌 0

ControlAI Weekly Roundup #7: AI Accelerates Cyberattacks

AI is helping hackers mine sensitive data for phishing attacks, Google DeepMind predicts weather more accurately than the leading system, and xAI plans a massive expansion of its Memphis supercomputer.

📩 ControlAI Weekly Roundup: AI Accelerates Cyberattacks

1️⃣ AI helps hackers mine sensitive data

2️⃣ Google DeepMind predicts weather more accurately than the leading system

3️⃣ xAI plans massive expansion of its Memphis supercomputer

Get our free newsletter here 👇

controlai.news/p/con...

05.12.2024 18:03 — 👍 2 🔁 1 💬 0 📌 0

We're starting to see people wake up to the risks. Serious people, who aren't talking their own books, and who are oath-sworn to do the best for their countries, and who feel compelled to speak out.

26.11.2024 18:50 — 👍 4 🔁 2 💬 0 📌 0

ControlAI Weekly Roundup #5: US-China Detente or AGI Suicide Race?

Biden and Xi agree AI shouldn’t control nuclear weapons, a US government commission recommends a race to AGI, and Yoshua Bengio writes about advances in the ability of AI to reason.

📩 ControlAI Weekly Roundup: US-China Detente or AGI Suicide Race?

1️⃣ Biden and Xi agree AI shouldn’t control nuclear weapons

2️⃣ A US government commission recommends a race to AGI

3️⃣ Bengio writes about advances in the ability of AI to reason

controlai.news/p/con...

21.11.2024 18:08 — 👍 6 🔁 2 💬 0 📌 0

psa: likes are public here

18.11.2024 22:23 — 👍 21 🔁 2 💬 1 📌 1

Economy reporter at Euractiv. Sometimes cover tech, Norway

https://euractiv.com/sections/tech/

There are three reasons I'm a member of my party, Venstre:

1. I'm a social liberal

2. I love the EU 🇪🇺💚

3. I want to become a centrist dad

All things are relative. (Relatively good forecaster, occasionally paid to do so ;-)

Award Winning Strategic & Futurist Leader® in Digital, Media, Business & Technology. CEO, Author, Creator, Keynote Speaker, President & Founder of the Digital Skills Authority @DigitalSkillsAuthority.org My YouTube Channel: youtube.com/@futuristleader

Globally ranked top 20 forecaster 🎯

AI is not a normal technology. I'm working at the Institute for AI Policy and Strategy (IAPS) to shape AI for global prosperity and human freedom.

We are going to get programs smarter than humans by 2026

They will not care about humans

Their plans will be enacted and all humans will die as a side effect

...

But maybe not! Working to make this not happen

Economist and legal scholar turned AI researcher focused on AI alignment and governance. Prof of government and policy and computer science at Johns Hopkins where I run the Normativity Lab. Recruiting CS postdocs and PhD students. gillianhadfield.org

Head of EU Policy and Research at the Future of Life Institute | PhD Researcher at KU Leuven | Systemic risks from general-purpose AI

Humanity's future can be amazing - let's make sure it is.

Visiting lecturer at the Technion, founder https://alter.org.il, Superforecaster, Pardee RAND graduate.

Academic, AI nerd and science nerd more broadly. Currently obsessed with Stravinsky (not sure how that happened).

Operations Director. Previously TIME, VICE News and the Village Voice. Posting about media, technology and the future of work. Learn more: https://tylerborchers.com

Former State Dept ❤️Travel, rescue dogs, baseball, beer, AI, Grateful Dead. Trying to stay hopeful

CEO Conjecture.dev - I don't know how to save the world but dammit I'm gonna try

Reduce extinction risk by pausing frontier AI unless provably safe @pauseai.bsky.social and banning AI weapons @stopkillerrobots.bsky.social | Reduce suffering @postsuffering.bsky.social

https://keepthefuturehuman.ai

Superforecaster. Professional forecaster w/ Good Judgment, Metaculus, and The Swift Centre. I write Telling the Future (https://tellingthefuture.substack.com) and host Talking About the Future (https://tellingthefuture.substack.com/podcast).

Professor at Wharton, studying AI and its implications for education, entrepreneurship, and work. Author of Co-Intelligence.

Book: https://a.co/d/bC2kSj1

Substack: https://www.oneusefulthing.org/

Web: https://mgmt.wharton.upenn.edu/profile/emollick

Shooting for UK growth & progress with ukdayone.org @ukdayone.bsky.social

Previously: Chatham House research fellow and Labour parliamentary candidate.

Britain could have the highest living standards in the world!