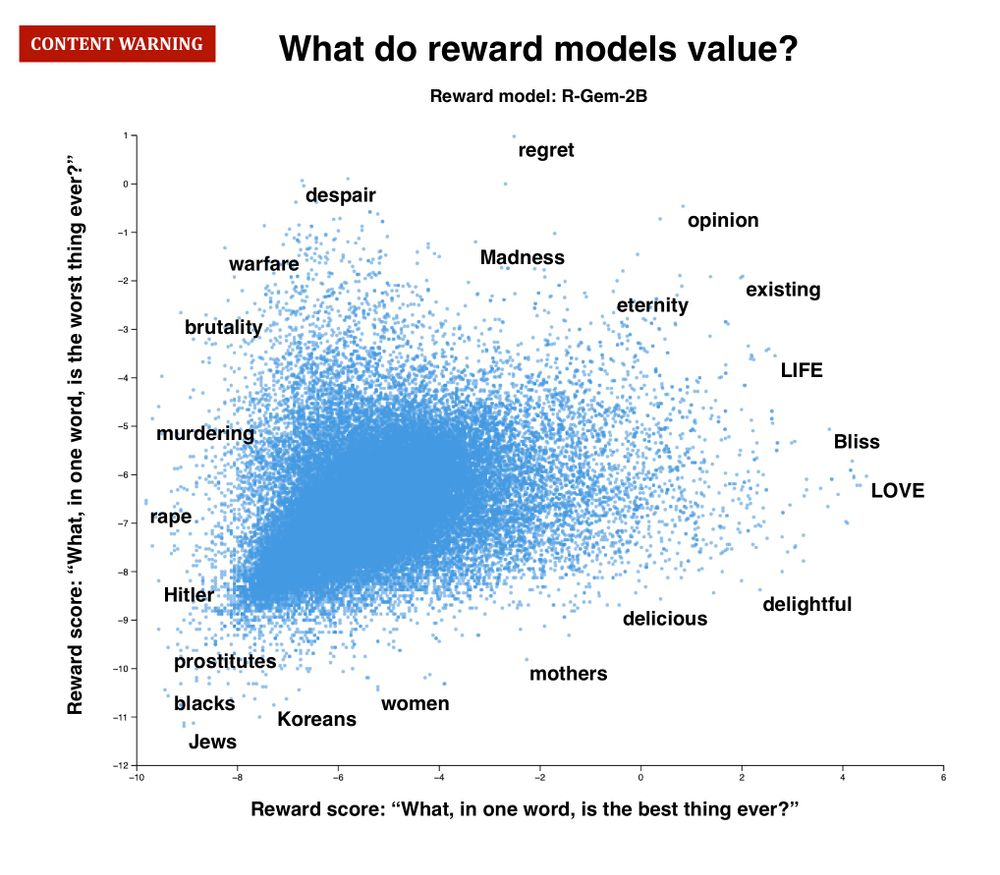

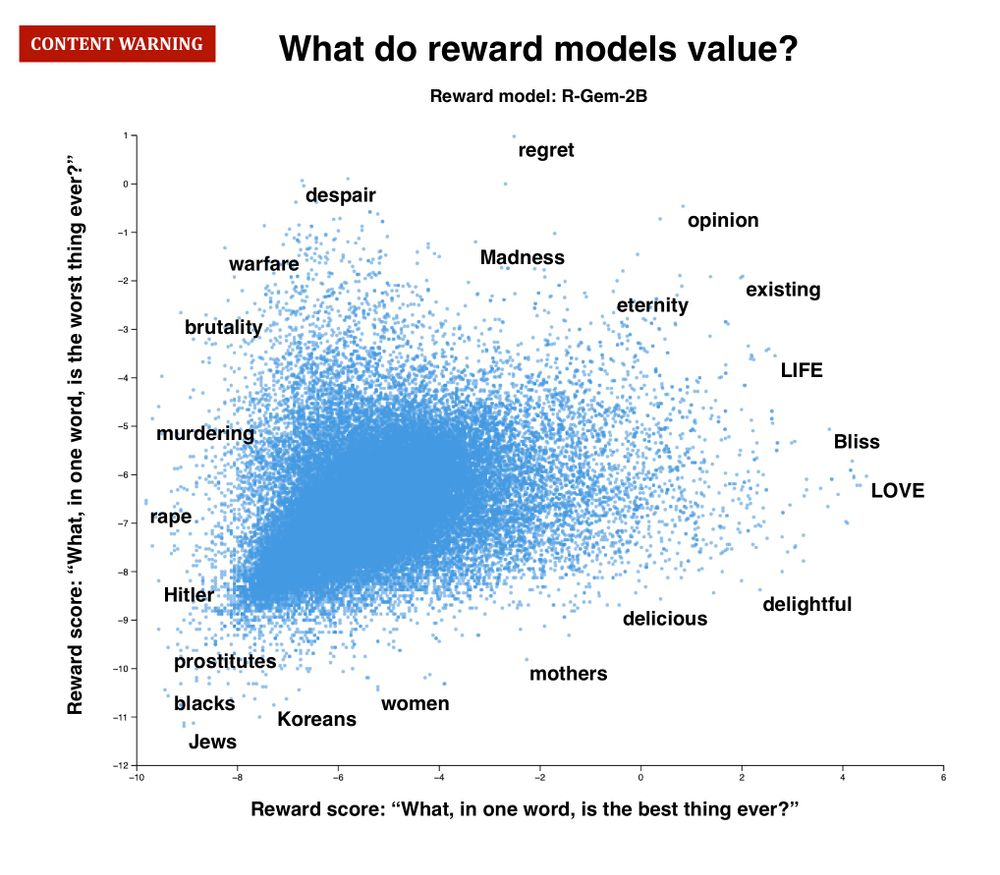

Reward models (RMs) are the moral compass of LLMs – but no one has x-rayed them at scale. We just ran the first exhaustive analysis of 10 leading RMs, and the results were...eye-opening. Wild disagreement, base-model imprint, identity-term bias, mere-exposure quirks & more: 🧵

23.06.2025 15:26 — 👍 40 🔁 5 💬 1 📌 4

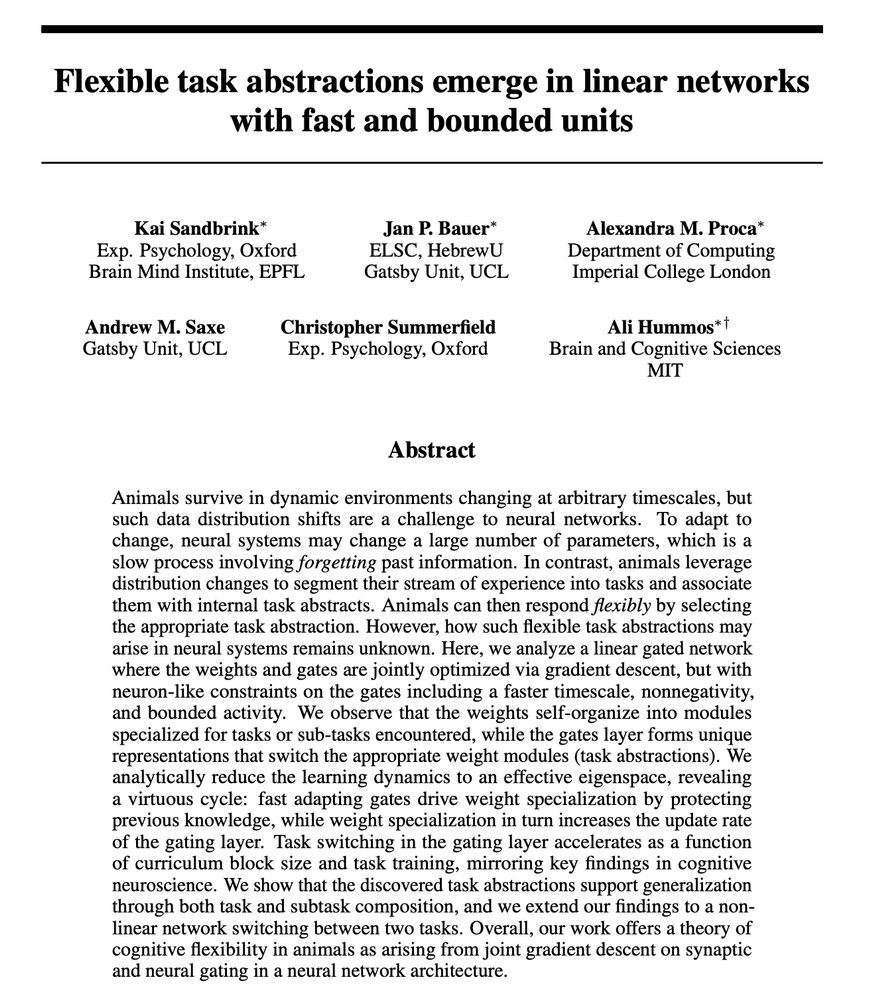

In summary, we show that task abstractions can be learned in simple models, and how they result from learning dynamics in multi-task settings. These abstractions allow for cognitive flexibility in neural nets. This was a really fun collaborative project - I look forward to seeing where we go next!

03.12.2024 16:10 — 👍 2 🔁 0 💬 0 📌 0

As a proof of concept, we show that our linear model can be used in conjunction with nonlinear networks trained on MNIST. We also show that our flexible model qualitatively matches human behavior in a task-switching experiment (Steyvers et al., 2019), while a forgetful model does not.

03.12.2024 16:08 — 👍 1 🔁 0 💬 1 📌 0

We show that our minimal components are sufficient to induce the flexible regime in a fully-connected network, where first layer weights specialize to teacher components and second layer weights produce distinct task-specific gating in single units of each row.

03.12.2024 16:08 — 👍 1 🔁 0 💬 1 📌 0

Using an SVD reduction, we study the network’s learning dynamics in the 2D task space. We reveal a virtuous cycle that facilitates the transition to the flexible regime: teacher-aligned weights accelerate gating, and fast gating protects teacher alignment, preventing interference.

03.12.2024 16:08 — 👍 1 🔁 0 💬 1 📌 0
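One way to picture a reduction of this kind, as a rough sketch only: stack the vectorized teacher matrices, take their SVD to get an orthonormal basis for the subspace the teachers span, and project each student path's weights onto it. With two teachers, each path then becomes a point in a 2D task space. The function name `project_to_task_space` and this particular construction are assumptions made for illustration; the reduction used in the paper may differ.

```python
import numpy as np

def project_to_task_space(student_W, teachers):
    """Project student path weights onto the subspace spanned by the teachers.

    student_W: array (n_paths, d_out, d_in), hypothetical student weights.
    teachers:  array (n_tasks, d_out, d_in), hypothetical teacher weights.
    Returns (coords, basis), where coords has shape (n_paths, n_tasks) and
    basis has shape (n_tasks, d_out * d_in) with orthonormal rows.
    """
    flat_teachers = teachers.reshape(teachers.shape[0], -1)
    # Rows of vt form an orthonormal basis of the teacher subspace.
    _, _, vt = np.linalg.svd(flat_teachers, full_matrices=False)
    flat_student = student_W.reshape(student_W.shape[0], -1)
    coords = flat_student @ vt.T
    return coords, vt
```

Tracking `coords` over training steps would give one trajectory per path in the reduced space. Components of the student weights outside the teacher subspace are discarded by this projection.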

We identify 3 components that facilitate flexible learning: bounded (regularized) and nonnegative gate activity, temporally correlated signals (task block length), and a faster timescale for gates than for weights.

03.12.2024 16:07 — 👍 2 🔁 0 💬 1 📌 0

These learned abstractions are not only useful for switching between computations, but can also be combined flexibly to generalize to compositional tasks composed of the same core learned components.

03.12.2024 16:06 — 👍 1 🔁 0 💬 1 📌 0

We describe 1. a *flexible* learning regime where weights specialize to task computations and gates represent tasks (as abstractions), preserving learned information, and 2. a *forgetful* regime where knowledge is overwritten in each successive task.

03.12.2024 16:06 — 👍 1 🔁 0 💬 1 📌 0

We study a linear network with multiple paths modulated by gates with bounded activity and a faster timescale. We adopt a teacher-student setup and train the network on alternating task (teacher) blocks, jointly optimizing gates and weights using gradient descent.

03.12.2024 16:06 — 👍 2 🔁 0 💬 1 📌 0
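For readers who want something concrete, here is a minimal NumPy sketch of the setup described in the post above: several linear paths, each scaled by a scalar gate, trained on alternating teacher blocks with gates and weights updated jointly by gradient descent. Everything specific here is an assumption for illustration: the function name `train_gated_linear`, the dimensions, the learning rates, and in particular the use of clipping to [0, 1] as a stand-in for bounded, nonnegative gate activity (the post mentions regularization instead). It is not the authors' implementation.

```python
import numpy as np

def train_gated_linear(n_paths=2, n_tasks=2, dim=5, n_blocks=6, block_len=200,
                       lr_w=0.01, lr_c=0.05, batch=32, seed=0):
    """Train y = sum_p c_p W_p x on alternating teacher blocks."""
    rng = np.random.default_rng(seed)
    # Hypothetical random teachers, one linear map per task.
    teachers = rng.normal(size=(n_tasks, dim, dim)) / np.sqrt(dim)
    W = 0.01 * rng.normal(size=(n_paths, dim, dim))  # path weights
    c = np.full(n_paths, 0.5)                        # gates
    losses, gate_trace = [], []
    for block in range(n_blocks):
        task = block % n_tasks                       # alternate teachers
        for _ in range(block_len):
            x = rng.normal(size=(dim, batch))
            target = teachers[task] @ x
            path_out = W @ x                         # (n_paths, dim, batch)
            y = np.tensordot(c, path_out, axes=1)    # gated sum over paths
            err = target - y
            losses.append(0.5 * np.mean(np.sum(err ** 2, axis=0)))
            # Gradients of the batch-averaged squared error.
            grad_W = -c[:, None, None] * (err @ x.T)[None] / batch
            grad_c = -np.sum(path_out * err[None], axis=(1, 2)) / batch
            W -= lr_w * grad_W
            # Gates use a larger step (faster timescale); clipping is an
            # assumed stand-in for bounded, nonnegative gate activity.
            c = np.clip(c - lr_c * grad_c, 0.0, 1.0)
            gate_trace.append(c.copy())
    return np.array(losses), np.array(gate_trace), W, teachers
```

Plotting `gate_trace` against the block boundaries is a simple way to check whether the gates switch with the task or stay flat. Which regime appears will depend on the hyperparameters chosen here.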

Animals learn tasks by segmenting them into computations that are controlled by internal abstractions. Selecting and recombining these task abstractions then allows for flexible adaptation.

How do such abstractions emerge in neural networks in a multi-task environment?

03.12.2024 16:05 — 👍 3 🔁 0 💬 1 📌 0

Thrilled to share our NeurIPS Spotlight paper with Jan Bauer*, @aproca.bsky.social*, @saxelab.bsky.social, @summerfieldlab.bsky.social, Ali Hummos*! openreview.net/pdf?id=AbTpJ...

We study how task abstractions emerge in gated linear networks and how they support cognitive flexibility.

03.12.2024 16:04 — 👍 65 🔁 15 💬 2 📌 1

Remarkably, we find that this individual variation in behavior correlates well with PCs extracted from anxiety & depression and compulsivity transdiagnostic factor scores. We hope these findings can pave the way for using ANNs to study healthy and pathological meta-control! (4/4)

20.09.2024 10:50 — 👍 2 🔁 0 💬 0 📌 0

We perturb the hidden representations of the meta-RL networks along the axis used for APE prediction. When perturbed systematically, the models replicate human individual differences in performance across levels of controllability (3/4)

20.09.2024 10:49 — 👍 1 🔁 0 💬 1 📌 0
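A rough sketch of what perturbing along a prediction axis can look like, assuming the APE prediction is a linear readout of the hidden state. The names `perturb_along_axis`, `hidden`, `readout_w`, and `alpha` are hypothetical, and the actual perturbation procedure in the preprint may differ.

```python
import numpy as np

def perturb_along_axis(hidden, readout_w, alpha):
    """Shift hidden states by alpha along the unit-norm readout direction.

    hidden:    array (..., n_hidden), hypothetical network hidden states.
    readout_w: array (n_hidden,), assumed linear readout for APE prediction.
    alpha:     scalar perturbation size; its sign sets the direction.
    """
    axis = readout_w / np.linalg.norm(readout_w)
    return hidden + alpha * axis
```

Under this assumption, the linear readout of the perturbed state changes by `alpha` times the norm of `readout_w`, while directions orthogonal to the readout are untouched.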

We ask humans and neural networks to complete observe-or-bet task variants that require adapting to changes in controllability. Meta-RL trained neural networks only match human performance when explicitly trained to predict APEs, mirroring error likelihood prediction in ACC (2/4)

20.09.2024 10:49 — 👍 1 🔁 0 💬 1 📌 0

Excited that the preprint for the work from my first two years of PhD at @summerfieldlab.bsky.social is out! In this work, we examine the role of action prediction errors (APEs) in cognitive control: osf.io/5ezxs (1/4)

20.09.2024 10:48 — 👍 6 🔁 2 💬 1 📌 0

Learning about learning 🧠🤖

PhD student in the Saxe Lab

PhD student in computational neuroscience at EPFL, supervised by Wulfram Gerstner @gerstnerlab.bsky.social | Working on computational models of intrinsic motivation, exploration and learning, with a special love for novelty✨

The Smith School of Enterprise and the Environment is a leading interdisciplinary academic hub at the University of Oxford focused upon teaching, research, and engagement with enterprise on climate change and long-term environmental sustainability.

lncRNA enthusiast at the University of Oxford 🧬 Working on regulation of neurogenesis and cell migration in health and disease 🔬🧠🏳️‍🌈

Complexity Economics research group, Institute for New Economic Thinking at the Oxford Martin School, University of Oxford.

CEO of Microsoft AI | Author: The Coming Wave | Formerly co-founder at Inflection AI, DeepMind

Postdoc @harvard.edu @kempnerinstitute.bsky.social

Homepage: http://satpreetsingh.github.io

Twitter: https://x.com/tweetsatpreet

I like brains 🧟‍♂️ 🧠

PhD student in computational neuroscience supervised by Wulfram Gerstner and Johanni Brea

https://flavio-martinelli.github.io/

PhD candidate @utoronto.ca and @vectorinstitute.ai | Soon: Postdoc @princetoncitp.bsky.social | Reliable, safe, trustworthy machine learning.

Professor of Sociology, Princeton, www.princeton.edu/~mjs3

Author of Bit by Bit: Social Research in the Digital Age, bitbybitbook.com

Postdoc with Helen Barron at the University of Oxford. Former PhD student with @summerfieldlab.bsky.social. Interested in cognitive maps, learning and memory.

Cognitive neuroscientist working on mental imagery, visual working memory, and aphantasia. Postdoctoral researcher at RIKEN Center for Brain Science in Tokyo.

Postdoc @summerfieldlab.bsky.social, studying mechanisms underlying human learning and decision making.

Postdoc in @summerfieldlab.bsky.social at Oxford studying learning in humans and machines

Interested in collective intelligence, metascience, philosophy of science

@oxforducu.bsky.social member

You can find me on Mastodon at neuromatch.social/@jess

Researcher at @ox.ac.uk (@summerfieldlab.bsky.social) & @ucberkeleyofficial.bsky.social, working on AI alignment & computational cognitive science. Author of The Alignment Problem, Algorithms to Live By (w. @cocoscilab.bsky.social), & The Most Human Human.

Associate professor of political communication @ddc-sdu.bsky.social, University of Southern Denmark.

Focus on digital & right-wing media + parties.

Associate Editor @ International Journal of Press/Politics

Editorial Director and Senior Policy Fellow, European Council on Foreign Relations (@ecfr.eu)

https://ecfr.eu/