Has anyone successfully done RL post-training of gpt-oss with meaningful performance gains?

What libraries even support it? I guess technically TRL/axolotl, maybe Unsloth... but there are no good examples of doing it...

04.09.2025 22:48 — 👍 7 🔁 1 💬 2 📌 1

Check out our website: sophontai.com

Read our manifesto/announcement: tanishq.ai/blog/sophont

If you're interested in building & collaborating in this space, whether you're in genAI or medicine/pharma/life sciences, feel free to reach out at: contact@sophontai.com

01.04.2025 20:49 — 👍 5 🔁 1 💬 0 📌 0

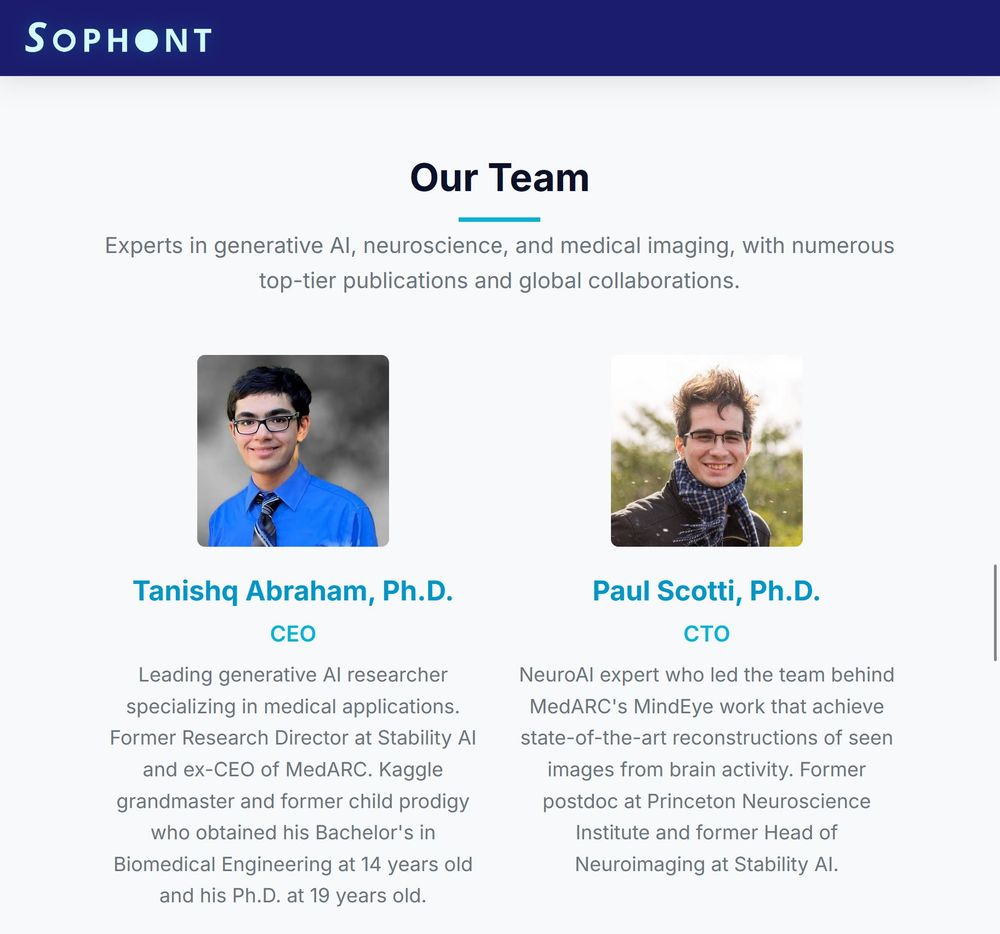

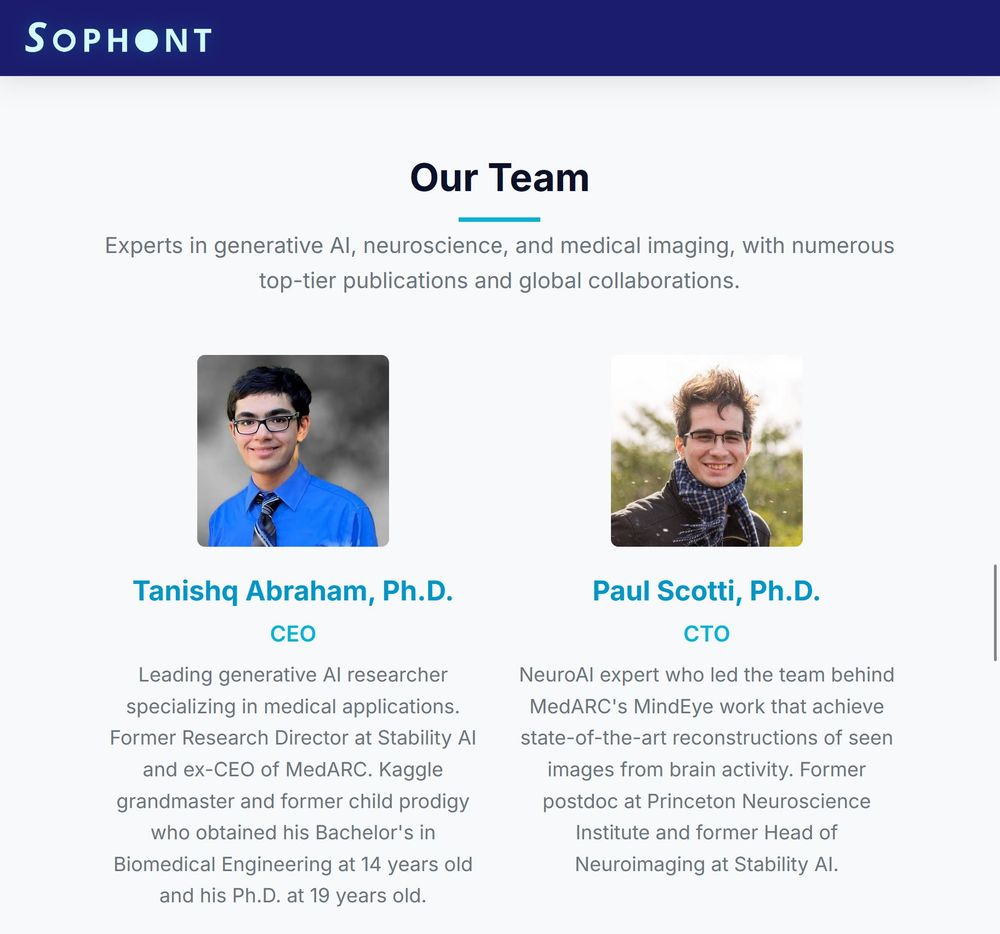

researcher at Princeton University, and served as Head of NeuroAI at Stability AI. He led the team behind the MindEye publications, which achieved state-of-the-art reconstructions of seen images from brain activity.

01.04.2025 20:49 — 👍 3 🔁 0 💬 1 📌 0

With over five years of experience applying generative AI to medicine, I bring a wealth of expertise, having previously served as the Research Director at Stability AI and CEO of MedARC. My co-founder, Paul Scotti, has a decade of experience in computational neuroscience, was a postdoctoral ...

01.04.2025 20:49 — 👍 3 🔁 0 💬 1 📌 0

We are strong believers in open, transparent research, based on our proven track records in medical AI research and building open science online communities. We hope to continue this science-in-the-open approach to research at Sophont to build towards the future of healthcare.

01.04.2025 20:49 — 👍 2 🔁 0 💬 1 📌 0

Our vision is to merge highly performant, specialized medical foundation models into a single holistic, highly flexible, medical-specific multimodal foundation model. We believe that this should be done in the open, as open source, for maximum transparency and flexibility.

01.04.2025 20:49 — 👍 2 🔁 0 💬 1 📌 0

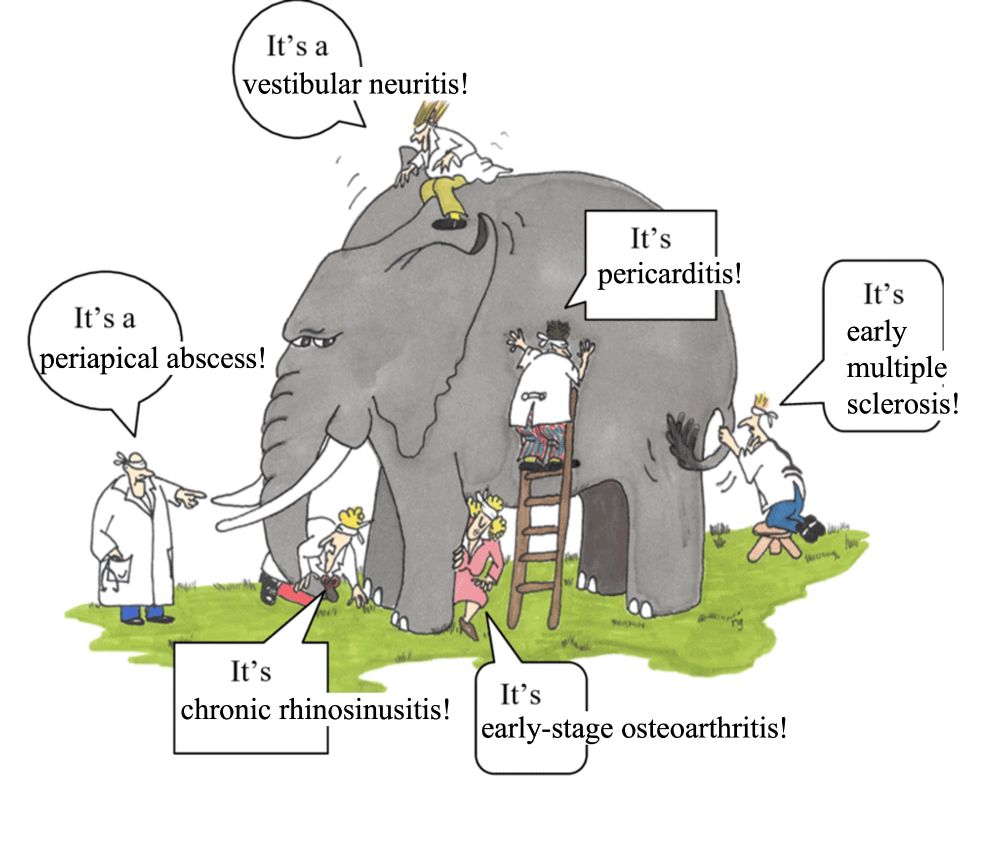

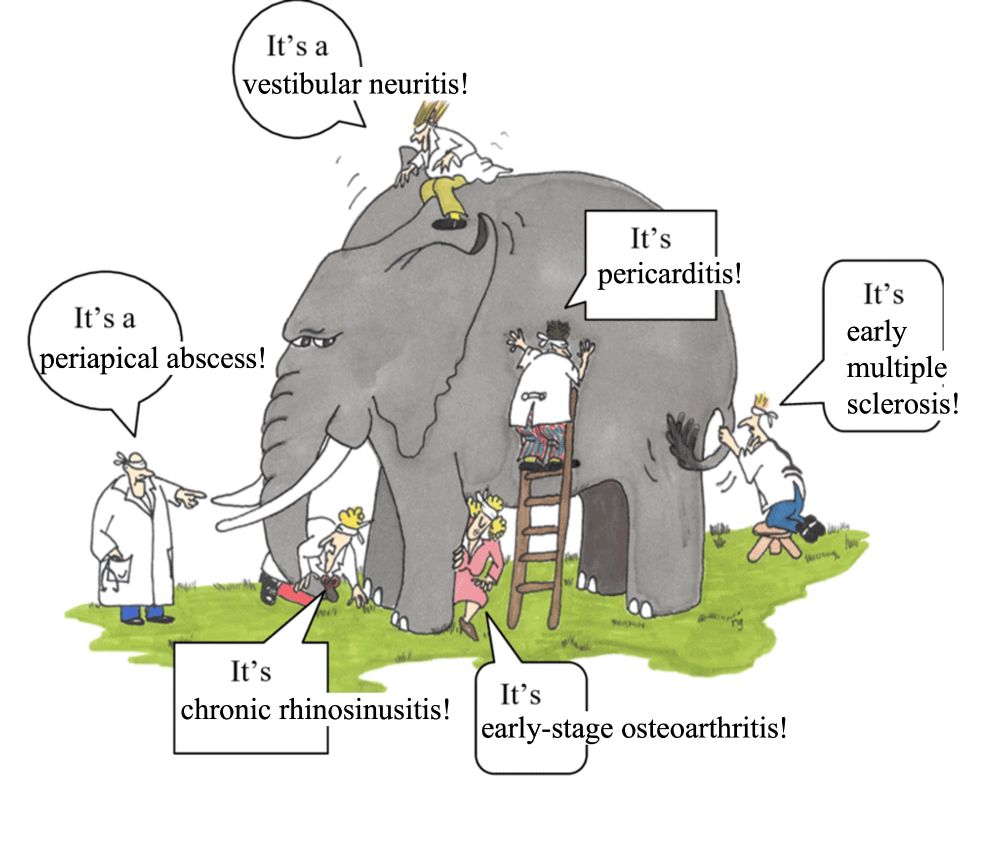

These approaches succumb to the parable of the blind men and the elephant: The blind men are unimodal medical models and the patient is the elephant.

01.04.2025 20:49 — 👍 2 🔁 0 💬 1 📌 0

AI is clearly needed to enhance doctors’ ability to provide the best care.

However, currently deployed medical AI models are inflexible and rigid, suited only to narrow tasks that focus on individual data modalities.

01.04.2025 20:49 — 👍 2 🔁 0 💬 1 📌 0

I have EXCITING news:

I've started a company!

Introducing Sophont

We’re building open multimodal foundation models for the future of healthcare. We need a DeepSeek for medical AI, and @sophontai.bsky.social will be that company!

Check out our website & blog post for more info (link below)

01.04.2025 20:49 — 👍 30 🔁 2 💬 1 📌 0

Btw, I posted this 2 weeks ago on Twitter but forgot to post here, so doing it now. Twitter is probably going to always be the fastest place to get updates from me unfortunately 😅

17.03.2025 08:08 — 👍 4 🔁 0 💬 1 📌 0

NEW BLOG POST: LLMs in medicine: evaluations, advances, and the future

www.tanishq.ai/blog/posts/l...

A short blog post discussing how LLMs are evaluated for medical capabilities and what the future holds for LLMs in medicine (spoiler: it's reasoning!)

17.03.2025 08:08 — 👍 21 🔁 2 💬 1 📌 0

Yeah, but compute scaling can mean lots of things including synthetic data for example

20.02.2025 11:37 — 👍 0 🔁 0 💬 0 📌 0

Artificial superintelligence

20.02.2025 11:37 — 👍 1 🔁 0 💬 1 📌 0

New blog post coming tomorrow on medical LLMs...

19.02.2025 09:22 — 👍 7 🔁 0 💬 0 📌 0

I restarted my blog a few weeks ago. The 1st post was:

Debunking DeepSeek Delusions

I discussed 5 main myths that I saw spreading online back during the DeepSeek hype.

It may be a little less relevant now, but hopefully still interesting to folks.

Check it out → www.tanishq.ai/blog/posts/d...

19.02.2025 09:22 — 👍 22 🔁 1 💬 4 📌 0

Are folks still here? 😅

19.02.2025 08:39 — 👍 46 🔁 0 💬 11 📌 0

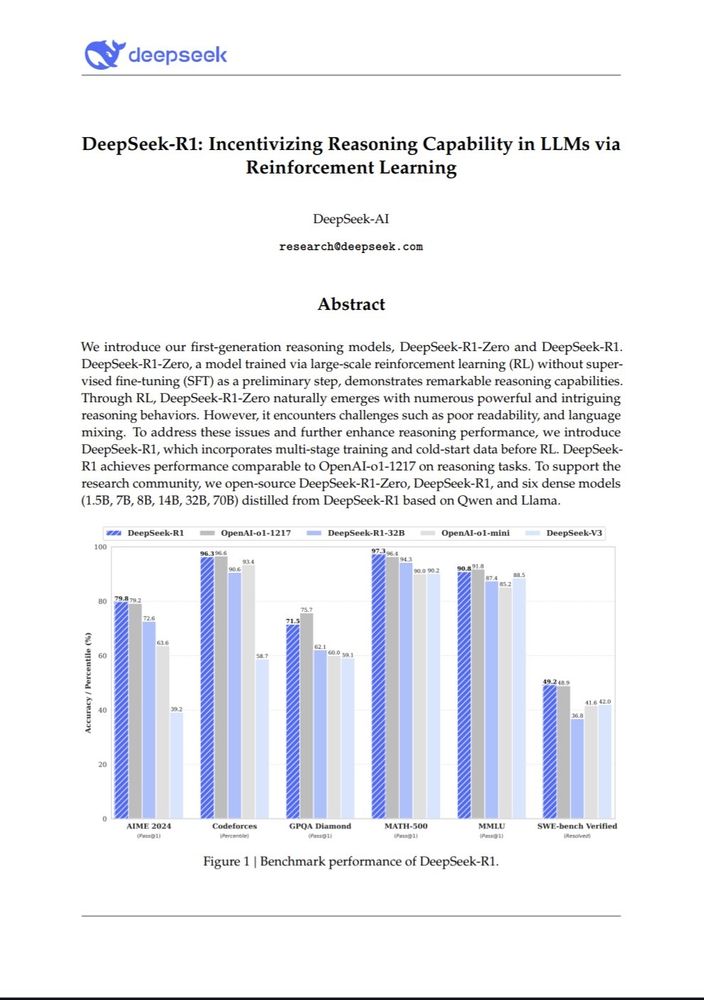

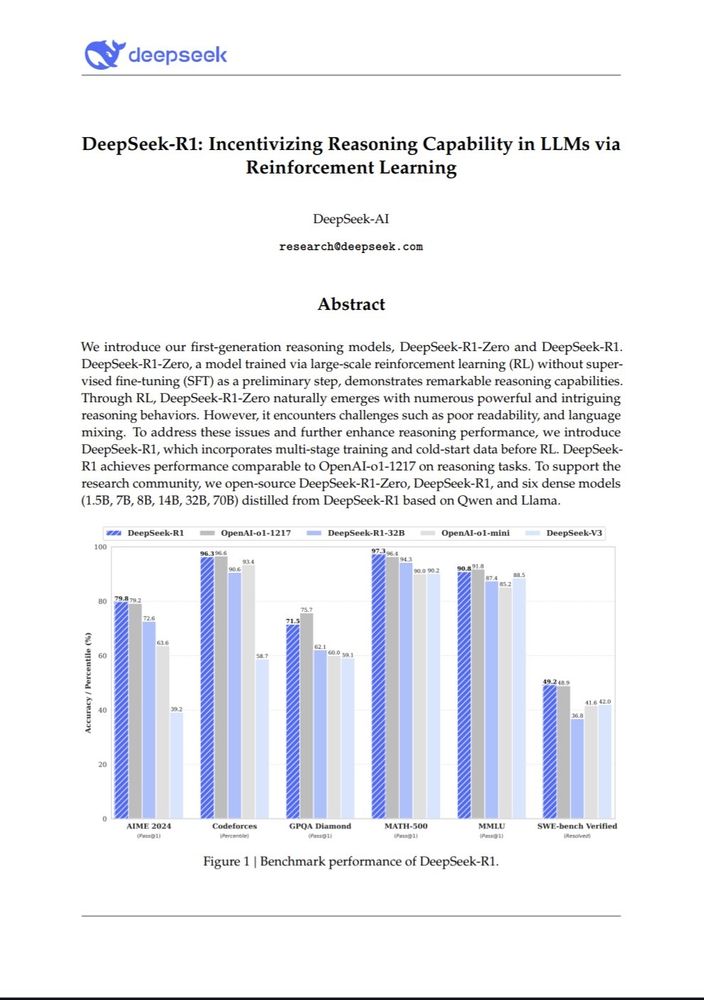

github.com/deepseek-ai/...

20.01.2025 16:15 — 👍 7 🔁 0 💬 0 📌 0

Okay, so this is the most important AI paper of the year so far

20.01.2025 16:14 — 👍 23 🔁 1 💬 2 📌 0

Anthropic, please add a higher tier plan for unlimited messages 😭🙏

11.01.2025 06:16 — 👍 16 🔁 0 💬 4 📌 0

Decentralized Diffusion Models

UC Berkeley and Luma AI introduce Decentralized Diffusion Models, a way to train diffusion models on decentralized compute with no communication between nodes.

abs: arxiv.org/abs/2501.05450

project page: decentralizeddiffusion.github.io

10.01.2025 10:15 — 👍 20 🔁 2 💬 0 📌 0

The GAN is dead; long live the GAN! A Modern Baseline GAN

This is a very interesting paper, exploring making GANs simpler and more performant.

abs: arxiv.org/abs/2501.05441

code: github.com/brownvc/R3GAN

10.01.2025 10:15 — 👍 13 🔁 2 💬 0 📌 0

Flow matching is closely related to diffusion and rectified flows, and Gaussian flow matching is equivalent to denoising diffusion.

10.12.2024 08:35 — 👍 1 🔁 0 💬 0 📌 0

Inventors of flow matching have released a comprehensive guide going over the math & code of flow matching!

Also covers variants like non-Euclidean & discrete flow matching.

A PyTorch library is also released with this guide!

This looks like a very good read! 🔥

arxiv: arxiv.org/abs/2412.06264

10.12.2024 08:35 — 👍 109 🔁 26 💬 1 📌 1

Refusal Tokens: A Simple Way to Calibrate Refusals in Large Language Models

"We introduce a simple strategy that makes refusal behavior controllable at test-time without retraining: the refusal token."

arxiv.org/abs/2412.06748

10.12.2024 08:04 — 👍 6 🔁 1 💬 0 📌 0

Can foundation models actively gather information in interactive environments to test hypotheses?

"Our experiments with Gemini 1.5 reveal significant exploratory capabilities"

arxiv.org/abs/2412.06438

10.12.2024 08:03 — 👍 10 🔁 1 💬 0 📌 0

Training Large Language Models to Reason in a Continuous Latent Space

Introduces a new paradigm for LLM reasoning called Chain of Continuous Thought (COCONUT).

Instead of decoding a token, it directly feeds the last hidden state (a continuous thought) back in as the input embedding for the next token.

arxiv.org/abs/2412.06769

10.12.2024 08:02 — 👍 53 🔁 8 💬 2 📌 2
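To make the "feed the last hidden state back as the next input embedding" idea from the COCONUT post concrete, here is a minimal toy sketch of the data flow only. Everything in it is an assumption for illustration: the tiny mean-pool-plus-`tanh` function stands in for a real transformer, the weights are random and untrained, and the names `last_hidden_state`, `next_token`, and `generate` are hypothetical rather than taken from the paper or any library.

```python
import math
import random

D = 4       # hidden size equals embedding size, so a hidden state can be reused as an input
VOCAB = 6   # toy vocabulary size

rng = random.Random(0)
# Random, untrained toy parameters (assumption: a real model would learn these).
EMBED = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(VOCAB)]
W = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(D)]


def last_hidden_state(inputs):
    """Toy stand-in for a transformer: mean-pool the input vectors, then tanh(W @ pooled)."""
    pooled = [sum(vec[i] for vec in inputs) / len(inputs) for i in range(D)]
    return [math.tanh(sum(W[r][c] * pooled[c] for c in range(D))) for r in range(D)]


def next_token(hidden):
    """Greedy decoding: pick the token whose embedding scores highest against the hidden state."""
    scores = [sum(h * e for h, e in zip(hidden, emb)) for emb in EMBED]
    return max(range(VOCAB), key=scores.__getitem__)


def generate(prompt_ids, n_latent_steps, n_token_steps):
    """Run a latent 'continuous thought' phase, then ordinary token decoding."""
    inputs = [EMBED[t] for t in prompt_ids]

    # Latent phase: append the hidden state itself as the next input,
    # skipping the usual hidden -> token -> embedding round trip.
    for _ in range(n_latent_steps):
        inputs.append(last_hidden_state(inputs))

    # Language phase: standard decoding, where each chosen token is re-embedded.
    tokens = []
    for _ in range(n_token_steps):
        tok = next_token(last_hidden_state(inputs))
        tokens.append(tok)
        inputs.append(EMBED[tok])
    return tokens, inputs


if __name__ == "__main__":
    tokens, inputs = generate(prompt_ids=[0, 1], n_latent_steps=3, n_token_steps=2)
    print("decoded tokens:", tokens)
    print("sequence length (prompt + latent + decoded):", len(inputs))
```

The only difference between the two loops is what gets appended to the input sequence: a raw hidden vector in the latent phase versus a looked-up token embedding in the language phase. This sketch says nothing about how such a model is trained.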
