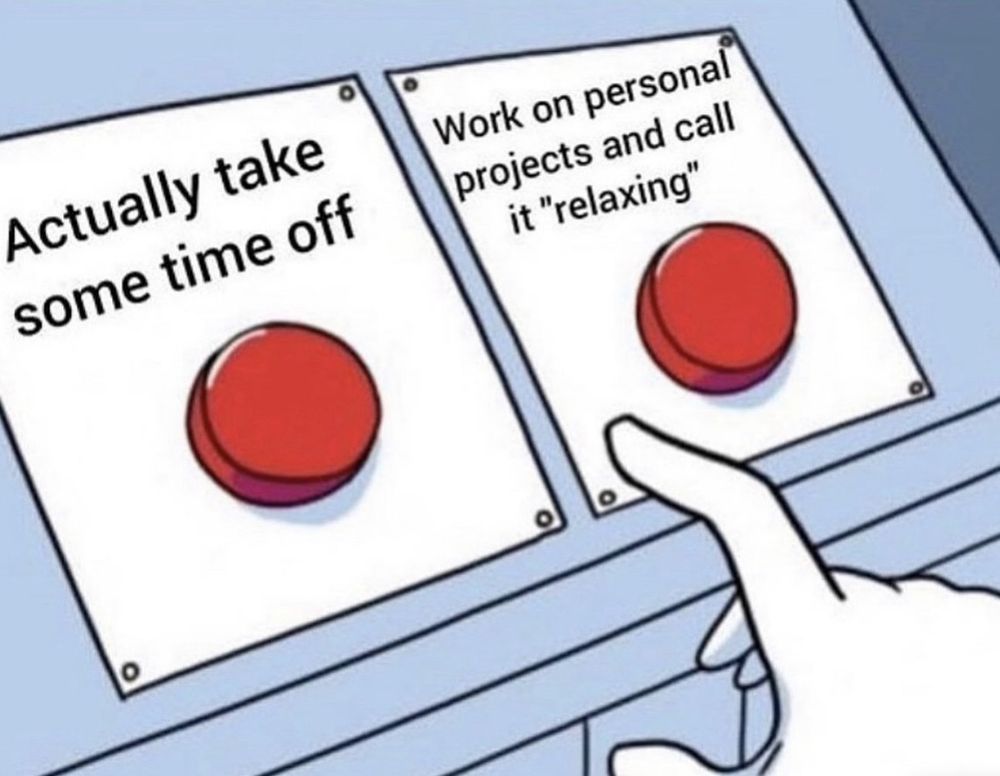

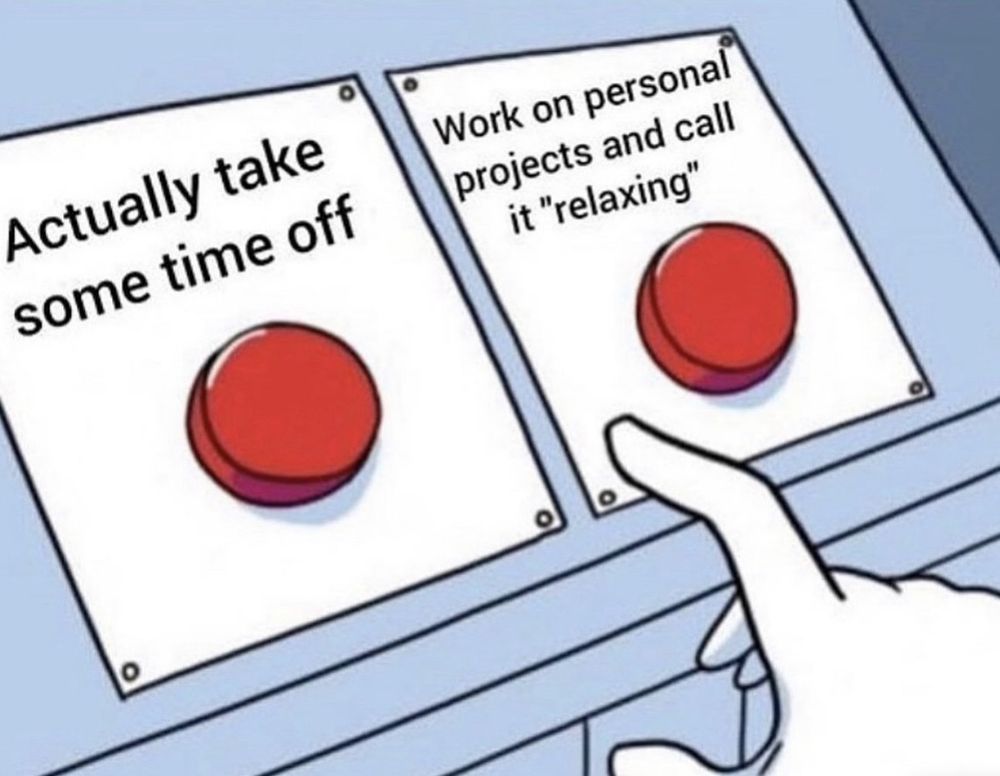

deciding between "actually taking some time off" and "work on personal projects and call it relaxing"

hmm i feel attacked

24.12.2024 20:27 — 👍 96 🔁 12 💬 5 📌 0

@ericmtztx.bsky.social

UTRGV School of Medicine / UTHealth RGV

Asst Dir Business Intelligence & Enterprise Engineering

Adjunct Lecturer @ UTRGV CS

Jiu-Jitsu Black Belt

Your 'AI Engineer' can't deploy their own model? Your 'Data Engineer' can't write a web app? Stop with narrow job titles that create silos. In healthcare tech, we need full-stack problem solvers who can ship end-to-end. One capable generalist > five specialists.

30.11.2024 19:27 — 👍 5 🔁 1 💬 0 📌 0

The healthcare system is rarely personalised. In this HBR article, the authors predict that eventually healthcare will be as personalised as online shopping or banking. See poll below to weigh in.

#healthcare #personalisation #pt

hbr.org/2024/11/why-...

🧵 Following our healthcare AI journey? I'll share:

- Implementation & design details

- Evaluation methodology and dataset development

- AI engineering process

- Data platform infrastructure / HIPAA compliance

Learn how we're making healthcare more accessible in the RGV, one search at a time. (7/7)

Step 5: Address Bias Head-On

We're expanding our evaluation dataset to detect unwanted outcomes that could impact patients, providers, or departments. No system is perfect, but measuring bias lets us iterate and improve responsibly. (6/7)

Step 4: Systematic Development

- Build comprehensive evaluation dataset

- Perfect basic provider matching

- Enable structured query rewriting with LLMs

Each step verified through testing to ensure reliable results. (5/7)

Step 3: Measure What Matters

Key metrics we're tracking:

- Query-to-(provider/specialty/department) match accuracy

- Cross-lingual search performance

- Response time (targeting <300ms)

These guide our improvements where they matter most and can be sliced across descriptors to measure bias. (4/7)
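As a rough illustration of slicing a metric across a descriptor, the sketch below computes match accuracy per group and the share of queries answered within the 300 ms target. The record fields (`language`, `matched`, `latency_ms`) and the sample values are assumptions made up for this example, not the team's actual schema or results.

```python
from collections import defaultdict

def sliced_accuracy(records, slice_key):
    """Match accuracy per value of `slice_key` (e.g. query language)."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        group = record[slice_key]
        totals[group] += 1
        correct[group] += int(record["matched"])
    return {group: correct[group] / totals[group] for group in totals}

def share_within_budget(records, budget_ms=300.0):
    """Fraction of queries answered faster than the latency budget."""
    return sum(r["latency_ms"] < budget_ms for r in records) / len(records)

# Hypothetical evaluation records, invented for illustration only.
records = [
    {"language": "en", "matched": True, "latency_ms": 120.0},
    {"language": "en", "matched": True, "latency_ms": 250.0},
    {"language": "es", "matched": True, "latency_ms": 310.0},
    {"language": "es", "matched": False, "latency_ms": 280.0},
]

print(sliced_accuracy(records, "language"))
print(share_within_budget(records))
```

The same `sliced_accuracy` call works for any descriptor stored on the record, which is what makes a single evaluation set reusable for bias checks.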

Step 2: Fast Iteration Cycles

We built an evaluation pipeline measuring precision and other key metrics. This enables rapid testing of different embedding models, scoring methods, and chunking strategies - focusing on getting provider retrieval right before adding complexity. (3/7)
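A minimal sketch of what such a comparison could look like, assuming precision@k as the metric and two hypothetical retrieval configurations. The function names, provider IDs, and results are invented for illustration and do not describe the actual pipeline.

```python
from statistics import mean

def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved provider IDs that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for provider_id in retrieved[:k] if provider_id in relevant)
    return hits / k

def evaluate(run, eval_set, k):
    """Mean precision@k over every query in the evaluation set."""
    return mean(precision_at_k(run[query], relevant, k)
                for query, relevant in eval_set.items())

# Hypothetical ground truth: query -> set of acceptable provider IDs.
eval_set = {
    "my toe hurts": {"prov_12", "prov_40"},
    "doctor for diabetes control": {"prov_07"},
}

# Hypothetical ranked outputs from two configurations
# (e.g. different embedding models or chunking strategies).
run_a = {
    "my toe hurts": ["prov_12", "prov_99"],
    "doctor for diabetes control": ["prov_07", "prov_01"],
}
run_b = {
    "my toe hurts": ["prov_12", "prov_40"],
    "doctor for diabetes control": ["prov_03", "prov_07"],
}

for name, run in [("config A", run_a), ("config B", run_b)]:
    print(name, evaluate(run, eval_set, k=2))
```

Because each configuration is scored against the same fixed evaluation set, swapping an embedding model or scoring method becomes a one-line change followed by a rerun.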

Step 1: Real Data, Synthetic Queries

We extract actual patient-provider matches from our EHR, then use LLMs to generate natural search queries. This creates a robust test dataset that reflects real patient needs - from "my toe hurts" to "doctor for diabetes control" (2/7)
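One way to picture this step, as a sketch only: each known match becomes a labeled example whose query text comes from a language model. The field names, the prompt wording, and the `fake_llm` stand-in are all assumptions for illustration. A real pipeline would call an actual LLM and would need to handle PHI appropriately.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class EvalExample:
    query: str        # synthetic, patient-style search text
    provider_id: str  # ground-truth provider from the real match
    specialty: str

def build_eval_set(matches, generate_query: Callable[[str], str]):
    """Turn real matches into labeled examples with synthetic queries."""
    examples = []
    for match in matches:
        prompt = ("Write a short search query a patient might type for: "
                  + match["visit_reason"])
        examples.append(EvalExample(
            query=generate_query(prompt),
            provider_id=match["provider_id"],
            specialty=match["specialty"],
        ))
    return examples

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: echoes the visit reason in lowercase."""
    return prompt.split(": ", 1)[1].lower()

# Made-up, de-identified rows used only to exercise the function.
matches = [
    {"visit_reason": "Ingrown toenail", "provider_id": "prov_12", "specialty": "Podiatry"},
    {"visit_reason": "Type 2 diabetes follow-up", "provider_id": "prov_07", "specialty": "Endocrinology"},
]

for example in build_eval_set(matches, fake_llm):
    print(example)
```

Passing the generator in as a function keeps the dataset builder testable without network access.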

We're building an AI-powered search engine at UTHealth RGV that connects patients with the right healthcare providers. Our focus: making it easier for our community to find and access the care they need. Here's how we're building responsibly: (1/7)

28.11.2024 18:06 — 👍 1 🔁 0 💬 1 📌 0

It’s almost a game for people to race to take the latest open weight model and Med* it. But anyone who has tried them can tell you that they don’t seem very good and their performance on anything real world is still far below state of the art closed source models.

27.11.2024 05:31 — 👍 1 🔁 0 💬 0 📌 0

I do think small task-oriented models trained on real-world data could be very useful (speed, cost, task performance). But I'm skeptical that throwing PubMed articles and things of that sort at a model will improve performance on real-world clinical tasks, especially without significant curation and synthesis.

27.11.2024 05:28 — 👍 1 🔁 0 💬 0 📌 0

Great paper. Really skeptical of the fine-tuning techniques and training data being used in many of these Med* models. Not much effort seems to be going into building legit high quality datasets for clinical tasks. I yawn at yet another Med* model eval’d on low quality QA datasets.

27.11.2024 05:22 — 👍 1 🔁 0 💬 1 📌 0

Assuming biased data means biased models is lazy.

Measure bias by quantifying disparities in your model’s behavior.

Here’s how:

1. Define your AI's tasks and metrics

2. Measure performance across critical subgroups

3. Iterate based on real results

Act like a scientist—test, measure, repeat.
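A small sketch of the three steps, assuming a binary success metric and a simple best-minus-worst gap as the disparity measure. The subgroup labels and outcomes are invented, and other disparity measures would be equally reasonable.

```python
def subgroup_rates(outcomes):
    """Success rate per subgroup from (subgroup, success) pairs."""
    counts = {}
    for group, success in outcomes:
        total, wins = counts.get(group, (0, 0))
        counts[group] = (total + 1, wins + int(success))
    return {group: wins / total for group, (total, wins) in counts.items()}

def disparity_gap(rates):
    """Gap between best and worst subgroup, plus the worst subgroup's name."""
    worst = min(rates, key=rates.get)
    best = max(rates, key=rates.get)
    return rates[best] - rates[worst], worst

# Hypothetical outcomes: did the model succeed on the task for this case?
outcomes = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False),
]

rates = subgroup_rates(outcomes)
gap, worst = disparity_gap(rates)
print(rates, gap, worst)
```

Tracking the gap across iterations shows whether a change actually narrowed the disparity, rather than assuming it from the training data.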

I’d be curious to see if this phenomenon occurs with 1) larger variants of the same model teaching smaller models, and 2) prompting used to curate data of higher quality than the original. It would be interesting to see how long it takes for collapse to outweigh the benefits.

23.11.2024 17:27 — 👍 1 🔁 0 💬 0 📌 0

Neat RAG library we developed for our in-house Ruby on Rails applications at UTRGV School of Medicine. We’ll be using this to power our upcoming “Find a Doctor” tool. No dependencies other than pgvector and Azure OpenAI.

23.11.2024 07:35 — 👍 5 🔁 0 💬 0 📌 0
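For readers unfamiliar with the retrieval step in RAG, here is a generic sketch of ranking stored chunks by cosine similarity to a query embedding. It is not the library's code or API: the toy two-dimensional vectors and chunk IDs are invented, and in a pgvector setup this ranking would typically happen inside Postgres (pgvector exposes a cosine distance operator, `<=>`) on embeddings produced by an embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, chunks, k=2):
    """IDs of the k chunks most similar to the query embedding."""
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vec, chunk["embedding"]),
        reverse=True,
    )
    return [chunk["id"] for chunk in ranked[:k]]

# Toy embeddings, invented for illustration. Real ones have many dimensions.
chunks = [
    {"id": "cardiology", "embedding": [1.0, 0.0]},
    {"id": "podiatry", "embedding": [0.0, 1.0]},
    {"id": "general", "embedding": [0.9, 0.1]},
]

print(top_k([1.0, 0.0], chunks, k=2))
```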