What’s a multiverse good for anyway?

Julia M. Rohrer, Jessica Hullman, and Andrew Gelman

Multiverse analysis has become a fairly popular approach, as indicated by the present special issue on the matter. Here, we take one step back and ask why one would conduct a multiverse analysis in the first place. We discuss various ways in which a multiverse may be employed – as a tool for reflection and critique, as a persuasive tool, as a serious inferential tool – as well as potential problems that arise depending on the specific purpose. For example, it fails as a persuasive tool when researchers disagree about which variations should be included in the analysis, and it fails as a serious inferential tool when the included analyses do not target a coherent estimand. Then, we take yet another step back and ask what the multiverse discourse has been good for and whether any broader lessons can be drawn. Ultimately, we conclude that the multiverse does remain a valuable tool; however, we urge against taking it too seriously.

New preprint! So, what's a multiverse analysis good for anyway?

With @jessicahullman.bsky.social and @statmodeling.bsky.social

juliarohrer.com/wp-content/u...

04.02.2026 10:24
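The abstract's warning that a multiverse "fails as a serious inferential tool when the included analyses do not target a coherent estimand" can be made concrete with a toy example. The sketch below is only an illustration under assumptions that do not come from the paper: a made-up data-generating process where a covariate `z` confounds the effect of `x` on `y` (true effect 0.3), and two hypothetical analytic choices (adjust for `z` or not; trim outlying outcomes or not). Every combination of choices is run and its slope is collected, which is the basic mechanics of a multiverse.

```python
import itertools

import numpy as np


def simulate(n=2000, seed=1):
    """Toy data: z confounds the x -> y relationship; the true effect of x is 0.3."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=n)                  # pre-treatment covariate
    x = 0.5 * z + rng.normal(size=n)        # exposure, partly driven by z
    y = 0.3 * x + 0.4 * z + rng.normal(size=n)
    return x, y, z


def ols_slope(y, *predictors):
    """Coefficient on the first predictor from an OLS fit with an intercept."""
    design = np.column_stack([np.ones_like(y), *predictors])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[1]


def multiverse(x, y, z):
    """Run every combination of two analytic choices and collect the x slope."""
    results = {}
    for adjust, trim in itertools.product([False, True], repeat=2):
        keep = np.ones(len(y), dtype=bool)
        if trim:
            # drop outcomes more than 2.5 SD from the mean
            keep = np.abs(y - y.mean()) < 2.5 * y.std()
        predictors = (x[keep], z[keep]) if adjust else (x[keep],)
        results[(adjust, trim)] = ols_slope(y[keep], *predictors)
    return results


for (adjust, trim), slope in multiverse(*simulate()).items():
    print(f"adjust_for_z={adjust!s:5} trim_outliers={trim!s:5} slope={slope:.2f}")
```

Under this toy model the unadjusted slopes target cov(x, y)/var(x) = 0.575/1.25 ≈ 0.46, while the adjusted ones target the causal effect of 0.3. The spread across the four "universes" therefore mixes two different estimands rather than reflecting analytic uncertainty about one quantity.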

Transparent and comprehensive statistical reporting is critical for ensuring the credibility, reproducibility, and interpretability of psychological research. This paper offers a structured set of guidelines for reporting statistical analyses in quantitative psychology, emphasizing clarity at both the planning and results stages. Drawing on established recommendations and emerging best practices, we outline key decisions related to hypothesis formulation, sample size justification, preregistration, outlier and missing data handling, statistical model specification, and the interpretation of inferential outcomes. We address considerations across frequentist and Bayesian frameworks and fixed as well as sequential research designs, including guidance on effect size reporting, equivalence testing, and the appropriate treatment of null results. To facilitate implementation of these recommendations, we provide the Transparent Statistical Reporting in Psychology (TSRP) Checklist that researchers can use to systematically evaluate and improve their statistical reporting practices (https://osf.io/t2zpq/). In addition, we provide a curated list of freely available tools, packages, and functions that researchers can use to implement transparent reporting practices in their own analyses to bridge the gap between theory and practice. To illustrate the practical application of these principles, we provide a side-by-side comparison of insufficient versus best-practice reporting using a hypothetical cognitive psychology study. By adopting transparent reporting standards, researchers can improve the robustness of individual studies and facilitate cumulative scientific progress through more reliable meta-analyses and research syntheses.

Our paper on improving statistical reporting in psychology is now online 🎉

As a part of this paper, we also created the Transparent Statistical Reporting in Psychology checklist, which researchers can use to improve their statistical reporting practices

www.nature.com/articles/s44...

14.11.2025 20:43

“Google's strategic transformation into an AI-first company fundamentally conflicts with maintaining niche academic services like Scholar”

11.11.2025 22:30

In general I think it's hard to combat scientific misinformation when some of the best research is locked behind an academic paywall, while lots of nonsense gets published free for everyone to read in predatory journals.

28.09.2025 17:25

Folks, always share your code. It doesn’t have to be perfect to be helpful. And if you feel that it’s still too messy or not sufficiently clean to be shared, you shouldn’t submit yet. After all, there could be mistakes in your mess.

09.05.2025 10:21

Assistant or Associate Professor in Epidemiology

Montreal, Quebec, Canada United States #Epijobs

careers.apha.org/jobs/2125722...

05.05.2025 02:09

Clarifying causal mediation analysis for the applied researcher:

Defining effects based on what we want to learn

Trang Quynh Nguyen, Ian Schmid, Elizabeth A. Stuart

Johns Hopkins Bloomberg School of Public Health

The incorporation of causal inference in mediation analysis has led to theoretical and methodological advancements – effect definitions with causal interpretation, clarification of assumptions required for effect identification, and an expanding array of options for effect estimation. However, the literature on these results is fast-growing and complex, which may be confusing to researchers unfamiliar with causal inference or unfamiliar with mediation. The goal of this paper is to help ease the understanding and adoption of causal mediation analysis. It starts by highlighting a key difference between the causal inference and traditional approaches to mediation analysis and making a case for the need for explicit causal thinking and the causal inference approach in mediation analysis. It then explains in as-plain-as-possible language existing effect types, paying special attention to motivating these effects with different types of research questions, and using concrete examples for illustration. This presentation differentiates two perspectives (or purposes of analysis): the explanatory perspective (aiming to explain the total effect) and the interventional perspective (asking questions about hypothetical interventions on the exposure and mediator, or hypothetically modified exposures). For the latter perspective, the paper proposes tapping into a general class of interventional effects that contains as special cases most of the usual effect types – interventional direct and indirect effects, controlled direct effects and also a generalized interventional direct effect type, as well as the total effect and overall effect...

Just discovered this excellent paper on mediation analysis in Psych Methods. The focus is on defining various effects; I really appreciate how the authors contrast the "traditional" approach with "causal" mediation analysis. Great job picking up readers where they are!

www.researchgate.net/publication/...

16.04.2025 09:11

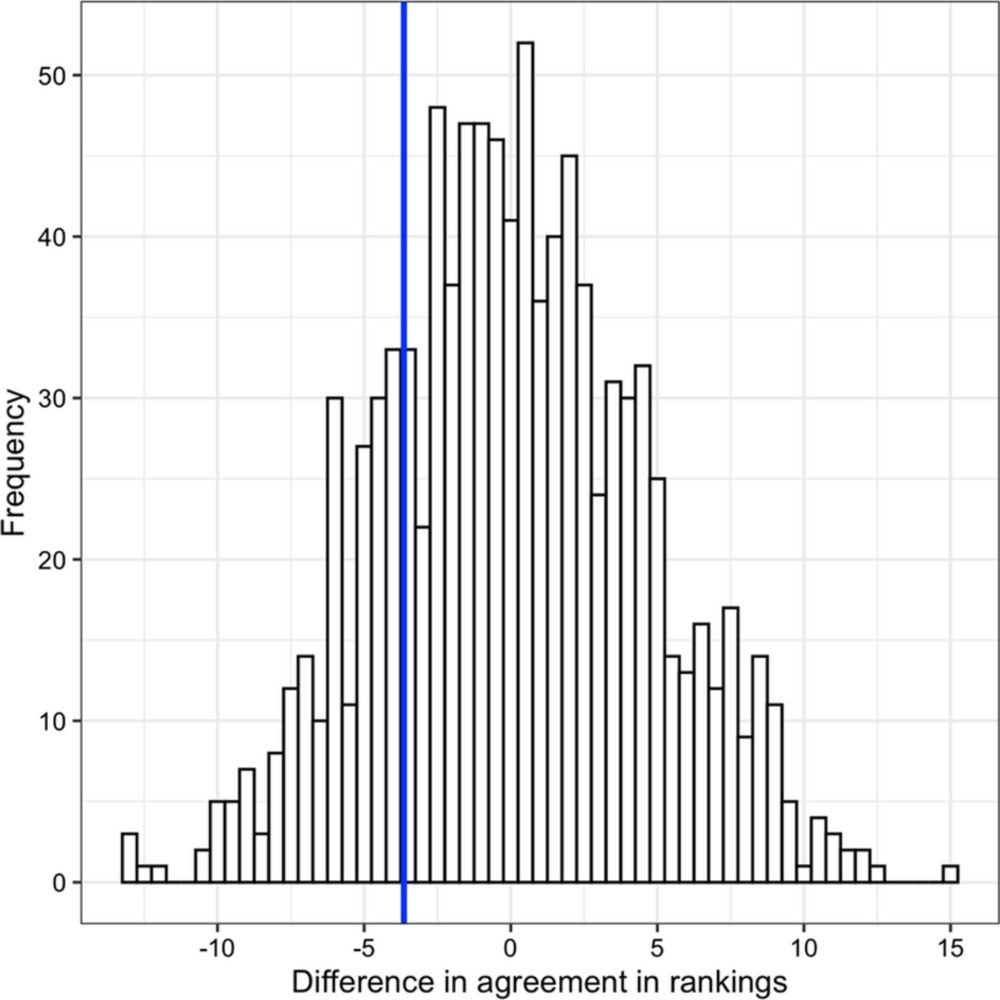

New research alert! Our study investigates the effectiveness of human-only, AI-assisted, and AI-led teams in assessing the reproducibility of quantitative social science research. We've got some surprising findings!

22.01.2025 02:22

A strong contender, at least: arxiv.org/abs/2405.08675

27.12.2024 17:13

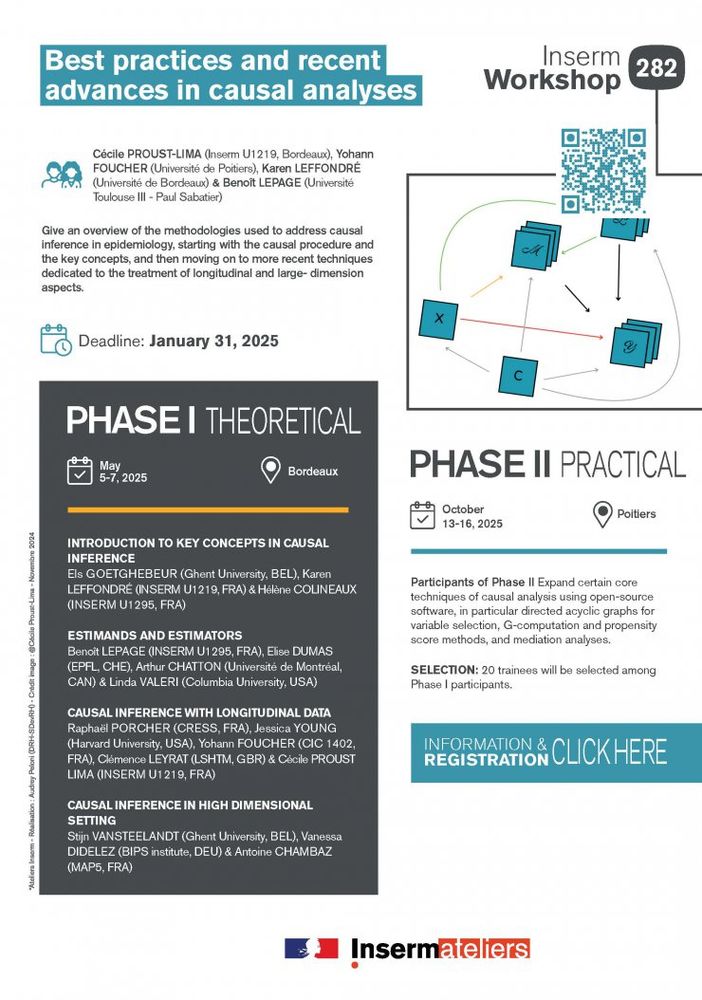

📣 Do you want to learn about recent advances in causal inference?

Colleagues at INSERM are organising a workshop gathering international experts in the field. Bonus: it's happening in two amazing locations 🌇🇫🇷

18.12.2024 09:02

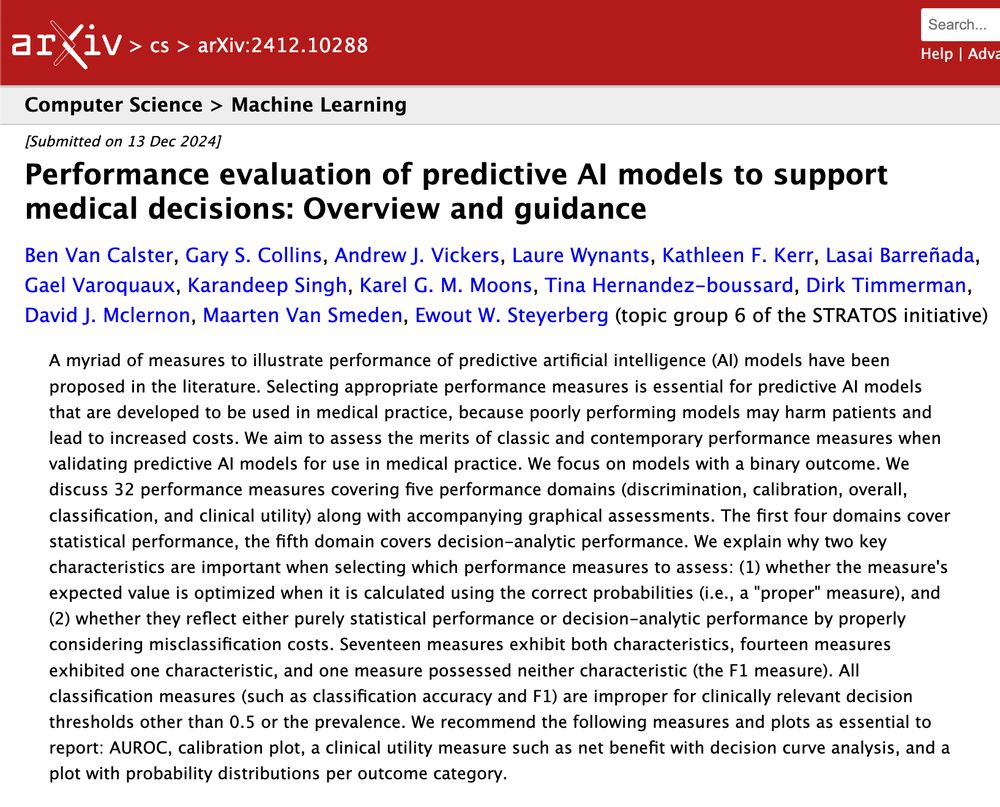

NEW PREPRINT

A detailed overview of 32 popular predictive performance metrics for prediction models

arxiv.org/abs/2412.10288

16.12.2024 08:44

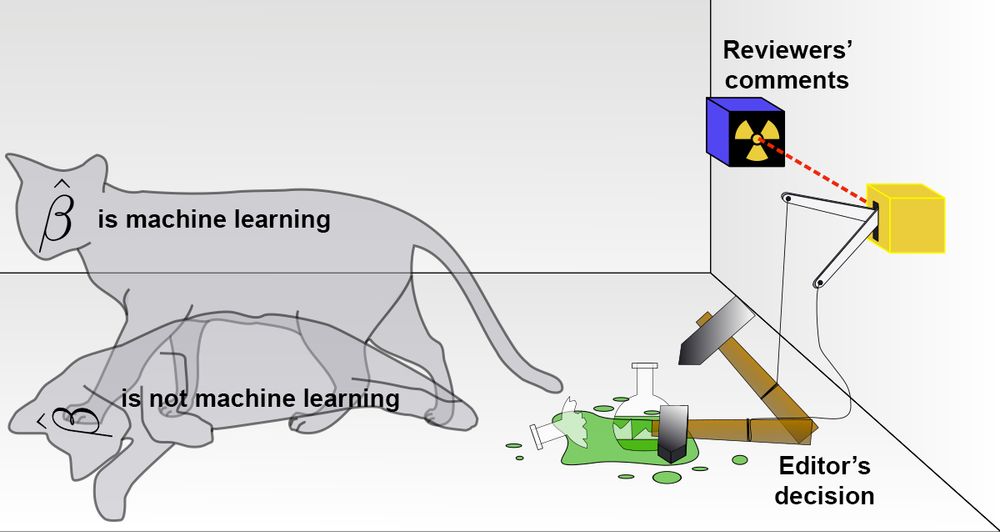

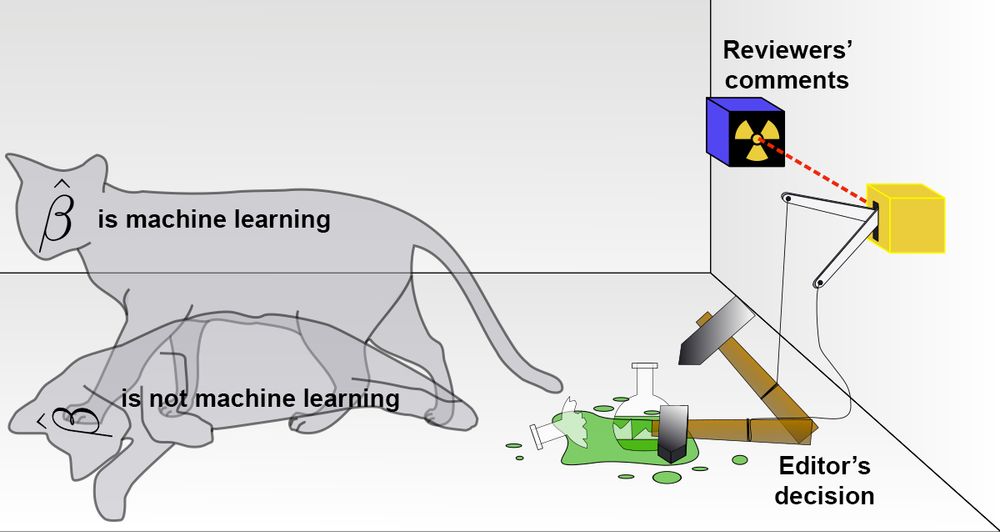

Schrodinger's cat, but the radioactive source is labeled "reviewers' comments", the hammer for the poison is labeled "editor's decision", and the alive cat is labeled "beta-hat is machine learning" and the dead cat is labeled "beta-hat is not machine learning"

best I got for Schrodinger's regression

27.11.2024 17:32

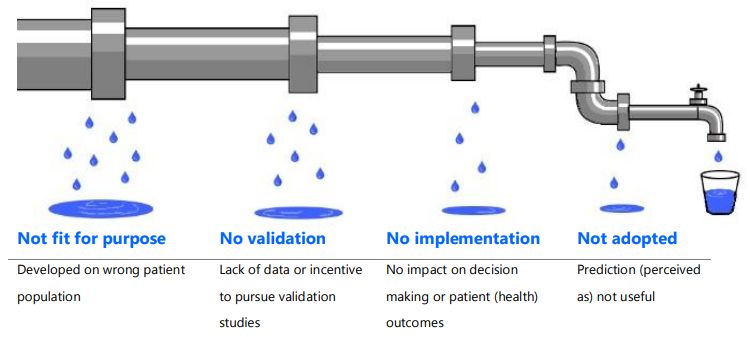

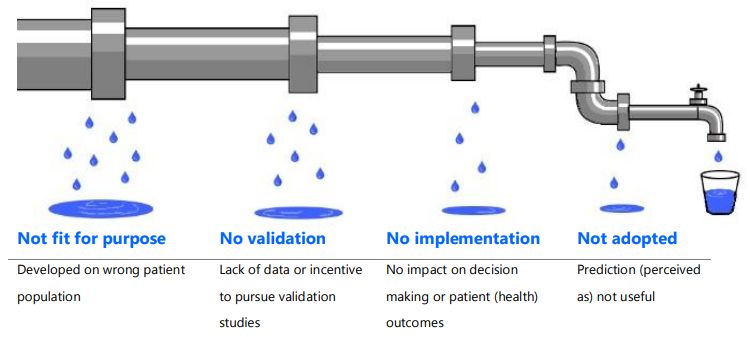

Leaky Clinical Prediction Models

The "Leaky prognostic model adoption pipeline" by @maartenvsmeden.bsky.social and colleagues is probably one of my most used figures when discussing building useful clinical prediction models. See the full paper here: publications.ersnet.org/content/erj/... #MLSky #stats #rstats #statistics

14.11.2024 15:29

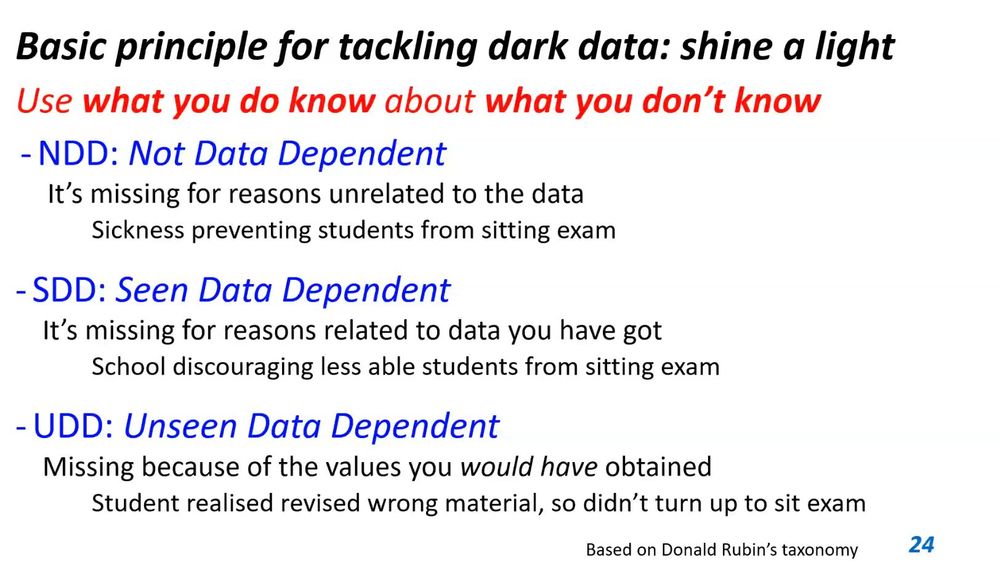

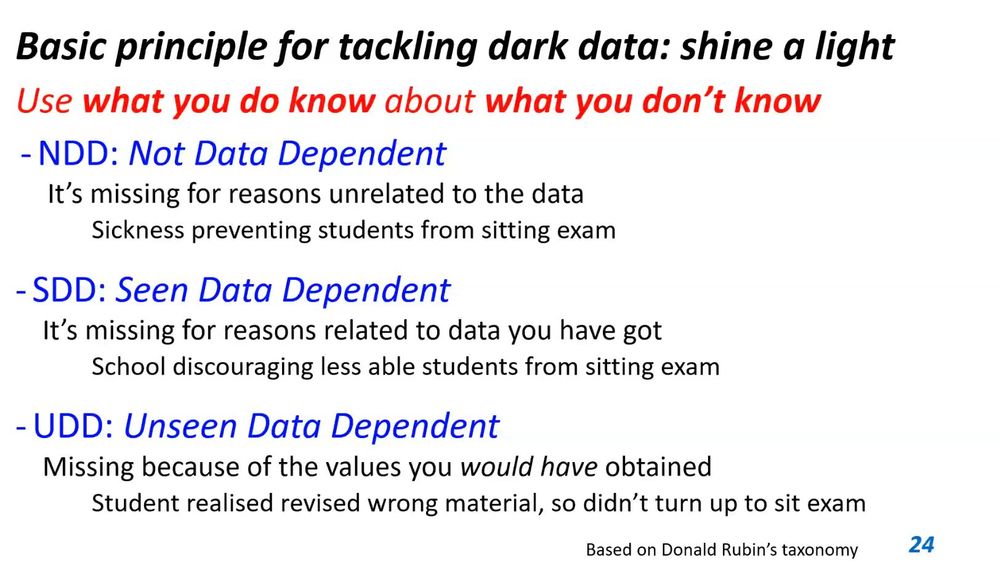

Was recently reminded of David Hand's alternative missing data taxonomy, which renames Donald Rubin's (in)famous taxonomy MCAR/MAR/MNAR to NDD/SDD/UDD. I am not generally a fan of renaming things, but this might be the exception.

Source: rss.org.uk/training-eve...

11.11.2024 09:13
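As a rough illustration of what the two sets of labels describe, the sketch below makes one variable partly missing under each mechanism and compares a complete-case mean with a regression-imputed mean. Everything here is assumed for the example: the variable names `age` and `income`, the coefficients, and the logistic response model are made up. Hand's labels, as read here, map MCAR to NDD (not data dependent), MAR to SDD (seen data dependent), and MNAR to UDD (unseen data dependent).

```python
import numpy as np


def observe_prob(score):
    """Logistic probability that a value is observed, given some score."""
    return 1.0 / (1.0 + np.exp(-score))


def imputed_mean(age, income, observed):
    """Fit income ~ age on complete cases, fill gaps with predictions, average."""
    slope, intercept = np.polyfit(age[observed], income[observed], 1)
    filled = np.where(observed, income, intercept + slope * age)
    return filled.mean()


def compare_mechanisms(n=100_000, seed=0):
    rng = np.random.default_rng(seed)
    age = rng.normal(size=n)                    # always observed
    income = 0.8 * age + rng.normal(size=n)     # sometimes missing; true mean is 0
    p_observed = {
        "MCAR / NDD": np.full(n, 0.5),          # unrelated to any data
        "MAR / SDD": observe_prob(2 * age),     # depends only on observed age
        "MNAR / UDD": observe_prob(2 * income), # depends on the unseen value itself
    }
    results = {}
    for name, p in p_observed.items():
        observed = rng.random(n) < p
        results[name] = (income[observed].mean(), imputed_mean(age, income, observed))
    return results


for name, (cc, imp) in compare_mechanisms().items():
    print(f"{name:11} complete-case mean={cc:+.2f} regression-imputed mean={imp:+.2f}")
```

Under these assumptions, both estimates should sit near the true mean of 0 in the NDD case. In the SDD case the complete-case mean is pulled upward, but imputing from the observed `age` recovers the mean. In the UDD case neither approach recovers it from the observed data alone, which is arguably what the "unseen data dependent" name conveys more directly than "not at random".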

A conversation on treatment effects

The trial statistician and the clinical investigator took a step back to admire their creation.

You can't understand what tx effects can be estimated using clinical RCTs without understanding the REAL-WORLD context that clinical RCTs are conducted in. How patients are enrolled, and how medicines are "approved" are critical parts of this context.

(ICYMI)

statsepi.substack.com/p/a-conversa...

08.11.2024 14:26

Since I have new followers, time to re-up this:

do you want to use my textbook (EPIDEMIOLOGY BY DESIGN) to teach? I have materials to share! I will give you lecture notes and exercises and exams and more!!

12.09.2024 16:22

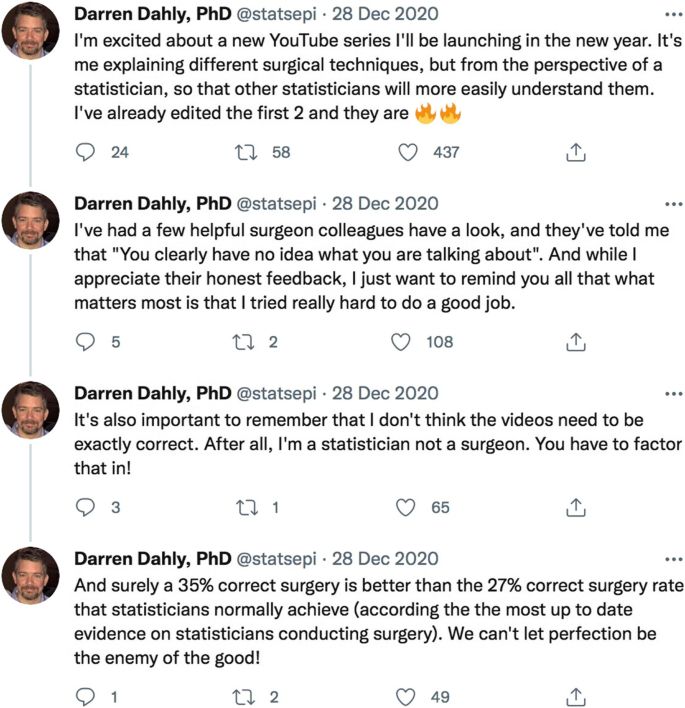

Every so often I'm reminded that a few of my tweets were included in a scientific paper and I'm still not exactly sure how I feel about that.

trialsjournal.biomedcentral.com/articles/10....

09.10.2024 11:40

Ellie sitting at a table coloring at the library, with bookshelves as far as the eye can see.

Words cannot describe how wonderful libraries are. They are a true treasure of society. The fact that they are getting their funding cut so police forces can have tanks and tactical gear is a true crime against culture.

Libraries are one of the greatest things on earth, no hyperbole.

16.06.2024 14:36

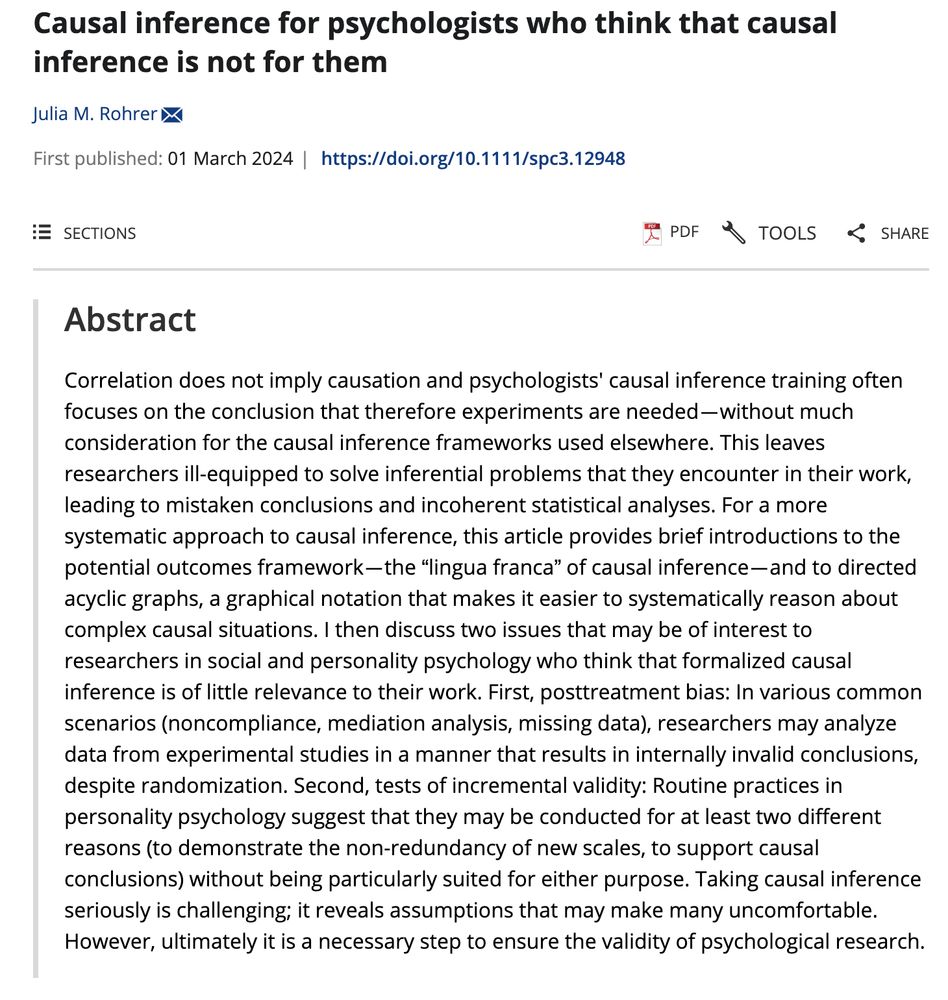

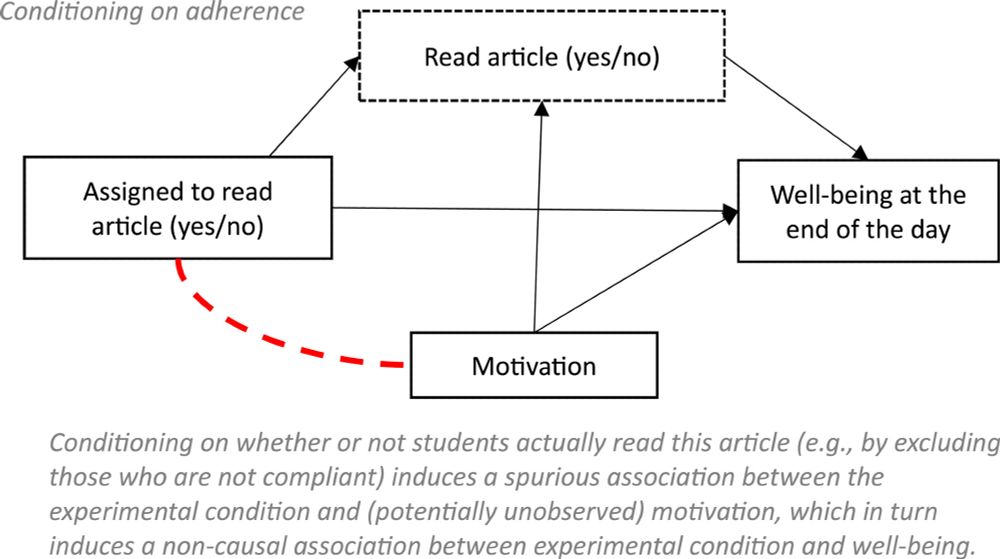

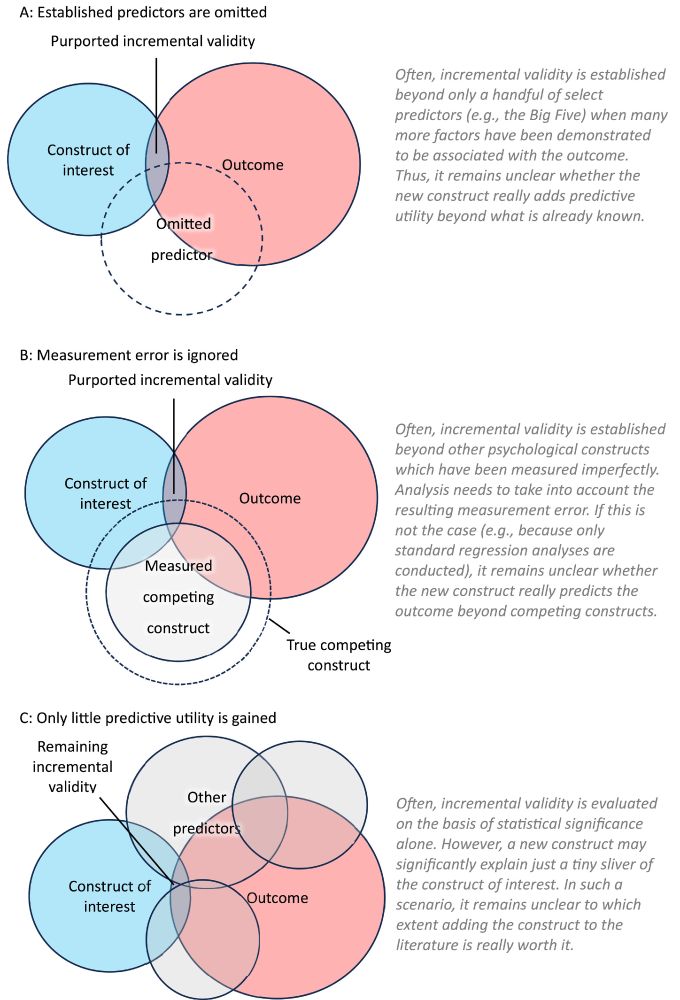

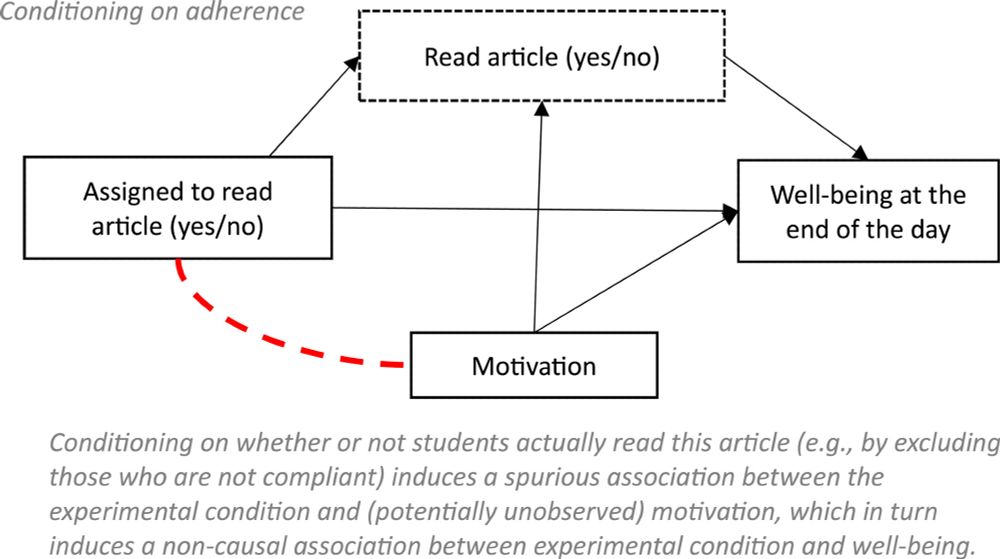

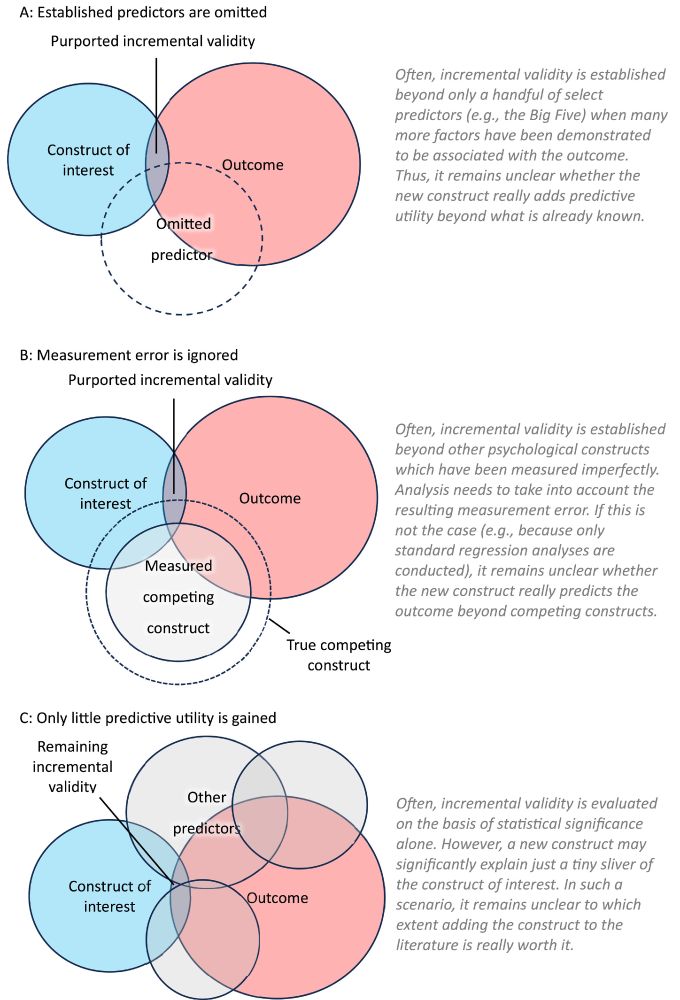

Causal inference for psychologists who think that causal inference is not for them. Correlation does not imply causation and psychologists' causal inference training often focuses on the conclusion that therefore experiments are needed—without much consideration for the causal inference frameworks used elsewhere. This leaves researchers ill-equipped to solve inferential problems that they encounter in their work, leading to mistaken conclusions and incoherent statistical analyses. For a more systematic approach to causal inference, this article provides brief introductions to the potential outcomes framework—the “lingua franca” of causal inference—and to directed acyclic graphs, a graphical notation that makes it easier to systematically reason about complex causal situations. I then discuss two issues that may be of interest to researchers in social and personality psychology who think that formalized causal inference is of little relevance to their work. First, posttreatment bias:...

DAG illustrating posttreatment bias which can be induced in randomized experiments whenever researchers condition on posttreatment variables
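The mechanism in this DAG can be checked with a small simulation. The sketch below assumes a generic collider structure T → M ← U → Y with made-up coefficients: a randomized treatment `t` with a true effect of 0.5, an unobserved trait `u`, and a posttreatment variable `m` that `t` and `u` both affect. This is not necessarily the exact example used in the paper. Adding `m` as a "control" in the regression conditions on a posttreatment variable, even though treatment was randomized.

```python
import numpy as np


def treatment_coefficients(n=100_000, seed=2):
    """Return the coefficient on a randomized treatment without and with adjusting
    for a posttreatment variable m (structure T -> M <- U -> Y)."""
    rng = np.random.default_rng(seed)
    t = rng.integers(0, 2, size=n).astype(float)  # randomized 0/1 treatment
    u = rng.normal(size=n)                        # unobserved trait
    m = t + u + rng.normal(size=n)                # posttreatment variable (collider)
    y = 0.5 * t + u + rng.normal(size=n)          # true treatment effect is 0.5
    ones = np.ones(n)
    unadjusted, *_ = np.linalg.lstsq(np.column_stack([ones, t]), y, rcond=None)
    adjusted, *_ = np.linalg.lstsq(np.column_stack([ones, t, m]), y, rcond=None)
    return unadjusted[1], adjusted[1]


unadjusted, adjusted = treatment_coefficients()
print(f"ignoring m: {unadjusted:.2f}   adjusting for m: {adjusted:.2f}")
```

Working through this toy model by hand, E[U | T, M] = (M - T)/2, so E[Y | T, M] = 0.5T + (M - T)/2 = 0.5M. The adjusted coefficient on T should therefore land near 0, even though the true effect is 0.5 and the simple randomized comparison recovers it.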

Figure illustrating various reasons why demonstrations of incremental validity may be unimpressive: established predictors are omitted, measurement error is ignored, only little predictive utility is gained
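One of the listed reasons, ignored measurement error, can be sketched in a few lines. The toy model below is an assumption made for illustration: `y` depends only on the true established predictor `x`, and a new predictor `z` is merely correlated with `x` (correlation 0.6). When `x` is measured with error (reliability below 1), regressing `y` on the observed `x` and `z` gives `z` a nonzero coefficient, which looks like incremental validity.

```python
import numpy as np


def new_predictor_coefficient(reliability, n=100_000, seed=3):
    """Coefficient on a new predictor z after 'controlling for' an error-laden x.

    Toy model: y depends only on the true x; z is merely correlated with x.
    reliability = var(true x) / var(observed x).
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)                        # true established predictor
    z = 0.6 * x + 0.8 * rng.normal(size=n)        # new predictor, corr(x, z) = 0.6
    y = x + rng.normal(size=n)                    # z adds nothing once true x is known
    noise_sd = np.sqrt((1 - reliability) / reliability)
    x_obs = x + noise_sd * rng.normal(size=n)     # what the researcher actually measures
    design = np.column_stack([np.ones(n), x_obs, z])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[2]


for r in (1.0, 0.8, 0.5):
    print(f"reliability={r:.1f}  coefficient on z={new_predictor_coefficient(r):.2f}")
```

Under these assumptions, the population coefficient on `z` is 0 at reliability 1. At reliability 0.5 it is about 0.37 (0.6/1.64 from the normal equations), so the apparent gain comes entirely from measurement error in the established predictor.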

Do you think that learning more about causal inference is not worth it because you're running experiments anyway, or because you're interested in predictive questions? In that case, I've written a paper just for you, out now in SPPC: compass.onlinelibrary.wiley.com/doi/10.1111/...

02.03.2024 05:25

Epidemiology By Design

Periodic reminder, episky medsky! If you teach epidemiology and might be interested in using my textbook (EPIDEMIOLOGY BY DESIGN) --

I will send you ALL MY TEACHING MATERIALS (lecture slides; practice problems; exercises; exams + keys; sample syllabi...)

Just ask! And also --

28.02.2024 00:25