@whylikethis.bsky.social

Assistant Prof. at the Technion. Computational Psycholinguistics, NLP, Cognitive Science. https://lacclab.github.io/

It's officially been 75 years since the proposal of the Turing Test, a good time to bring up 'The Minimal Turing Test':

www.sciencedirect.com/science/arti...

11.09.2025 05:23 — 👍 5 🔁 0 💬 0 📌 0

my friend/colleague Frank Jäkel wrote a book on AI. I sadly don't know German but I happily know Frank, and I've heard him talking about this for a while now, and just on that basis I'd recommend the German speakers in the audience check it out

24.08.2025 15:50 — 👍 16 🔁 3 💬 0 📌 2

We had a lot of fun delivering the Eye Tracking and NLP tutorial at ACL! The slides are available on the tutorial website acl2025-eyetracking-and-nlp.github.io

20.08.2025 07:28 — 👍 3 🔁 0 💬 0 📌 0

Help us record firefly flashes! 👇🙏

25.06.2025 21:32 — 👍 65 🔁 55 💬 5 📌 0

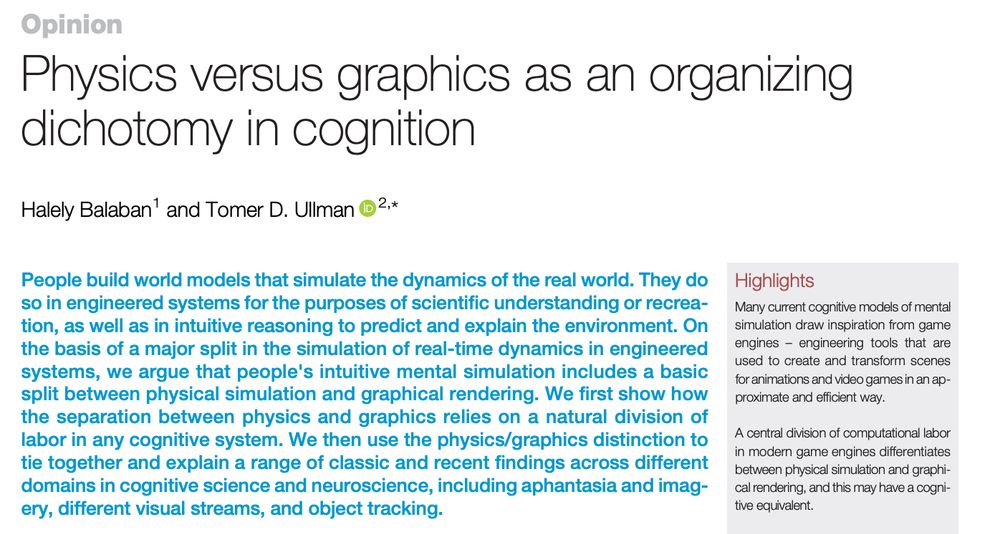

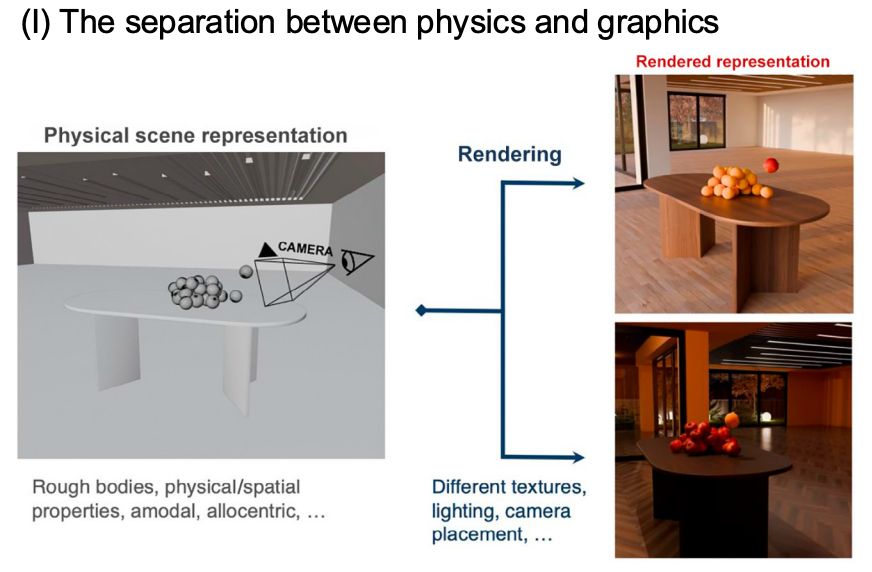

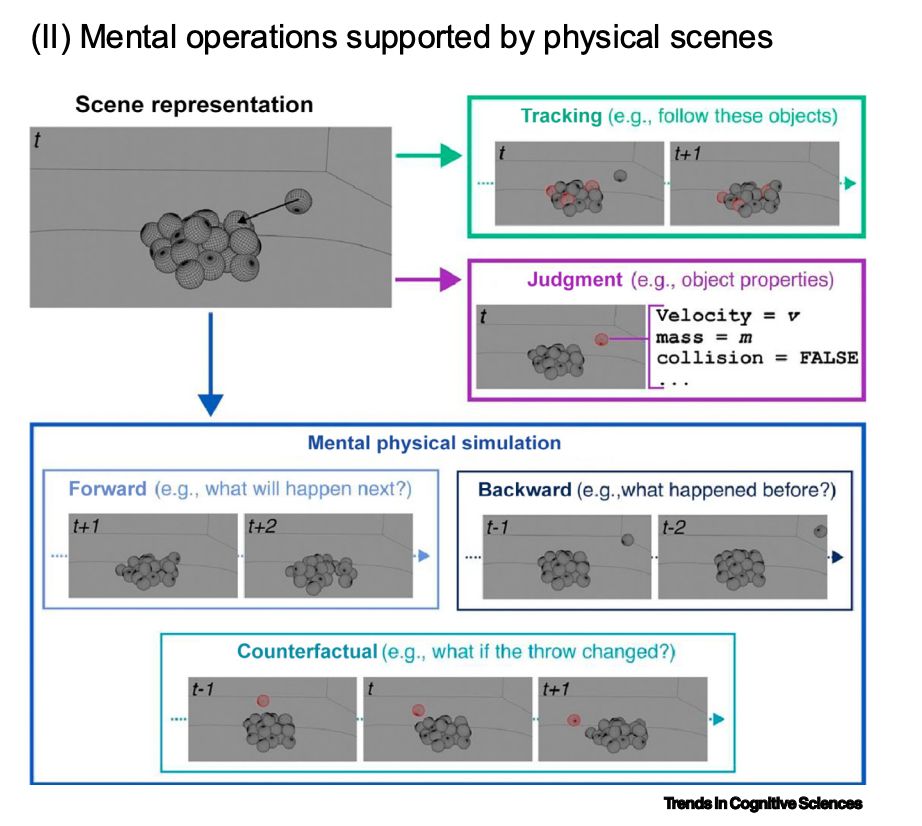

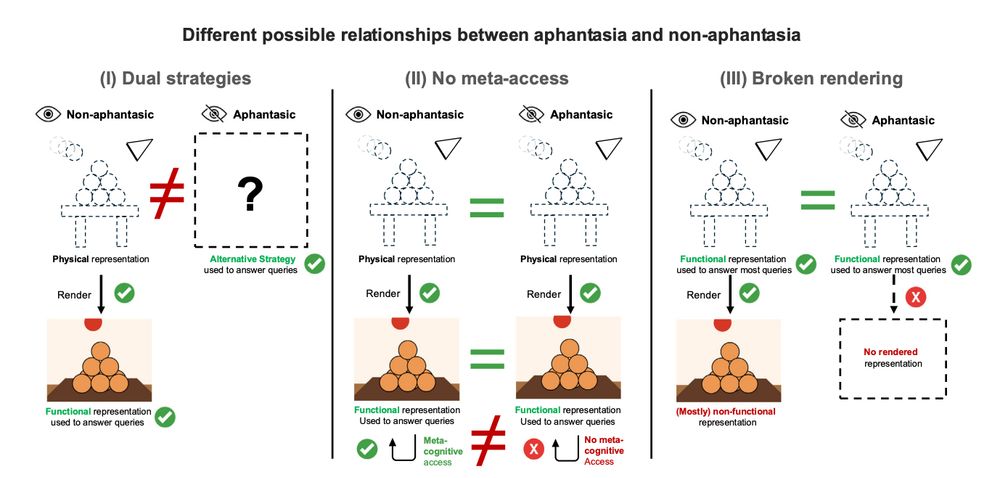

Out now in TiCS, something i've been thinking about a lot:

"Physics vs. graphics as an organizing dichotomy in cognition"

(by Balaban & me)

relevant for many people, related to imagination, intuitive physics, mental simulation, aphantasia, and more

authors.elsevier.com/a/1lBaC4sIRv...

OECS thematic collections.

If you haven't been looking recently at the Open Encyclopedia of Cognitive Science (oecs.mit.edu), here's your reminder that we are a free, open access resource for learning about the science of mind.

Today we are launching our new Thematic Collections to organize our growing set of articles!

I'm hiring a postdoc to start this fall! Come work with me? recruit.ucdavis.edu/JPF07123

30.05.2025 01:30 — 👍 25 🔁 25 💬 0 📌 1

Data and documentation: github.com/lacclab/OneS...

Preprint: osf.io/preprints/ps...

Exciting recent work with OneStop from our lab (more on this soon!!): github.com/lacclab/OneS...

👁️🗨️ 4 sub-corpora: 📖 reading for comprehension, 🔎📖 information seeking, 📖📖 repeated reading, 🔎📖📖 information seeking in repeated reading.

🏋🏽 Text difficulty level manipulation: reading original and simplified texts.

👌 High quality recordings with an EyeLink 1000 Plus eye tracker.

👥 360 participants (English L1) & 152 hours of eye movement recordings - more data than all the publicly available English L1 eye tracking corpora combined!

🗞️ 30 newswire articles in English (162 paragraphs) with reading comprehension questions and auxiliary text annotations.

👀 📖 Big news! 📖 👀

Happy to announce the release of the OneStop Eye Movements dataset! 🎉 🎉

OneStop is the product of over 6 years of experimental design, data collection and data curation.

github.com/lacclab/OneS...

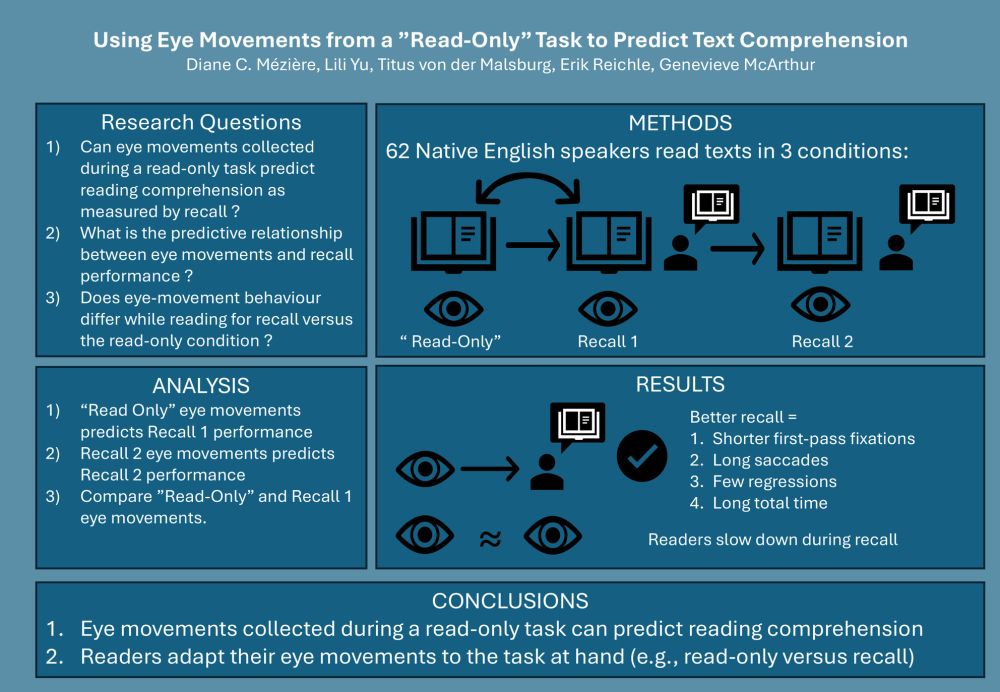

New paper! We show that eye movements during normal reading (no extra task) are effective at predicting reading comprehension as measured by recall. Both early and late eye-movement measures are key. This research was led by the amazing Diane Mézière.

ila.onlinelibrary.wiley.com/doi/10.1002/...

In person (no streaming/zoom) sentence processing workshop at Potsdam with Tal Linzen, Brian Dillon, Titus von der Malsburg, Oezge Bakay, William Timkey, Pia Schoknecht, Michael Vrazitulis, and Johan Hennert:

vasishth.github.io/sentproc-wor...

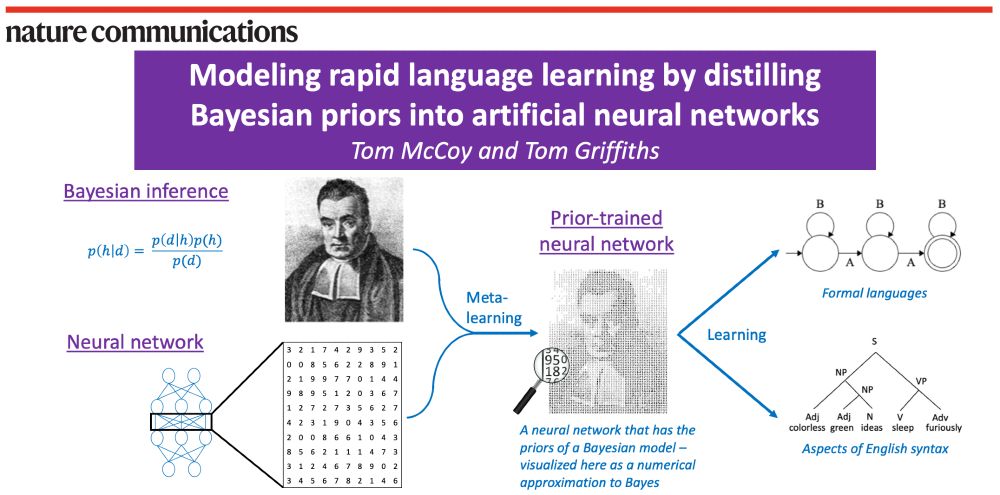

A schematic of our method. On the left are shown Bayesian inference (visualized using Bayes’ rule and a portrait of the Reverend Bayes) and neural networks (visualized as a weight matrix). Then, an arrow labeled “meta-learning” combines Bayesian inference and neural networks into a “prior-trained neural network”, described as a neural network that has the priors of a Bayesian model – visualized as the same portrait of Reverend Bayes but made out of numbers. Finally, an arrow labeled “learning” goes from the prior-trained neural network to two examples of what it can learn: formal languages (visualized with a finite-state automaton) and aspects of English syntax (visualized with a parse tree for the sentence “colorless green ideas sleep furiously”).

🤖🧠 Paper out in Nature Communications! 🧠🤖

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n

On the left is a probabilistic context free grammar (PCFG). On the right is an image of the Transformer architecture. There are arrows going back and forth between the PCFG and the Transformer, showing how the assignment goes back and forth between them.

Made a new assignment for a class on Computational Psycholinguistics:

- I trained a Transformer language model on sentences sampled from a PCFG

- The students' task: Given the Transformer, try to infer the PCFG (w/ a leaderboard for who got closest)

Would recommend!

1/n
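For readers who want to see the first half of this setup in concrete form, here is a minimal sketch of sampling sentences from a PCFG to build a training corpus. The grammar, the names `TOY_PCFG`, `sample`, and `sample_corpus`, and the probabilities are all hypothetical and made up for illustration; they are not the grammar or code used in the assignment, and training the Transformer is not shown.

```python
import random

# Hypothetical toy grammar (an assumption, not the assignment's PCFG).
# Each nonterminal maps to a list of (right-hand side, probability) pairs.
TOY_PCFG = {
    "S": [(("NP", "VP"), 1.0)],
    "NP": [(("Det", "N"), 0.7), (("Det", "Adj", "N"), 0.3)],
    "VP": [(("V", "NP"), 0.6), (("V",), 0.4)],
    "Det": [(("the",), 0.5), (("a",), 0.5)],
    "Adj": [(("green",), 0.5), (("small",), 0.5)],
    "N": [(("dog",), 0.5), (("idea",), 0.5)],
    "V": [(("sleeps",), 0.5), (("sees",), 0.5)],
}


def sample(symbol, grammar, rng):
    """Expand `symbol` top-down and return the resulting list of words."""
    if symbol not in grammar:
        # Anything without a rule is treated as a terminal word.
        return [symbol]
    rules = grammar[symbol]
    # Pick one expansion according to the rule probabilities.
    rhs = rng.choices(
        [right for right, _ in rules],
        weights=[prob for _, prob in rules],
        k=1,
    )[0]
    words = []
    for child in rhs:
        words.extend(sample(child, grammar, rng))
    return words


def sample_corpus(grammar, n, seed=0):
    """Sample `n` sentences starting from the symbol "S"."""
    rng = random.Random(seed)
    return [" ".join(sample("S", grammar, rng)) for _ in range(n)]


if __name__ == "__main__":
    for sentence in sample_corpus(TOY_PCFG, 5):
        print(sentence)
```

A corpus like this would be the language model's training data; the inference task then runs in the other direction, from the trained model's behavior back to the rules and probabilities.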

Check out our new work on introspection in LLMs! 🔍

TL;DR we find no evidence that LLMs have privileged access to their own knowledge.

Beyond the study of LLM introspection, our findings inform an ongoing debate in linguistics research: prompting (eg grammaticality judgments) =/= prob measurement!

it's only Consciousness if it comes from the Consciousness region of the brain, otherwise it's just sparkling attention

11.03.2025 12:36 — 👍 30 🔁 3 💬 3 📌 1

new preprint on Theory of Mind in LLMs, a topic I know a lot of people care about (I care. I'm part of people):

"Re-evaluating Theory of Mind evaluation in large language models"

(by Hu* @jennhu.bsky.social , Sosa, and me)

link: arxiv.org/pdf/2502.21098

Out today in Nature Machine Intelligence!

From childhood on, people can create novel, playful, and creative goals. Models have yet to capture this ability. We propose a new way to represent goals and report a model that can generate human-like goals in a playful setting... 1/N

Hello! I'm looking to hire a post-doc, to start this Summer or Fall.

It'd be great if you could share this widely with people you think might be interested.

More details on the position & how to apply: bit.ly/cocodev_post...

Official posting here: academicpositions.harvard.edu/postings/14723

Our language neuroscience lab (evlab.mit.edu) is looking for a new lab manager/FT RA to start in the summer. Apply here: tinyurl.com/3r346k66 We'll start reviewing apps in early Mar. (Unfortunately, MIT does not sponsor visas for these positions, but OPT works.)

05.02.2025 14:43 — 👍 30 🔁 20 💬 0 📌 0

The 3rd Workshop on Eye Movements and the Assessment of Reading Comprehension will take place on June 5–7, 2025 at the University of Stuttgart!

Submit an abstract by March 1st and join us!

tmalsburg.github.io/Comprehensio...

So excited to receive the Troland Award!! Huge congrats to the other winner—Nick Turk-Browne! And TY, as always, to my mentors&nominators, to my amazing labbies past&present, and to all the wonderful and supportive colleagues in our broader scientific community. <3 www.nasonline.org/award/trolan...

23.01.2025 17:50 — 👍 220 🔁 21 💬 34 📌 0

Fantastic resource!

23.01.2025 17:30 — 👍 0 🔁 0 💬 0 📌 0

Happy New Year everyone! Jim and I just put up our January 2025 release of Speech and Language Processing! Check it out here: web.stanford.edu/~jurafsky/sl...

12.01.2025 20:44 — 👍 151 🔁 50 💬 1 📌 1

postdoc opportunity in @alexwoolgar.bsky.social and my lab, based in Cambridge UK! seeking someone with excellent analytical skills to join our project using time-resolved human neuroimaging to study receptive language processing in non-speaking autistic individuals 🧠✨

www.jobs.cam.ac.uk/job/48835/

Reminder that we are looking for papers using LLMs for humanities research, for a special issue of the Computational Humanities Research Journal.

Deadline January 31st!

#NLP #DigitalHumanities #CulturalAnalytics

02.01.2025 10:09 — 👍 2 🔁 0 💬 0 📌 0

New potential side effect of participating in an eyetracking study in our lab - curly eyelashes #thingswedoforscience

02.01.2025 10:09 — 👍 4 🔁 0 💬 2 📌 0