🔗 Resources for ESPnet-SDS:

📂 Codebase (part of ESPnet): github.com/espnet/espnet

📖 README & User Guide: github.com/espnet/espne...

🎥 Demo Video: www.youtube.com/watch?v=kI_D...

@siddhant-arora.bsky.social

This was joint work with my co-authors at @ltiatcmu.bsky.social, Sony Japan, and Hugging Face (@shinjiw.bsky.social @pengyf.bsky.social @jiatongs.bsky.social @wanchichen.bsky.social @shikharb.bsky.social @emonosuke.bsky.social @cromz22.bsky.social @reach-vb.hf.co @wavlab.bsky.social).

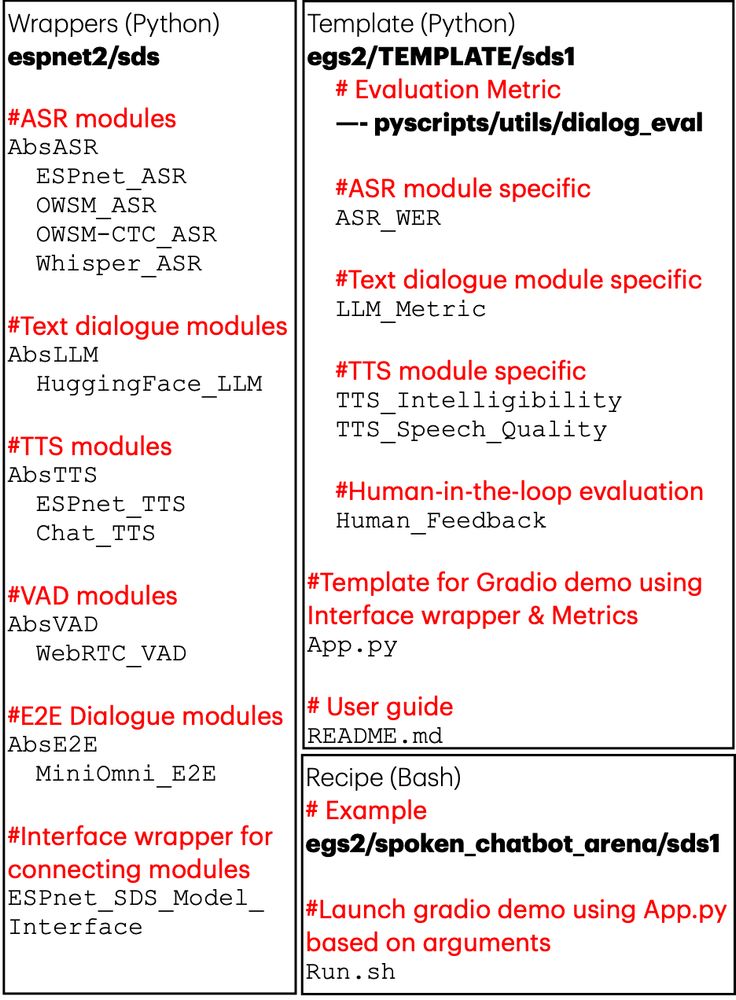

ESPnet-SDS provides:

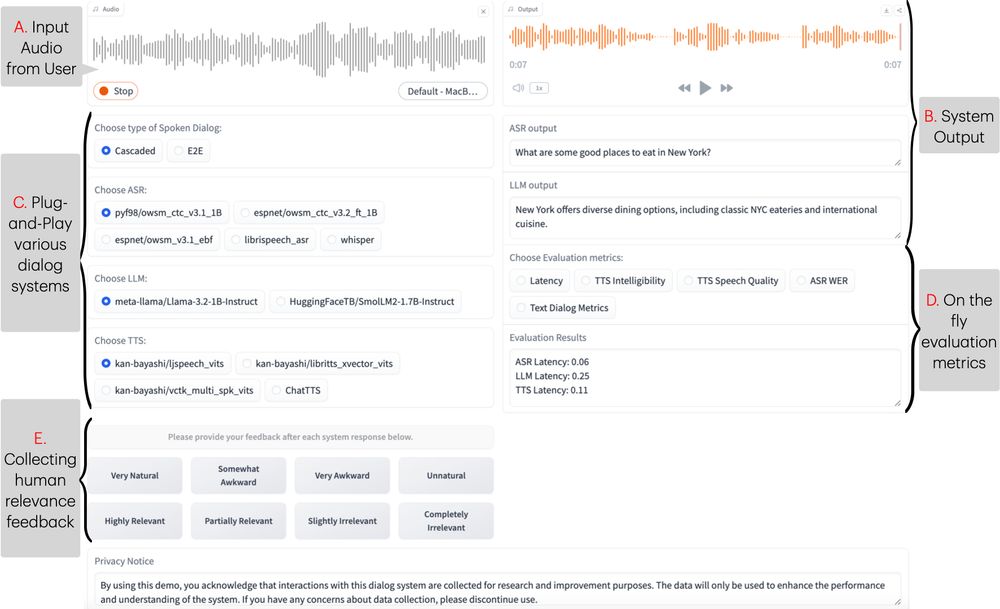

✅ Unified Web UI with support for both cascaded & E2E models

✅ Real-time evaluation of latency, semantic coherence, audio quality & more

✅ Mechanism for collecting user feedback

✅ Open source with modular code, so new systems can be incorporated easily!
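To make "modular code" and "real-time latency evaluation" concrete, here is a minimal sketch of a cascaded pipeline (ASR -> LLM -> TTS) built from pluggable stages, timing each stage as it runs. The `CascadedPipeline` class and the `toy_*` stages are hypothetical stand-ins for illustration only; they are not ESPnet-SDS's actual API.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class CascadedPipeline:
    """Hypothetical: runs pluggable stages in order and records wall-clock latency per stage."""
    stages: List[Tuple[str, Callable[[Any], Any]]]
    latencies: Dict[str, float] = field(default_factory=dict)

    def run(self, user_input: Any) -> Any:
        self.latencies = {}
        x = user_input
        for name, stage in self.stages:
            start = time.perf_counter()
            x = stage(x)  # the output of one stage feeds the next
            self.latencies[name] = time.perf_counter() - start
        return x

    def total_latency(self) -> float:
        # In a strictly sequential cascade, response latency is the sum of stage times.
        return sum(self.latencies.values())


# Toy stand-ins (hypothetical, not ESPnet components).
def toy_asr(audio: List[float]) -> str:
    return "hello there"


def toy_llm(text: str) -> str:
    return f"You said: {text}"


def toy_tts(text: str) -> List[float]:
    return [0.0] * len(text)  # one dummy "sample" per character


pipeline = CascadedPipeline([("asr", toy_asr), ("llm", toy_llm), ("tts", toy_tts)])
waveform = pipeline.run([0.1, 0.2, 0.3])
print(len(waveform), sorted(pipeline.latencies))  # 21 ['asr', 'llm', 'tts']
```

Under this design, swapping in a new system means replacing one `(name, callable)` pair, and per-stage latencies come for free because timing lives in the pipeline rather than in each component. An E2E model would simply be a single stage.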

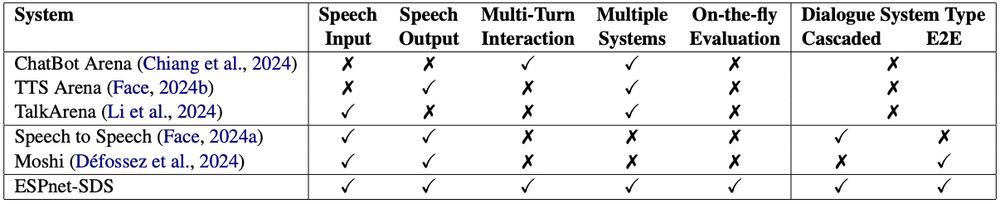

Spoken dialogue systems (SDS) are everywhere, with many new systems emerging.

But evaluating and comparing them is challenging:

❌ No standardized interface: each system comes with its own frontend & backend

❌ Complex and inconsistent evaluation metrics

ESPnet-SDS aims to fix this!

New #NAACL2025 demo! Excited to introduce ESPnet-SDS, a new open-source toolkit for building unified web interfaces for both cascaded & end-to-end spoken dialogue systems, with real-time evaluation and more!

📜: arxiv.org/abs/2503.08533

Live Demo: huggingface.co/spaces/Siddh...

This work was done during my internship at Apple with Zhiyun Lu, Chung-Cheng Chiu, and @ruomingpang.bsky.social, along with co-authors at @ltiatcmu.bsky.social (@shinjiw.bsky.social @wavlab.bsky.social).

(9/9)

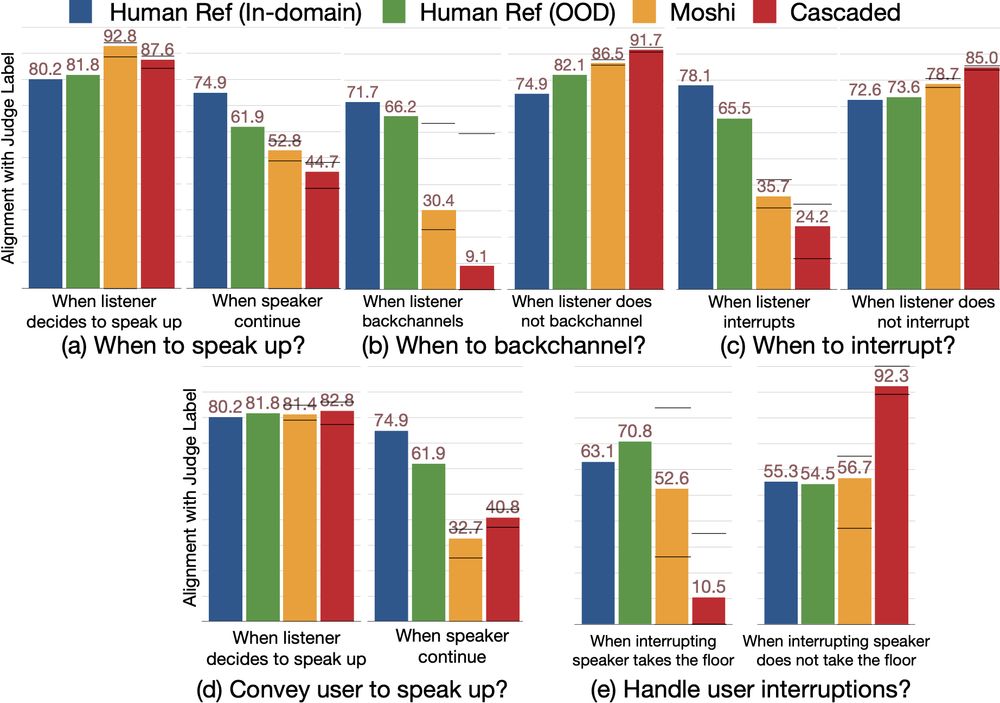

GPT-4o rarely backchannels or interrupts but shows strong turn-taking capabilities. More analyses of audio FMs' ability to understand and predict turn-taking events can be found in our full paper.

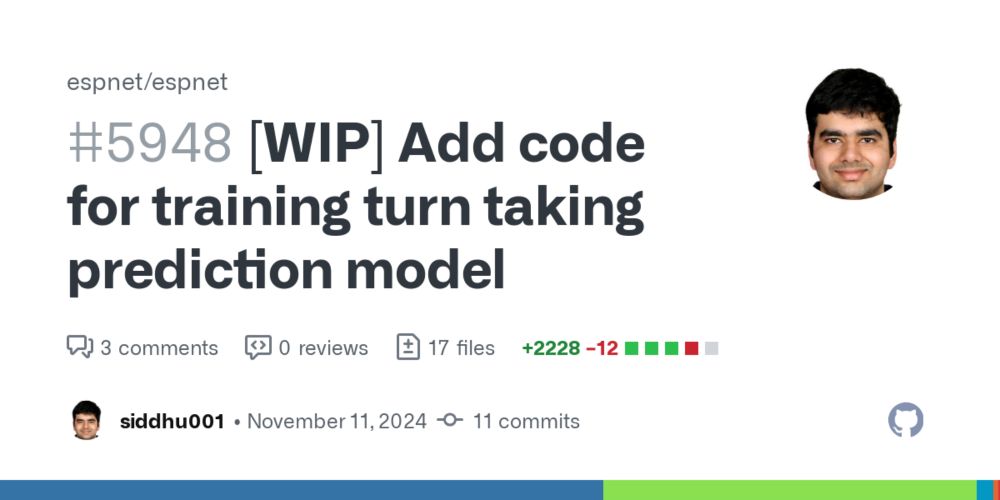

We’re open-sourcing our evaluation platform: github.com/espnet/espne...!

(8/9)

🤯 What did we find?

❌ Both systems fail to speak up when they should and do not give the user enough cues when they want to keep the conversation floor.

❌ Moshi interrupts too aggressively.

❌ Both systems rarely backchannel.

❌ User interruptions are poorly managed.

(7/9)

We train a causal judge model on real human-human conversations to predict turn-taking events. ⚡

Strong out-of-distribution (OOD) generalization makes it a reliable proxy for human judgment!

No need for costly human judgments: our model judges the timing of turn-taking events automatically!

(6/9)
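As a rough illustration of how a judge's predictions could be used to score a system's timing, here is a minimal sketch. It assumes a hypothetical interface in which the judge emits, for every frame, the probability that taking the turn there is appropriate (computed causally, from past frames only), and the system's behavior is a frame-aligned list of turn-taking decisions. The function name, the 0.5 threshold, and the returned fields are illustrative assumptions, not the paper's exact protocol.

```python
from typing import Dict, Sequence


def score_turn_taking(
    judge_probs: Sequence[float],
    system_took_turn: Sequence[bool],
    threshold: float = 0.5,
) -> Dict[str, float]:
    """Hypothetical scoring: compare per-frame system decisions with judge probabilities.

    judge_probs[t] is assumed to be the judge's probability, using frames <= t only,
    that taking the turn at frame t is appropriate.
    """
    if len(judge_probs) != len(system_took_turn):
        raise ValueError("judge_probs and system_took_turn must be frame-aligned")
    acted = [t for t, took in enumerate(system_took_turn) if took]
    judged_ok = [t for t, p in enumerate(judge_probs) if p >= threshold]
    # A decision counts as well timed if the judge also considered that frame appropriate.
    well_timed = sum(1 for t in acted if judge_probs[t] >= threshold)
    return {
        "decisions": len(acted),
        "well_timed_rate": well_timed / len(acted) if acted else 0.0,
        # Frames where the judge favored taking the turn but the system stayed silent.
        "missed_frames": sum(1 for t in judged_ok if not system_took_turn[t]),
    }


print(score_turn_taking([0.1, 0.2, 0.8, 0.9, 0.3, 0.7],
                        [False, True, False, True, False, False]))
# {'decisions': 2, 'well_timed_rate': 0.5, 'missed_frames': 2}
```

The causal constraint matters because a real-time system can only act on what it has heard so far, so a judge that peeks at future frames would grade the system against information it never had.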

Global metrics fail to capture when turn-taking events happen!

Moshi generates overlapping speech, but is it helpful or disruptive to the natural flow of the conversation? 🤔

(5/9)

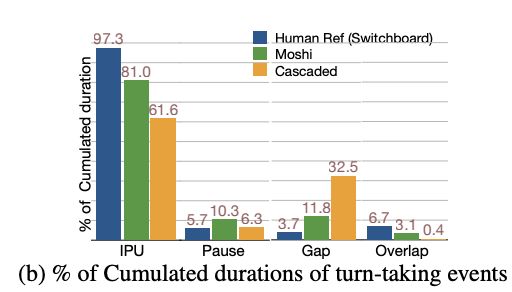

We compare E2E (Moshi, us.moshi.chat) & cascaded (github.com/huggingface/...) dialogue systems through a user study, using global corpus-level statistics!

Moshi: small gaps and some overlap, but less overlap than natural dialogue.

Cascaded: higher latency, minimal overlap.

(4/9)
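Corpus-level gap and overlap statistics like these are typically computed from time-stamped speech segments. Here is a simplified sketch, assuming each dialogue is a list of `(speaker, start, end)` segments in seconds: at every speaker change, the offset `next.start - prev.end` is a gap if positive and an overlap if negative. The `Segment` class, the toy dialogue, and the exact statistics reported are illustrative assumptions; the paper's definitions may differ (e.g. in how backchannels are handled).

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Segment:
    speaker: str
    start: float  # seconds
    end: float    # seconds


def transition_offsets(segments: List[Segment]) -> List[float]:
    """Offset at each speaker change: next.start - prev.end (> 0 is a gap, < 0 an overlap)."""
    segs = sorted(segments, key=lambda s: s.start)
    return [nxt.start - prev.end
            for prev, nxt in zip(segs, segs[1:])
            if nxt.speaker != prev.speaker]


def corpus_stats(dialogues: List[List[Segment]]) -> Dict[str, float]:
    """Pool transitions over all dialogues and summarize gaps and overlaps."""
    offsets = [o for d in dialogues for o in transition_offsets(d)]
    gaps = [o for o in offsets if o > 0]
    overlaps = [-o for o in offsets if o < 0]
    return {
        "n_transitions": len(offsets),
        "mean_gap_s": mean(gaps) if gaps else 0.0,
        "mean_overlap_s": mean(overlaps) if overlaps else 0.0,
        "overlap_rate": len(overlaps) / len(offsets) if offsets else 0.0,
    }


# Toy dialogue (made-up timings, not data from the study).
dialogue = [Segment("user", 0.0, 2.0), Segment("system", 2.3, 4.0),
            Segment("user", 3.8, 5.0), Segment("system", 5.5, 6.0)]
stats = corpus_stats([dialogue])
print({k: round(v, 3) for k, v in stats.items()})
# {'n_transitions': 3, 'mean_gap_s': 0.4, 'mean_overlap_s': 0.2, 'overlap_rate': 0.333}
```

Because these numbers are pooled over the whole corpus, they say nothing about whether any individual transition happened at a good moment, which is exactly the limitation raised in post 5.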

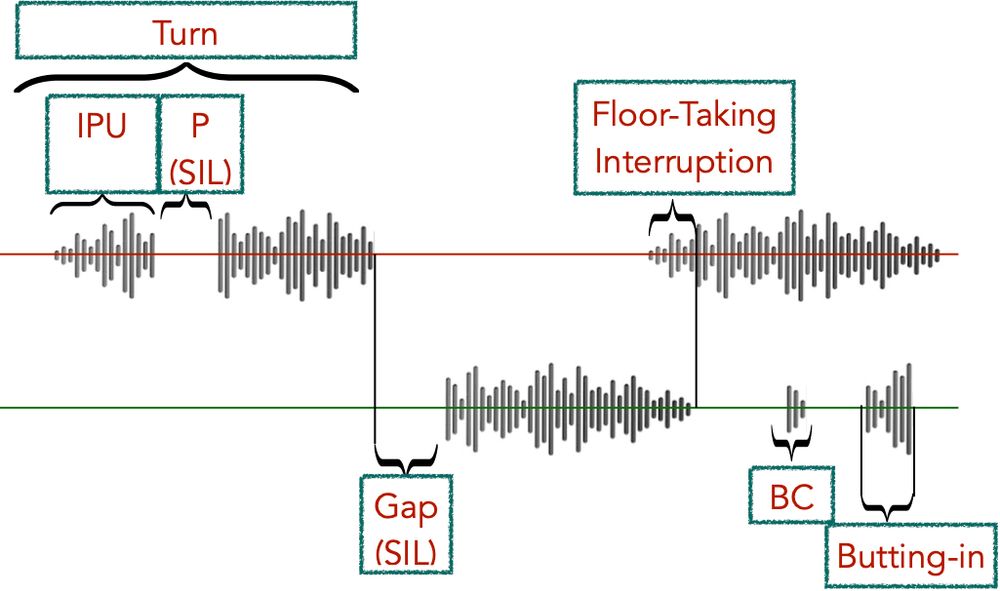

Silence ≠ turn-switching cue! 🚫 Pauses (silences within one speaker's turn) are often longer than gaps (silences between turns) in real conversations. 🤦♂️

Recent audio foundation models (FMs) claim conversational abilities, but there has been limited effort to evaluate their turn-taking capabilities.

(3/9)
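To see why silence alone is a poor cue, here is a small sketch that labels each silence as a pause (same speaker continues) or a gap (speaker changes), then counts the errors of a naive endpointer that predicts a turn switch whenever silence exceeds a fixed threshold. The function names and the toy timings are illustrative assumptions, not data from the paper.

```python
from typing import List, Tuple

Segment = Tuple[str, float, float]  # (speaker, start_s, end_s)


def classify_silences(segments: List[Segment]) -> List[Tuple[str, float]]:
    """Label each silence between consecutive segments as 'pause' or 'gap' with its duration."""
    segs = sorted(segments, key=lambda s: s[1])
    out = []
    for (spk_a, _, end_a), (spk_b, start_b, _) in zip(segs, segs[1:]):
        silence = start_b - end_a
        if silence > 0:  # overlaps have no silence to classify
            out.append(("pause" if spk_a == spk_b else "gap", silence))
    return out


def threshold_errors(silences: List[Tuple[str, float]], threshold_s: float) -> Tuple[int, int]:
    """Naive rule: predict a turn switch iff silence >= threshold_s.

    Returns (false switches at pauses, missed switches at gaps).
    """
    false_switch = sum(1 for kind, d in silences if kind == "pause" and d >= threshold_s)
    missed = sum(1 for kind, d in silences if kind == "gap" and d < threshold_s)
    return false_switch, missed


# Toy dialogue where pauses (0.9 s, 1.0 s) are longer than gaps (0.2 s, 0.3 s).
segments = [("A", 0.0, 1.5), ("A", 2.4, 4.0), ("B", 4.2, 6.0),
            ("B", 7.0, 8.0), ("A", 8.3, 9.0)]
silences = classify_silences(segments)
for t in (0.25, 0.5, 2.0):
    print(t, threshold_errors(silences, t))
```

With pauses longer than gaps, no single threshold avoids both kinds of error: a low threshold cuts speakers off mid-turn, while a high one leaves awkward silences after the user has finished.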

💡 Why does turn-taking matter?

In human dialogue, we listen, speak, and backchannel in real-time.

Similarly, an AI system should know when to listen, speak, backchannel, and interrupt; signal to the user when it wants to keep the conversation floor; and handle user interruptions.

(2/9)

🚀 New #ICLR2025 Paper Alert! 🚀

Can Audio Foundation Models like Moshi and GPT-4o truly engage in natural conversations? 🗣️🔊

We benchmark their turn-taking abilities and uncover major gaps in conversational AI. 🧵👇

📜: arxiv.org/abs/2503.01174