Is Cluely...somehow ethical now?

04.08.2025 16:40 — 👍 0 🔁 0 💬 0 📌 0

👀👀👀

02.08.2025 07:29 — 👍 1 🔁 0 💬 1 📌 0

The military Keynesianism of our day (though it's of course possible that military Keynesianism is the military Keynesianism of our day, and this is just _another_ one)

01.08.2025 13:06 — 👍 0 🔁 0 💬 0 📌 0

I need one of these sized for a cone!!

30.07.2025 07:20 — 👍 0 🔁 0 💬 0 📌 0

Womp, wrong hashtag: #acl2025

28.07.2025 15:42 — 👍 1 🔁 0 💬 0 📌 0

Beyond Text: Characterizing Domain Expert Needs in Document Research

Sireesh Gururaja, Nupoor Gandhi, Jeremiah Milbauer, Emma Strubell. Findings of the Association for Computational Linguistics: ACL 2025. 2025.

We argue that models need to be better along four axes: they need to be accessible and personalizable, support iteration, and be socially aware.

How might we do that? Come to the poster to find out!

I had so much fun doing this work with Nupoor, @jerelev.bsky.social and @strubell.bsky.social!

28.07.2025 15:26 — 👍 3 🔁 0 💬 1 📌 0

Modern tools struggle to support many of these use cases. Even where research exists for certain problems, such as terminology drift, using it effectively requires writing code, which makes it inaccessible; meanwhile, the accessible tools do not address those use cases at all.

28.07.2025 15:26 — 👍 1 🔁 0 💬 1 📌 0

We also saw that they maintained deeply personal methods for reading documents, which led to idiosyncratic, iteratively constructed mental models of the corpora.

Our findings echo early findings in STS, most notably Bruno Latour's account of the social construction of facts!

28.07.2025 15:26 — 👍 1 🔁 0 💬 1 📌 0

Most notably, experts tended to maintain and use nuanced models of the social production of the documents they read. In the sciences, this might look like asking whether a paper follows its field's standard practices, where it was published, and whether it looks "too neat."

28.07.2025 15:26 — 👍 1 🔁 0 💬 1 📌 0

Screenshot of paper title "Beyond Text: Characterizing Domain Expert Needs in Document Research"

Coming soon (6pm!) to the #ACL poster session: how do experts work with collections of documents, and do LLMs do those things?

tl;dr: only sometimes! While we have good tools for things like information extraction, the way that experts read documents goes deeper - come to our poster to learn more!

28.07.2025 15:26 — 👍 11 🔁 0 💬 1 📌 0

@mariaa.bsky.social's: bsky.app/profile/did:...

28.07.2025 08:28 — 👍 2 🔁 0 💬 1 📌 0

Yay, thank you! Was going to do this later today, and now I don't have to 🌞

26.07.2025 07:21 — 👍 2 🔁 0 💬 0 📌 0

It also feels like a credibility thing: when you're in industry without a PhD, you rarely have the leeway to push for even slightly risky things. By the time you've built that credibility, it's hard not to have internalized the practice of risk minimization.

25.07.2025 16:31 — 👍 0 🔁 0 💬 0 📌 0

Excited to listen to this! Personalizable viz is so promising, and it's still *so* complicated, at least in my experience

25.07.2025 16:19 — 👍 1 🔁 0 💬 1 📌 0

This thread really does make me wonder why we moved away from soft prompt tuning. I can see the affordance benefit of being able to write the prompts, but it doesn't feel like there is necessarily a "theory" of prompt optimization in discrete space that makes it worth keeping prompts in language

16.07.2025 22:09 — 👍 3 🔁 0 💬 1 📌 0

The answer is yes: East if you're from west of here, Midwest if you're from further east. And also a secret third thing! (Rust Belt)

13.07.2025 19:47 — 👍 4 🔁 0 💬 0 📌 0

This looks incredible!! Very excited to read it 👀👀

02.07.2025 21:10 — 👍 1 🔁 0 💬 1 📌 0

ok, reading a bit more, I could def see Kenneth Goldsmith advocating this point. Ty for the reference!

23.06.2025 18:33 — 👍 1 🔁 0 💬 0 📌 0

Do you have a link to this? I'd love to read more - what aesthetic innovation removes the necessity of the human element to appreciation?

23.06.2025 18:23 — 👍 0 🔁 0 💬 1 📌 0

All of these sound great! I might also suggest a list of attendees, rather than (or in addition to) a starter pack. That way, the list can back a custom feed that captures posts not explicitly tagged for the conference, and the feed becomes interest-based after the conf.

17.06.2025 17:40 — 👍 4 🔁 0 💬 1 📌 0

This is some excellent viz 🤩 also love that there's a Sam Learner on the team that built it!

17.06.2025 14:36 — 👍 1 🔁 0 💬 1 📌 0

Gently, I would like to say: When people tell you that they would appreciate a feature that does something automatically, it's not responsive to that concern to explain that by going through several steps for every individual instance, they can get the same result in each instance.

12.06.2025 13:45 — 👍 72 🔁 2 💬 3 📌 0

OpenAI has effectively conned people into thinking that Chatbots & AI "Assistants" are The FEWTCHA of AI. Friends, they are most likely *not.* Neither are the big cloud-based Generative AI services.

Small, purpose-fit, on-device models that make your existing activities easier/better? There you go.

10.06.2025 14:54 — 👍 29 🔁 4 💬 3 📌 0

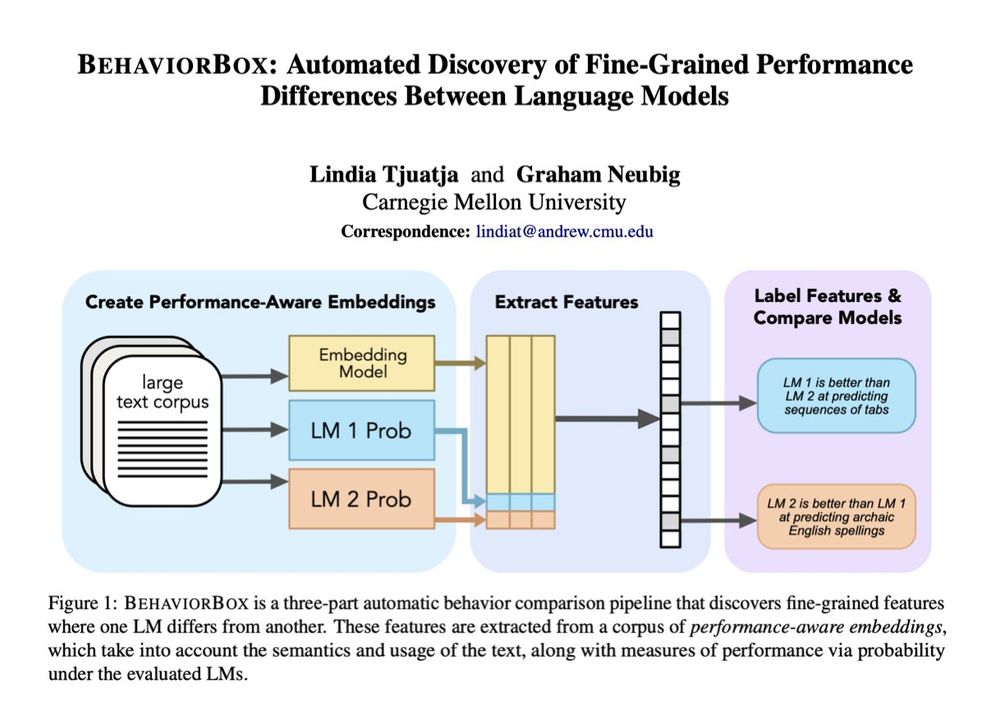

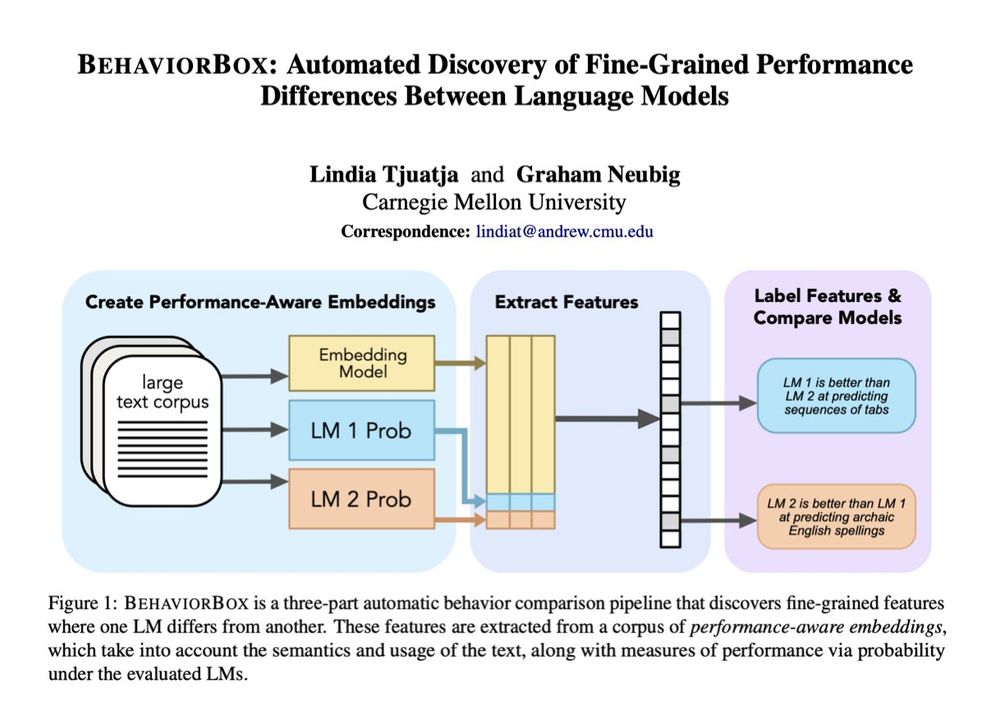

When it comes to text prediction, where does one LM outperform another? If you've ever worked on LM evals, you know this question is a lot more complex than it seems. In our new #acl2025 paper, we developed a method to find fine-grained differences between LMs:

🧵1/9

09.06.2025 13:47 — 👍 64 🔁 19 💬 1 📌 2

The MA lottery is the follow-up season - it's the same folks and the same feed!

07.06.2025 16:19 — 👍 2 🔁 0 💬 0 📌 0

I'm excited to read (and download/cite, ofc) this when it's out!!

05.06.2025 16:35 — 👍 1 🔁 0 💬 0 📌 0

👀

05.06.2025 16:16 — 👍 0 🔁 0 💬 1 📌 0

🤩🤩

26.05.2025 21:38 — 👍 0 🔁 0 💬 0 📌 0

Cherry Springs State Park


I don't know if conditions being bad in PA is a weather thing, but if you haven't, consider Cherry Springs State Park! www.pa.gov/agencies/dcn...

26.05.2025 21:33 — 👍 0 🔁 0 💬 1 📌 0

Assistant Professor in Digital Sociology @ University of Cambridge; researching tech workers, digital economy, class, and culture

https://research.sociology.cam.ac.uk/profile/dr-robert-dorschel

PhD Candidate at @icepfl.bsky.social | Ex Research Intern@Google DeepMind

👩🏻‍💻 Working on multi-modal AI reasoning models in scientific domains

https://smamooler.github.io/

Associate Professor of Law; Affiliated Professor of Political Science at University of Minnesota. AdLaw, Bureaucracy, Administrative Capacity, and the Federal Workforce. Contributing Editor for Lawfare. Signal: Nbednar.46

Opinions are my own; Not UMN.

Philosopher/AI Ethicist at Univ of Edinburgh, Director @technomoralfutures.bsky.social, co-Director @braiduk.bsky.social, author of Technology and the Virtues (2016) and The AI Mirror (2024). Views my own.

http://www.henryfarrell.net and newsletter at http://www.programmablemutter.com Now out with Abe Newman, Underground Empire: How America Weaponized the World Economy (Holt, Penguin). https://www.publishersweekly.com/9781250840554.

💾 associate prof of computers + video games @ MCC @ NYU

🕹️ managing ed of ROMchip: A Journal of Game Histories @romchip.bsky.social https://romchip.org

🍎 The Apple II Age: How the Computer Became Personal

https://linktr.ee/lainenooney

they/them

Annual Conference of the Special Interest Group in Computing, Information, and Society of SHOT. SIGCIS 2025: Power Surge will take place virtually on Sept 25-27. See details and CFP here: https://meetings.sigcis.org/

PhD Student University of Michigan School of Information & Michigan Institute for Computational Discovery and Engineering | formerly engineering @csmapnyu.org | NYU ‘19

UC Berkeley/BAIR, AI2 || Prev: UWNLP, Meta/FAIR || sewonmin.com

AI writer at the Economist. I write about it, that is. I’m still human. One of literally dozens of people online who is not American.

Wrote a book about the history of keyboards: shifthappens.site · Design director @figma · Typographer · Occasional speaker · Chicagoan in training

The New York Amsterdam News • The New Black View • Founded 1909 • NYC's oldest Black newspaper

A nonprofit, nonpartisan newsroom working to provide journalism that keeps Wisconsin's communities strong, informed and connected.

👉 Visit us at wisconsinwatch.org

💸 Support us at wisconsinwatch.org/donate

Filling information and connection gaps in Detroit through local reporting since 2016 💛

Managing partner for Detroit Documenters

In-depth news and expert analysis on global affairs. Get our free newsletter: http://wpr.vu/WLXl50xfgXt

News for LA People by LA People!

Nonprofit independent journalism in LA // a project of @groundgamela.bsky.social

Pitch us➡️pitches@knock.la

Fund us➡️nc.la/patreon

Nonprofit news for New Orleans elevating voices from communities that have been historically dismissed or ignored to create thoughtful, solution-based coverage on crucial topics.

Sign up for our newsletters here: https://veritenews.org/newsletter-signup/

The Emancipator is a digital magazine dedicated to examining and confronting racism and the inequities it creates. Visit our website: https://theemancipator.org