📢want to create realistic dynamic 3D worlds (>100 splats)?

my NVIDIA internship project, VoMP, is the first feed-forward approach turning surface geometry into volumetric sim-ready assets with real-world materials.

🌐Project: research.nvidia.com/labs/sil/pro...

📜Paper: arxiv.org/abs/2510.22975

30.10.2025 16:23 — 👍 46 🔁 5 💬 4 📌 4

NVIDIA Spatial Intelligence Lab (SIL)

Advancing foundational technologies enabling AI systems to perceive, model, and interact with the physical world.

The Spatial Intelligence Lab at NVIDIA (research.nvidia.com/labs/sil/) is looking for 2026 research interns! We do all kinds of cool work across graphics/vision, geometry, physics, & ML. Now is the time to apply & reach out!

nvidia.eightfold.ai/careers/job/... (not limited to Canada-only)

15.10.2025 15:49 — 👍 6 🔁 0 💬 0 📌 0

Code is now out! Try it for yourself here: github.com/abhimadan/st...

30.07.2025 21:42 — 👍 10 🔁 4 💬 0 📌 0

Also: this paper was recognized with a best paper award at SGP! Huge thanks to the organizers & congrats to the other awardees.

I was super lucky to work with Yousuf on this one; he's truly the mastermind behind it all!

04.07.2025 15:52 — 👍 13 🔁 0 💬 0 📌 0

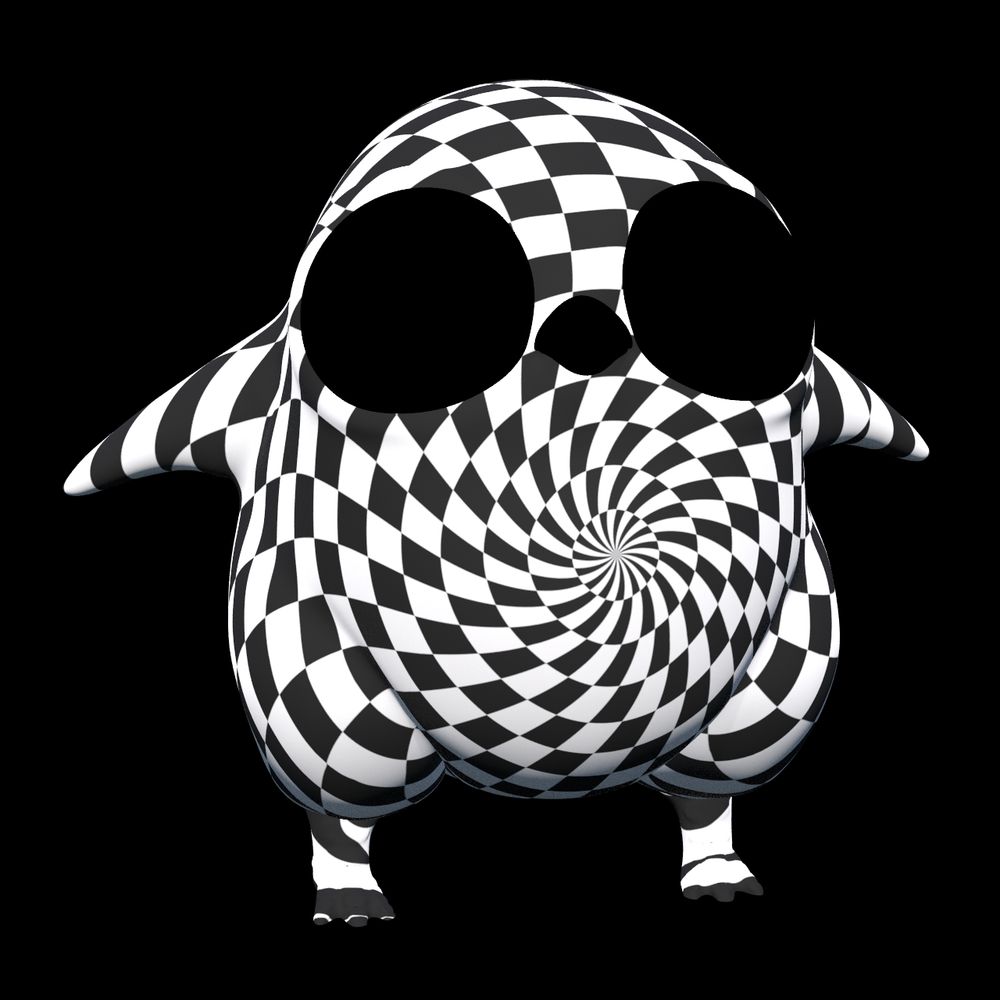

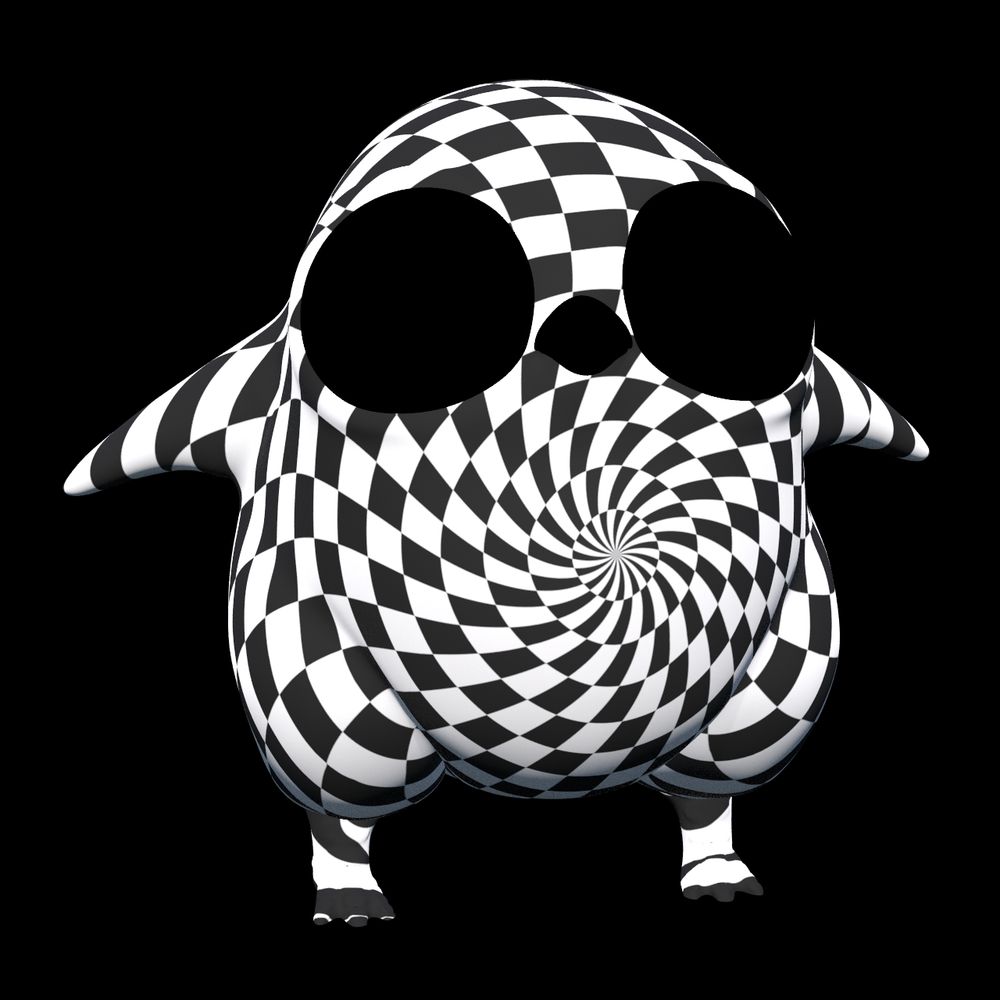

Actually, Yousuf did a quick experiment which is related (though with a different formulation), using @markgillespie64.bsky.social et al.'s Discrete Torsion Connection markjgillespie.com/Research/Dis.... You get fun spiraling log maps! (image attached)

02.07.2025 18:54 — 👍 1 🔁 0 💬 0 📌 0

Yeah! That diffused frame is "the most regular frame field in the sense of transport along geodesics from the source", so you get out a log map that is as-regular-as-possible, in the same sense.

You could definitely use another frame field, and you'd get "log maps" warped along that field.

02.07.2025 18:54 — 👍 1 🔁 0 💬 1 📌 0

💻 Website: www.yousufsoliman.com/projects/the...

📗 Paper: www.yousufsoliman.com/projects/dow...

🔬 Code (C++ library): geometry-central.net/surface/algo...

🐍 Code (python bindings): github.com/nmwsharp/pot...

(point cloud code not available yet, let us know if you're interested!)

02.07.2025 06:23 — 👍 8 🔁 1 💬 0 📌 0

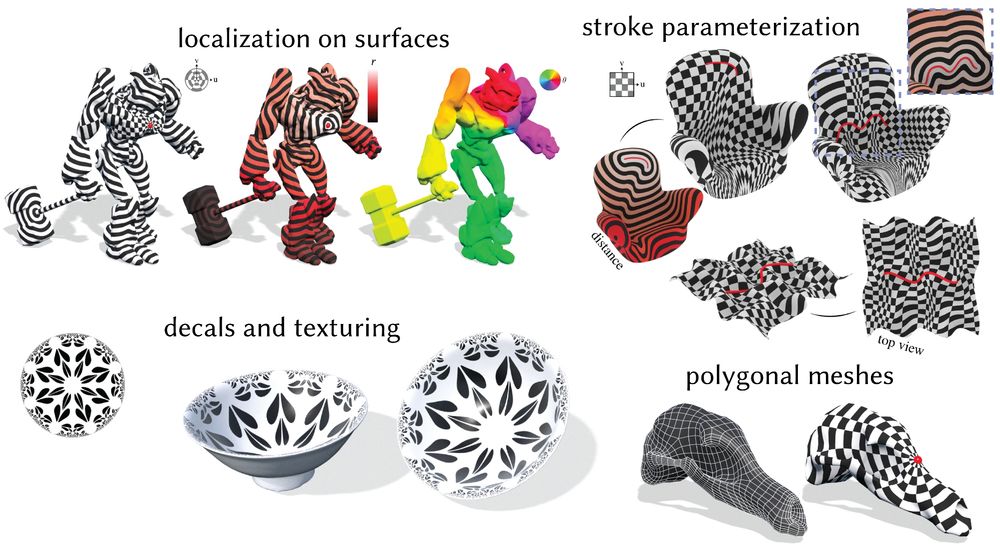

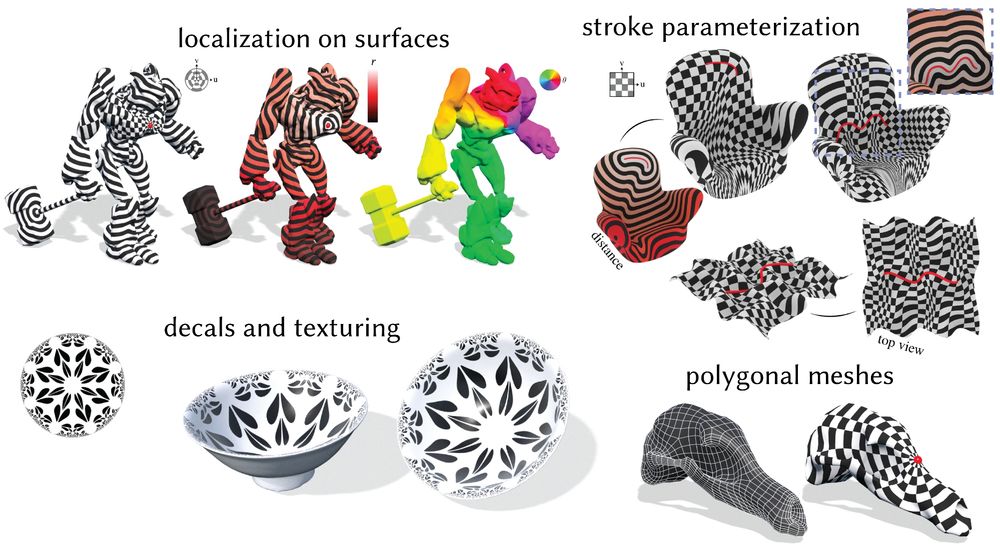

We give two variants of the algorithm, and show use cases for many problems like averaging values on surfaces, decaling, and stroke-aligned parameterization. It even works on point clouds!

02.07.2025 06:23 — 👍 8 🔁 0 💬 1 📌 0

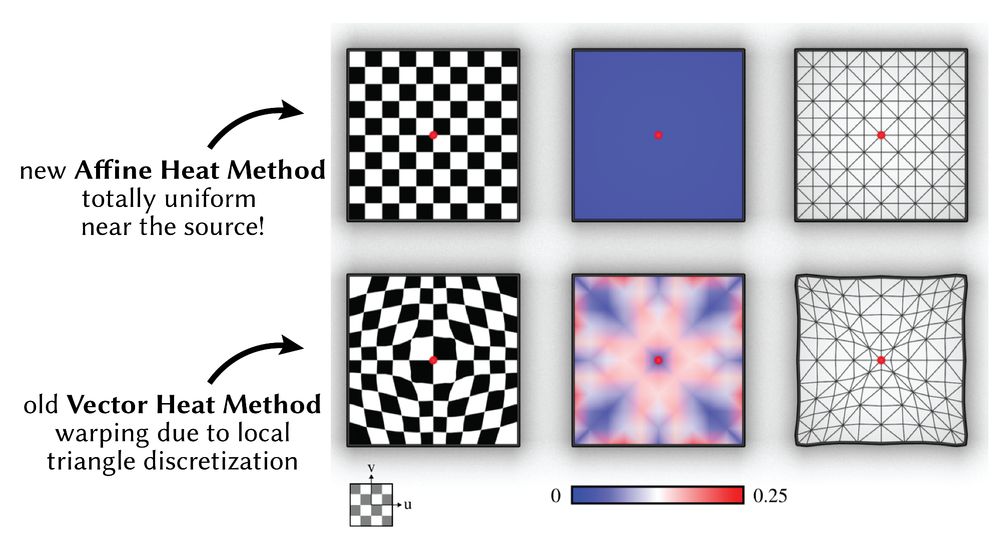

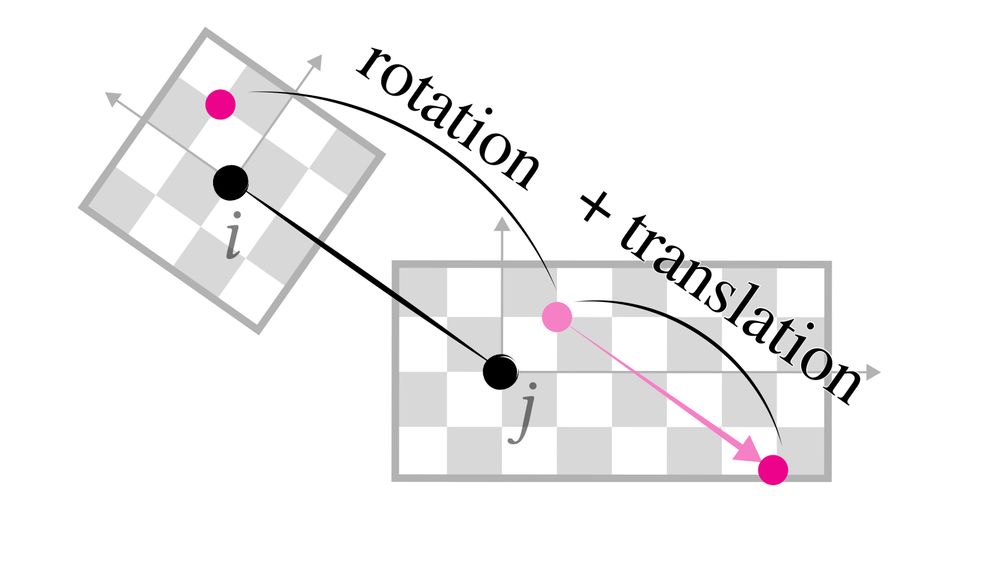

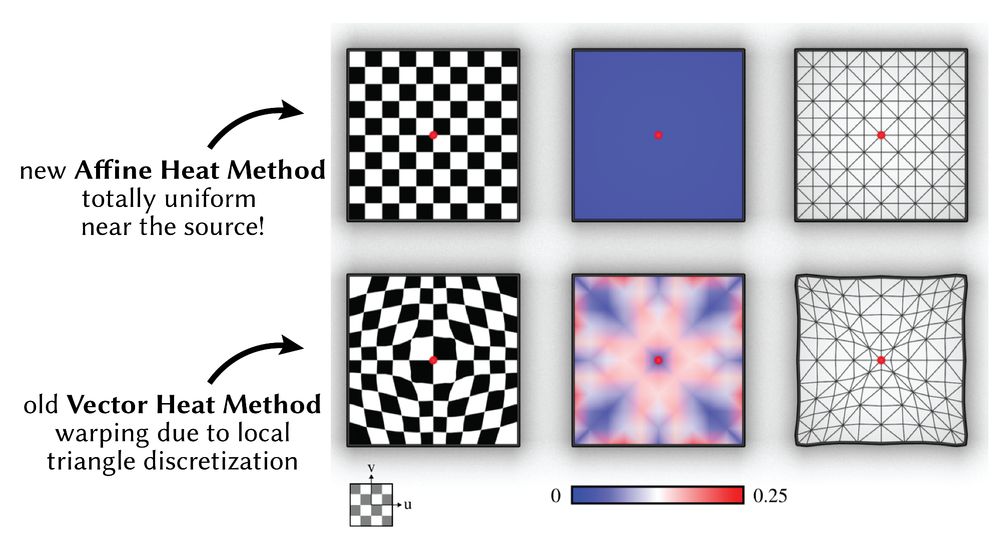

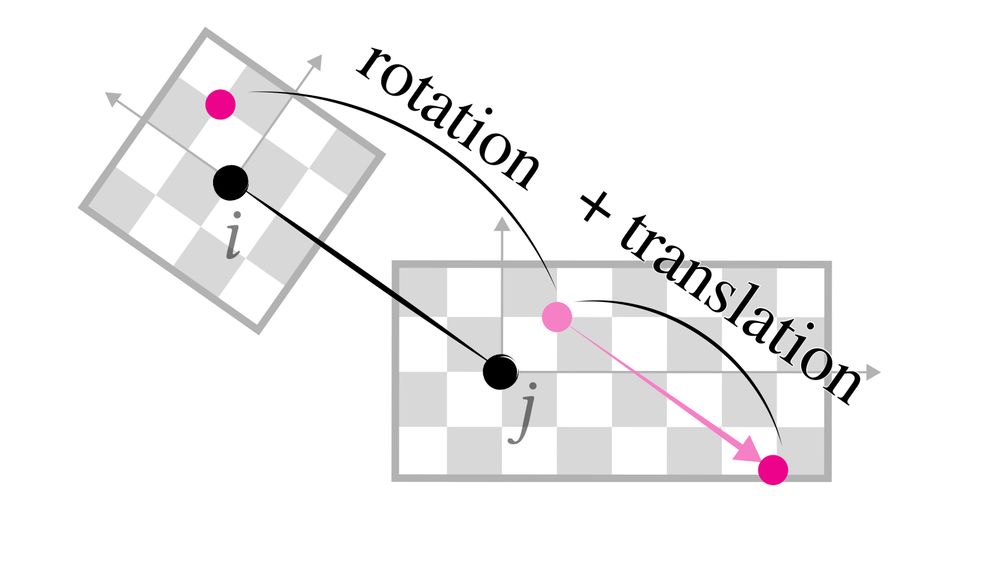

Instead of the usual VxV scalar Laplacian, or a 2Vx2V vector Laplacian, we build a 3Vx3V homogeneous "affine" Laplacian! This Laplacian allows new algorithms for simpler and more accurate computation of the logarithmic map, since it captures rotation and translation at once.

02.07.2025 06:23 — 👍 6 🔁 0 💬 1 📌 0
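A toy sketch of the block structure, just to make the "3Vx3V" shape concrete. It assumes each edge (i, j) carries a weight and a hypothetical 3x3 homogeneous transform `T` (a 2D rotation plus a translation) mapping vertex j's tangent coordinates into vertex i's. The names `homogeneous` and `affine_laplacian` are made up for illustration, and this is not the paper's discretization (no cotan weights, no mass matrix). The sanity check: homogeneous vectors that are consistent with every `T` lie in the null space, just as parallel vectors do for a vector Laplacian.

```python
import numpy as np


def homogeneous(theta, t):
    """3x3 homogeneous transform: rotate 2D tangent coords by theta, then translate by t."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, t[0]],
                     [s,  c, t[1]],
                     [0.0, 0.0, 1.0]])


def affine_laplacian(n_verts, edges):
    """Dense 3V x 3V block matrix from edges (i, j, w, T_ij).

    T_ij maps homogeneous coordinates at vertex j into those at vertex i
    (hypothetical convention). Each edge adds w*I to both diagonal blocks,
    -w*T_ij to block (i, j), and -w*inv(T_ij) to block (j, i)."""
    L = np.zeros((3 * n_verts, 3 * n_verts))
    I3 = np.eye(3)
    for i, j, w, T in edges:
        bi, bj = slice(3 * i, 3 * i + 3), slice(3 * j, 3 * j + 3)
        L[bi, bi] += w * I3
        L[bj, bj] += w * I3
        L[bi, bj] -= w * T
        L[bj, bi] -= w * np.linalg.inv(T)
    return L


# Usage on a 3-vertex path: propagate a homogeneous vector along the edges
# consistently with each T_ij, and it lands in the null space of L.
T01 = homogeneous(0.3, [1.0, 0.0])
T12 = homogeneous(-0.5, [0.2, 0.7])
L = affine_laplacian(3, [(0, 1, 1.0, T01), (1, 2, 2.0, T12)])
x0 = np.array([0.0, 0.0, 1.0])
x1 = np.linalg.solve(T01, x0)   # so that x0 = T01 @ x1
x2 = np.linalg.solve(T12, x1)   # so that x1 = T12 @ x2
x = np.concatenate([x0, x1, x2])
print(np.abs(L @ x).max())      # ~0 up to round-off
```

Swapping every `T` for a pure 2x2 rotation gives the familiar 2Vx2V vector-Laplacian pattern; the extra homogeneous row and column is what lets translation ride along.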

Previously in "The Vector Heat Method", we computed log maps with short-time heat flow, via a vector-valued Laplace matrix rotating between adjacent vertex tangent spaces.

The big new idea is to rotate **and translate** vectors, by working in homogeneous coordinates.

02.07.2025 06:23 — 👍 6 🔁 0 💬 1 📌 0
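A minimal illustration of why homogeneous coordinates help: appending a third coordinate lets a single 3x3 matrix rotate *and* translate, and chaining moves is just matrix multiplication. `rigid_2d` is a hypothetical helper for this example, not taken from the paper's code.

```python
import numpy as np


def rigid_2d(theta, tx, ty):
    """3x3 homogeneous matrix: rotate by theta, then translate by (tx, ty)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx],
                     [s,  c, ty],
                     [0.0, 0.0, 1.0]])


M = rigid_2d(np.pi / 2, 1.0, 2.0)

# Points carry a trailing 1, so they pick up the translation.
point = np.array([1.0, 0.0, 1.0])
# Pure directions carry a trailing 0, so they are only rotated.
direction = np.array([1.0, 0.0, 0.0])

moved_point = M @ point          # ≈ [1, 3, 1]
moved_direction = M @ direction  # ≈ [0, 1, 0]

# Two successive moves compose into one matrix product.
N = rigid_2d(np.pi / 4, -1.0, 0.5)
assert np.allclose(N @ (M @ point), (N @ M) @ point)
print(moved_point, moved_direction)
```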

Logarithmic maps are incredibly useful for algorithms on surfaces--they're local 2D coordinates centered at a given source.

Yousuf Soliman and I found a better way to compute log maps w/ fast short-time heat flow in "The Affine Heat Method" presented @ SGP2025 today! 🧵

02.07.2025 06:23 — 👍 66 🔁 14 💬 2 📌 2
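For a concrete picture of "local 2D coordinates centered at a given source": on the unit sphere the log map has a closed form, assigning each point its geodesic distance from the source and its direction in the source's tangent plane. This is only a reference case chosen for illustration (the heat methods in this thread target general meshes and point clouds, where no such formula exists); `sphere_log_map` and its tangent-basis convention are hypothetical.

```python
import numpy as np


def sphere_log_map(p, q, e1):
    """Log map of the unit sphere at source p, evaluated at q.

    Returns 2D coordinates in the tangent basis (e1, p x e1); e1 must be a
    unit vector tangent at p. The length of the result is the geodesic
    distance from p to q, and its direction points along the geodesic."""
    p = p / np.linalg.norm(p)
    q = q / np.linalg.norm(q)
    cos_d = np.clip(np.dot(p, q), -1.0, 1.0)
    dist = np.arccos(cos_d)            # great-circle distance
    v = q - cos_d * p                  # tangent component of q at p
    n = np.linalg.norm(v)
    if n < 1e-12:
        if cos_d > 0:
            return np.zeros(2)         # q coincides with the source
        raise ValueError("log map is undefined at the antipode of the source")
    v = dist * v / n
    e2 = np.cross(p, e1)
    return np.array([np.dot(v, e1), np.dot(v, e2)])


north = np.array([0.0, 0.0, 1.0])
e1 = np.array([1.0, 0.0, 0.0])
q = np.array([0.0, np.sin(0.7), np.cos(0.7)])  # 0.7 radians from the pole
print(sphere_log_map(north, q, e1))            # ≈ [0, 0.7]
```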

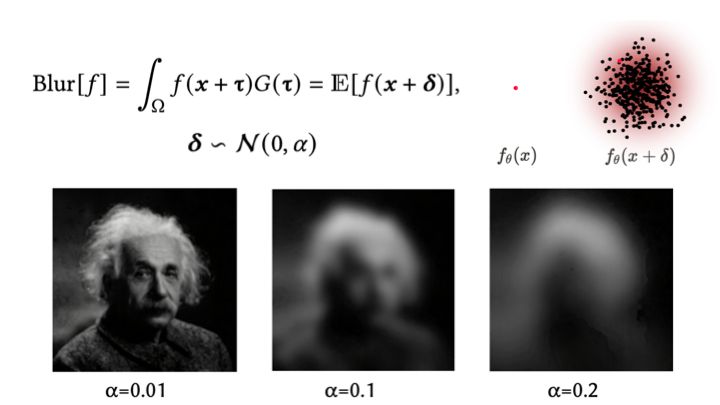

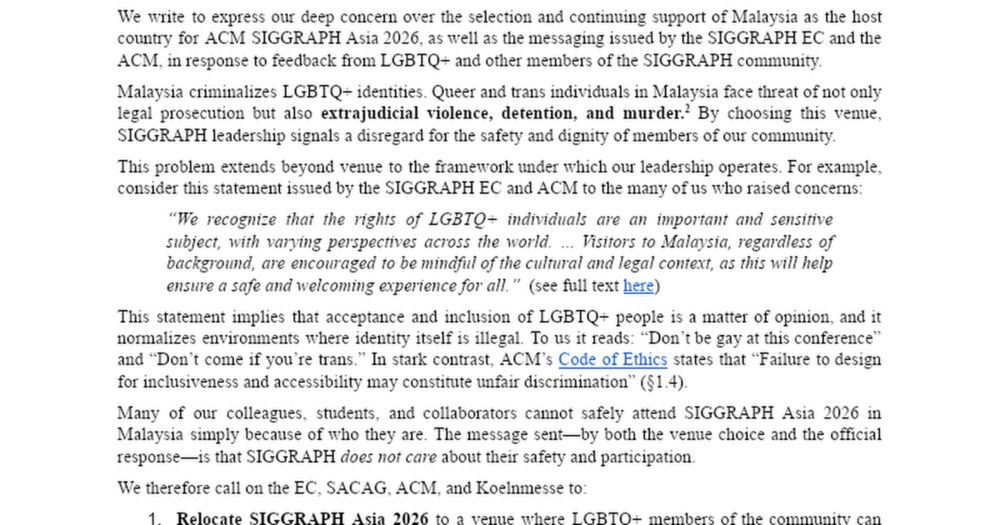

Open Letter to the SIGGRAPH Leadership

RE: Call for SIGGRAPH Asia to relocate from Malaysia and commit to a venue selection process that safeguards LGBTQ+ and other at-risk communities. To the SIGGRAPH Leadership: SIGGRAPH Executive Commit...

Holding SIGGRAPH Asia 2026 in Malaysia is a slap in the face to the rights of LGBTQ+ people. Especially now, when underrepresented people need as much support as we can possibly give them! Angry like me? Sign this open letter to let them know. 🏳️‍⚧️🏳️‍🌈

docs.google.com/document/d/1...

18.06.2025 14:57 — 👍 29 🔁 15 💬 1 📌 1

Sampling points on an implicit surface is surprisingly tricky, but we know how to cast rays against implicit surfaces! There's a classic relationship between line-intersections and surface-sampling, which turns out to be quite useful for geometry processing.

10.06.2025 18:01 — 👍 21 🔁 2 💬 1 📌 0
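A sketch of sampling by ray casting, assuming the classic relationship meant here is the integral-geometry (Crofton-style) fact that the intersections of uniformly random lines with a surface are distributed uniformly by area. It also assumes the implicit surface is a 1-Lipschitz SDF inside a ball of radius `R`, and finds crossings with a fixed-step sign test plus bisection rather than sphere tracing. All names are hypothetical; this is our illustration, not the code behind the post.

```python
import numpy as np


def sdf_sphere(p, r=0.5):
    """Toy implicit surface: signed distance to a sphere of radius r."""
    return np.linalg.norm(p, axis=-1) - r


def random_line(rng, R):
    """Isotropic random line meeting the ball of radius R: uniform direction,
    offset uniform over the disk of radius R orthogonal to that direction."""
    d = rng.normal(size=3)
    d /= np.linalg.norm(d)
    a = np.array([1.0, 0.0, 0.0]) if abs(d[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(d, a)
    u /= np.linalg.norm(u)
    v = np.cross(d, u)
    rad = R * np.sqrt(rng.uniform())          # sqrt gives area-uniform radius
    ang = rng.uniform(0.0, 2.0 * np.pi)
    return rad * (np.cos(ang) * u + np.sin(ang) * v), d


def line_hits(sdf, o, d, R, n_steps=256, n_bisect=40):
    """All zero crossings of sdf along o + t*d for t in [-R, R]."""
    ts = np.linspace(-R, R, n_steps)
    vals = sdf(o + ts[:, None] * d)
    hits = []
    for k in np.nonzero(np.sign(vals[:-1]) != np.sign(vals[1:]))[0]:
        t_lo, t_hi, f_lo = ts[k], ts[k + 1], vals[k]
        for _ in range(n_bisect):             # shrink the bracketing interval
            t_mid = 0.5 * (t_lo + t_hi)
            f_mid = sdf(o + t_mid * d)
            if np.sign(f_mid) == np.sign(f_lo):
                t_lo, f_lo = t_mid, f_mid
            else:
                t_hi = t_mid
        hits.append(o + 0.5 * (t_lo + t_hi) * d)
    return hits


def sample_surface(sdf, R, n_lines, seed=0):
    """Keep every intersection of n_lines random lines with the surface."""
    rng = np.random.default_rng(seed)
    pts = []
    for _ in range(n_lines):
        o, d = random_line(rng, R)
        pts.extend(line_hits(sdf, o, d, R))
    return np.array(pts).reshape(-1, 3)


pts = sample_surface(sdf_sphere, R=1.0, n_lines=200)
print(len(pts), np.abs(np.linalg.norm(pts, axis=1) - 0.5).max())
```

Keeping *every* crossing along each line (not just the first hit) is what makes the samples area-uniform in expectation; a first-hit-only sampler would under-sample occluded parts of the surface.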

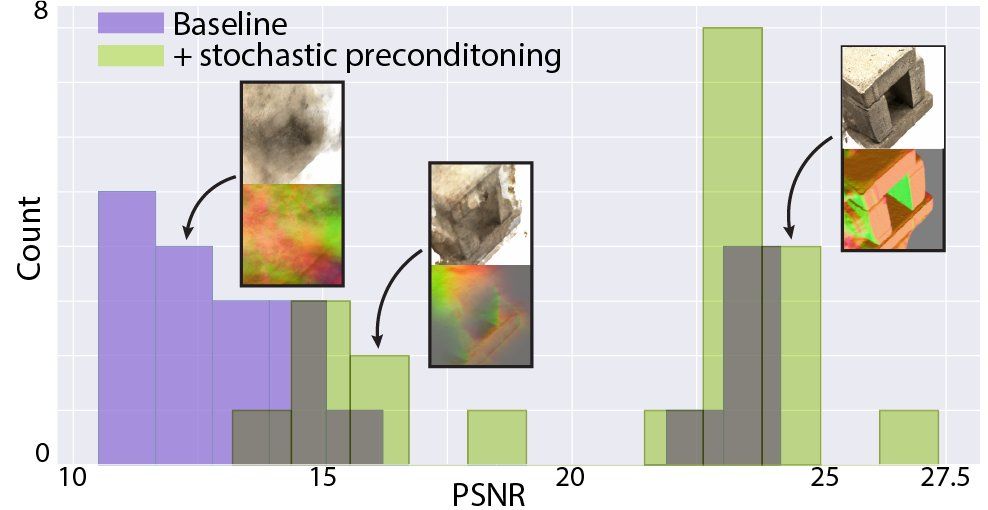

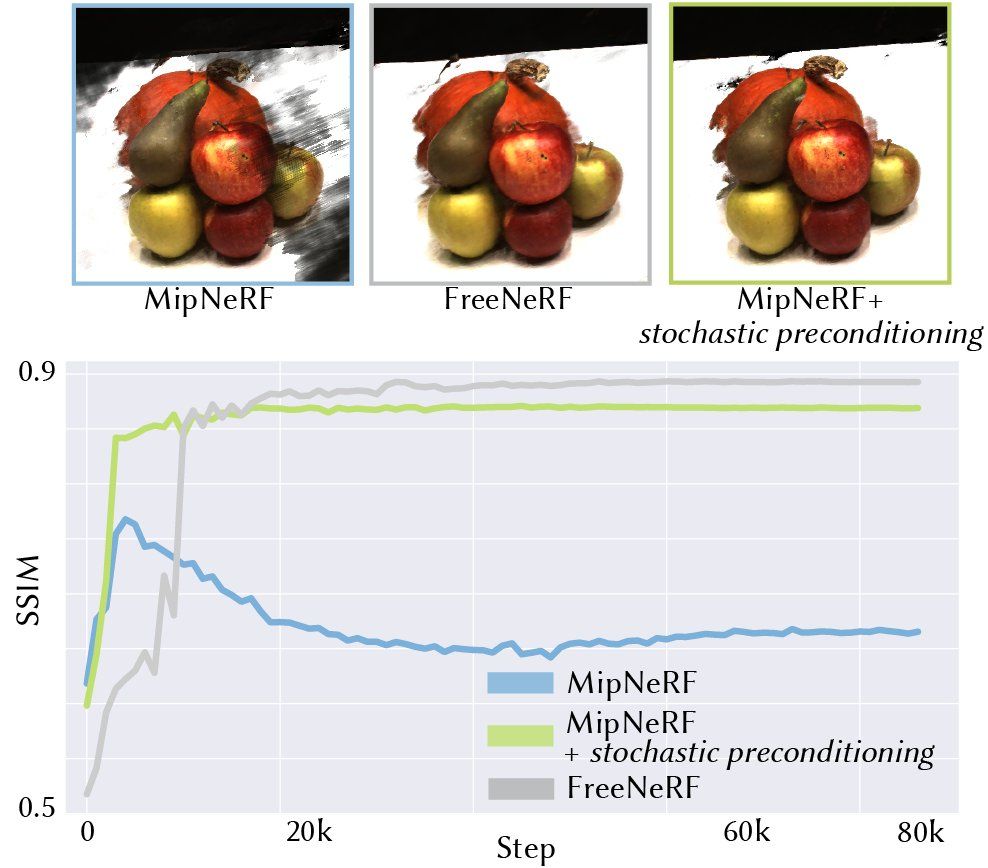

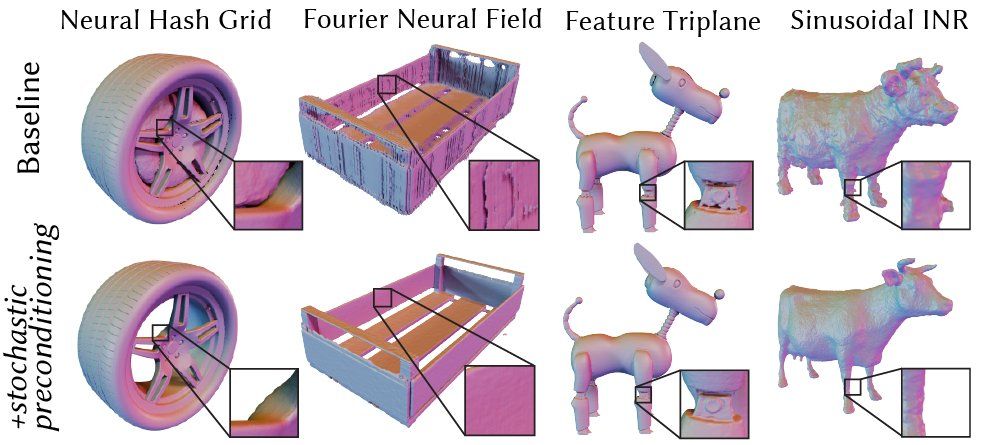

Thank you! There's definitely a low-frequency bias when stochastic preconditioning is enabled, but we only use it for the first ~half of training, then train as-usual. The hypothesis is that the bias in the 1st half helps escape bad minima, then we fit high-freqs in the 2nd half. Coarse to fine!

08.06.2025 05:57 — 👍 0 🔁 0 💬 0 📌 0
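One way to write "preconditioning for the first ~half of training, then train as usual" as a schedule for the blur scale 𝛼. The linear decay and the names `alpha_schedule` and `frac` are assumptions for illustration; the project's actual annealing policy may differ.

```python
def alpha_schedule(step, total_steps, alpha0, frac=0.5):
    """Blur scale at a given step: decays linearly from alpha0 to 0 over the
    first `frac` of training (hypothetical policy), then stays at 0 so the
    remaining steps are ordinary un-blurred training."""
    cutoff = frac * total_steps
    if step >= cutoff:
        return 0.0
    return alpha0 * (1.0 - step / cutoff)


for step in (0, 250, 499, 500, 999):
    print(step, alpha_schedule(step, total_steps=1000, alpha0=0.1))
```

Whatever shape the decay takes, the coarse-to-fine story only needs 𝛼 to reach 0, so the late steps fit the un-blurred field and its high frequencies.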

My child’s doll and tools I captured as 3D Gaussians, turned digital with collisions and dynamics. We are getting closer to bridging the gap between the world we can touch and digital 3D. Experience the bleeding edge at #NVIDIA Kaolin hands-on lab, #CVPR2025! Wed, 8-noon. tinyurl.com/nv-kaolin-cv...

06.06.2025 15:05 — 👍 11 🔁 2 💬 3 📌 0

Check out Abhishek's research!

I was honestly surprised by this result: classic Barnes-Hut already builds a good spatial hierarchy for approximating kernel summations, but you can do even better by adding some stochastic sampling, for significant speedups on the GPU @ matching average error.

05.06.2025 21:58 — 👍 15 🔁 1 💬 0 📌 0
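A toy contrast between the classic center-of-mass approximation and a stochastic one, for a weighted kernel sum. To stay short it swaps the Barnes-Hut tree for a single-level uniform grid, and the kernel, cell size, and function names are all hypothetical; it is not Abhishek's algorithm and shows nothing about GPU speed. The stochastic variant draws one point per far cell with probability proportional to its weight and scales by the cell's total weight, which is unbiased cell by cell (for positive weights).

```python
import numpy as np


def kernel(r):
    """Hypothetical smooth kernel: softened inverse distance."""
    return 1.0 / np.sqrt(r * r + 1e-2)


def build_cells(pts, w, cell):
    """Bucket points into a uniform grid; store per-cell points, weights,
    total weight, and weighted center of mass."""
    keys = np.floor(pts / cell).astype(int)
    buckets = {}
    for idx, key in enumerate(map(tuple, keys)):
        buckets.setdefault(key, []).append(idx)
    cells = {}
    for key, ids in buckets.items():
        P, W = pts[ids], w[ids]
        cells[key] = (P, W, W.sum(), (W[:, None] * P).sum(axis=0) / W.sum())
    return cells


def kernel_sum(q, cells, cell, near=1, rng=None):
    """Approximate sum_i w_i * kernel(|q - p_i|) for positive weights w_i.

    Cells within `near` grid steps of q are summed exactly. Farther cells use
    either their center of mass (rng=None, Barnes-Hut style) or one point
    drawn with probability w_i / W_cell and scaled by W_cell."""
    qk = np.floor(q / cell).astype(int)
    total = 0.0
    for key, (P, W, Wsum, com) in cells.items():
        if np.max(np.abs(np.array(key) - qk)) <= near:
            total += np.sum(W * kernel(np.linalg.norm(P - q, axis=1)))
        elif rng is None:
            total += Wsum * kernel(np.linalg.norm(com - q))
        else:
            i = rng.choice(len(W), p=W / Wsum)
            total += Wsum * kernel(np.linalg.norm(P[i] - q))
    return total


rng = np.random.default_rng(0)
pts = rng.uniform(size=(2000, 2))
w = rng.uniform(0.5, 1.5, size=2000)
cells = build_cells(pts, w, 0.1)
q = np.array([0.55, 0.55])
exact = np.sum(w * kernel(np.linalg.norm(pts - q, axis=1)))
approx = kernel_sum(q, cells, 0.1)
stoch = np.mean([kernel_sum(q, cells, 0.1, rng=rng) for _ in range(200)])
print(exact, approx, stoch)
```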

Ah yes absolutely. That's a great example, we totally should have cited it!

When we looked around, we found mannnnnny different "coarse-to-fine"-like schemes appearing in the context of particular problems or architectures. As you say, what most excited us here is having a simple+general option.

04.06.2025 22:14 — 👍 0 🔁 0 💬 1 📌 0

Thank you for the kind words :) The technique is very much in-the-vein of lots of related ideas in ML, graphics, and elsewhere, but hopefully directly studying it & sharing is useful to the community!

04.06.2025 22:10 — 👍 1 🔁 0 💬 0 📌 0

We did not try it w/ the Gaussians in this project (we really focused on the "query an Eulerian field" setting, which is not quite how Gaussian rendering works).

There are some very cool projects doing related things in that setting:

- ubc-vision.github.io/3dgs-mcmc/

- diglib.eg.org/items/b8ace7...

04.06.2025 22:06 — 👍 1 🔁 0 💬 0 📌 0

Tagging @selenaling.bsky.social and @merlin.ninja, who are both on here it turns out! 😁

03.06.2025 01:19 — 👍 1 🔁 0 💬 1 📌 0

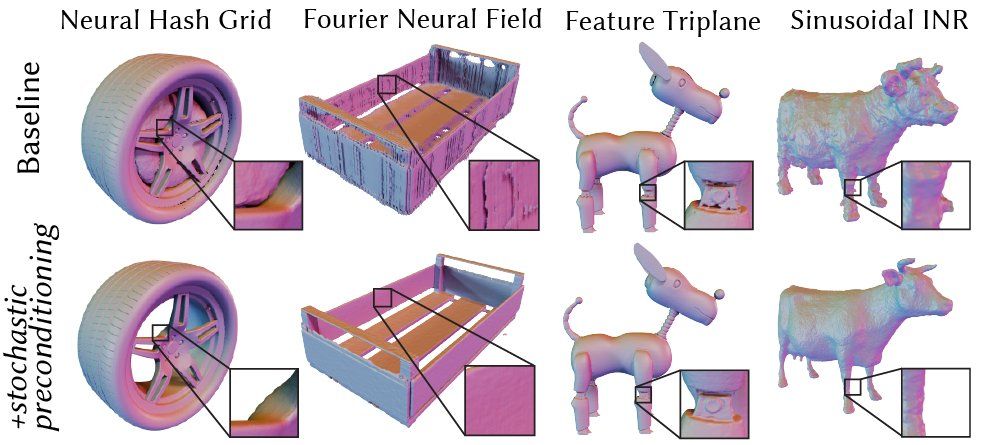

Stochastic Preconditioning for Neural Field Optimization

website: research.nvidia.com/labs/toronto...

arxiv: arxiv.org/abs/2505.20473

code: github.com/iszihan/stoc...

Kudos go to Selena Ling who is the lead author of this work, during her internship with us at NVIDIA. Reach out to Selena or myself if you have any questions!

03.06.2025 00:43 — 👍 2 🔁 0 💬 1 📌 0

Closing thought: In geometry, half our algorithms are "just" Laplacians/smoothness/heat flow under the hood. In ML, half our techniques are "just" adding noise in the right place. Unsurprisingly, these two tools work great together in this project. I think there's a lot more to do in this vein!

03.06.2025 00:43 — 👍 4 🔁 0 💬 2 📌 0

Geometric initialization is a commonly used technique to accelerate SDF fitting, yet it often results in disastrous artifacts for scenes that are not object-centric. Stochastic preconditioning also helps to avoid floaters, both with and without geometric initialization.

03.06.2025 00:43 — 👍 0 🔁 0 💬 1 📌 0

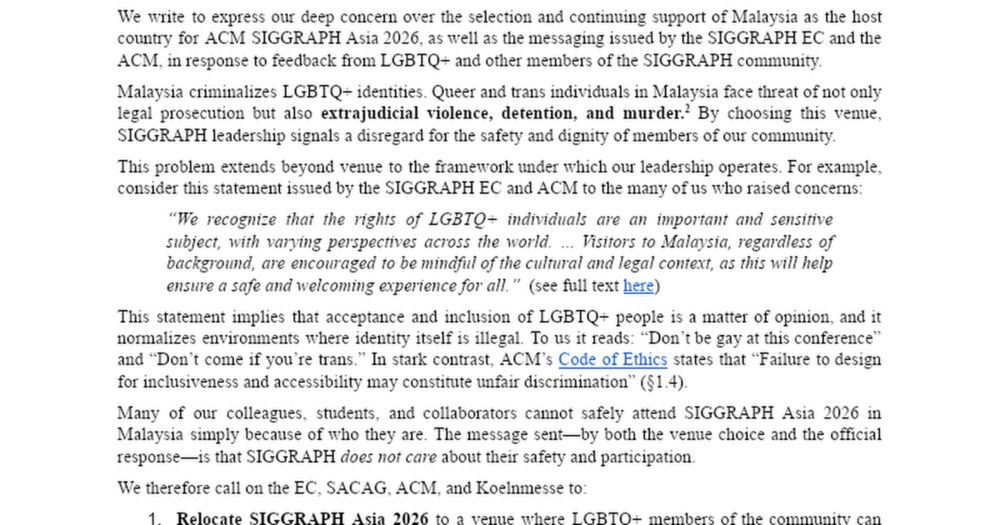

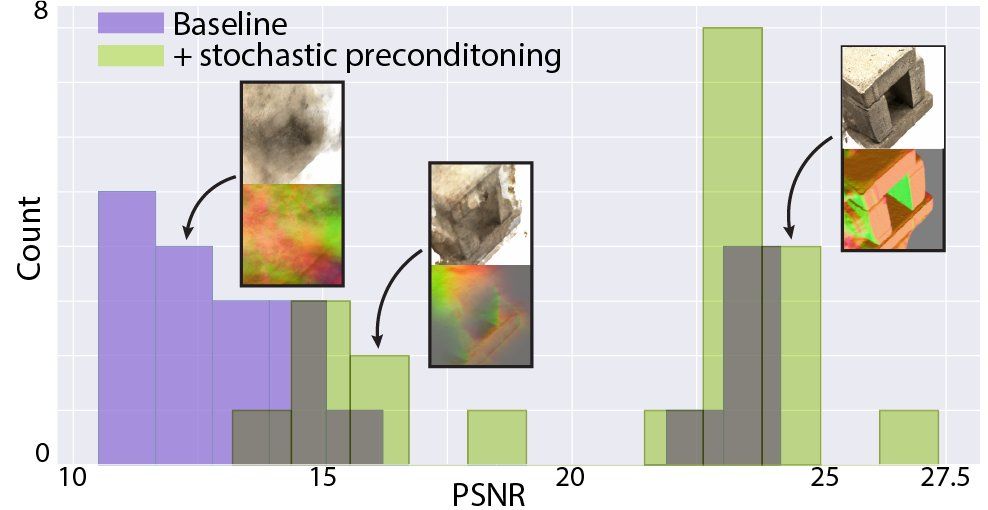

Neural field training can be sensitive to changes to hyperparameters. Stochastic preconditioning makes training more robust to hyperparameter choices, shown here in a histogram of PSNRs from fitting preconditioned and non-preconditioned fields across a range of hyperparameters.

03.06.2025 00:43 — 👍 0 🔁 0 💬 1 📌 0

We argue that this is a quick and easy form of coarse-to-fine optimization, applicable to nearly any objective or field representation. It matches or outperforms custom-designed policies and staged coarse-to-fine schemes.

03.06.2025 00:43 — 👍 2 🔁 0 💬 1 📌 1

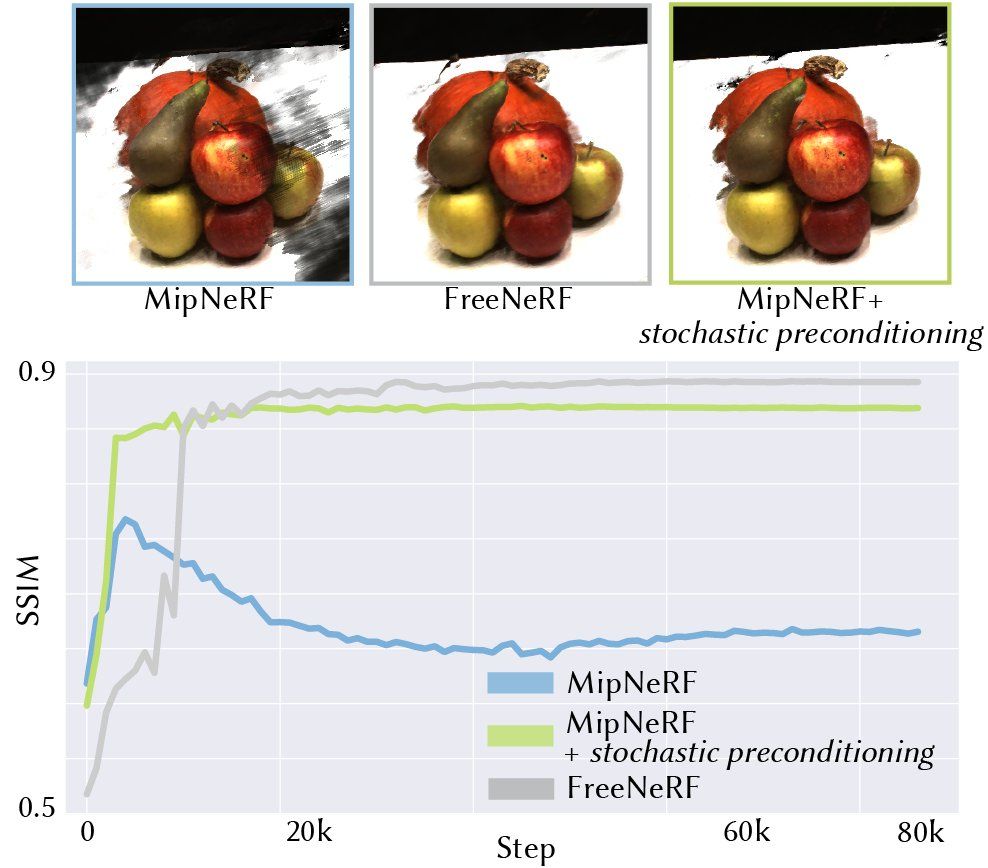

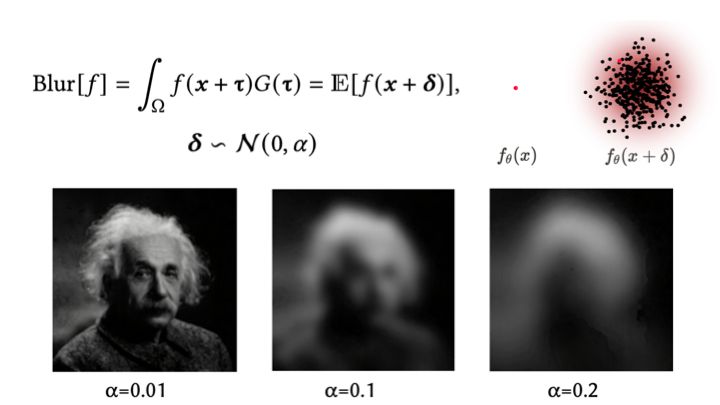

Surprisingly, optimizing this blurred field to fit the objective greatly improves convergence, and in the end we anneal 𝛼 to 0 and are left with an ordinary un-blurred field.

03.06.2025 00:43 — 👍 0 🔁 0 💬 1 📌 0

And implementing our method requires changing just a few lines of code!

03.06.2025 00:43 — 👍 0 🔁 0 💬 1 📌 0

It’s as simple as perturbing query locations according to a normal distribution. This produces a stochastic estimate of the blurred neural field, with the level of blur proportional to a scale parameter 𝛼.

03.06.2025 00:43 — 👍 2 🔁 0 💬 1 📌 0
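A minimal NumPy sketch of that perturbation, assuming a field that takes query coordinates as an array; `perturbed_query` is a hypothetical name, not the API of the released code. The toy check uses a sinusoid because its Gaussian blur has a closed form, sin(ωx)·exp(-(ω𝛼)²/2), so the Monte Carlo average can be compared against it directly.

```python
import numpy as np


def perturbed_query(field, x, alpha, rng):
    """Evaluate `field` at Gaussian-jittered locations x + alpha * eps,
    eps ~ N(0, I). Averaged over eps, this equals the field convolved with
    an isotropic Gaussian of standard deviation alpha (a blurred field)."""
    return field(x + alpha * rng.standard_normal(x.shape))


def toy_field(x, omega=3.0):
    """Stand-in for a neural field: a 1D sinusoid."""
    return np.sin(omega * x)


rng = np.random.default_rng(0)
omega, alpha, x0 = 3.0, 0.3, 0.4
x = np.full(200_000, x0)
mc = perturbed_query(toy_field, x, alpha, rng).mean()
# Gaussian blurring of a sinusoid only scales its amplitude.
blurred = np.sin(omega * x0) * np.exp(-0.5 * (omega * alpha) ** 2)
print(mc, blurred)
```

In a training loop, the change would be to feed the jittered coordinates to the network in place of the clean ones while 𝛼 > 0, leaving the loss and optimizer untouched; with 𝛼 = 0 the query is exactly the ordinary one.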

Selena's #Siggraph25 work found a simple, nearly one-line change that greatly eases neural field optimization for a wide variety of existing representations.

“Stochastic Preconditioning for Neural Field Optimization” by Selena Ling, Merlin Nimier-David, Alec Jacobson, & me.

03.06.2025 00:43 — 👍 54 🔁 8 💬 3 📌 1

Fun new paper at #SIGGRAPH2025:

What if, instead of two 6-sided dice, you could roll a single "funky-shaped" die that gives the same statistics (e.g., 7 is twice as likely as 4 or 10)?

Or make fair dice in any shape—e.g., dragons rather than cubes?

That's exactly what we do! 1/n

21.05.2025 16:29 — 👍 150 🔁 46 💬 8 📌 6
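The target statistics in that example are plain arithmetic: enumerating all 36 outcomes of two fair d6 gives the distribution a single funky die would have to reproduce. This small sketch only computes that target (`sum_distribution` is a hypothetical helper); designing a die shape that actually lands with these probabilities is the paper's contribution and is not shown here.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def sum_distribution(faces_a, faces_b):
    """Exact probability of each total when rolling two fair dice."""
    counts = Counter(a + b for a, b in product(faces_a, faces_b))
    total = len(faces_a) * len(faces_b)
    return {s: Fraction(c, total) for s, c in sorted(counts.items())}


d6 = range(1, 7)
dist = sum_distribution(d6, d6)
print(dist[7], dist[4], dist[10])  # 1/6 1/12 1/12
```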
