Oh, and I must mention the BAIR espresso machine! It was only while huddled around it over freshly ground coffee that we came up with this idea (we initially wondered whether content length matters for statistical behaviors). If you want good research, provide your students with coffee.

10.03.2025 17:32

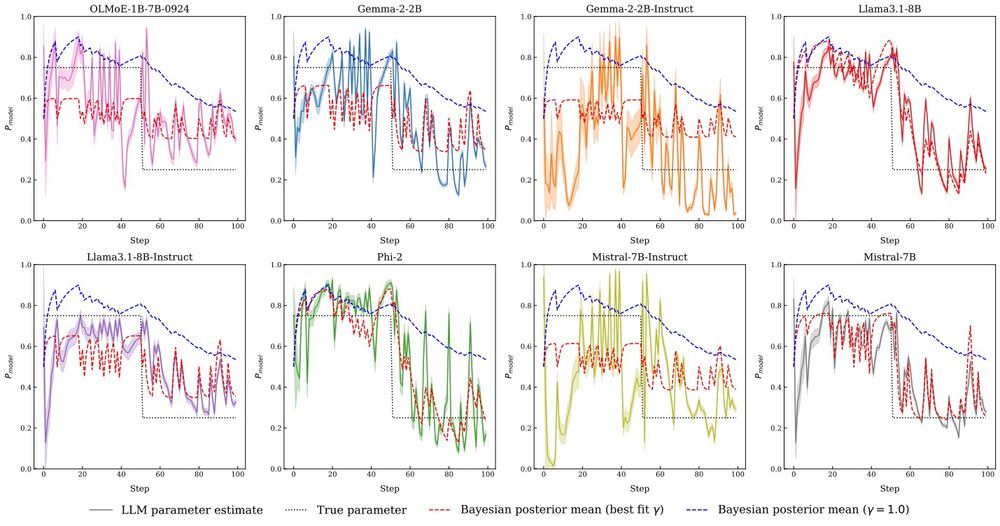

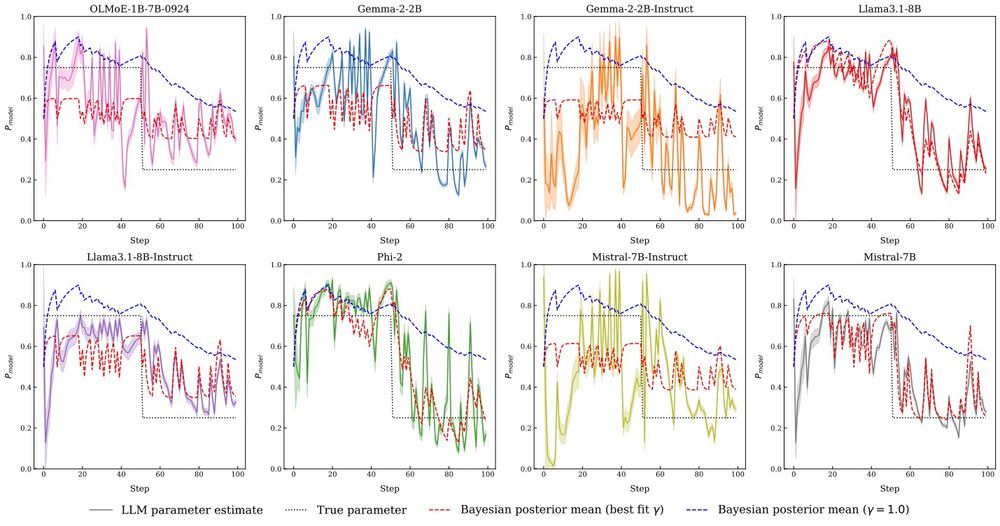

This behavior has some very interesting quirks. LLMs implicitly time-discount over the ICL examples: recent evidence matters more!

10.03.2025 17:32
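One simple way to picture "time-discounting" is a Beta-Bernoulli update in which older flips are down-weighted exponentially. This is a minimal sketch under stated assumptions, not the paper's estimator: the discount factor `gamma`, the uniform Beta(1, 1) prior, and the function name are hypothetical choices for illustration. With `gamma = 1` it reduces to the standard Bayesian update, where flip order does not matter; with `gamma < 1`, the same flips in a different order give a different posterior.

```python
def discounted_posterior_mean(flips, gamma, prior_a=1.0, prior_b=1.0):
    """Posterior mean of P(heads) under an exponentially discounted Beta update.

    flips: sequence of 1 (heads) / 0 (tails), oldest first.
    gamma: hypothetical discount factor in (0, 1]; gamma = 1 is the standard update.
    prior_a, prior_b: Beta prior pseudo-counts (uniform prior by default, an assumption).
    """
    n = len(flips)
    heads_w = 0.0
    tails_w = 0.0
    for i, flip in enumerate(flips):
        # The most recent flip has age 0 and weight 1; older flips shrink by gamma per step.
        w = gamma ** (n - 1 - i)
        if flip:
            heads_w += w
        else:
            tails_w += w
    return (prior_a + heads_w) / (prior_a + prior_b + heads_w + tails_w)


early_heads = [1] * 5 + [0] * 5  # heads come first, tails are most recent
late_heads = [0] * 5 + [1] * 5   # tails come first, heads are most recent

# Without discounting, order is irrelevant: both give (1 + 5) / (2 + 10) = 0.5.
print(round(discounted_posterior_mean(early_heads, 1.0), 3))  # 0.5
print(round(discounted_posterior_mean(late_heads, 1.0), 3))   # 0.5

# With discounting, recent heads pull the estimate up more than early heads do.
print(discounted_posterior_mean(late_heads, 0.8) > discounted_posterior_mean(early_heads, 0.8))  # True
```

A smaller `gamma` means a shorter effective memory, so the estimate tracks the most recent in-context flips more closely.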

Interestingly, models follow a trajectory very similar to what the true Bayesian posterior should look like given the same amount of evidence! When we prompt for coin flips from a 60%-heads-biased coin but give the model evidence that follows 70% heads, models converge to the latter.

10.03.2025 17:32
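For reference, the "true Bayesian posterior" trajectory for this setup can be sketched with a standard Beta-Binomial model. This is a minimal sketch, not the paper's code: it assumes the prompted 60% bias acts as a Beta prior worth a hypothetical `PRIOR_STRENGTH` of 10 pseudo-flips, and that the in-context evidence is exactly 70% heads.

```python
def posterior_mean(prior_mean: float, prior_strength: float, heads: int, flips: int) -> float:
    """Posterior mean of P(heads) under a Beta-Binomial model.

    The prior is Beta(a, b) with a = prior_mean * prior_strength and
    b = (1 - prior_mean) * prior_strength, so prior_strength behaves like a
    number of pseudo-flips (an illustrative assumption).
    """
    a = prior_mean * prior_strength
    b = (1.0 - prior_mean) * prior_strength
    return (a + heads) / (a + b + flips)


PRIOR_MEAN = 0.6       # bias requested in the prompt
PRIOR_STRENGTH = 10.0  # hypothetical: how many pseudo-flips the prompt is "worth"
EVIDENCE_RATE = 0.7    # fraction of heads in the in-context flips

for n in [0, 10, 50, 100, 1000]:
    heads = round(EVIDENCE_RATE * n)
    print(n, round(posterior_mean(PRIOR_MEAN, PRIOR_STRENGTH, heads, n), 3))
```

Under these assumptions the posterior mean moves 0.6 → 0.65 → 0.683 → 0.691 → 0.699: it starts at the prompted bias and approaches the evidence rate as more flips are shown. A larger `PRIOR_STRENGTH` would slow that convergence.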

Can we control this behavior? We tried many things before settling on in-context learning as a working mechanism. If we prompt an LLM to flip a biased coin and then show it increasingly long sequences of flips drawn from that distribution, models converge to the correct underlying parameter.

10.03.2025 17:32

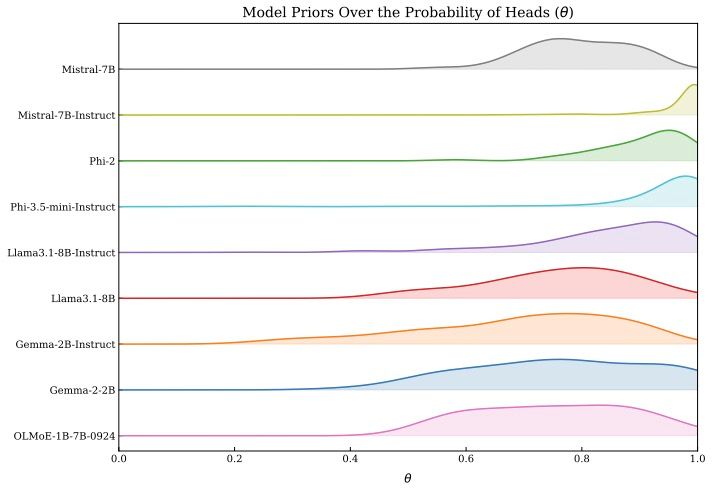

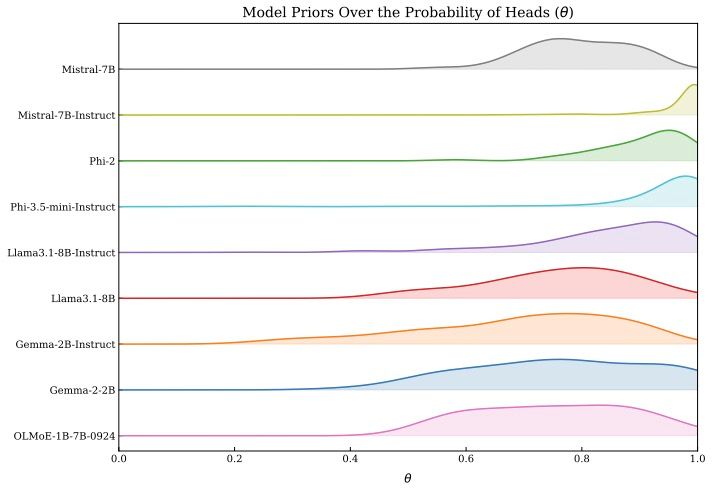

Biased coin flips follow a simple probability distribution that LLMs should be able to simulate explicitly. Yet when prompted to flip a fair coin, most LLMs predict heads 70–85% of the time! This holds even when you prompt the model to flip a biased coin 🪙

10.03.2025 17:32

Enough Coin Flips Can Make LLMs Act Bayesian

Large language models (LLMs) exhibit the ability to generalize given few-shot examples in their input prompt, an emergent capability known as in-context learning (ICL). We investigate whether LLMs uti...

Do LLMs understand probability distributions? Can they serve as effective simulators of probability? No!

However, in our latest paper we show that, via in-context learning, LLMs update their broken priors in a manner akin to Bayesian updating.

📝 arxiv.org/abs/2503.04722

10.03.2025 17:32

Computer Science Seminar Series. Making AI Work in the Crucible: Perception and Reasoning in Chaotic Environments. February 25, 2025, 228 Malone Hall. Refreshments available 10:30 a.m. Seminar begins 10:45 a.m. Ritwik Gupta, University of California, Berkeley.

Seminar with @ritwikgupta.bsky.social coming up! Learn more here: www.cs.jhu.edu/event/cs-sem...

11.02.2025 15:00

Recent proposals to kill the influence of “Chinese AI” in America will have devastating knock-on effects on American innovation. In this article, I discuss the statelessness of AI and the overly broad nature of Senator Hawley’s proposed legislation.

10.02.2025 16:32

It is a false premise that America has a lead in AI over China. So many articles have come out recently about DeepSeek threatening our lead. The lead in *meaningful* capabilities has never existed.

24.01.2025 19:23

The narrative that we have achieved peak data is so absurd to me. Humans are still around. New data is constantly being created. We just have to be more efficient about using it.

15.12.2024 02:55
