🤔💭What even is reasoning? It's time to answer the hard questions!

We built the first unified taxonomy of 28 cognitive elements underlying reasoning

Spoiler—LLMs commonly employ sequential reasoning, rarely self-awareness, and often fail to use correct reasoning structures🧠

25.11.2025 18:25 — 👍 45 🔁 8 💬 2 📌 0

Forget modeling every belief and goal! What if we represented people as following simple scripts instead (e.g., "cross the crosswalk")?

Our new paper shows AI which models others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI%F0%9F%...

03.10.2025 02:24 — 👍 36 🔁 14 💬 3 📌 3

New paper challenges how we think about Theory of Mind. What if we model others as executing simple behavioral scripts rather than reasoning about complex mental states? Our algorithm, ROTE (Representing Others' Trajectories as Executables), treats behavior prediction as program synthesis.

03.10.2025 05:01 — 👍 14 🔁 2 💬 2 📌 0
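
A toy sketch can illustrate the idea of treating behavior prediction as a search over programs. This is not the ROTE algorithm itself: the candidate scripts, the observation fields, and the scoring rule below are all hypothetical. They only show how a short program that reproduces observed behavior can then be run to predict the next action.

```python
# Hypothetical candidate "scripts": each maps an observation dict to an action.
def cross_at_crosswalk(obs):
    return "cross" if obs["at_crosswalk"] and obs["light"] == "walk" else "wait"

def always_cross(obs):
    return "cross"

def always_wait(obs):
    return "wait"

CANDIDATES = [cross_at_crosswalk, always_cross, always_wait]

def fit_script(trajectory, candidates=CANDIDATES):
    """Return the candidate that reproduces the most observed (obs, action) pairs."""
    def accuracy(script):
        return sum(script(obs) == act for obs, act in trajectory) / len(trajectory)
    return max(candidates, key=accuracy)

def predict(script, obs):
    """Predict the next action by simply executing the selected script."""
    return script(obs)

# Made-up observations of one pedestrian.
observed = [
    ({"at_crosswalk": True, "light": "stop"}, "wait"),
    ({"at_crosswalk": True, "light": "walk"}, "cross"),
    ({"at_crosswalk": False, "light": "walk"}, "wait"),
]
best = fit_script(observed)
```

Here `best` is the script that explains all three observations, and `predict(best, new_obs)` runs it on an unseen situation. No beliefs or goals are represented anywhere, which is the contrast the post draws.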

Definitely. We should look more closely at sample complexity for training, but for things like webnav there are massive datasets, so it could be a good fit.

03.10.2025 00:12 — 👍 1 🔁 0 💬 0 📌 0

In some sense, yes, in that you need diverse trajectories of the agent's behavior in different contexts, but you don't need to have access to those goals, or even the distribution, and the agent might be doing non-goal-directed behavior, such as exploration.

02.10.2025 19:49 — 👍 1 🔁 0 💬 1 📌 0

When values collide, what do LLMs choose? In our new paper, "Generative Value Conflicts Reveal LLM Priorities," we generate scenarios where values are traded off against each other. We find models prioritize "protective" values in multiple-choice, but shift toward "personal" values when interacting.

02.10.2025 18:37 — 👍 10 🔁 0 💬 1 📌 0

Very cool! Thanks for sharing! Would be interesting to compare your exploration ideas on open ended tasks beyond little alchemy with EELMA

02.10.2025 05:04 — 👍 1 🔁 0 💬 0 📌 0

Excited by our new work estimating the empowerment of LLM-based agents in text and code. Empowerment is the causal influence an agent has over its environment and measures an agent's capabilities without requiring knowledge of its goals or intentions.

01.10.2025 04:27 — 👍 17 🔁 2 💬 3 📌 0
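
For readers new to the term, a common information-theoretic formalization defines n-step empowerment as the channel capacity between an agent's action sequences and the state they lead to. When the dynamics are deterministic, this reduces to the base-2 logarithm of the number of distinct reachable states. The sketch below computes that quantity in a toy corridor world. The environment and function names are assumptions for illustration, and the estimator used in the work above may differ.

```python
import math
from itertools import product

def step(pos, action, length):
    """Deterministic toy dynamics: move along a corridor of `length` cells, clamped at the walls."""
    return min(max(pos + action, 0), length - 1)

def empowerment(pos, horizon, length, actions=(-1, 0, 1)):
    """n-step empowerment in bits for deterministic dynamics:
    log2 of the number of distinct states reachable in `horizon` steps."""
    end_states = set()
    for seq in product(actions, repeat=horizon):
        s = pos
        for a in seq:
            s = step(s, a, length)
        end_states.add(s)
    return math.log2(len(end_states))
```

In a five-cell corridor, `empowerment(0, 1, 5)` is lower than `empowerment(2, 1, 5)`: an agent pressed against the wall can reach fewer distinct states than one in the middle. The calculation never refers to what the agent wants.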

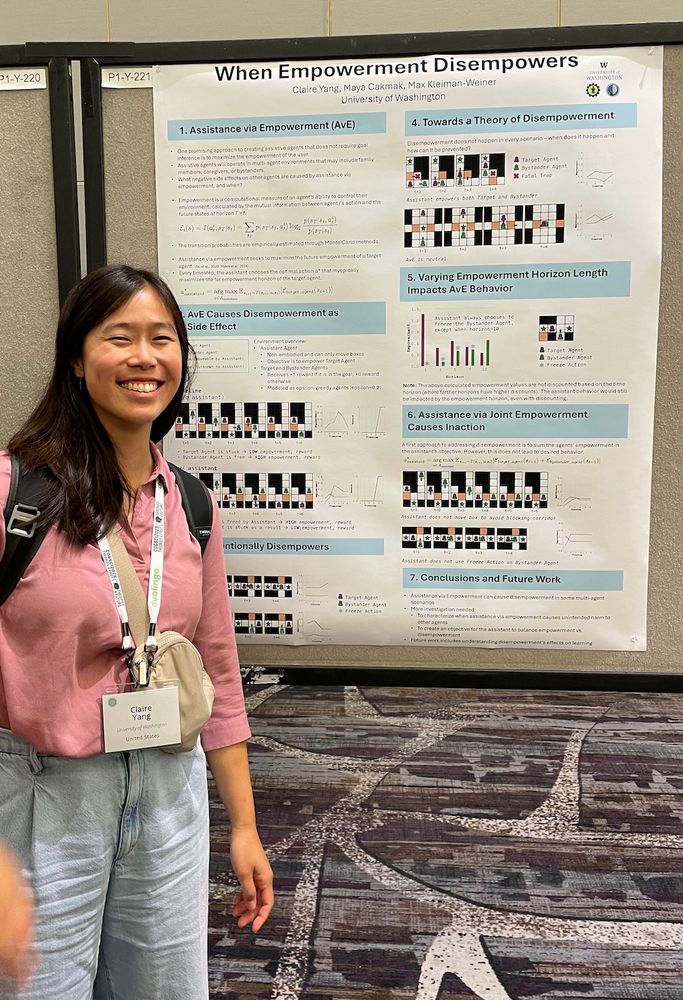

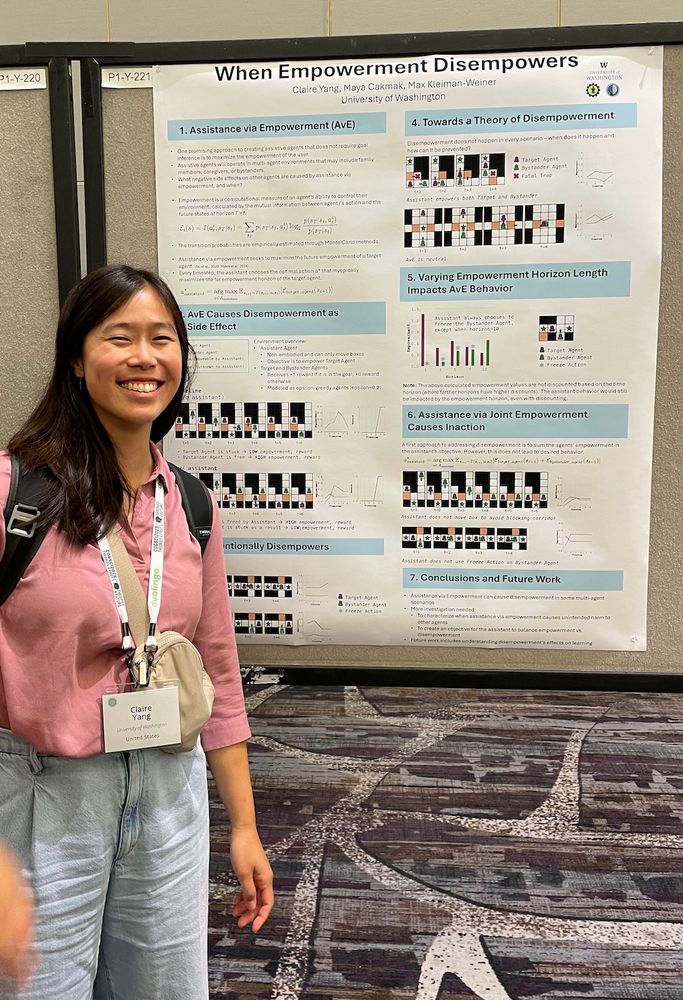

Claire's new work showing that when an assistant aims to optimize another's empowerment, it can lead to others being disempowered (both as a side effect and as an intentional outcome)!

06.08.2025 22:44 — 👍 7 🔁 0 💬 0 📌 0

Person standing next to poster titled "When Empowerment Disempowers"

Still catching up on my notes after my first #cogsci2025, but I'm so grateful for all the conversations and new friends and connections! I presented my poster "When Empowerment Disempowers" -- if we didn't get the chance to chat or you would like to chat more, please reach out!

06.08.2025 22:31 — 👍 16 🔁 3 💬 0 📌 1

It’s forgivable =) We just do the best we can with what we have (i.e., resource rational) 🤣

31.07.2025 23:56 — 👍 2 🔁 0 💬 0 📌 0

Max giving a talk w the slide in OP

lol this may be the most cogsci cogsci slide I've ever seen, from @maxkw.bsky.social

"before I got married I had six theories about raising children, now I have six kids and no theories"......but here's another theory #cogsci2025

31.07.2025 18:18 — 👍 67 🔁 9 💬 2 📌 1

Evolving general cooperation with a Bayesian theory of mind | PNAS

Theories of the evolution of cooperation through reciprocity explain how unrelated self-interested individuals can accomplish more together than th...

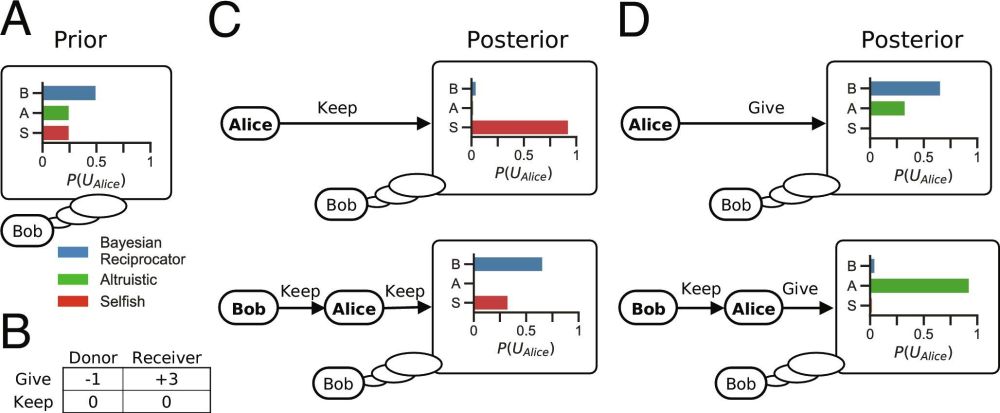

Quantifying the cooperative advantage shows why humans, the most sophisticated cooperators, also have the most sophisticated machinery for understanding the minds of others. It also offers principles for building more cooperative AI systems. Check out the full paper!

www.pnas.org/doi/10.1073/...

22.07.2025 06:03 — 👍 8 🔁 0 💬 2 📌 0

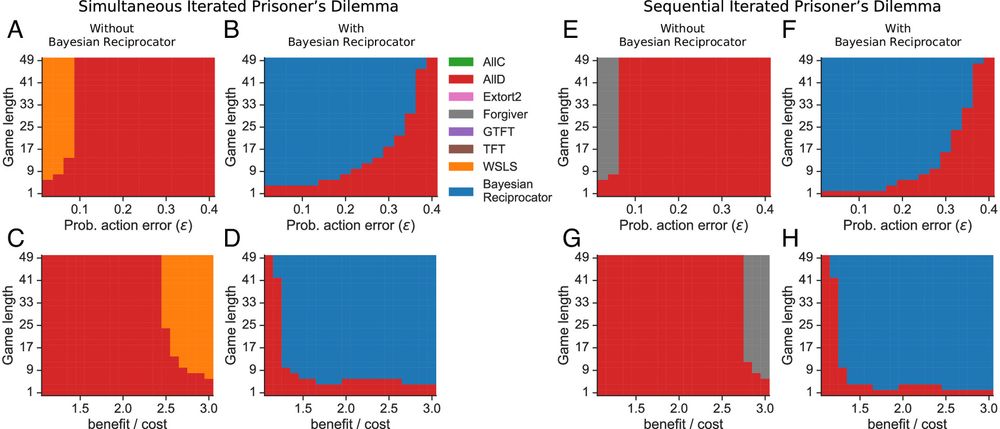

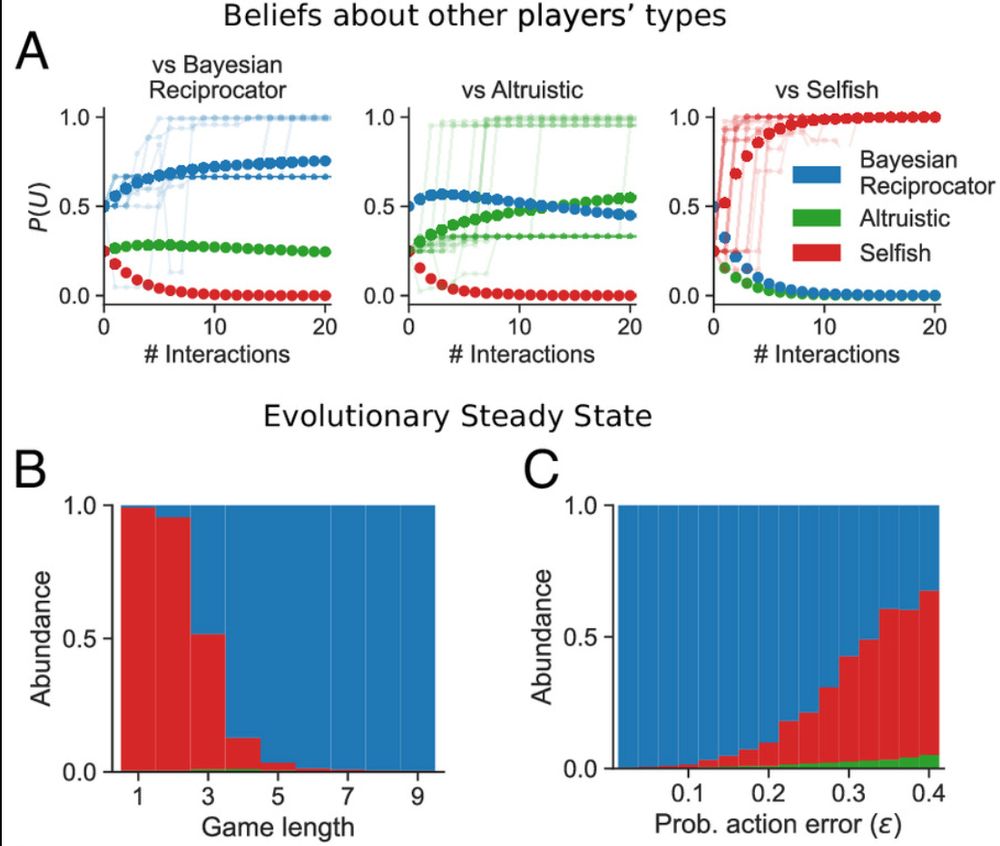

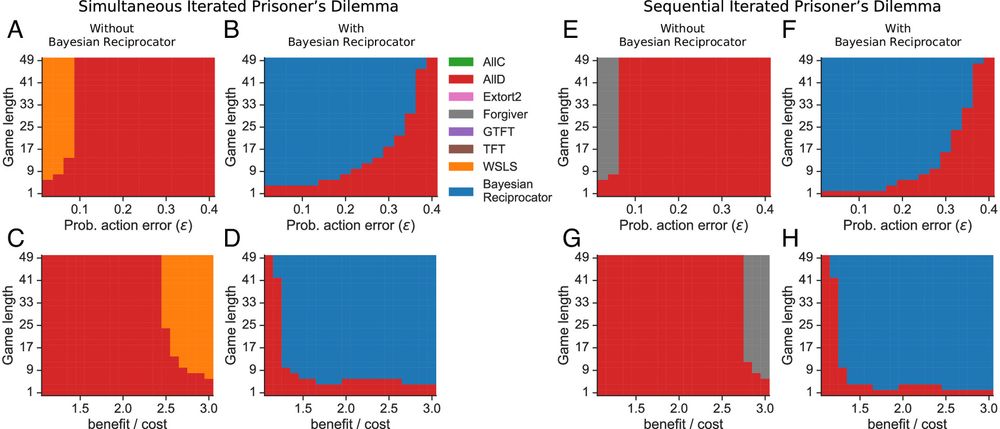

Finally, when we tested it against memory-1 strategies (such as TFT and WSLS) in the iterated prisoner's dilemma, the Bayesian Reciprocator expanded the range where cooperation is possible and dominated prior algorithms, using the *same* model across simultaneous & sequential games.

22.07.2025 06:03 — 👍 5 🔁 0 💬 1 📌 0
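
The memory-1 baselines named in the post above, tit-for-tat (TFT) and win-stay lose-shift (WSLS), choose each move from the previous round only. Below is a minimal sketch of both in an iterated prisoner's dilemma. The payoff values (T=5, R=3, P=1, S=0) and the `flips` noise mechanism are assumptions for illustration, not the paper's experimental setup.

```python
C, D = "C", "D"
# (my move, their move) -> (my payoff, their payoff); conventional illustrative values.
PAYOFF = {(C, C): (3, 3), (C, D): (0, 5), (D, C): (5, 0), (D, D): (1, 1)}

def tit_for_tat(my_last, their_last):
    """Cooperate first, then copy the partner's previous move."""
    return C if their_last is None else their_last

def win_stay_lose_shift(my_last, their_last):
    """Cooperate first; repeat the last move after a good payoff (>= 3), otherwise switch."""
    if my_last is None:
        return C
    if PAYOFF[(my_last, their_last)][0] >= 3:
        return my_last
    return D if my_last == C else C

def play(strategy_a, strategy_b, rounds, flips=()):
    """Play `rounds` rounds; in rounds listed in `flips`, player A's move is flipped (an execution error)."""
    a_last = b_last = None
    history = []
    for t in range(rounds):
        a = strategy_a(a_last, b_last)
        b = strategy_b(b_last, a_last)
        if t in flips:
            a = D if a == C else C
        history.append((a, b))
        a_last, b_last = a, b
    return history

tft_history = play(tit_for_tat, tit_for_tat, 6, flips={1})
wsls_history = play(win_stay_lose_shift, win_stay_lose_shift, 6, flips={1})
```

With a single error in round 1, two TFT players fall into an alternating echo of defections, while two WSLS players return to mutual cooperation after one round of mutual defection. Neither strategy models why the partner acted as it did.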

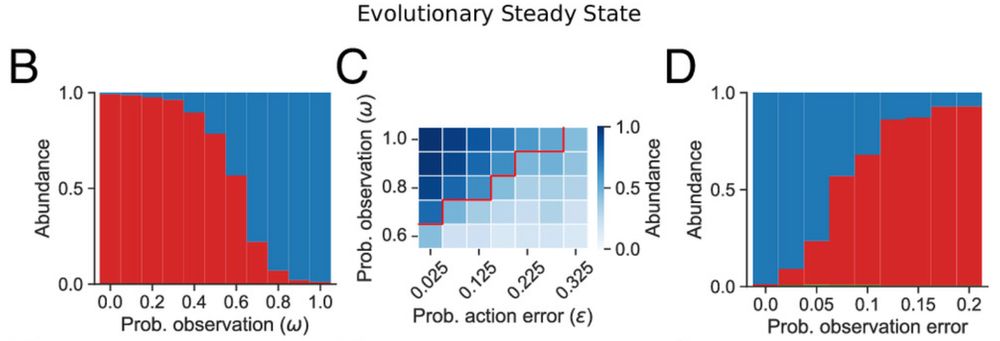

Even in one-shot games with observability, the Bayesian Reciprocator learns from observing others' interactions and enables cooperation through indirect reciprocity

22.07.2025 06:03 — 👍 6 🔁 0 💬 1 📌 0

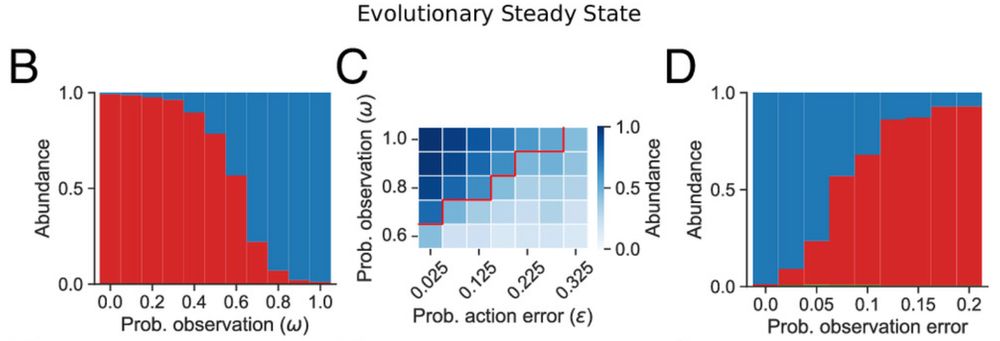

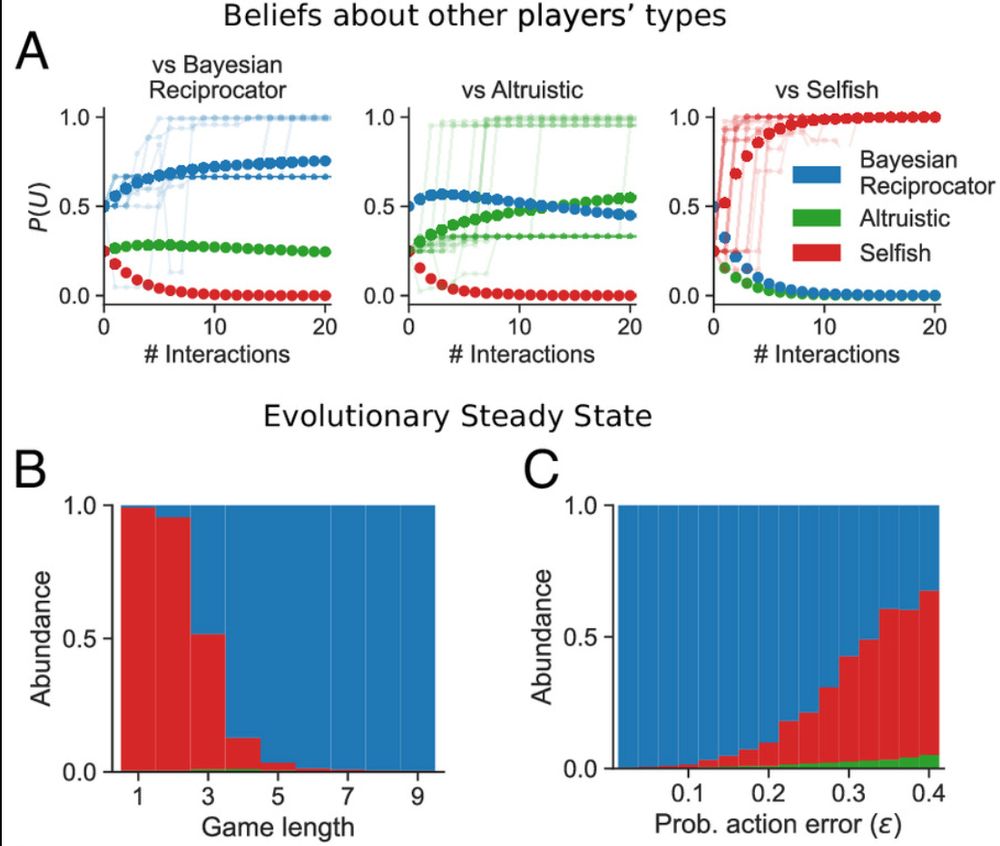

In dyadic repeated interactions in the Game Generator, the Bayesian Reciprocator quickly learns to distinguish cooperators from cheaters, remains robust to errors, and achieves high population payoffs through sustained cooperation.

22.07.2025 06:03 — 👍 6 🔁 0 💬 2 📌 0

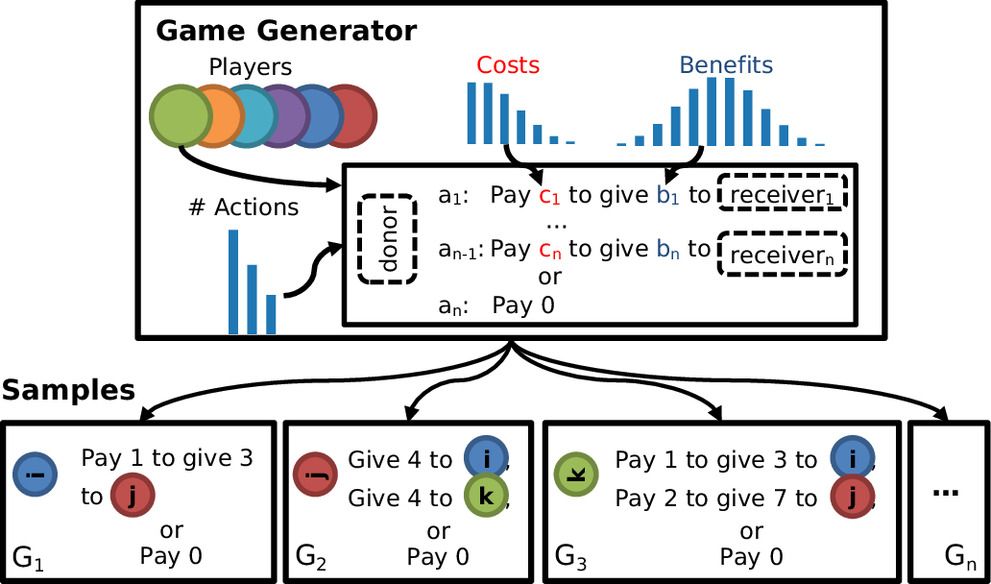

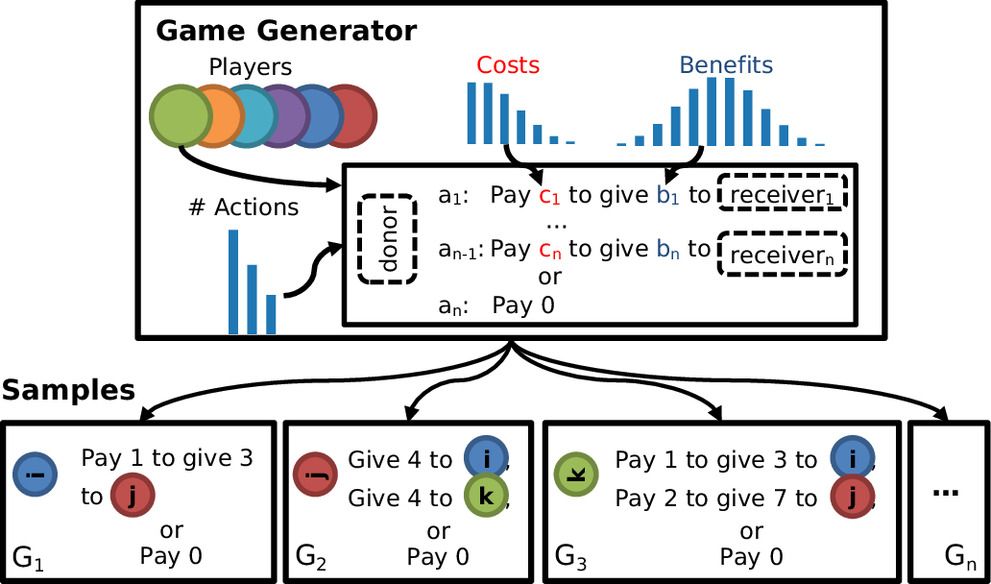

Instead of just testing on the repeated prisoner's dilemma, we created a "Game Generator" that produces an endless variety of cooperation challenges where no two interactions are alike. Many classic games, like the prisoner's dilemma or resource allocation games, are just special cases.

22.07.2025 06:03 — 👍 9 🔁 0 💬 1 📌 0

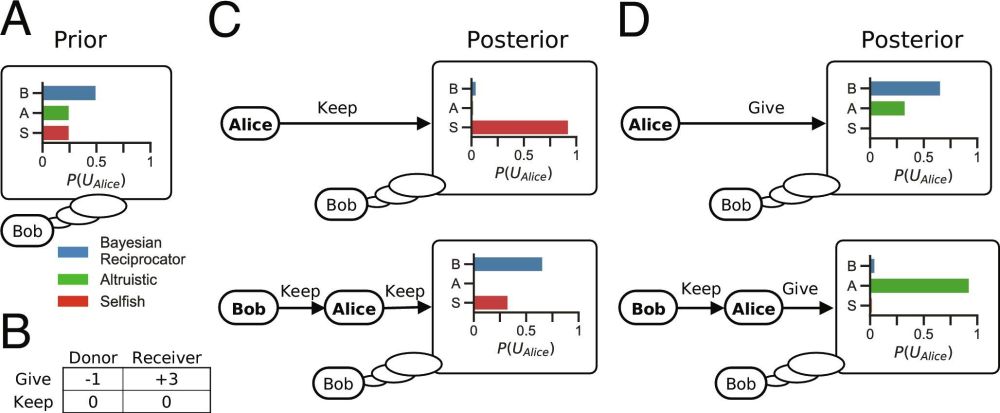

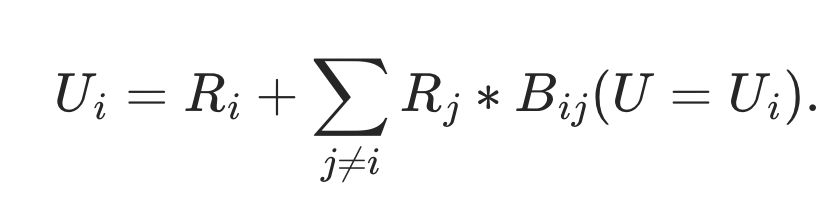

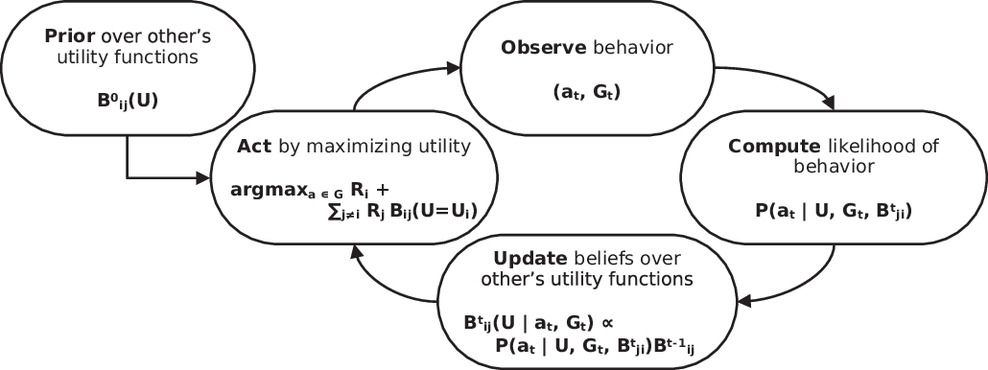

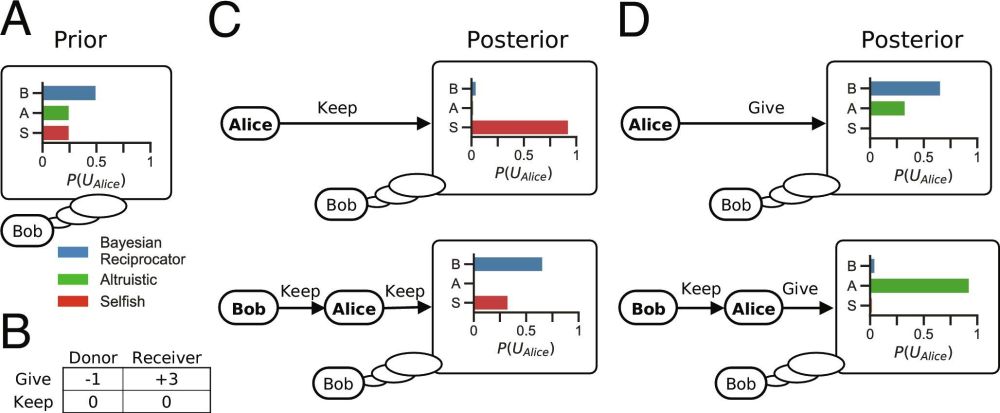

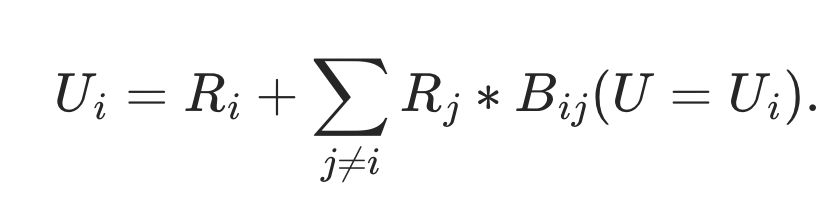

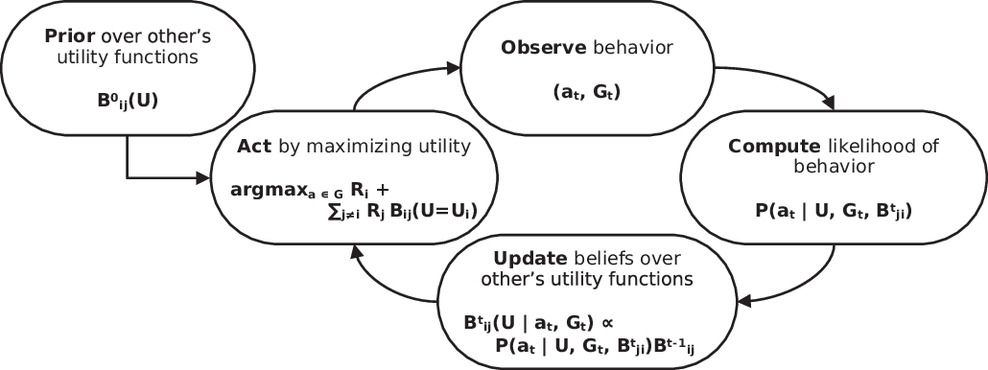

It uses theory of mind to infer the latent utility functions of others through Bayesian inference and an abstract utility calculus to work across ANY game.

22.07.2025 06:03 — 👍 5 🔁 0 💬 1 📌 0

We introduce the "Bayesian Reciprocator," an agent that cooperates with others in proportion to its belief that they share its utility function.

22.07.2025 06:03 — 👍 7 🔁 0 💬 1 📌 0
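
The belief-driven cooperation described in the post above can be pictured with a two-hypothesis Bayes update. This is a simplified sketch, not the paper's model: the two partner types, the likelihood values, and the rule that sets the cooperation probability equal to the belief are all assumptions chosen for illustration.

```python
def update_belief(prior, observed_cooperation,
                  p_coop_if_shared=0.9, p_coop_if_not=0.2):
    """Posterior probability that the partner shares my utility function,
    after observing one cooperative (True) or non-cooperative (False) act.
    The two likelihood parameters are hypothetical."""
    like_shared = p_coop_if_shared if observed_cooperation else 1 - p_coop_if_shared
    like_not = p_coop_if_not if observed_cooperation else 1 - p_coop_if_not
    evidence = like_shared * prior + like_not * (1 - prior)
    return like_shared * prior / evidence

def cooperation_probability(belief):
    """Toy decision rule: cooperate with probability equal to the current belief."""
    return belief

belief = 0.5
for act in (True, True, False):  # made-up observations of the partner
    belief = update_belief(belief, act)
```

Because the update depends only on how likely each type is to produce the observed act, the same code applies whether the act was directed at the agent or at a third party, which is one way to read the indirect-reciprocity result in the thread.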

Classic models of cooperation like tit-for-tat are simple but brittle. They only work in specific games, can't handle noise and stochasticity, and don't understand others' intentions. But human cooperation is remarkably flexible and robust. How and why?

22.07.2025 06:03 — 👍 6 🔁 0 💬 1 📌 0

This project was first presented back in 2018 (!) and was born from a collaboration between Alejandro Vientos, Dave Rand @dgrand.bsky.social & Josh Tenenbaum @joshtenenbaum.bsky.social

22.07.2025 06:03 — 👍 7 🔁 0 💬 1 📌 0

Our new paper is out in PNAS: "Evolving general cooperation with a Bayesian theory of mind"!

Humans are the ultimate cooperators. We coordinate on a scale and scope no other species (nor AI) can match. What makes this possible? 🧵

www.pnas.org/doi/10.1073/...

22.07.2025 06:03 — 👍 92 🔁 36 💬 2 📌 2

As always, CogSci has a fantastic lineup of workshops this year. An embarrassment of riches!

Still deciding which to pick? If you are interested in building computational models of social cognition, I hope you consider joining @maxkw.bsky.social, @dae.bsky.social, and me for a crash course on memo!

18.07.2025 13:56 — 👍 21 🔁 6 💬 1 📌 0

Very excited for this workshop!

17.07.2025 04:42 — 👍 14 🔁 2 💬 0 📌 0

Promotional image for a #CogSci2025 workshop titled “Building computational models of social cognition in memo.” Organized and presented by Kartik Chandra, Sean Dae Houlihan, and Max Kleiman-Weiner. Scheduled for July 30 at 8:30 AM in room Pacifica I. The banner features the conference theme “Theories of the Past / Theories of the Future,” and the dates: July 30–August 2 in San Francisco.

#Workshop at #CogSci2025

Building computational models of social cognition in memo

🗓️ Wednesday, July 30

📍 Pacifica I - 8:30-10:00

🗣️ Kartik Chandra, Sean Dae Houlihan, and Max Kleiman-Weiner

🧑‍💻 underline.io/events/489/s...

16.07.2025 20:32 — 👍 12 🔁 2 💬 1 📌 2

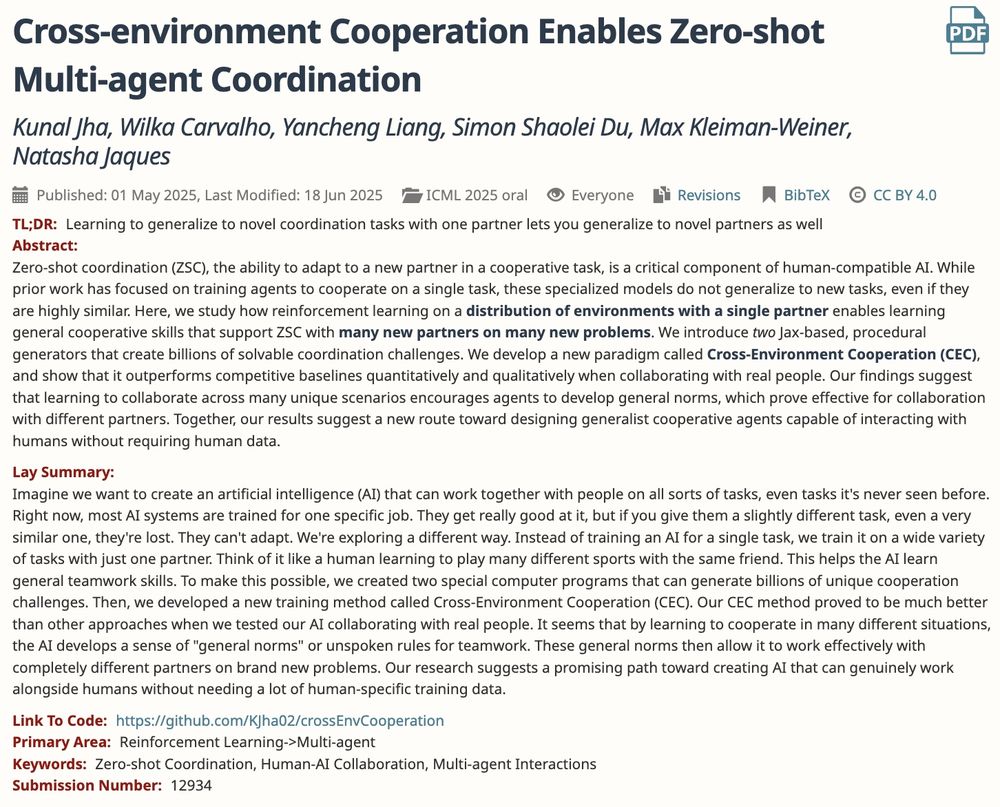

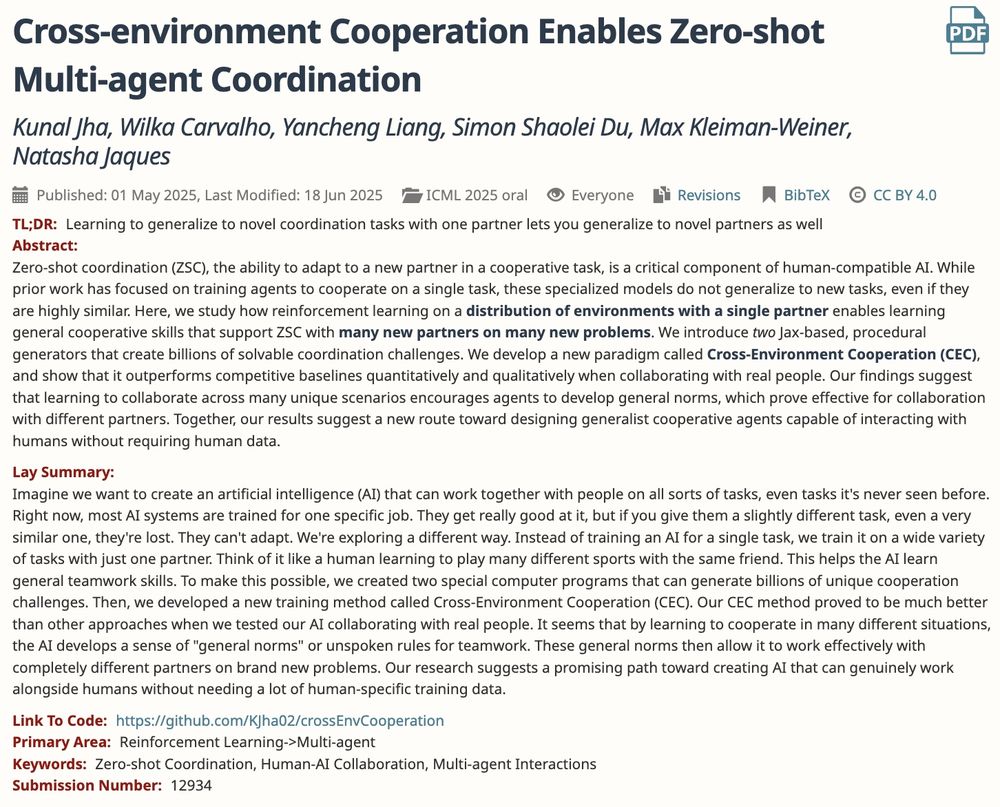

'Cross-environment Cooperation Enables Zero-shot Multi-agent Coordination'

@kjha02.bsky.social · Wilka Carvalho · Yancheng Liang · Simon Du ·

@maxkw.bsky.social · @natashajaques.bsky.social

doi.org/10.48550/arX...

(3/20)

15.07.2025 13:44 — 👍 6 🔁 2 💬 1 📌 0

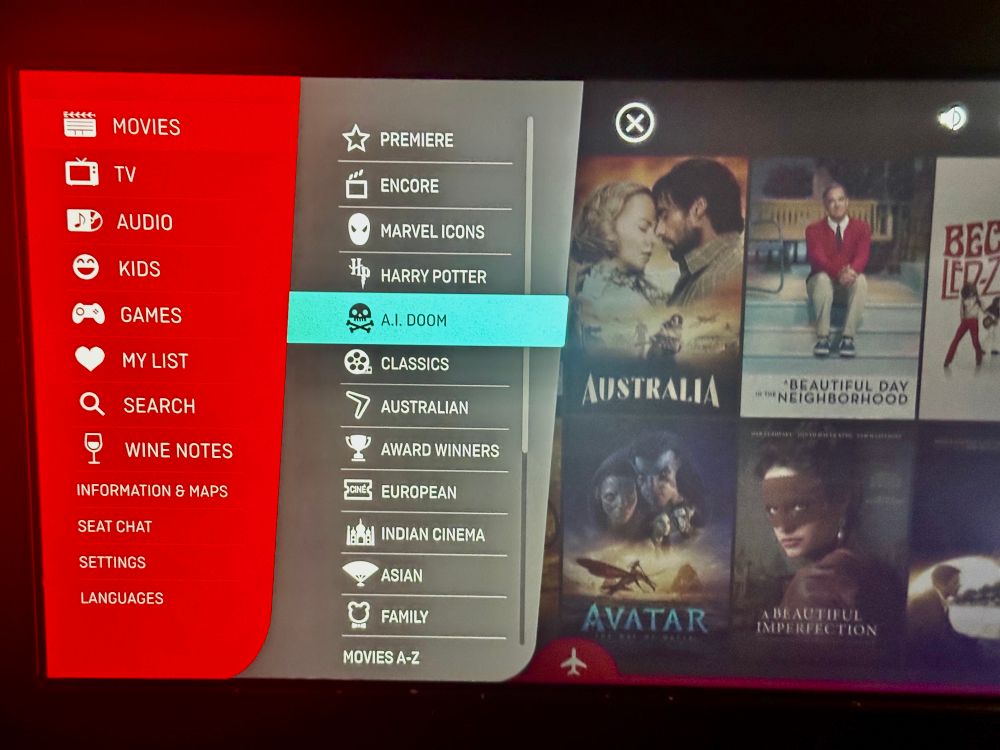

AI DOOM

Settling in for my flight and apparently A.I. DOOM is now a movie genre between Harry Potter and Classics. Nothing better than an existential crisis with pretzels and a ginger ale.

29.06.2025 22:52 — 👍 6 🔁 0 💬 0 📌 0

PhD student in psychology at Harvard, research fellow at Goodfire AI

AI interpretability + computational cognitive science

language, (social) cognition, AI

societal impacts of AI | assistant professor of philosophy & software and societal systems at Carnegie Mellon University & AI2050 Schmidt Sciences early-career fellow | system engineering + AI + philosophy | https://kasirzadeh.org/

Inspired by Cognitive Science and Philosophy.

AGI safety researcher at Google DeepMind, leading causalincentives.com

Personal website: tomeveritt.se

Studying multi-agent collaboration 🤝🧩🤖

PhD Candidate at Princeton CS with Tom Griffiths & Natalia Vélez @cocoscilab.bsky.social @velezcolab.bsky.social

Prev: Cornell CS, MIT BCS

Computational cognitive science of learning, reasoning and creativity, in both social and non-social contexts 🧠🎾🌈🥘

Assistant Professor @ ISMMS

NIH Director's Early Independence Awardee

Lindau Nobel Laureate Young Scientist

PI @ sinclaboratory.com

Using computational models, fMRI, & intracranial EEG to study social inference, learning, empathy, loneliness, & well-being

Assistant Professor at University of New Hampshire | studying how kids and adults explore, explain, and learn | she/her 🏳️‍🌈 | emilyliquin.com

Assistant Professor @MIT Sloan exploring principles of rationality and irrationality. Cognitive science, computational neuroscience, and behavioral economics. https://mitmgmtfaculty.mit.edu/rbhui/

Anthropologist studying motherhood, cooperative foraging & childcare, and knowledge transmission among BaYaka foragers in the Congolese rainforest

Research scientist @ird-fr.bsky.social

https://sites.google.com/view/haneuljang

Psychology postdoc studying how people think about truth and credibility, and interested in open science and metascience.

Fostering interdisciplinary collaboration among scholars and storytellers interested in the origins, nature, and future of intelligences.

Web: www.disi.org

Podcast: Many Minds (@manymindspod.bsky.social)

Catherine Hartley's research group in the Department of Psychology at NYU, focused on characterizing the development and dynamics of the learning, memory, and decision-making processes that shape our behavior

https://www.hartleylab.org/

phd student in psychology at oxford uni. she/her

licezhang.github.io

https://jessyli.com Associate Professor, UT Austin Linguistics.

Part of UT Computational Linguistics https://sites.utexas.edu/compling/ and UT NLP https://www.nlp.utexas.edu/

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Cognitive science + information systems at Stevens Institute of Technology. Studying minds, brains, and machines. 💡🧠🤖

Job hunting psychologist of monkeys, wolves, dogs and anything interesting and fundable. Incapable of reading anyone's mind including my own. I research animal personality, happiness, and cognition when paid to do so. Views my own unless they're wrong

Associate Professor of Computer Science and Psychology @ Princeton. Posts are my views only. https://www.cs.princeton.edu/~bl8144/