This work is a collaboration with a team of talented researchers at Area Science Park in Trieste, Italy.

Special thanks to @alexpietroserra.bsky.social, Alessio Ansuini, and @albecazzaniga.bsky.social!

If you are at @neuripsconf.bsky.social, don't miss our poster tomorrow, Dec 11, at 11am!

🧵6/6

10.12.2024 21:46 — 👍 2 🔁 0 💬 0 📌 0

⚒️ We applied an advanced density-based clustering algorithm, showing its potential both as an interpretability tool and as a guide to new strategies for fine-tuning LLMs effectively.

🧵5/6

10.12.2024 19:54 — 👍 2 🔁 0 💬 1 📌 0

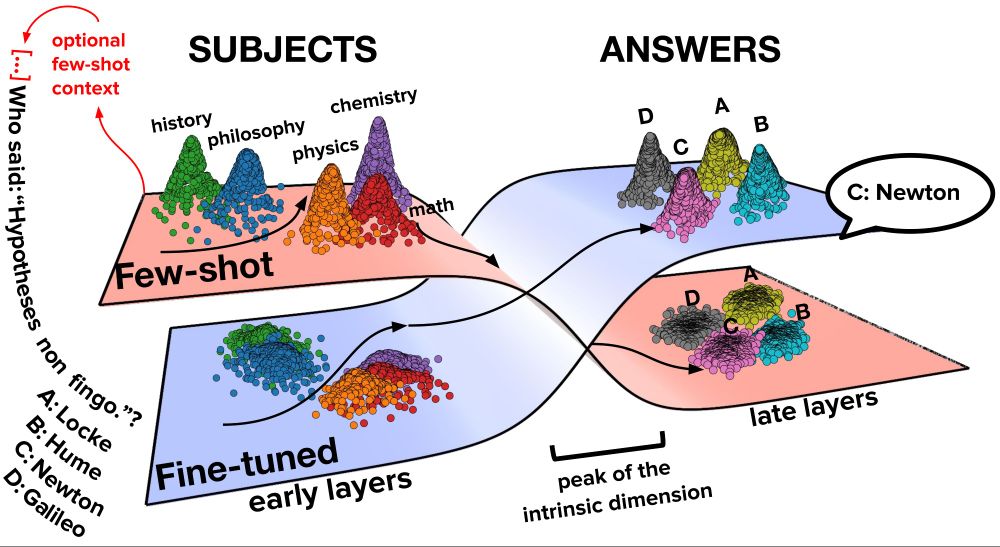

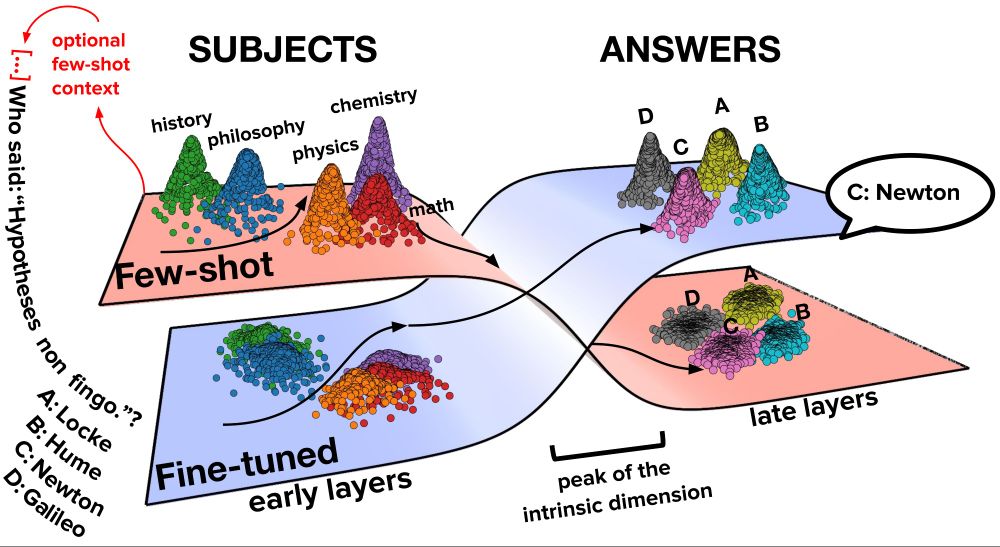

In fine-tuning, answer-focused modes rapidly emerge midway through the network, just after the intrinsic dimension peak.

Early layers remain largely unchanged.

🧵4/6

10.12.2024 19:52 — 👍 2 🔁 0 💬 1 📌 0

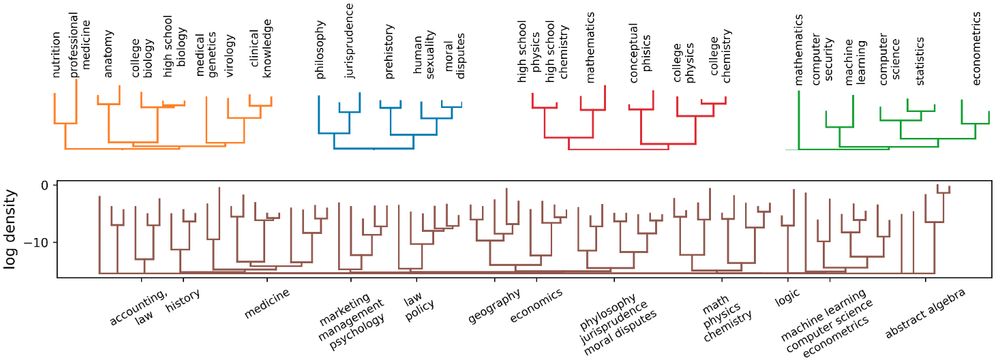

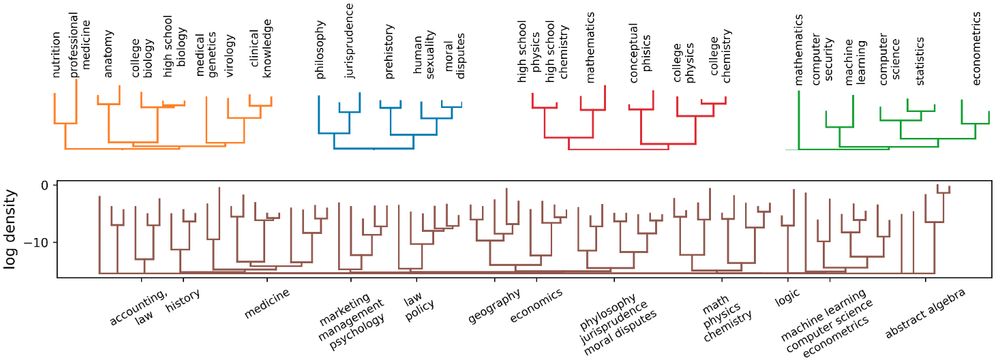

In few-shot learning, the prompt topic defines the modes of the data distribution early in the network, and the density modes are organized hierarchically according to the similarity of the subjects.

🧵3/6

10.12.2024 19:49 — 👍 2 🔁 0 💬 1 📌 0

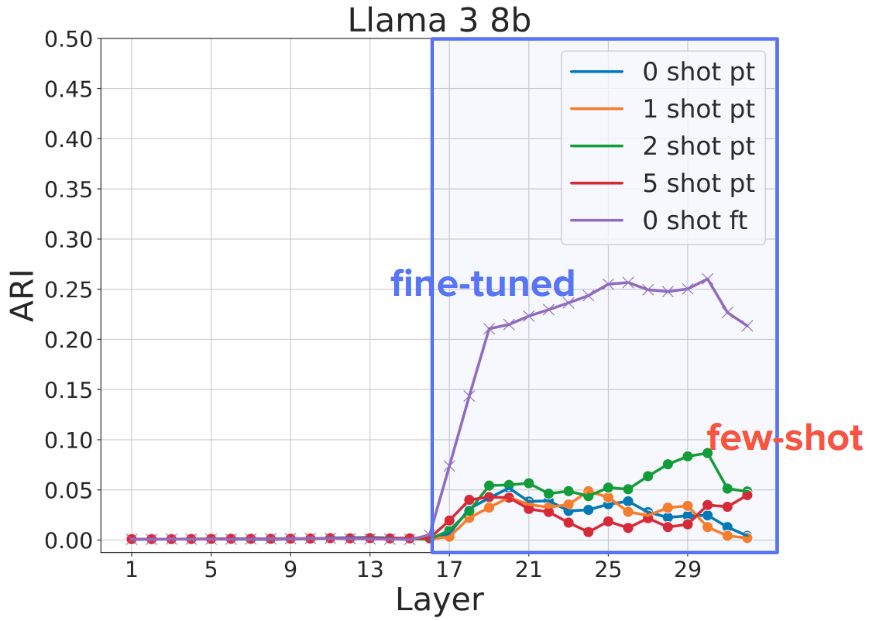

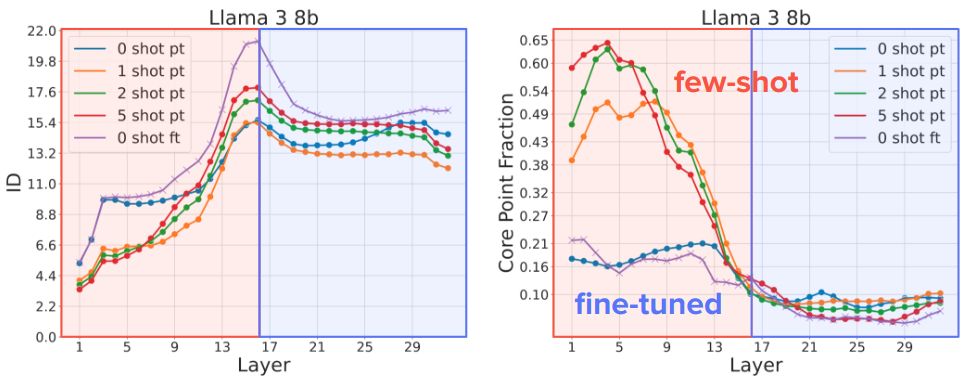

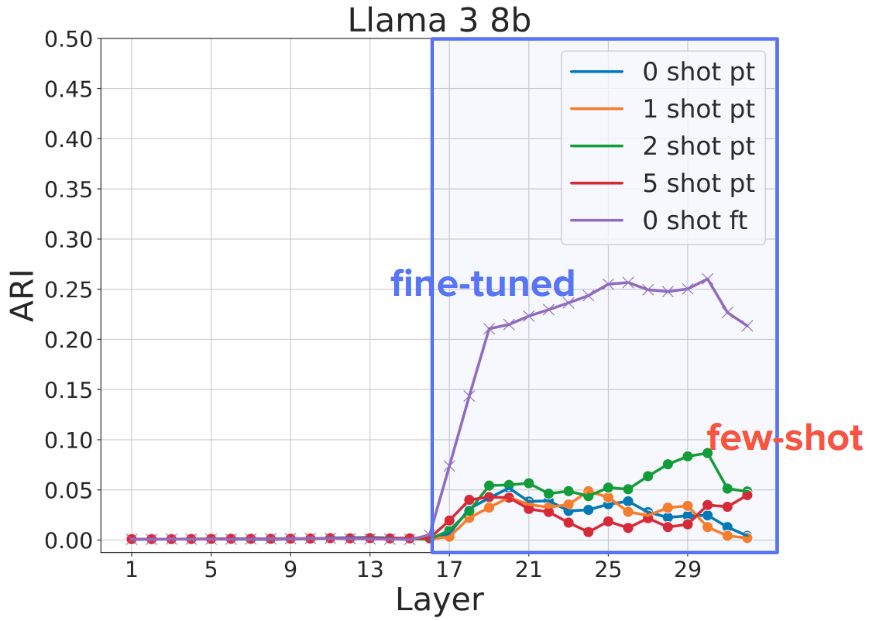

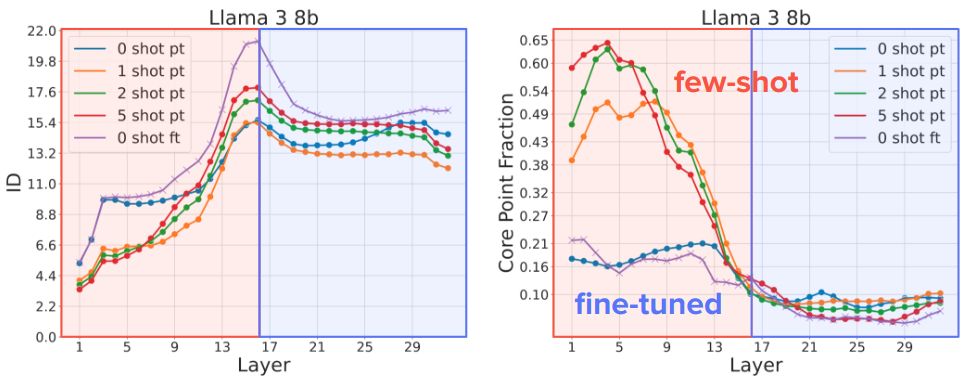

🎯 Key results: few-shot learning and fine-tuning show two distinct processing phases inside LLMs.

These phases are separated by a peak in the intrinsic dimension of the data and a sharp decrease in the separation between the probability modes.

Paper: arxiv.org/abs/2409.03662

🧵2/6

10.12.2024 19:48 — 👍 2 🔁 0 💬 1 📌 0

Just landed in Vancouver to present the results of our new work at @neuripsconf.bsky.social!

Few-shot learning and fine-tuning change the layers inside LLMs in dramatically different ways, even when they perform equally well on multiple-choice question-answering tasks.

🧵1/6

10.12.2024 19:47 — 👍 10 🔁 0 💬 1 📌 3
