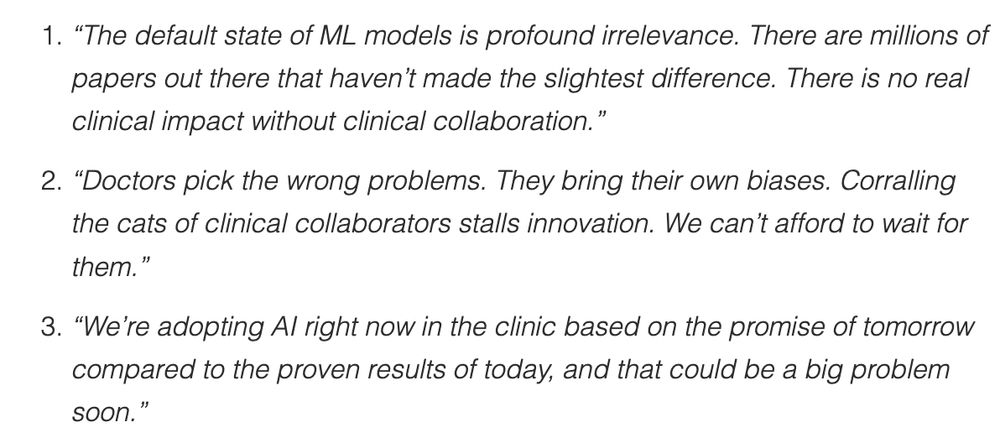

What happens in SAIL 2025 stays in SAIL 2025 -- except for these anonymized hot takes! 🔥 Jotted down 17 de-identified quotes on AI and medicine from medical executives, journal editors, and academics in off-the-record discussions in Puerto Rico

irenechen.net/sail2025/

12.05.2025 14:02

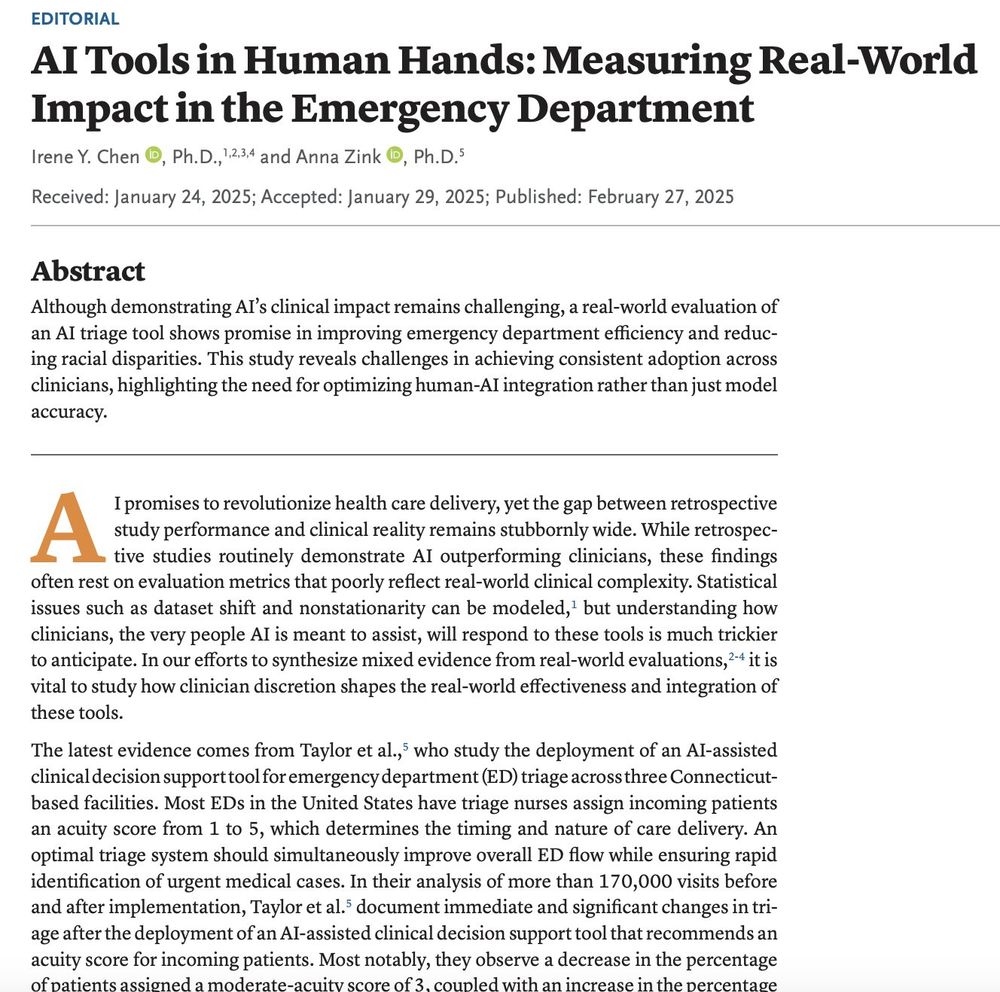

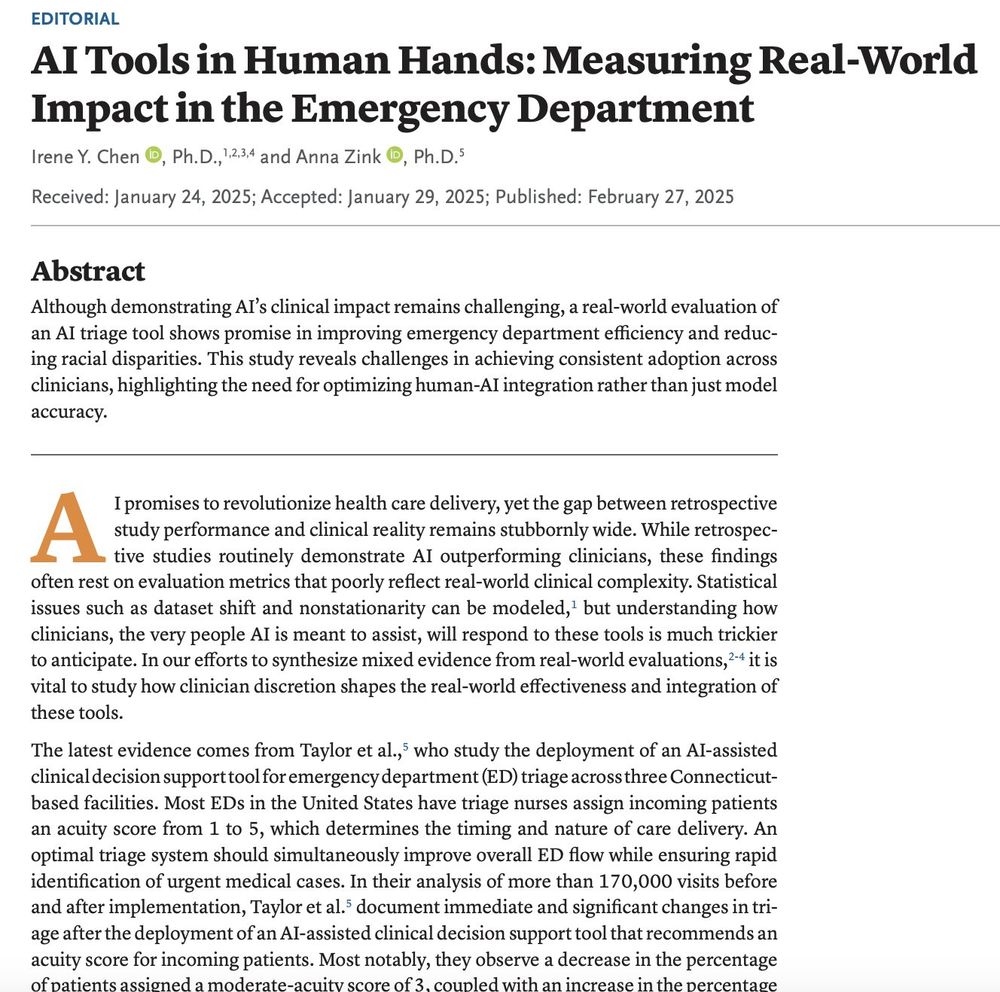

AI deployments in health are often understudied because they require time and careful analysis.⌛️🤔

We share thoughts in @ai.nejm.org about a recent AI tool for emergency dept triage that: 1) improves wait times and fairness (!), and 2) helps nurses unevenly based on triage ability

27.02.2025 21:06

Great q! We found that standard ML training (incl rebalancing data, etc) still reflected the differences in EHR data quality. Later we started experimenting with different options such as adding self-report data to the model, but using regular ML training did not seem to help!

20.12.2024 17:44

tl;dr Healthcare access disparities cascade through the entire ML pipeline.

Check out our working paper here: arxiv.org/pdf/2412.07712

20.12.2024 01:04

Finding 3: Here's what helped. Adding patient self-reported data boosted model sensitivity by 11.2% for underserved patients. (Note: adding the low/high access info did NOT help.)

20.12.2024 01:04

Finding 2: "Just train a better model" isn't enough. EHR reliability has large effects on balanced accuracy (5.8% drop) and sensitivity (9.4% drop).

20.12.2024 01:04
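To unpack the two metrics in Finding 2: sensitivity is the share of truly positive patients a model flags, and balanced accuracy averages sensitivity with specificity, so a rare condition can't be masked by many easy negatives. A minimal Python sketch; the toy labels are made up for illustration and are not data from the paper.

```python
# Minimal sketch: sensitivity and balanced accuracy for binary 0/1 labels.
# The toy labels below are hypothetical, not data from the paper.

def confusion_counts(y_true, y_pred):
    """Return (tp, fn, tn, fp) for binary 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp, fn, tn, fp

def sensitivity(y_true, y_pred):
    """True positive rate: share of actual positives the model flags."""
    tp, fn, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)

def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity; robust to class imbalance."""
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    return (tp / (tp + fn) + tn / (tn + fp)) / 2

# 4 positives (3 caught), 6 negatives (1 false alarm)
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(sensitivity(y_true, y_pred))                   # 0.75
print(round(balanced_accuracy(y_true, y_pred), 3))   # 0.792
```

Comparing these numbers between patients with reliable vs. unreliable EHR records is one way to see the kind of gap Finding 2 describes.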

Finding 1: For 78% of the medical conditions we examined, data quality was worse for patients facing cost/time barriers to healthcare. EHR reliability is measured against patient self-report.

20.12.2024 01:04
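A hypothetical sketch of how a figure like "78% of conditions" can be tallied, assuming reliability means the share of self-reported cases that are also recorded in the EHR. The record layout, the "low"/"high" group labels, and the toy data are invented for illustration; the paper's exact definition may differ.

```python
from collections import defaultdict

# Assumption: reliability = share of patients who self-report a condition
# whose EHR also records it. Field names and toy records are made up.

def reliability_by_group(records):
    """records: iterable of (condition, group, self_report, in_ehr) tuples.
    Returns {condition: {group: reliability}} over self-reported cases."""
    hits = defaultdict(lambda: defaultdict(int))
    totals = defaultdict(lambda: defaultdict(int))
    for condition, group, self_report, in_ehr in records:
        if self_report:  # only self-reported cases count toward reliability
            totals[condition][group] += 1
            hits[condition][group] += int(in_ehr)
    return {c: {g: hits[c][g] / totals[c][g] for g in totals[c]} for c in totals}

def share_worse_for_low_access(rel):
    """Fraction of conditions where the 'low' group's reliability is below 'high'."""
    comparable = [c for c in rel if "low" in rel[c] and "high" in rel[c]]
    worse = sum(1 for c in comparable if rel[c]["low"] < rel[c]["high"])
    return worse / len(comparable)

records = [
    ("diabetes", "high", True, True), ("diabetes", "high", True, True),
    ("diabetes", "low", True, True), ("diabetes", "low", True, False),
    ("asthma", "high", True, True), ("asthma", "high", True, False),
    ("asthma", "low", True, True), ("asthma", "low", True, True),
]
rel = reliability_by_group(records)
print(share_worse_for_low_access(rel))  # 0.5
```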

Bar chart of different barriers to healthcare

How do disparities in healthcare access affect ML models? 💰📉🧐 We found that low access to care -> worse EHR data quality -> worse ML performance in a dataset of 134k patients. Work with Anna Zink (on the faculty job market rn!) + Hongzhou Luan, presented at #ML4H2024

20.12.2024 01:04

Very cool! Excited to check it out

18.12.2024 02:17

This year our CHEN lab holiday party featured cookie decorating! 🎄 Grateful to have such creative and inspiring students and collaborators. 🥰 Can you spot all of the ML-related cookies? 📈

12.12.2024 19:05

jamanetwork.com/journals/jam...

19.11.2024 01:05

Important caveats: 1) Very small sample size (6 medical cases) -> p=0.03 which is kinda sus, 2) human physicians in study had only 3 yrs of training, 3) no nuance of how to use LLMs for diag reasoning: clinical notes != clean cases; paper does not engage with this.

18.11.2024 21:08

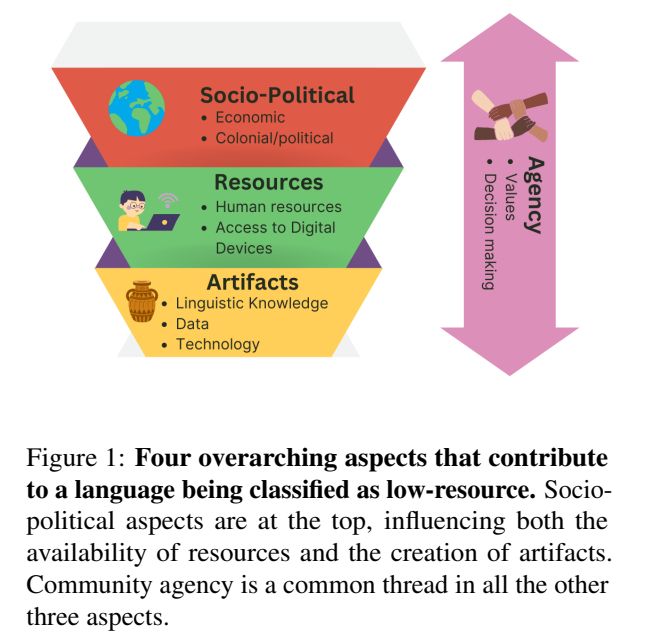

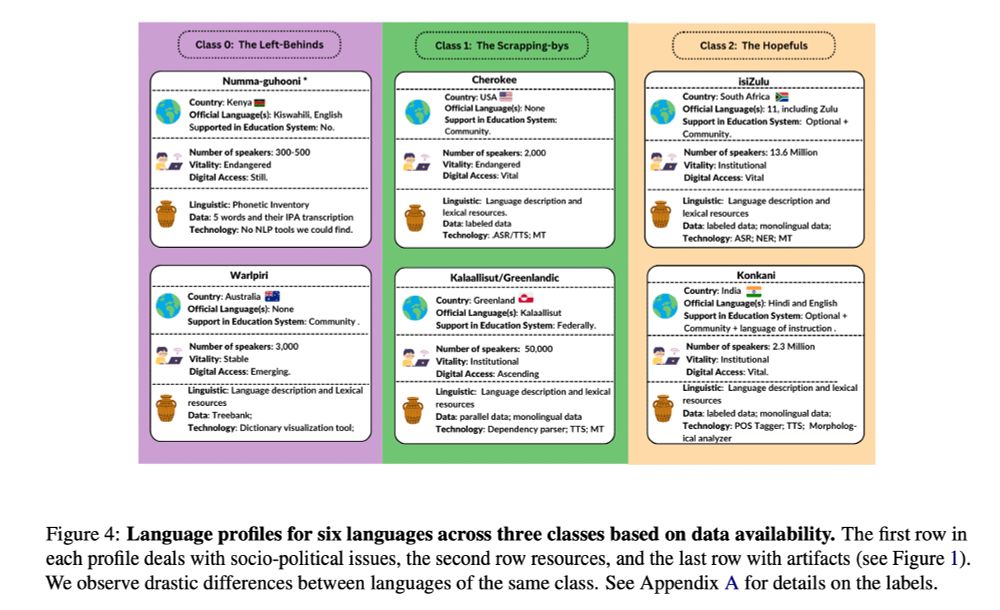

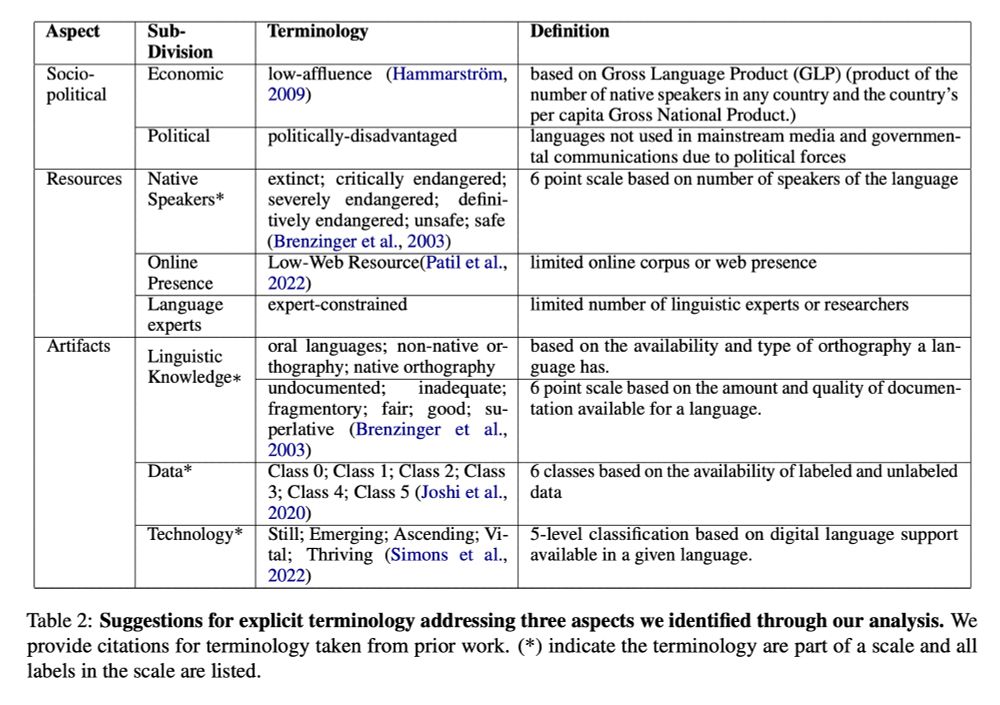

Informative recap of EMNLP papers related to multilingual models and low resource languages! Thanks @catherinearnett.bsky.social

15.11.2024 20:49

Fairness definitions differ across groups! For white respondents, fairness = "proximity" to assigned school. For Hispanic or Latino parents, fairness = "same rules" for everyone. Cool work by @nilou.bsky.social + students

14.11.2024 08:15

Thank you thank you!!

14.11.2024 03:32

Summary of #AMIA2024 presentations related to health equity and algorithmic fairness! Thanks for pulling together @alyssapradhan.bsky.social

14.11.2024 01:58

Super interested in this use case! Do you remember who the presenter was here?

14.11.2024 01:57

If you trained 10 models and their predictions for you varied wildly, would you have any faith in the model? Enjoyed this paper defining self-consistency and showing that enforcing it makes models fairer! Cool AAAI24 paper from A. Feder Cooper et al.

katelee168.github.io/pdfs/arbitra...

13.11.2024 17:21
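One way to make self-consistency concrete: given binary predictions from B models (e.g., trained on bootstrap resamples) for the same person, measure the fraction of distinct model pairs that agree. This is a rough sketch of the idea, not the paper's code; the function names and the 0.75 abstention threshold are hypothetical. Abstaining when agreement is low is shown as one way to "enforce" it.

```python
from itertools import combinations

def self_consistency(predictions):
    """Fraction of distinct model pairs that agree on one example."""
    pairs = list(combinations(predictions, 2))
    agree = sum(1 for a, b in pairs if a == b)
    return agree / len(pairs)

def self_consistency_closed_form(predictions):
    """Same quantity from counts: 1 - 2*B0*B1 / (B*(B-1))."""
    b = len(predictions)
    b1 = sum(predictions)  # models predicting 1
    b0 = b - b1            # models predicting 0
    return 1 - 2 * b0 * b1 / (b * (b - 1))

def predict_or_abstain(predictions, threshold=0.75):
    """Majority vote if the models are self-consistent enough, else None.
    The threshold value is a hypothetical choice for illustration."""
    if self_consistency(predictions) < threshold:
        return None  # abstain: the models disagree too much
    return int(sum(predictions) * 2 > len(predictions))

stable = [1] * 10           # all 10 models agree
split = [1] * 5 + [0] * 5   # models split evenly
print(self_consistency(stable))           # 1.0
print(round(self_consistency(split), 3))  # 0.444
print(predict_or_abstain(split))          # None
```

An even 5/5 split is the least self-consistent case: only 20 of the 45 model pairs agree.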

You got this Jessilyn! 🔥

12.11.2024 04:29

12 hours later, I've realized how much I've been missing a place like OldTwitter where you can share candid thoughts on research without bots clogging up the feed. Thanks Bluesky 💙

12.11.2024 02:12

Giving a talk tomorrow 11:40am PT at the Simons Domain Adaptation Workshop. I'll be speaking about our recent paper on the Data Addition Dilemma! Catch the talk on live-stream or recorded afterwards

Paper: arxiv.org/pdf/2408.04154

Workshop: simons.berkeley.edu/workshops/do...

12.11.2024 02:06

Creative AIES 2024 paper by @andreawwenyi.bsky.social that uses NLP to help uncover gender bias toward men vs. women defendants. Legal experts used NLP to build consensus and evidence on annotation rules. Could have relevant tie-ins to healthcare and bias in clinical notes.

12.11.2024 01:55

Kind words from a wise lady!

12.11.2024 01:39

First post! I'm recruiting PhD students this PhD admission cycle who want to work on: a) impactful ML methods for healthcare 🤖, b) computational methods to improve health equity ⚖️, or c) AI for women's health or climate health 🤰🌎

Apply via UC Berkeley CPH or EECS (AI-H) 🌉.

irenechen.net/join-lab/

12.11.2024 01:35

Can't figure out how to DM on bsky, but are you defining bioML as distinct from clinical here?

12.11.2024 00:56