EEML'25, our yearly machine learning summer school event, will be organised next summer in the beautiful city of Sarajevo - the place where East meets West 🇧🇦🇧🇦🇧🇦.

More details coming soon, please see the link in the thread!

@amanolache.bsky.social

ELLIS PhD Student @ IMPRS-IS/Uni Stuttgart; ML Research @ Bitdefender | https://andreimano.github.io/

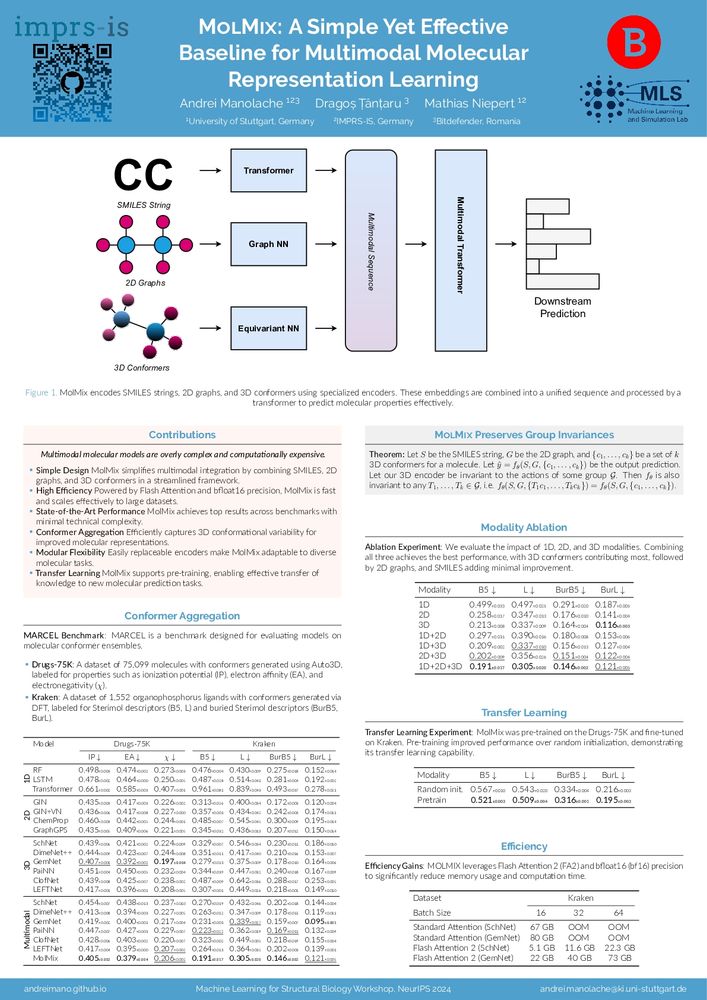

Catch my poster tomorrow at the NeurIPS MLSB Workshop! We present a simple (yet effective 😁) multimodal Transformer for molecules, supporting multiple 3D conformations & showing promise for transfer learning.

Interested in molecular representation learning? Let’s chat 👋!

* in the afternoon session 😅

11.12.2024 22:27 — 👍 0 🔁 0 💬 0 📌 0

Happening today, East Exhibit Hall A-C, poster #3110. Come say "Hi!"! 👋

11.12.2024 18:21 — 👍 1 🔁 0 💬 1 📌 0

6/6 Interested in learning more? Check out our preprint here: arxiv.org/pdf/2405.17311.

If you’d like to discuss, I’d be very happy to chat during the poster session in Vancouver! :)

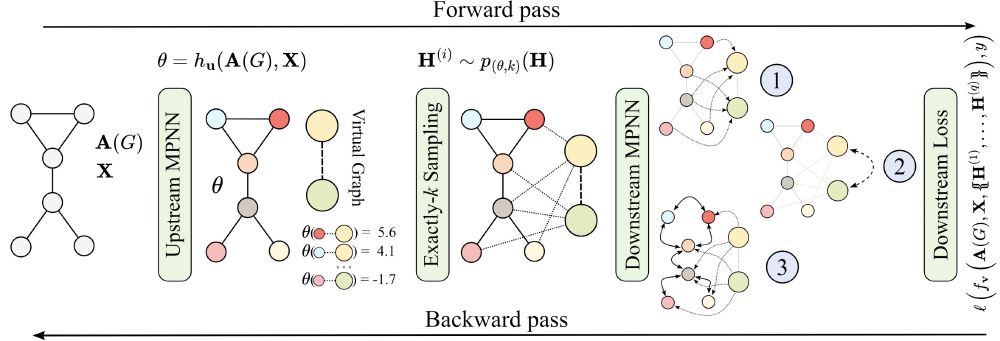

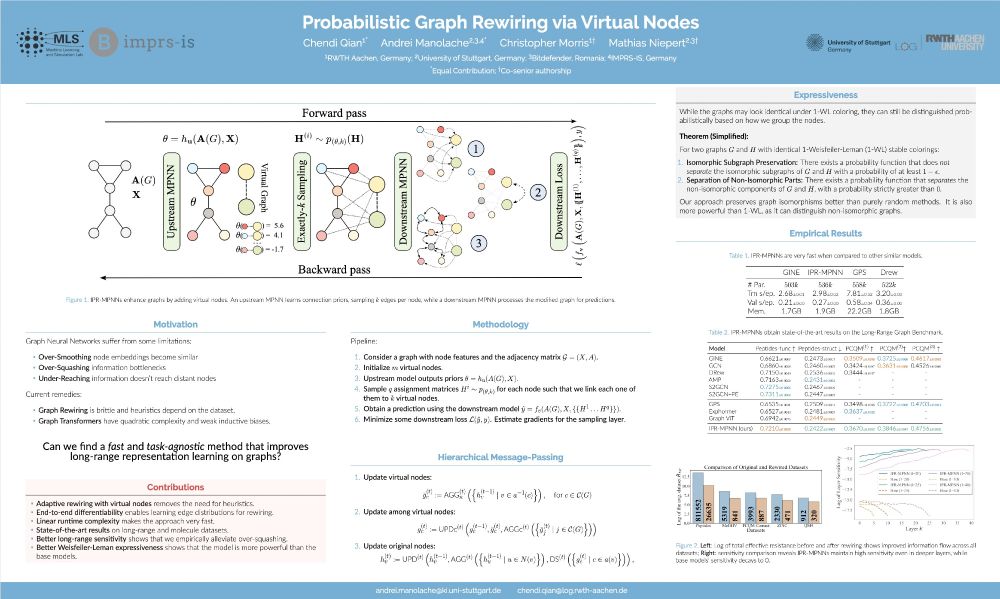

5/6 How it works: Probabilistic sampling connects original nodes to virtual ones, enhancing connectivity without explicit pairwise computations. The result is a framework that achieves both higher WL expressiveness and efficiency in graph-based learning.

07.12.2024 17:52 — 👍 0 🔁 0 💬 1 📌 0
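
For readers who want something concrete, the sampling idea in 5/6 can be sketched roughly as below. This is a toy illustration and not the paper's implementation: the function names, the Gumbel top-k sampler, the mean aggregation, and the exchange among virtual nodes are all assumptions made for the example, and the `scores` are a hypothetical stand-in for learned priors. The point it shows is that each original node is linked to only `k` of `m` virtual nodes, so the number of sampled edges is `n * k` rather than growing with the number of node pairs.

```python
import math
import random


def gumbel_noise(rng):
    """Draw one Gumbel(0, 1) sample; the clamp avoids log(0)."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return -math.log(-math.log(u))


def sample_assignments(scores, k, rng):
    """Pick k distinct virtual nodes per original node (Gumbel top-k).

    scores[i][j] is an unnormalised log-weight for linking original node i
    to virtual node j (hypothetical stand-in for learned priors).
    """
    assignments = []
    for row in scores:
        # Perturb each score with Gumbel noise, then keep the k largest.
        noisy = [s + gumbel_noise(rng) for s in row]
        ranked = sorted(range(len(row)), key=lambda j: noisy[j], reverse=True)
        assignments.append(sorted(ranked[:k]))
    return assignments


def virtual_round(x, assignments, m):
    """One illustrative exchange: nodes -> virtual nodes -> nodes."""
    sums = [0.0] * m
    counts = [0] * m
    for i, links in enumerate(assignments):
        for j in links:
            sums[j] += x[i]
            counts[j] += 1
    # Each virtual node averages the features of the nodes linked to it.
    v = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    # Assumption of this sketch: the few virtual nodes all exchange information.
    v_mean = sum(v) / m
    v = [vj + v_mean for vj in v]
    # Each original node reads back the mean of its linked virtual nodes.
    return [x[i] + sum(v[j] for j in links) / len(links)
            for i, links in enumerate(assignments)]


rng = random.Random(0)
n, m, k = 6, 2, 1                      # 6 original nodes, 2 virtual nodes
scores = [[0.0] * m for _ in range(n)]  # uniform scores, for illustration only
assignments = sample_assignments(scores, k, rng)
x = [float(i) for i in range(n)]        # scalar node features
out = virtual_round(x, assignments, m)
print(n * k, "sampled edges versus", n * (n - 1) // 2, "node pairs")
```

In a trained model the scores would come from a network and the sampling would need a gradient estimator; both are omitted here.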

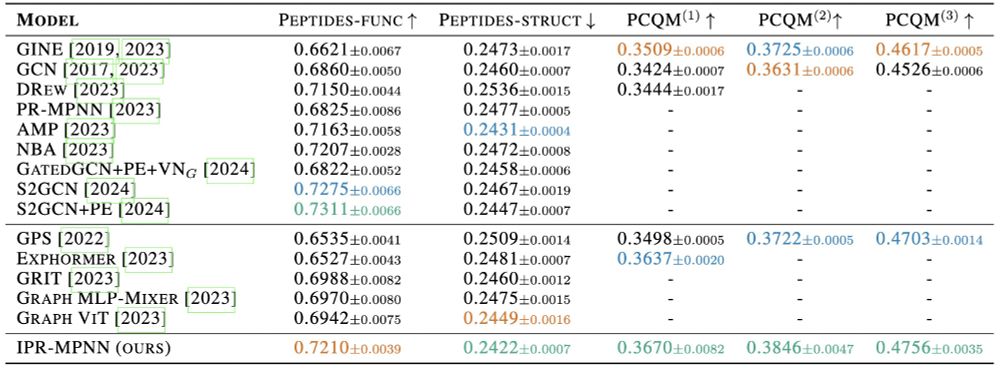

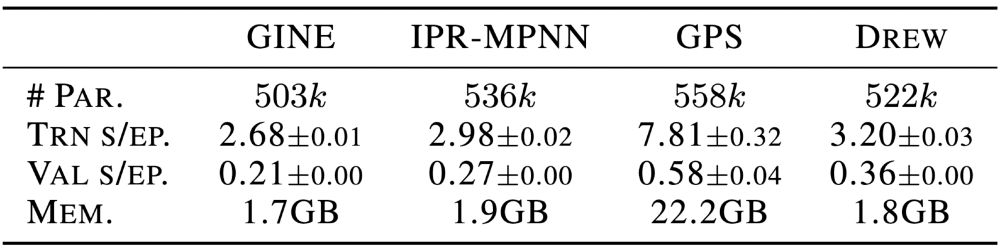

4/6 We demonstrate SOTA results on various benchmarks, effectively addressing over-squashing and under-reaching. IPR-MPNNs also surpass standard MPNNs in expressiveness, distinguishing complex graph structures—all while being faster and more memory-efficient than GTs. 🚀

07.12.2024 17:51 — 👍 0 🔁 0 💬 1 📌 0

3/6 Enter IPR-MPNNs: Our approach learns to rewire graphs probabilistically by adding virtual nodes. This eliminates the need for heuristics, making the method more flexible and task-adaptive, while maintaining computational efficiency. 🎯

07.12.2024 17:51 — 👍 1 🔁 0 💬 1 📌 0

2/6 Standard MPNNs struggle with long-range interactions, making them less effective for large, complex graphs. Transformers help but come with quadratic complexity, which is computationally expensive. Rewiring heuristics? Often brittle and task-specific.

07.12.2024 17:50 — 👍 0 🔁 0 💬 1 📌 0

1/6 We're excited to share our #NeurIPS2024 paper: Probabilistic Graph Rewiring via Virtual Nodes! It addresses key challenges in GNNs, such as over-squashing and under-reaching, while reducing reliance on heuristic rewiring. w/ Chendi Qian, @christophermorris.bsky.social @mniepert.bsky.social 🧵

07.12.2024 17:50 — 👍 30 🔁 7 💬 1 📌 0

Genuine question - why do captions go above tables? I've always assumed that this is due to wanting to make tables distinct from figures, but it seems like a convention.

29.11.2024 01:20 — 👍 2 🔁 0 💬 1 📌 1

👀👋

18.11.2024 15:35 — 👍 1 🔁 0 💬 1 📌 0