#BMVC2025

24.11.2025 06:40 — 👍 2 🔁 0 💬 0 📌 0

Key findings:

1️⃣ The LiDAR backbone architecture has a major impact on cross-domain generalization.

2️⃣ A single pretrained backbone can generalize to many domain shifts.

3️⃣ Freezing the pretrained backbone + training only a small MLP head gives the best results.
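The third finding describes a training recipe. The toy numpy sketch below shows only its mechanics and is not the authors' code. Everything in it is hypothetical: a fixed random projection stands in for the pretrained backbone, and the data are synthetic. Backbone features are computed once and never updated, while a small MLP head is trained with gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)
IN_DIM, FEAT_DIM, HIDDEN, N_CLASSES = 8, 32, 16, 3

# Hypothetical stand-in for a pretrained backbone: a fixed random projection + ReLU.
# Its weights are created once and never updated ("frozen").
W_BACKBONE = rng.normal(size=(IN_DIM, FEAT_DIM)) / np.sqrt(IN_DIM)


def backbone(x):
    """Frozen feature extractor: W_BACKBONE is read, never written."""
    return np.maximum(x @ W_BACKBONE, 0.0)


def init_head():
    """Small trainable MLP head: FEAT_DIM -> HIDDEN -> N_CLASSES."""
    return {
        "W1": rng.normal(size=(FEAT_DIM, HIDDEN)) * 0.1,
        "b1": np.zeros(HIDDEN),
        "W2": rng.normal(size=(HIDDEN, N_CLASSES)) * 0.1,
        "b2": np.zeros(N_CLASSES),
    }


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_head(head, x, y, steps=500, lr=0.1):
    """Full-batch gradient descent on cross-entropy, updating only the head."""
    feats = backbone(x)  # computed once, since the backbone never changes
    onehot = np.eye(N_CLASSES)[y]
    losses = []
    for _ in range(steps):
        h_pre = feats @ head["W1"] + head["b1"]
        h = np.maximum(h_pre, 0.0)
        p = softmax(h @ head["W2"] + head["b2"])
        losses.append(-np.mean(np.sum(onehot * np.log(p + 1e-12), axis=1)))
        # Backpropagation stops at the head: no gradient is computed for W_BACKBONE.
        g_logits = (p - onehot) / len(x)
        g_h = (g_logits @ head["W2"].T) * (h_pre > 0)
        grads = {
            "W2": h.T @ g_logits,
            "b2": g_logits.sum(axis=0),
            "W1": feats.T @ g_h,
            "b1": g_h.sum(axis=0),
        }
        for name, g in grads.items():
            head[name] -= lr * g
    return losses


# Toy usage: labels depend on the input, so the head has something to learn.
x = rng.normal(size=(120, IN_DIM))
y = np.argmax(x[:, :N_CLASSES], axis=1)
backbone_before = W_BACKBONE.copy()
head = init_head()
losses = train_head(head, x, y)
```

Because the backbone is frozen, its features can be precomputed once. Only the few head parameters are optimized.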

24.11.2025 05:00 — 👍 0 🔁 0 💬 1 📌 0

We systematically study how to best exploit vision foundation models (like DINOv2) for UDA on LiDAR data and identify practical “recipes” that consistently give strong performance across challenging real-world domain gaps.

24.11.2025 05:00 — 👍 1 🔁 0 💬 1 📌 0

🚗🌐 Working on domain adaptation for 3D point clouds / LiDAR?

We'll present MuDDoS at BMVC: a method that boosts multimodal distillation for 3D semantic segmentation under domain shift.

📍 BMVC

🕚 Monday, Poster Session 1: Multimodal Learning (11:00–12:30)

📌 Hadfield Hall #859

24.11.2025 05:00 — 👍 13 🔁 4 💬 1 📌 3

One of those internships is on Gromov $\delta$-hyperbolicity for GNNs, and will be co-supervised by Nicolas, Laetitia Chapel, and me. Take a look and spread the word!

07.11.2025 13:45 — 👍 10 🔁 3 💬 1 📌 0

Happy to represent Ukraine at #ICCV2025. Come see my poster today at 11:45 (#399)!

21.10.2025 19:35 — 👍 17 🔁 2 💬 0 📌 0

Our recent research will be presented at @iccv.bsky.social! #ICCV2025

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

17.10.2025 22:09 — 👍 8 🔁 5 💬 1 📌 1

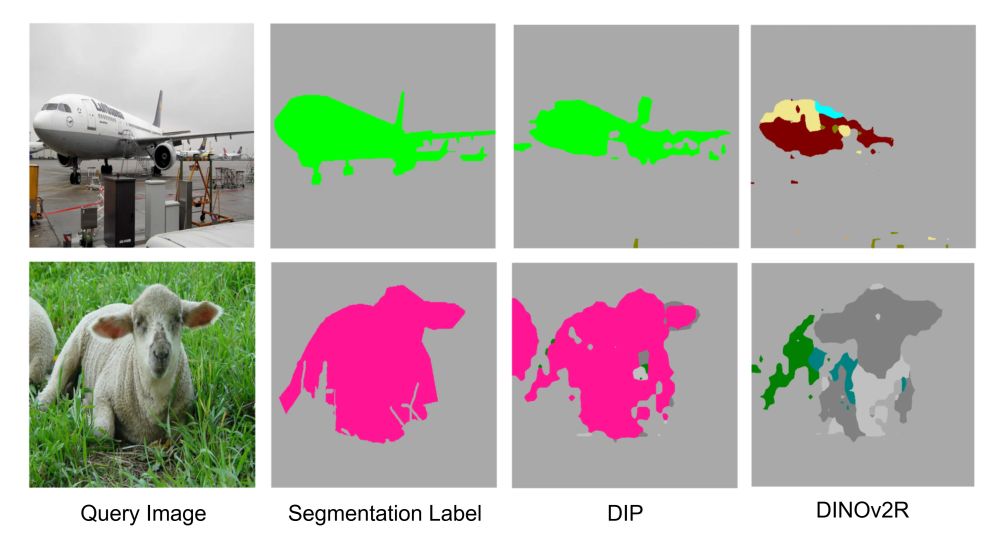

Come say hi at our poster on October 21st at 11:45, Poster Session 1 (#399)! We introduce unsupervised post-training of ViTs that enhances dense features for in-context tasks.

It's my first conference as a PhD student, and I'm really excited to meet new people.

18.10.2025 19:22 — 👍 6 🔁 1 💬 0 📌 0

ICCV 2025

5 papers, invited speaker, WiCV sponsor and Challenge sponsor

Aloha #iccv25 – here we come! Excited to be presenting new *St3R models PANSt3R, HAMSt3R & HOSt3R. We're also introducing ‘Geo4D’ and ‘LUDVIG’ 🫢, giving invited talks and mentoring! Full @iccv.bsky.social programme below (or tinyurl.com/asbn5b5d) 🧵1/9

18.10.2025 06:56 — 👍 17 🔁 4 💬 1 📌 1

Another great event for the @valeoai.bsky.social team: the PhD defense of Corentin Sautier.

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (self-supervised LiDAR feature learning), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks without labels).

07.10.2025 12:29 — 👍 9 🔁 4 💬 1 📌 1

Thank you @skamalas.bsky.social! Looking forward to my journey in Grenoble!

06.10.2025 22:15 — 👍 4 🔁 0 💬 0 📌 0

So excited to attend the PhD defense of @bjoernmichele.bsky.social at @valeoai.bsky.social! He’s presenting the results of his last 3 years of research on 3D domain adaptation: SALUDA (unsupervised DA), MuDDoS (multimodal UDA), TTYD (source-free UDA).

06.10.2025 12:18 — 👍 12 🔁 2 💬 2 📌 0

It’s PhD graduation season in the team!

Today, @bjoernmichele.bsky.social is defending his PhD on "Domain Adaptation for 3D Data"

Best of luck! 🚀

06.10.2025 12:09 — 👍 10 🔁 2 💬 0 📌 0

2025 ICCV Program Committee

Congratulations to our lab colleagues who have been named Outstanding Reviewers at #ICCV2025 👏

Andrei Bursuc @abursuc.bsky.social

Anh-Quan Cao @anhquancao.bsky.social

Renaud Marlet

Eloi Zablocki @eloizablocki.bsky.social

@iccv.bsky.social

iccv.thecvf.com/Conferences/...

02.10.2025 15:28 — 👍 20 🔁 6 💬 0 📌 1

Discovered that our RangeViT paper keeps being cited in what might be LLM-generated papers. The number of citations has increased rapidly over the last few weeks. Too good to be true.

These papers popped up on different platforms, mainly on ResearchGate, with ~80 papers in just 3 weeks.

[1/]

16.09.2025 10:20 — 👍 6 🔁 5 💬 1 📌 2

SKADA-Bench: Benchmarking Unsupervised Domain Adaptation Methods...

Unsupervised Domain Adaptation (DA) consists of adapting a model trained on a labeled source domain to perform well on an unlabeled target domain with some data distribution shift. While many...

SKADA-Bench: Benchmarking Unsupervised Domain Adaptation Methods with Realistic Validation On Diverse Modalities has been published in TMLR today 🚀. It was a huge team effort to design (and publish) an open-source, fully reproducible DA benchmark 🧵1/n. openreview.net/forum?id=k9F...

29.07.2025 12:54 — 👍 16 🔁 7 💬 1 📌 0

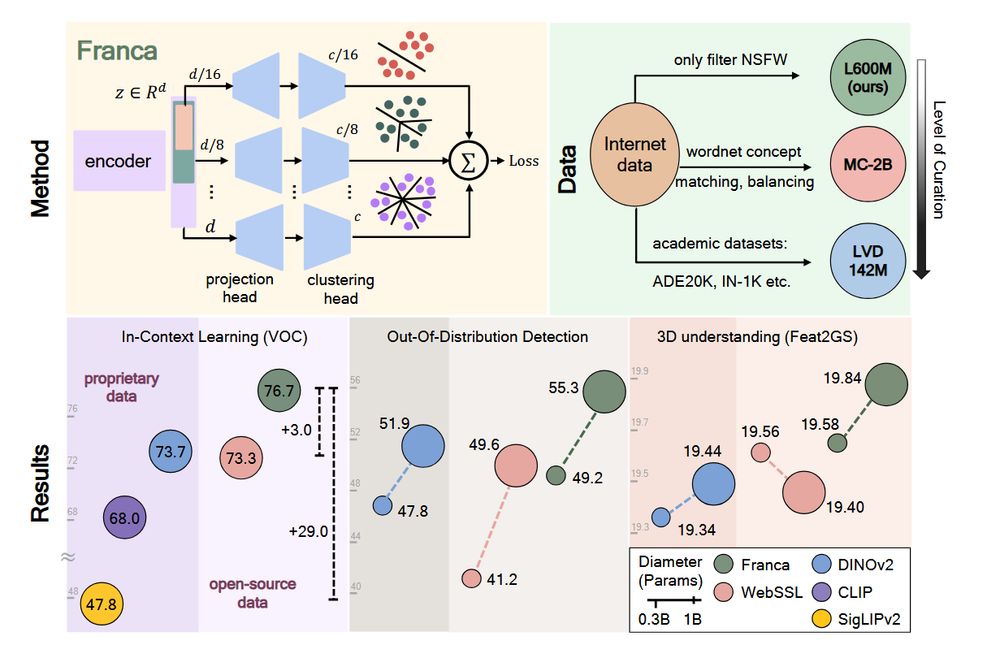

1/ Can open-data models beat DINOv2? Today we release Franca, a fully open-sourced vision foundation model. Franca, with a ViT-G backbone, matches (and often beats) proprietary models like SigLIPv2, CLIP, and DINOv2 on various benchmarks, setting a new standard for open-source research.

21.07.2025 14:47 — 👍 85 🔁 21 💬 2 📌 3

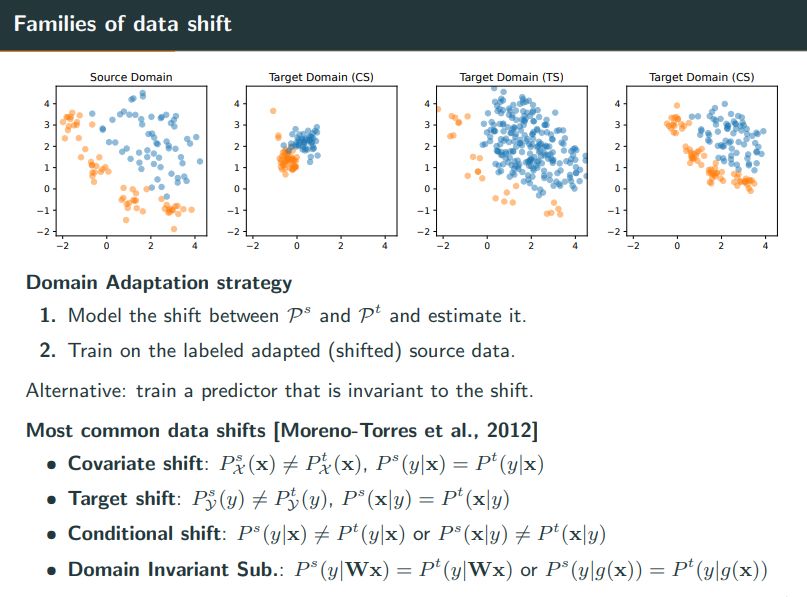

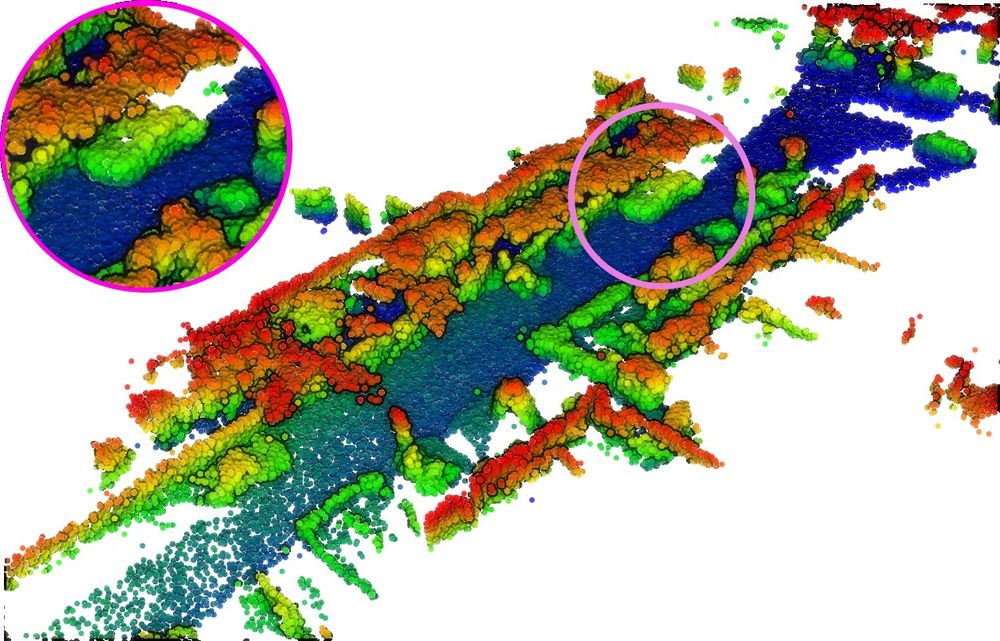

The visualisation of the shifts is really great! Although I am finishing a thesis on domain adaptation for 3D, the formal definitions of these shifts always remained a bit abstract to me, whereas the visualisation in the space(s) makes them much clearer.

02.07.2025 07:18 — 👍 1 🔁 0 💬 0 📌 0

The most important aspect when facing data shift is the type of shift present in the data. Below, I give a few examples of shifts and some existing methods to compensate for them. 🧵1/6

01.07.2025 09:38 — 👍 30 🔁 16 💬 2 📌 1

I really enjoyed it! Generating the dataset myself made it very easy to start and play with. Also, while I knew the ideas of flow matching at a high level, it was great to implement it once myself and see the steps in the code.

29.06.2025 14:56 — 👍 3 🔁 0 💬 1 📌 0

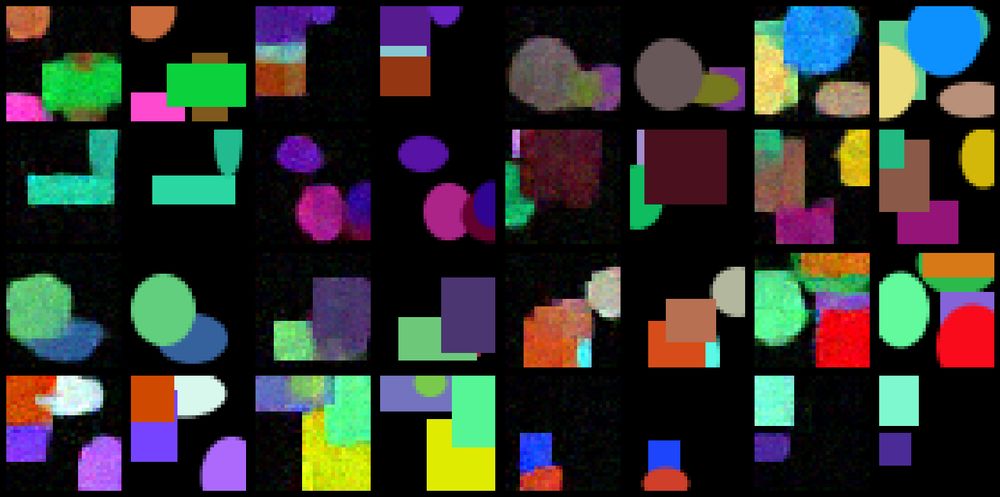

I wrote a notebook for a lecture/exercise on image generation with flow matching. The idea is to use FM to render images composed of simple shapes using their attributes (type, size, color, etc). Not super useful but fun and easy to train!

colab.research.google.com/drive/16GJyb...

Comments welcome!
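For readers new to flow matching, here is a minimal numpy sketch of one common variant: conditional flow matching with a straight-line path from Gaussian noise x0 to data x1. This is an assumption and not the notebook's code. The target velocity is x1 - x0, and sampling integrates the learned velocity with Euler steps. All function names are hypothetical. A real model would replace `velocity_fn` with a neural network, and the attribute conditioning described in the post is omitted.

```python
import numpy as np


def fm_batch(x1, rng):
    """Build one conditional flow-matching batch on a linear noise-to-data path.

    x1: data samples, shape (B, D); x0 is Gaussian noise of the same shape.
    Returns the interpolated points x_t, the times t, and the regression target u.
    """
    x0 = rng.normal(size=x1.shape)
    t = rng.uniform(size=(x1.shape[0], 1))
    x_t = (1.0 - t) * x0 + t * x1  # straight line from noise (t=0) to data (t=1)
    u = x1 - x0                    # constant velocity d x_t / dt along that line
    return x_t, t, u


def fm_loss(velocity_fn, x_t, t, u):
    """Mean squared error between predicted and target velocities."""
    return np.mean((velocity_fn(x_t, t) - u) ** 2)


def euler_sample(velocity_fn, x0, n_steps=50):
    """Integrate dx/dt = v(x, t) from t=0 (noise) to t=1 (data) with Euler steps."""
    x = x0.copy()
    dt = 1.0 / n_steps
    for k in range(n_steps):
        t = np.full((x.shape[0], 1), k * dt)
        x = x + dt * velocity_fn(x, t)
    return x


# Toy check: if the "dataset" is a single point c, the exact velocity field is
# v(x, t) = (c - x) / (1 - t), which equals the conditional target x1 - x0.
rng = np.random.default_rng(0)
c = np.array([[2.0, -1.0, 0.5]])


def oracle(x, t):
    return (c - x) / (1.0 - t)


x1 = np.repeat(c, 64, axis=0)
x_t, t, u = fm_batch(x1, rng)
loss = fm_loss(oracle, x_t, t, u)                       # ~0 for the oracle
samples = euler_sample(oracle, rng.normal(size=(5, 3)))  # all land on c
```

In training, `velocity_fn` would be a network fitted by minimizing `fm_loss` over many batches. The oracle here only checks that the path, target, and sampler agree with each other.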

27.06.2025 16:52 — 👍 41 🔁 8 💬 2 📌 0

Looks great! I am sure some of your colleagues in the lab would also be interested in having a look at these handhelds during a lunch break 😅

29.06.2025 10:15 — 👍 2 🔁 0 💬 1 📌 0

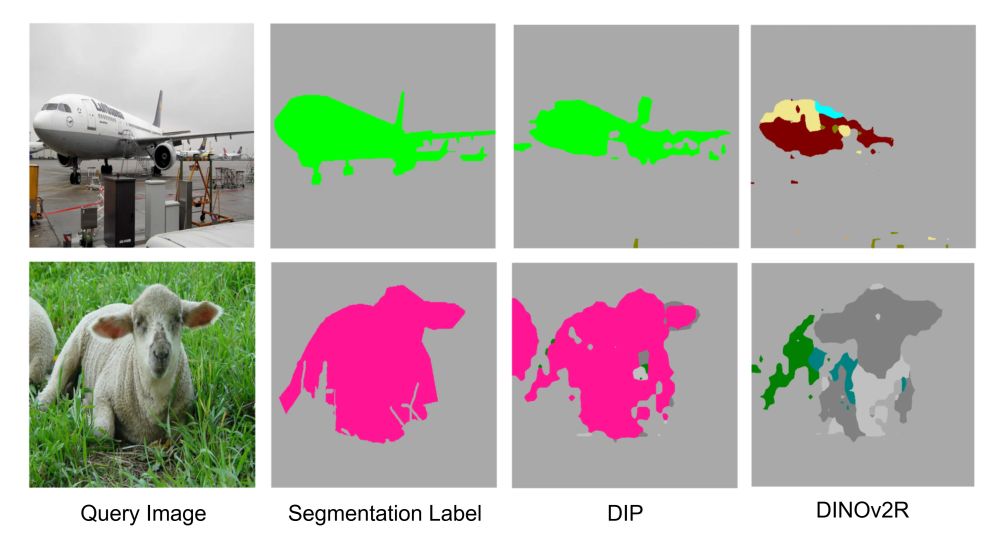

1/n 🚀 New paper out, accepted at #ICCV2025!

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

25.06.2025 19:21 — 👍 20 🔁 6 💬 1 📌 4

Thank you for highlighting this article. While it is written for AI-for-science, many of the author's remarks and statements, in my opinion, also strongly resonate with my own "AI subfield".

20.05.2025 09:34 — 👍 3 🔁 0 💬 0 📌 0

Behind every great conference is a team of dedicated reviewers. Congratulations to this year’s #CVPR2025 Outstanding Reviewers!

cvpr.thecvf.com/Conferences/...

10.05.2025 13:59 — 👍 48 🔁 12 💬 0 📌 19

In my experience, citing the work with a one-liner on why we think a quantitative comparison is not useful (e.g. because it is unclear how the results were obtained) was mostly not challenged by reviewers. But there were one-line reviews that listed this as a major weakness, which is frustrating.

29.04.2025 09:10 — 👍 1 🔁 0 💬 0 📌 0

Our paper "LiDPM: Rethinking Point Diffusion for Lidar Scene Completion" got accepted to IEEE IV 2025!

tldr: LiDPM enables high-quality LiDAR completion by applying a vanilla DDPM with tailored initialization, avoiding local diffusion approximations.

Project page: astra-vision.github.io/LiDPM/

28.04.2025 10:18 — 👍 12 🔁 4 💬 0 📌 1

Postdoctoral researcher @ CIIRC, CTU, Prague working in vision & language. Also robotics noob. PhD from University of Bristol. Ex. Samsung Research (SAIC-C). I love coffee and plants. And socks.

AI Researcher Samsung Research AI Center Cambridge 🇬🇧 Ph.D. @ KAUST. Prev. Adobe Research 🇺🇸, Qualcomm AI 🇳🇱.

Latino 🇨🇴. Let go FOMO, frustration & excitement via tweets

Daily-updated stream of AI news || Monitoring research blog sites || Research articles from ArXiv

Professor, University Of Copenhagen 🇩🇰 PI @belongielab.org 🕵️♂️ Director @aicentre.dk 🤖 Board member @ellis.eu 🇪🇺 Formerly: Cornell, Google, UCSD

#ComputerVision #MachineLearning

PhD Candidate@Chair for Machine Learning, University of Mannheim.

Working on Machine Learning and Computer Vision.

Webpage: https://www.uni-mannheim.de/dws/people/researchers/phd-students/shashank/

Views are my own 😄

Ph.D. Student @ University of Freiburg | Research Scientist @ Continental AI Lab

PhD Student in #ComputerVision at the University of Bologna and Verizon Connect. Entre Firenze e Compostela.

PhD student @ Uni Tübingen and IMPRS-IS, working on 3D vision

patriciagschossmann.github.io

PhD student @ TUM with Daniel Cremers

PhD @RWTH.bsky.social | 3D Computer Vision

🔗 https://ka.codes

Computer Vision Engineer @ Neurolabs

Professor of Computer Vision, @BristolUni. Senior Research Scientist @GoogleDeepMind - passionate about the temporal stream in our lives.

http://dimadamen.github.io

From SLAM to Spatial AI; Professor of Robot Vision, Imperial College London; Director of the Dyson Robotics Lab; Co-Founder of Slamcore. FREng, FRS.

Research faculty @ImagineENPC. https://gulvarol.github.io/

Associate Professor in EECS at MIT. Neural nets, generative models, representation learning, computer vision, robotics, cog sci, AI.

https://web.mit.edu/phillipi/

Associate Professor at University of 📍Catania, Sicily, Italy🇮🇹 Interested in Computer Vision and Egocentric Vision. Member of @ellis.eu, part of the #EPIC-KITCHENS, #EGO4D, #EGO-EXO4D teams. 🔗 https://antoninofurnari.it

Researcher in machine learning