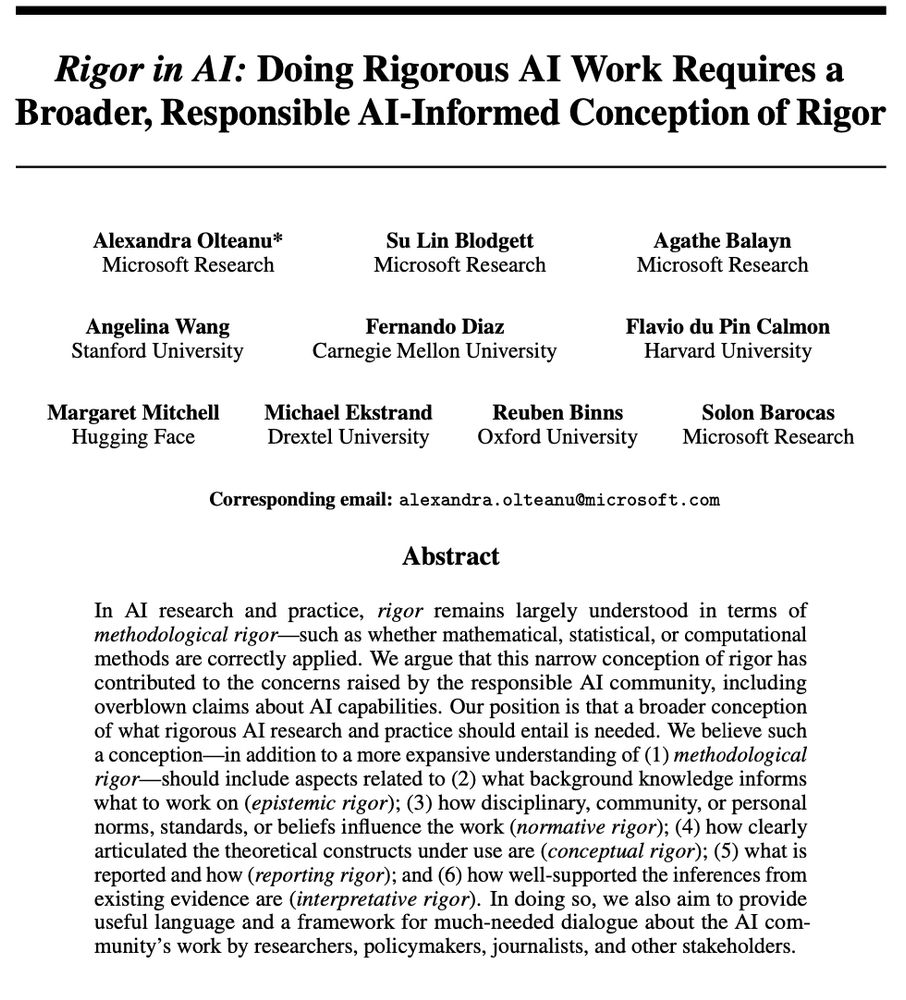

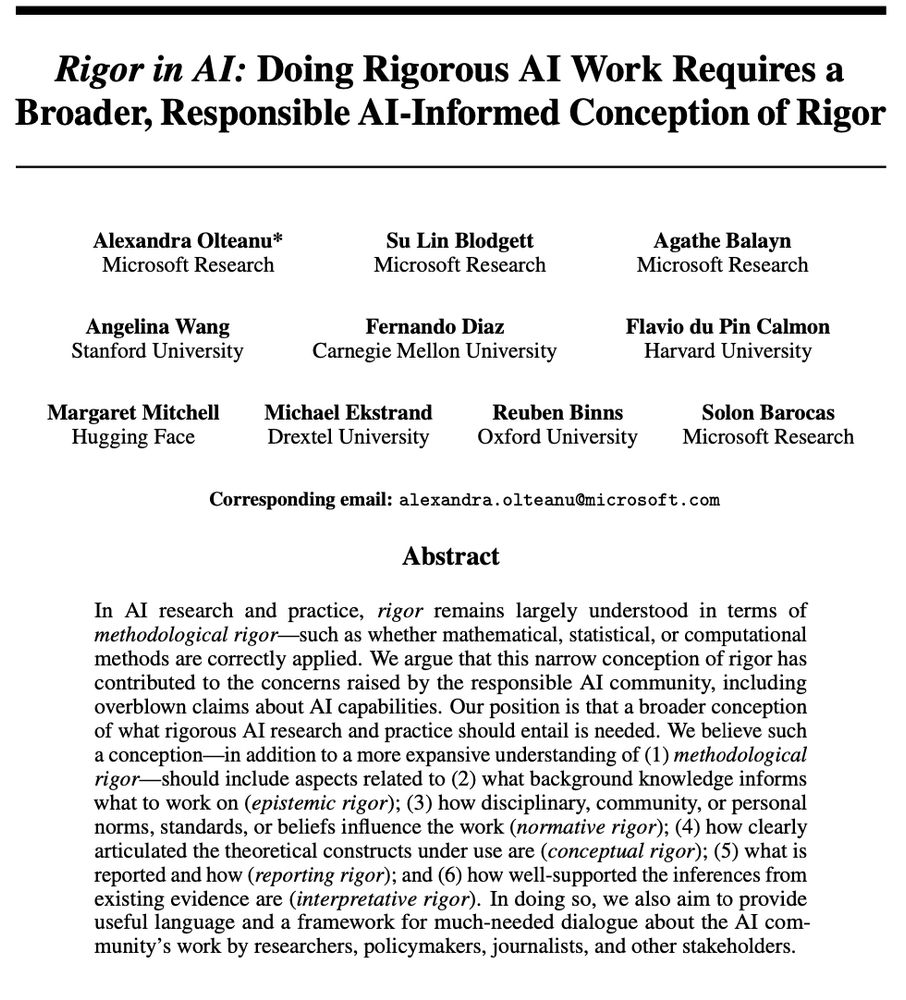

Print screen of the first page of a paper pre-print titled "Rigor in AI: Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor" by Olteanu et al. Paper abstract: "In AI research and practice, rigor remains largely understood in terms of methodological rigor -- such as whether mathematical, statistical, or computational methods are correctly applied. We argue that this narrow conception of rigor has contributed to the concerns raised by the responsible AI community, including overblown claims about AI capabilities. Our position is that a broader conception of what rigorous AI research and practice should entail is needed. We believe such a conception -- in addition to a more expansive understanding of (1) methodological rigor -- should include aspects related to (2) what background knowledge informs what to work on (epistemic rigor); (3) how disciplinary, community, or personal norms, standards, or beliefs influence the work (normative rigor); (4) how clearly articulated the theoretical constructs under use are (conceptual rigor); (5) what is reported and how (reporting rigor); and (6) how well-supported the inferences from existing evidence are (interpretative rigor). In doing so, we also aim to provide useful language and a framework for much-needed dialogue about the AI community's work by researchers, policymakers, journalists, and other stakeholders."

We have to talk about rigor in AI work and what it should entail. The reality is that impoverished notions of rigor not only lead to one-off undesirable outcomes but can also have a deeply formative impact on the scientific integrity and quality of both AI research and practice 1/

18.06.2025 11:48 — 👍 62 🔁 18 💬 2 📌 3

At the #HEAL workshop, I'll present "Systematizing During Measurement Enables Broader Stakeholder Participation" on the ways we can further structure LLM evaluations and open them for deliberation. A project led by @hannawallach.bsky.social

25.04.2025 22:57 — 👍 3 🔁 1 💬 0 📌 0

These results can serve to refine current AI regulations that touch upon "trust" **within the AI supply chain** and the "trustworthiness" of the resulting AI systems.

agathe-balayn.github.io/assets/pdf/b...

25.04.2025 22:57 — 👍 1 🔁 0 💬 1 📌 0

At the main conference, I'll present our work "Unpacking Trust Dynamics in the LLM Supply Chain: An Empirical Exploration to Foster Trustworthy LLM Production And Use" (honorable mention) on how trust relations in the LLM supply chain affect the resulting AI system.

25.04.2025 22:57 — 👍 1 🔁 0 💬 1 📌 0

At the #STAIG workshop, I'll discuss our empirical study of *pig farming* supply chains. 🐷

We show how inconspicuous software engineering practices might transform farming environments negatively, and how the harm-based approach to AI regulation might not make it possible to attend to these transformations.

25.04.2025 22:57 — 👍 0 🔁 0 💬 1 📌 0

I will be at #CHI25 in person this week 🇯🇵

I'm looking forward to chatting about **AI supply chains** from socio-technical & organizational / regulatory & governance / political economic lenses.

I'll present my work at the main conference (honorable mention), and attend the #HEAL and #STAIG workshops.

25.04.2025 22:57 — 👍 9 🔁 2 💬 1 📌 0

phd student-worker at penn, nsf grfp fellow, spelman alum. autonomy and identity in algorithmic systems.

they/she. 🧋 🍑 🧑🏾💻

https://psampson.net

PhD student @ Cornell info sci | Sociotechnical fairness & algorithm auditing | Previously Stanford RegLab, MSR FATE, Penn | https://emmaharv.github.io/

Exploring workforce skills for Industry 5.0. We are a #HorizonEU initiative funded by EU HaDEA

https://bridges5-0.eu/

Postdoctoral Researcher in Design, HCI, Futures and Social Computing at the University of Helsinki and Aalto University

Current: role of values and ideologies in the design of technologies.

Active at the Research Professionals Union

https://felix.science

👉 Welcome to @SlothSpree 🦥💚

🌿 We share daily #sloth content! 🦥📸

👉 Follow us if you really love sloths 💚🦥💤

PhD student at Johns Hopkins University

Alum of McGill University & MILA

Working on NLP Evaluation, Responsible AI, Human-AI interaction

she/her 🇨🇦

public interest technologist // luddite hacker.

information science phd at cornell tech.

inequality, ai, and the information ecosystem.

🤠 traeve.com 🤠

Designer turned design researcher. Postdoc at TU Delft. Exploring contestable AI. www.contestable.ai

Math Assoc. Prof. (on leave, Aix-Marseille, France)

Interest: Prob / Stat / ANT. See: https://sites.google.com/view/sebastien-darses/research?authuser=0

Teaching Project (non-profit): https://highcolle.com/

The AI community building the future!

Applied #IR & #NLP Research @zeta-alpha.bsky.social, making search good.

CS PhD @tudelft. Former @naverlabseurope.bsky.social, @bloomberg.com

CNF✈️AMS

I create technology that helps users. http://kennethhuang.cc/

We are an independent nonprofit organization that believes collaboration opportunities and research training should be openly accessible and free.

Web: https://mlcollective.org/

Twitter: @ml_collective

Responsible AI & Human-AI Interaction

Currently: Research Scientist at Apple

Previously: Princeton CS PhD, Yale S&DS BSc, MSR FATE & TTIC Intern

https://sunniesuhyoung.github.io/

Professor in Artificial Intelligence, The University of Queensland, Australia

Human-Centred AI, Decision support, Human-agent interaction, Explainable AI

https://uqtmiller.github.io

Prof at Uni Bayreuth, Germany. Human-computer interaction, interactive AI. Building future tools for creative people, while critically evaluating the impact of AI on users, workflows, and outcomes.

AI is not inevitable. We DAIR to imagine, build & use AI deliberately.

Website: http://dair-institute.org

Mastodon: @DAIR@dair-community.social

LinkedIn: https://www.linkedin.com/company/dair-institute/