Abstract: Under the banner of progress, products have been uncritically adopted or even imposed on users: in past centuries with tobacco and combustion engines, and in the 21st century with social media. For these collective blunders, we now regret our involvement or apathy as scientists, and society struggles to put the genie back in the bottle. Currently, we are similarly entangled with artificial intelligence (AI) technology. For example, software updates are rolled out seamlessly and non-consensually, Microsoft Office is bundled with chatbots, and we, our students, and our employers have had no say, as it is not considered a valid position to reject AI technologies in our teaching and research. This is why in June 2025, we co-authored an Open Letter calling on our employers to reverse and rethink their stance on uncritically adopting AI technologies. In this position piece, we expound on why universities must take their role seriously to a) counter the technology industry’s marketing, hype, and harm; and to b) safeguard higher education, critical thinking, expertise, academic freedom, and scientific integrity. We include pointers to relevant work to further inform our colleagues.

Figure 1. A cartoon set-theoretic view of various terms (see Table 1) used when discussing the superset AI (black outline, hatched background): LLMs are in orange; ANNs are in magenta; generative models are in blue; and finally, chatbots are in green. Where these intersect, the colours reflect that, e.g. generative adversarial network (GAN) and Boltzmann machine (BM) models are in the purple subset because they are both generative and ANNs. In the case of proprietary closed-source models, e.g. OpenAI’s ChatGPT and Apple’s Siri, we cannot verify their implementation, and so academics can only make educated guesses (cf. Dingemanse 2025). Undefined terms used above: BERT (Devlin et al. 2019); AlexNet (Krizhevsky et al. 2017); A.L.I.C.E. (Wallace 2009); ELIZA (Weizenbaum 1966); Jabberwacky (Twist 2003); linear discriminant analysis (LDA); quadratic discriminant analysis (QDA).

Table 1. Below, some of the typical terminological disarray is untangled. Importantly, none of these terms is orthogonal to the others, nor do they exclusively pick out the types of products we may wish to critique or proscribe.

Protecting the Ecosystem of Human Knowledge: Five Principles

Finally! 🤩 Our position piece: Against the Uncritical Adoption of 'AI' Technologies in Academia:

doi.org/10.5281/zeno...

We unpick the tech industry’s marketing, hype, & harm; and we argue for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity.

1/n

06.09.2025 08:13 — 👍 3242 🔁 1652 💬 100 📌 285

Once again I request, please, AI which can do something useful like clean bathrooms or my cats' litter trays. An "artificial intelligence-powered online encyclopedia" is NOT useful, especially when it "promotes far-right perspectives & favors Musk's viewpoints"

en.wikipedia.org/wiki/Grokipe...

28.10.2025 10:47 — 👍 22 🔁 9 💬 1 📌 2

Detail of the "Glasses Apostle" painting in the altarpiece of the church of Bad Wildungen, Germany. Painted by Conrad von Soest in 1403, the painting is considered to be among the oldest depictions of eyeglasses north of the Alps

Nothing to see here? Well, this is a slow-moving 🧵 for #skystorians and others about #eyeglasses of the past: how people read in the past, where to buy eyeglasses, and what to do with them in general. The hashtag is #HowToDoWithGlassesInThePast

Let's roll.

27.10.2025 10:28 — 👍 254 🔁 101 💬 7 📌 11

From Mexico to Ireland, Fury Mounts Over a Global A.I. Frenzy

Very glad to see this piece by the @nytimes.com on data center struggles from Mexico to Ireland:

"Government support worldwide has helped tech firms build with little accountability, said Ana Valdivia, an Oxford University lecturer studying data center development."

www.nytimes.com/2025/10/20/t...

21.10.2025 07:40 — 👍 6 🔁 4 💬 0 📌 0

Monotone image of man asleep at a desk surrounded by monsters.

This well-known aquatint by Goya came to mind as symbolising where we are right now. It’s called The Sleep of Reason Produces Monsters.

20.10.2025 06:07 — 👍 130 🔁 31 💬 6 📌 0

This morning my ChatGPT quota was inexplicably exhausted.

It took a while but I pieced it together. Voice mode somehow got activated when I went to bed.

The bot then engaged in a 10 hour conversation with my snoring dog, answering questions the pup wasn’t asking and praising him for his insight.

18.10.2025 09:04 — 👍 3330 🔁 581 💬 94 📌 84

And now what is next?

18.10.2025 11:50 — 👍 0 🔁 0 💬 0 📌 0

🫶🫶

18.10.2025 11:00 — 👍 1 🔁 0 💬 1 📌 0

It just started!

14.10.2025 19:09 — 👍 1 🔁 0 💬 0 📌 0

My favorite #sport is running from one platform to another to catch my #train. 👟

#HighAdrenaline #NS.nl

13.10.2025 07:57 — 👍 1 🔁 0 💬 0 📌 0

Added 🗓✅️

09.10.2025 19:01 — 👍 0 🔁 0 💬 0 📌 1

This week was hectic. Puuuf.🫠

03.10.2025 15:43 — 👍 0 🔁 0 💬 0 📌 0

#AmCAT is proudly developed by the @societal-analytics.nl

You can learn more about it in the:

* Book: amcat.nl/book/

* Blog post: societal-analytics.nl/blogs/202501...

30.09.2025 09:52 — 👍 5 🔁 3 💬 0 📌 0

Sofia Gil-Clavel stands at a podium presenting AmCAT at the 3rd MEDem Conference. Behind her, a slide shows the AmCAT team (Kasper Welbers, Wouter van Atteveldt, Johannes Gruber, Sofia Gil-Clavel) with the tagline: “Developed by researchers for researchers, society, and data savvy users.” Logos of MEDem, VU Amsterdam, and the Societal Analytics Lab are displayed at the top.

Sebastian Ziaja stands at a podium presenting HarDIS (Harmony in the Democratic Ideological Space) at the 3rd MEDem Conference. A slide behind him shows the HarDIS team (Lea Kaftan, Paul Bederke, Selçuk Timur Uluer) with the logos of MEDem, GESIS Leibniz Institute for the Social Sciences, and OSCARS (the funding initiative).

Day 2 of the #MEDemConference at @gesis.org starts with powerful tool demos:

🔍 AmCAT @sof14g1l.bsky.social on enabling large-scale text analysis of media & political debates.

🌐 HarDIS @sziaja.bsky.social on harmonizing and sustaining cross-national democracy data (surveys, parties, experts).

30.09.2025 09:02 — 👍 11 🔁 3 💬 0 📌 2

This is such a good example.

25.09.2025 11:28 — 👍 0 🔁 0 💬 0 📌 0

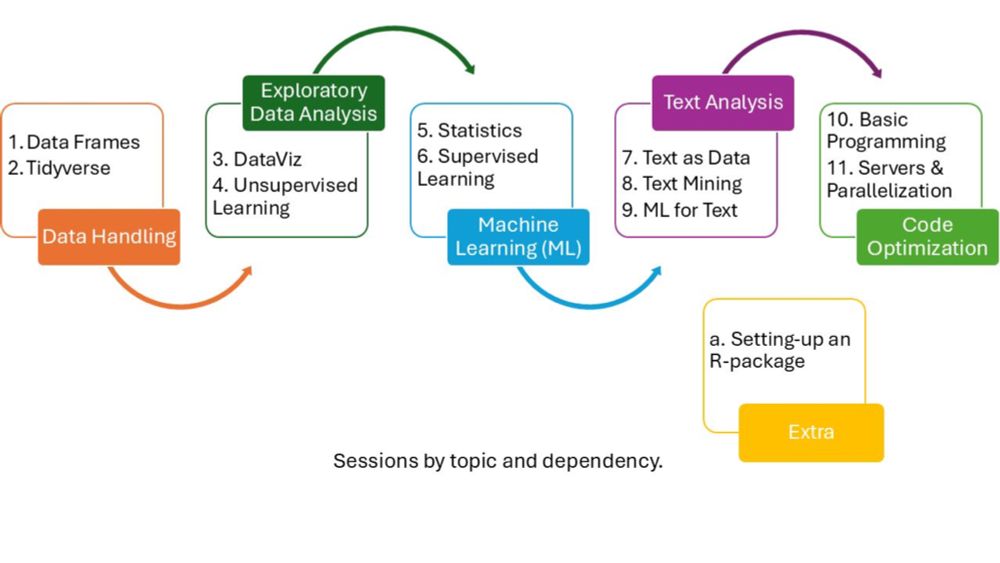

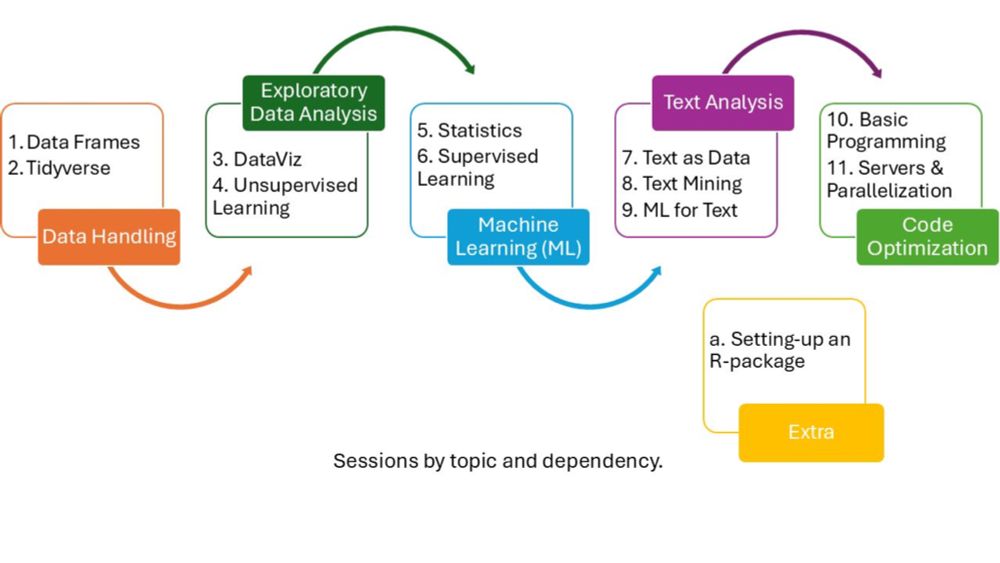

Did you find it useful? Then, do not forget to cite it. (:

Gil-Clavel, S. (2025). R-Course: R for Social Scientists (Version 1.0) [Data set]. github.com/SofiaG1l/R_C.... doi: doi.org/10.5281/zeno....

24.09.2025 06:03 — 👍 1 🔁 0 💬 0 📌 0

Are you a social scientist who wants to learn R?

Then, you may find my course "R for Social Scientists" very useful!

I designed this course to give social scientists all that is necessary to start using R in their everyday work.

github.com/SofiaG1l/R_C...

24.09.2025 06:02 — 👍 18 🔁 5 💬 1 📌 1

That secret code shared among those who read a book on public transport. 📖🚄

20.09.2025 12:22 — 👍 0 🔁 0 💬 0 📌 0

So true

19.09.2025 07:04 — 👍 2 🔁 0 💬 0 📌 0

👏🏽👏🏽👏🏽

10.09.2025 06:54 — 👍 1 🔁 0 💬 0 📌 0

AI as a voting advisor? Chatbot mixes up VVD and PVV

To avoid having to dig through hundreds of pages of party manifestos, AI chatbots are a tempting tool.

"Large language models like ChatGPT contain all kinds of noise," says Claes de Vreese, professor of Artificial Intelligence and Society at the University of Amsterdam. "You get answers that incorporate opinions. The answers are polluted."

nos.nl/nieuwsuur/co...

10.09.2025 06:53 — 👍 0 🔁 0 💬 0 📌 0

03.09.2025 14:35 — 👍 24671 🔁 4511 💬 250 📌 207

Summer break is over. 🥹

01.09.2025 06:00 — 👍 1 🔁 0 💬 0 📌 0

my keynote happening in a few mins. registration here to stream it

aiforgood.itu.int/summit25/reg...

08.07.2025 08:55 — 👍 111 🔁 21 💬 9 📌 6

“The company cut millions of print books from their bindings, scanned them into digital files, and threw away the originals solely for the purpose of training AI.”

28.06.2025 10:30 — 👍 104 🔁 45 💬 10 📌 8

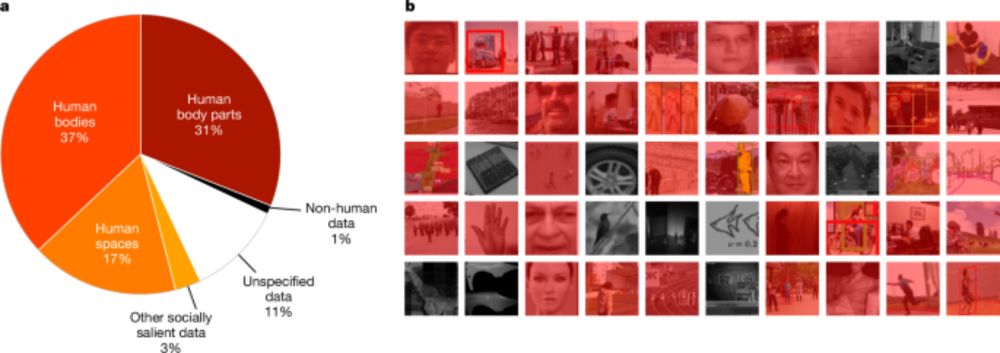

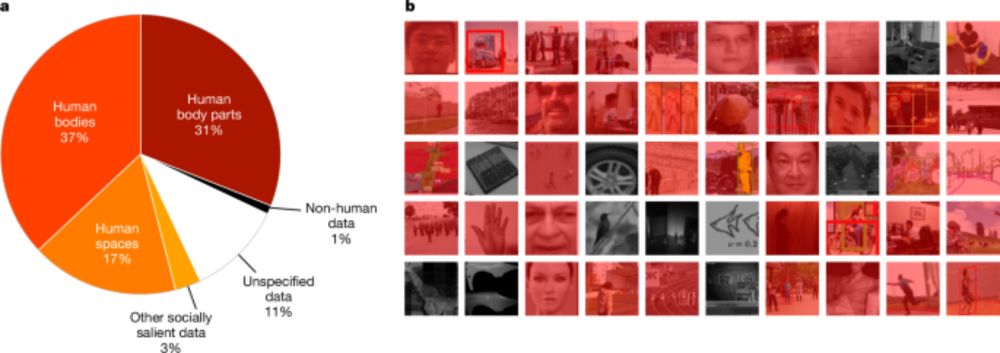

Computer-vision research powers surveillance technology - Nature

An analysis of research papers and citing patents indicates the extensive ties between computer-vision research and surveillance.

New paper hot off the press www.nature.com/articles/s41...

We analysed over 40,000 computer vision papers from CVPR (the longest-standing CV conf) & associated patents, tracing pathways from research to application. We found that 90% of papers & 86% of downstream patents power surveillance

1/

25.06.2025 17:29 — 👍 814 🔁 472 💬 27 📌 71

It is possible to cycle everywhere, regardless of the occasion and the weather.

27.06.2025 09:01 — 👍 0 🔁 0 💬 1 📌 0

https://olivia.science

assistant professor of computational cognitive science · she/they · cypriot/kıbrıslı/κυπραία · σὺν Ἀθηνᾷ καὶ χεῖρα κίνει

AI is not inevitable. We DAIR to imagine, build & use AI deliberately.

Website: http://dair-institute.org

Mastodon: @DAIR@dair-community.social

LinkedIn: https://www.linkedin.com/company/dair-institute/

I like to poke AI systems (sociotechnical translation and AI safety/ethics evaluation)

Researcher @aial.ie @tcddublin.bsky.social | Formerly Research Engineer @ DeepMind

Environmental scientist @University of Buenos Aires and @Humboldt University, Berlin. Working on land use change, forests, and environmental governance.

Communication scholar @ Uni Vienna. Political communication & computational methods.

Political Scientist: democratic crises and political behaviour

Head of Survey Data Curation at @gesisorg.bsky.social

Professor of Empirical Social Research at the University of Cologne

Political psychology | PostDoc researcher @ Institute for Interdisciplinary Research on Conflict and Violence • Uni Bielefeld | Peace and Conflict - Democracy - Uncertainty, Insecurity and Crises

https://www.researchgate.net/profile/Elif-Sandal-Oenal

Assistant Prof of Computational Social Science Uni Bremen

guest fellow WZB Berlin | PhD EUI | interested in protest, party competition, populism #FirstGen

International research centre for regional development and planning located in the heart of Stockholm. We are bridging policy and research for a thriving, sustainable Nordic region and beyond.

We study human populations. BSPS is supported by the Population Investigation Committee, who run Population Studies - a journal of demography http://popstudies.net

Find out about BSPS Conference, Awards, Grants & more at http://www.bsps.org.uk/bsps

/Engaged scholar in critical demography and environmental thought

/Decolonial and eco-feminist, obviously

/Scientific Advisor Just Transition

/Climate Justice Advocate

/Author of ‘Consuming Like Rabbits. The Persistent Myth of Overpopulation’

NYT bestselling author of EMPIRE OF AI: empireofai.com. ai reporter. national magazine award & american humanist media award winner. words in The Atlantic. formerly WSJ, MIT Tech Review, KSJ@MIT. email: http://karendhao.com/contact.

Political Scientist, Providence College

Strategic Co-Director, Climate and Community Institute

Author / Resource Radicals (Duke UP 2020)

Co-Author / A Planet to Win (Verso 2019)

Forthcoming / Extraction: The Frontiers of Green Capitalism (WW Norton)

Professor, researcher, maker of things

~Book: Atlas of AI

~Installation: Calculating Empires

~NYT video: AI's Real Environmental Impact https://www.nytimes.com/2025/09/26/opinion/ai-quartz-mining-hurricane-helene.html

✍🏽 • RACE AFTER TECHNOLOGY: Abolitionist Tools for the New Jim Code • VIRAL JUSTICE: How We Grow the World We Want • IMAGINATION: A Manifesto 📚www.ruhabenjamin.com

Assoc. Prof. CEDUA Colmex | Coordinator MIGDEP @migdepcolmex.bsky.social | AE IMR | demography, sociology, migration, family, policy

https://linktr.ee/cmasferrer

Official account for the Computational Methods Division of @icahdq.bsky.social. Posts by @shugars.bsky.social.

Lecturer in AI, Government & Policy at the OII (University of Oxford) | Associate Editor at Big Data & Society | Investigating algorithmic accountability | Writing a book on the Materiality of AI

https://www.oii.ox.ac.uk/people/profiles/ana-valdivia

Assistant professor at Georgia State University, formerly at BYU. 6 kids. Study NGOs, human rights, #PublicPolicy, #Nonprofits, #Dataviz, #CausalInference.

#rstats forever.

andrewheiss.com

Signal: andrewheiss.01

Assistant Professor of Sociology, NYU. Core Faculty, CSMaP. Research Fellow Oxford Sociology. Computational social science, Methods, Conflict, Communication. Webpage: cjbarrie.com
