🚨The Formalism-Implementation Gap in RL research🚨

Lots of progress in RL research over last 10 years, but too much performance-driven => overfitting to benchmarks (like the ALE).

1⃣ Let's advance science of RL

2⃣ Let's be explicit about how benchmarks map to formalism

1/X

28.10.2025 13:55 — 👍 41 🔁 4 💬 1 📌 2

Put CASH on Bandits: A Max K-Armed Problem for Automated Machine Learning

The Combined Algorithm Selection and Hyperparameter optimization (CASH) is a challenging resource allocation problem in the field of AutoML. We propose MaxUCB, a max $k$-armed bandit method to trade o...

I am happy to share that our paper "Put CASH on Bandits: A Max K-Armed Problem for Automated Machine Learning" has been accepted at NeurIPS 2025!

Endless thanks to my amazing co-authors @claireve.bsky.social and @keggensperger.bsky.social

📄 Read it on arXiv: arxiv.org/abs/2505.05226

(1/3)

06.10.2025 16:53 — 👍 7 🔁 1 💬 1 📌 1

a close up of a sad cat with the words pleeeaasse written below it

ALT: a close up of a sad cat with the words pleeeaasse written below it

cvoelcker.de/blog/2025/re...

I finally gave in and made a nice blog post about my most recent paper. This was a surprising amount of work, so please be nice and go read it!

02.10.2025 21:34 — 👍 29 🔁 7 💬 0 📌 3

Thanks a lot! That was lightning fast 🚀

02.10.2025 22:26 — 👍 0 🔁 0 💬 0 📌 0

Maybe a blog post would also help =)

26.09.2025 14:59 — 👍 0 🔁 0 💬 2 📌 0

Could you add me please?

09.09.2025 21:59 — 👍 1 🔁 0 💬 1 📌 0

Definition of dynamic programming in RL, from Csaba Szepesvári’s RL theory lecture notes (Lecture 2, "Planning in MDPs")

Definition of dynamic programming, from Puterman’s Markov Decision Processes — chapter 1.

I came across a couple of other definitions that might be helpful to mention (apologies if you’re already considering these).

The first one is from Csaba Szepesvári’s RL theory lecture notes (lecture 2, planning in MDPs), and the second one is from Puterman's MDP book (chapter 1).

04.08.2025 09:45 — 👍 2 🔁 0 💬 1 📌 0

What are we talking about when we talk about Dynamic Programming?

#ReinforcementLearning

03.08.2025 20:14 — 👍 8 🔁 1 💬 2 📌 0

What if all mathematicians had great visualization skills, tools, and public notes!

31.07.2025 16:22 — 👍 0 🔁 0 💬 0 📌 0

Onno and I will be presenting our poster at # W1005 tomorrow (Wed) morning.

He made a great thread about it, come chat with us about POMDP theory :)

16.07.2025 03:45 — 👍 19 🔁 5 💬 0 📌 0

I will not be at #ICML2025 this year, but 3 of my PhD students at 🤖 Adage (Adaptive Agents Lab) 🤖 are, presenting 3 papers.

⭐ Avery Ma

⭐ Claas Voelcker (cvoelcker.bsky.social)

⭐ Tyler Kastner

Meet them to talk about Model-based RL, Distributional RL, and Jailbreaking LLMs.

14.07.2025 18:54 — 👍 5 🔁 2 💬 1 📌 0

Levine's take on the success of LLMs compared to video models is interesting, but I'll expand on how efforts toward AI could take two different paths, and why I think AI and NeuroAI could take different approaches moving forward. 🧵

🧠🤖 #MLSky

12.06.2025 14:30 — 👍 7 🔁 2 💬 1 📌 0

Preprint Alert 🚀

Can we simultaneously learn transformation-invariant and transformation-equivariant representations with self-supervised learning?

TL;DR Yes! This is possible via simple predictive learning & architectural inductive biases – without extra loss terms and predictors!

🧵 (1/10)

14.05.2025 12:52 — 👍 51 🔁 16 💬 1 📌 5

GitHub - vwxyzjn/cleanrl: High-quality single file implementation of Deep Reinforcement Learning algorithms with research-friendly features (PPO, DQN, C51, DDPG, TD3, SAC, PPG)

High-quality single file implementation of Deep Reinforcement Learning algorithms with research-friendly features (PPO, DQN, C51, DDPG, TD3, SAC, PPG) - vwxyzjn/cleanrl

cleanrl is amazing (github.com/vwxyzjn/clea...) and its structure makes sense for teaching but an actual research codebase should not inherit this style! you do not want this amount of code duplication

11.05.2025 20:01 — 👍 32 🔁 2 💬 4 📌 0

YouTube video by Natasha Jaques

Reinforcement Learning (RL) for LLMs

Recorded a recent "talk" / rant about RL fine-tuning of LLMs for a guest lecture in Stanford CSE234: youtube.com/watch?v=NTSY.... Covers some of my lab's recent work on personalized RLHF, as well as some mild Schmidhubering about my own early contributions to this space

27.03.2025 21:31 — 👍 51 🔁 10 💬 5 📌 1

PQN puts Q-learning back on the map and now comes with a blog post + Colab demo! Also, congrats to the team for the spotlight at #ICLR2025

20.03.2025 11:51 — 👍 16 🔁 4 💬 0 📌 0

Happy #Nowruz and the beginning of the spring!

20.03.2025 17:37 — 👍 0 🔁 0 💬 0 📌 0

I wanted to send you the link just now but hopefully you have found it =)

18.03.2025 21:08 — 👍 1 🔁 0 💬 0 📌 0

Sure *_*

Looking forward to it :)

17.03.2025 20:55 — 👍 0 🔁 0 💬 0 📌 0

Not yet. Just the classical claim that they're trying to learn the distribuition of the return =))

Do yo have any insights?

17.03.2025 18:37 — 👍 0 🔁 0 💬 1 📌 0

I was reading about the ways that I can enhance the performance of dqn on a real-world problem. One of the candidates was c51 but i haven't implement it yet becuase of computational costs. But it was interesting for becuase i haven't read the papers before

17.03.2025 14:23 — 👍 1 🔁 0 💬 1 📌 0

I didn't know until last week that it can cause a huge performance boost using it with dqn.

17.03.2025 14:06 — 👍 1 🔁 0 💬 1 📌 0

Claire Vernade - European career opportunities

European Academic Career Opportunities in 2025

I’ve put together a short list of opportunities for early career academics willing to come to Europe: www.cvernade.com/miscellaneou...

This mostly covers France and Germany for now but I’m willing to extend it. I build on @ellis.eu resources and my own knowledge of these systems.

11.03.2025 09:19 — 👍 75 🔁 26 💬 3 📌 0

First 11 chapters of RLHF Book have v0 draft done. Should be useful now.

Next:

* Crafting more blog content into future topics,

* DPO+ chapter,

* Meeting with publishers to get wheels turning on physical copies,

* Cleaning & cohesiveness

rlhfbook.com

26.02.2025 16:35 — 👍 48 🔁 9 💬 0 📌 0

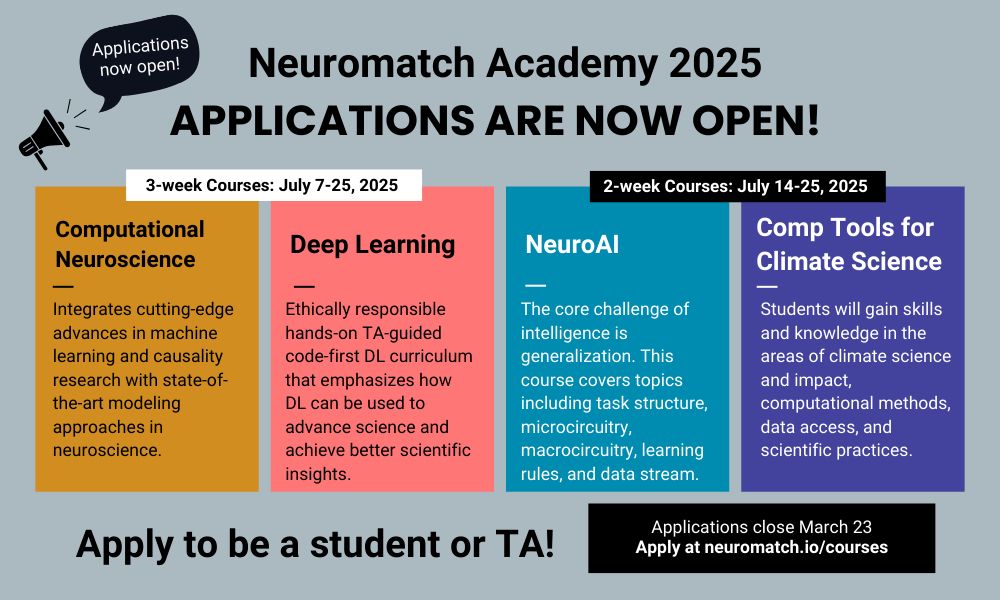

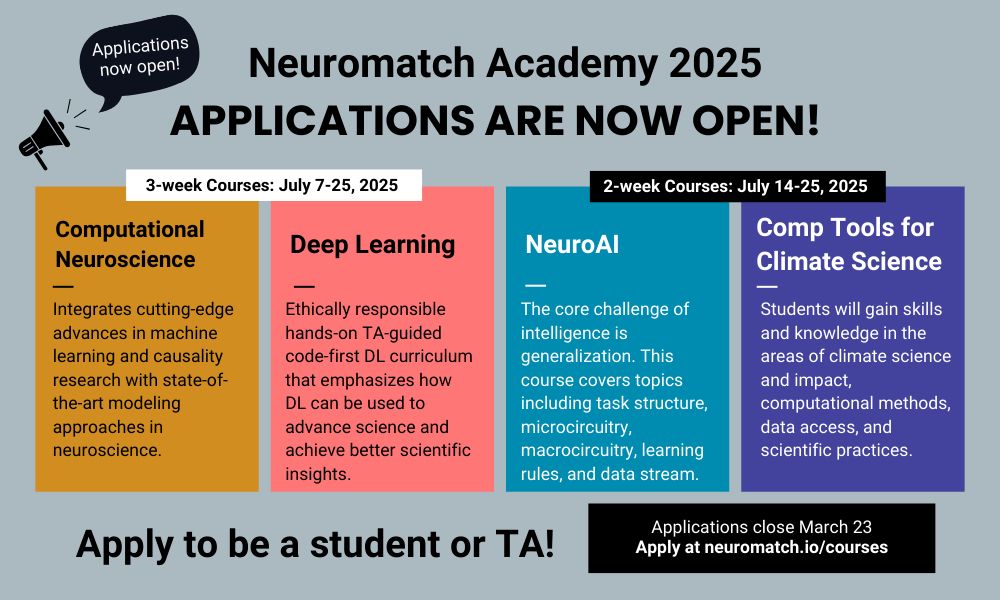

Applications are now open! 3-week courses: Comp Neuro and Deep Learning. 2-week courses: NeuroAI and Comp Tools for Climate Science.

🚨 Neuromatch Academy Course Applications are OPEN for 2025!! 🚨

Get your application in early to be a student or teaching assistant for this year’s courses!

Applications are due Sunday, March 23.

Apply & learn more: neuromatch.io/courses/

#mlsky #compneurosky #ai #climatesolutions #ScienceEdu 🧪

24.02.2025 17:58 — 👍 86 🔁 75 💬 0 📌 12

2014 GoogLeNet: The best image classifier was only trainable using weeks of Google's custom infrastructure.

2018 ResNet: A more accurate model is trainable in a 1/2 hour on a single GPU.

What stops this from happening for LLMs?

27.01.2025 15:16 — 👍 52 🔁 9 💬 3 📌 3

I am teaching a class on #FoundationalModels for #robotics and Scaling #DeepRL algorithms. This class expands on last year's class and my generalist robotics policies tutorial and code. I plan to share the lectures and code assignments. Starting with the first lectures below.

19.01.2025 19:14 — 👍 21 🔁 6 💬 1 📌 0

PhD candidate at UCSD. Prev: NVIDIA, Meta AI, UC Berkeley, DTU. I like robots 🤖, plants 🪴, and they/them pronouns 🏳️🌈

https://www.nicklashansen.com

Gradient surfer at UCL. FR, EN, also trying ES. 🇹🇼🇨🇦🇬🇳🇺🇸🇩🇴🇫🇷🇪🇸🇬🇧🇿🇦. Also on Twitter.

Husband, dad, computational neuroscientist, @mcgillu.bsky.social and Mila

Reinforcement Learning PhD Student at the University of Tokyo, Prev: Intern at Sakana AI, PFN, M.Sc/B.Sc. from TU Munich

johannesack.github.io

PhD student at MIT CSAIL; previously at MPI-IS Tübingen, Mila, NVIDIA HK, CUHK MMLab. Interested in robustness: https://chinglamchoi.github.io/cchoi/.

Probabilistic {Machine,Reinforcement} Learning and more at SDU

PhD student in AutoML @ University of Tübingen

Interested in open-ended machine learning and agentic systems. Studying a DPhil on world models at Oxford Robotics.

Professor, author of book on Simulation-Based Optimization, #ReinforcementLearning #MDPs #ORMS www.simoptim.com

Researcher on MDPs and RL. Retired prof. #orms #rl

At Microsoft Research. Lead of https://aka.ms/game-intelligence - we drive innovation in machine learning with applications in games. https://iclr.cc Board.

Living in and probably posting about seattle. Warriors fan, ml engineer (posts my own yada yada)

Graduate student studying learning algorithms

Thinking on dopamine heterogeneity

NeuroAI PhD student at @mcgillu.

Prev: @GeorgiaTech @FlatironCCN.

Associate Prof @ LMU Munich

PI @ Munich Center for Machine Learning

Ellis Member

Associate Fellow @ relAI

-----

https://davidruegamer.github.io/ | https://www.muniq.ai/

-----

BNNs, UQ in DL, DL Theory (Overparam, Implicit Bias, Optim), Sparsity

Postdoc at Boston University with Aldo Pacchiano (PLAIA Lab plaia.ai). Interested in RL, Bandit problems and Adaptive Control.

Website: alessiorusso.net

Research in AI / ML / RL @Mila_Quebec / @UMontreal, formerly research @Ualberta, @AmiiThinks, @rlai_lab

Aligning Reinforcement Learning Experimentalists and Theorists workshop.

> https://arlet-workshop.github.io

Security and Privacy of Machine Learning at UofT, Vector Institute, and Google 🇨🇦🇫🇷🇪🇺 Co-Director of Canadian AI Safety Institute (CAISI) Research Program at CIFAR. Opinions mine