DUSt3R et al. are impressive, but how do they actually work? We investigate this in our project 𝘜𝘯𝘥𝘦𝘳𝘴𝘵𝘢𝘯𝘥𝘪𝘯𝘨 𝘔𝘶𝘭𝘵𝘪-𝘝𝘪𝘦𝘸 𝘛𝘳𝘢𝘯𝘴𝘧𝘰𝘳𝘮𝘦𝘳𝘴!

We share findings on the iterative nature of reconstruction, the roles of cross and self-attention, and the emergence of correspondences across the network [1/8] ⬇️

04.11.2025 19:40 —

👍 7

🔁 4

💬 1

📌 0

ICCV 2025 🌺 Aloha from Hawaii! MPI-INF (D2) is presenting 4 papers this year (one Highlight). Thread 👇

19.10.2025 07:48 —

👍 13

🔁 6

💬 1

📌 0

I am on my way to #ICCV2025 to present DIY-SC, where we refine foundational features for better semantic correspondence performance. Please come by our poster #538 (Session 2) if you're interested or want to chat about my latest project, AnyUp!

18.10.2025 04:10 —

👍 7

🔁 0

💬 0

📌 0

🤔 What if you could generate an entire image using just one continuous token?

💡 It works if we leverage a self-supervised representation!

Meet RepTok🦎: A generative model that encodes an image into a single continuous latent while keeping realism and semantics. 🧵 👇

17.10.2025 10:20 —

👍 10

🔁 4

💬 1

📌 1

AnyUp

Universal Feature Upsampling

Try it out now! Code and model weights are public.

💻 Code: github.com/wimmerth/anyup

Great collaboration with Prune Truong, Marie-Julie Rakotosaona, Michael Oechsle, Federico Tombari, Bernt Schiele, and @janericlenssen.bsky.social!

CC: @cvml.mpi-inf.mpg.de @mpi-inf.mpg.de

16.10.2025 09:06 —

👍 3

🔁 0

💬 0

📌 0

Generalization: AnyUp is the first learned upsampler that can be applied out-of-the-box to other features of potentially different dimensionality.

In our experiments, we show that it matches encoder-specific upsamplers and that trends between different model sizes are preserved.

16.10.2025 09:06 —

👍 3

🔁 1

💬 1

📌 0

When performing linear probing for semantic segmentation or normal and depth estimation, AnyUp consistently outperforms prior upsamplers.

Importantly, the upsampled features also stay faithful to the input feature space, as we show in experiments with pre-trained DINOv2 probes.

16.10.2025 09:06 —

👍 1

🔁 0

💬 1

📌 0

AnyUp is a lightweight model that uses a feature-agnostic layer to obtain a canonical representation that is independent of the input dimensionality.

Together with window attention-based upsampling, a new training pipeline and consistency regularization, we achieve SOTA results.
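The posts do not spell out how the feature-agnostic layer works. As a rough illustration only, here is one generic way to make a layer's output independent of the input channel count: apply the same small set of filters to every channel separately, then average the responses over channels. This is an assumption made for illustration, not AnyUp's actual layer; `filter2d_same`, `channel_agnostic_layer`, and the kernels are hypothetical names and values.

```python
def filter2d_same(channel, kernel):
    """Slide one kernel over one H x W channel with zero padding (same output size)."""
    h, w = len(channel), len(channel[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    y, x = i + di - ph, j + dj - pw
                    if 0 <= y < h and 0 <= x < w:  # positions outside the map count as zero
                        acc += channel[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def channel_agnostic_layer(features, kernels):
    """Map C x H x W features to K x H x W, where K = len(kernels), for any C.

    Assumption for illustration: the same kernels are shared across all input
    channels and the per-channel responses are averaged.
    """
    num_channels = len(features)
    h, w = len(features[0]), len(features[0][0])
    outputs = []
    for kernel in kernels:
        responses = [filter2d_same(ch, kernel) for ch in features]
        mean = [
            [sum(r[i][j] for r in responses) / num_channels for j in range(w)]
            for i in range(h)
        ]
        outputs.append(mean)
    return outputs
```

Because the kernels never see more than one channel at a time, the same layer accepts features with 2 channels or 2000 channels and always returns `len(kernels)` output maps.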

16.10.2025 09:06 —

👍 1

🔁 0

💬 1

📌 0

Foundation models like DINO or CLIP are used in almost all modern computer vision applications.

However, their features are of low resolution and many applications need pixel-wise features instead.

AnyUp can upsample any features of any dimensionality to any resolution.

16.10.2025 09:06 —

👍 1

🔁 0

💬 1

📌 0

Super excited to introduce

✨ AnyUp: Universal Feature Upsampling 🔎

Upsample any feature - really any feature - with the same upsampler, no need for cumbersome retraining.

SOTA feature upsampling results while being feature-agnostic at inference time.

🌐 wimmerth.github.io/anyup/

16.10.2025 09:06 —

👍 28

🔁 5

💬 2

📌 2

Architecture for Unpaired Multimodal Learner.

Suppose you have separate datasets X, Y, Z, without known correspondences.

We do the simplest thing: just train a model (e.g., a next-token predictor) on all elements of the concatenated dataset [X,Y,Z].

You end up with a better model of dataset X than if you had trained on X alone!
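A minimal sketch of the data side of this recipe, assuming each dataset is simply a list of samples: pool everything into one shuffled training list without ever pairing samples across datasets. The function name `build_unpaired_corpus` and the source tag attached to each sample are hypothetical, and the model and training loop are left out.

```python
import random


def build_unpaired_corpus(datasets, seed=0):
    """Pool samples from several unpaired datasets into one shuffled list.

    `datasets` maps a dataset name (e.g. "X") to a list of samples.
    No correspondences between datasets are assumed or created.
    """
    pooled = []
    for name, samples in datasets.items():
        # Keep the source name with each sample (hypothetical choice) so a
        # model could receive a modality marker if desired.
        pooled.extend((name, sample) for sample in samples)
    random.Random(seed).shuffle(pooled)  # seeded for reproducibility
    return pooled


corpus = build_unpaired_corpus(
    {"X": ["x1", "x2"], "Y": ["y1"], "Z": ["z1", "z2", "z3"]}
)
```

A single model would then be trained on `corpus` as if it were one dataset, and evaluated on X alone.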

6/9

10.10.2025 22:13 —

👍 23

🔁 1

💬 2

📌 0

Happy to find my name on the list of outstanding reviewers :]

Come and check out our poster on learning better features for semantic correspondence in Hawaii!

📍 Poster #538 (Session 2)

🗓️ Oct 21 | 3:15 – 5:00 p.m. HST

genintel.github.io/DIY-SC

07.10.2025 15:05 —

👍 3

🔁 0

💬 0

📌 0

What was the patch size used here?

21.08.2025 11:44 —

👍 0

🔁 0

💬 1

📌 0

All the links can be found here. Great collaborators!

bsky.app/profile/odue...

26.06.2025 14:30 —

👍 2

🔁 0

💬 0

📌 0

🚀 Just accepted to ICCV 2025!

In DIY-SC, we improve foundational features using a lightweight adapter trained with carefully filtered and refined pseudo-labels.

🔧 Drop-in alternative to plain DINOv2 features!

📦 Code + pre-trained weights available now.

🔥 Try it in your next vision project!

26.06.2025 14:28 —

👍 10

🔁 2

💬 1

📌 0

The CVML group at the @mpi-inf.mpg.de has been busy for CVPR. Check out our papers and come by the presentations!

11.06.2025 12:07 —

👍 4

🔁 1

💬 0

📌 0

Hello world, we are now on Bluesky 🦋! Follow us to receive updates on exciting research and projects from our group!

#computervision #machinelearning #research

09.04.2025 13:03 —

👍 11

🔁 4

💬 0

📌 0

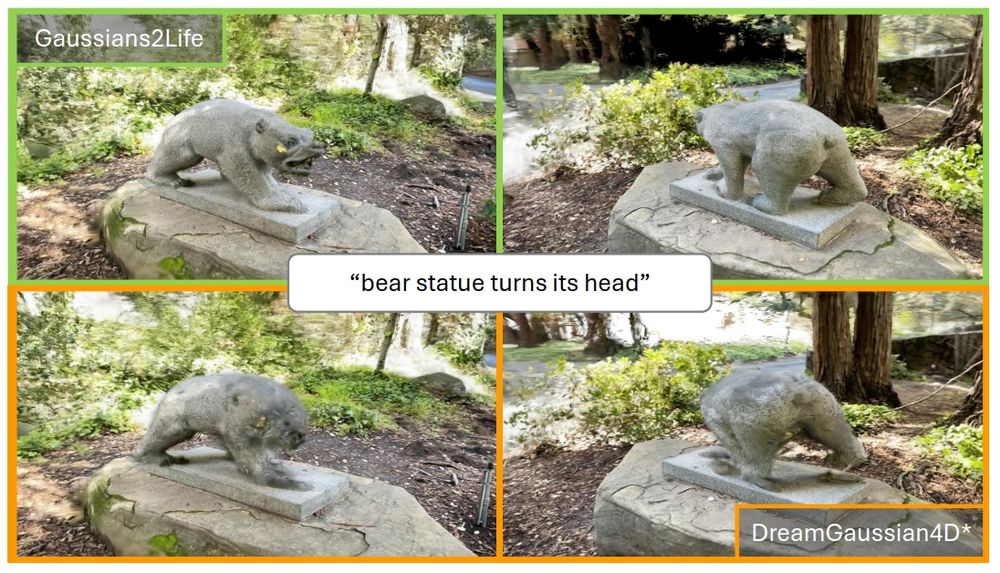

We can animate arbitrary 3D scenes within 10 minutes on an RTX 4090 while keeping scene appearance and geometry intact.

Note that open-source video diffusion models have improved significantly since I worked on this, which will directly improve the results of this method as well.

🧵⬇️

28.03.2025 08:35 —

👍 0

🔁 0

💬 1

📌 0

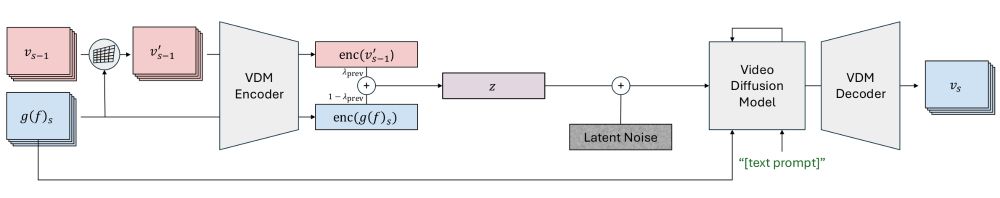

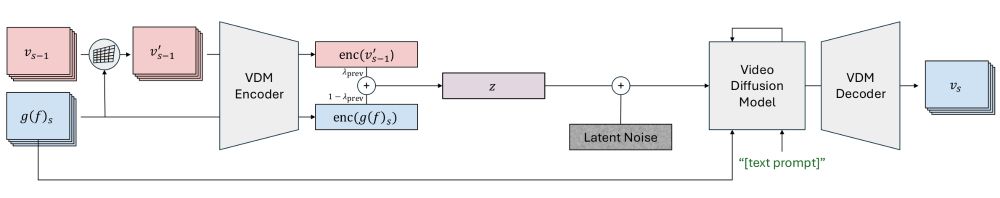

Improving the multi-view consistency of generated videos through latent interpolation. We render the dynamic scene f from the current viewpoint with the rendering function g, giving g(f)_s. In addition to the latent of this rendering, we compute the latent embedding of the warped video output v_{s-1} from the previous optimization step (which used a different viewpoint). We linearly interpolate the two latents before passing them through the video diffusion model (VDM), which is additionally conditioned on the static scene view from the current viewpoint. The resulting output is finally decoded to a new video output v_s.
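The interpolation step in the caption above can be sketched in a few lines. This is a minimal illustration that assumes the latents are flat lists of floats of equal length; the function name `lerp_latents` and the mixing weight `alpha` are hypothetical, since the caption does not state how the two latents are weighted.

```python
def lerp_latents(z_render, z_warped, alpha):
    """Linearly interpolate two latents of equal length.

    z_render: latent of the current-view rendering g(f)_s
    z_warped: latent of the warped previous output v_{s-1}
    alpha:    hypothetical mixing weight in [0, 1]; 0 keeps z_render, 1 keeps z_warped
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(z_render) != len(z_warped):
        raise ValueError("latents must have the same length")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(z_render, z_warped)]


z_mixed = lerp_latents([0.0, 2.0], [4.0, 6.0], alpha=0.25)
```

The mixed latent would then be passed to the VDM in place of the rendering's latent alone.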

While we can now transfer motion into 3D, we still have to deal with a fundamental problem: the lack of 3D consistency in generated videos.

With limited resources, we can't fine-tune or retrain a VDM to be pose-conditioned. Thus, we propose a zero-shot technique to generate more 3D-consistent videos!

🧵⬇️

28.03.2025 08:35 —

👍 0

🔁 0

💬 1

📌 0

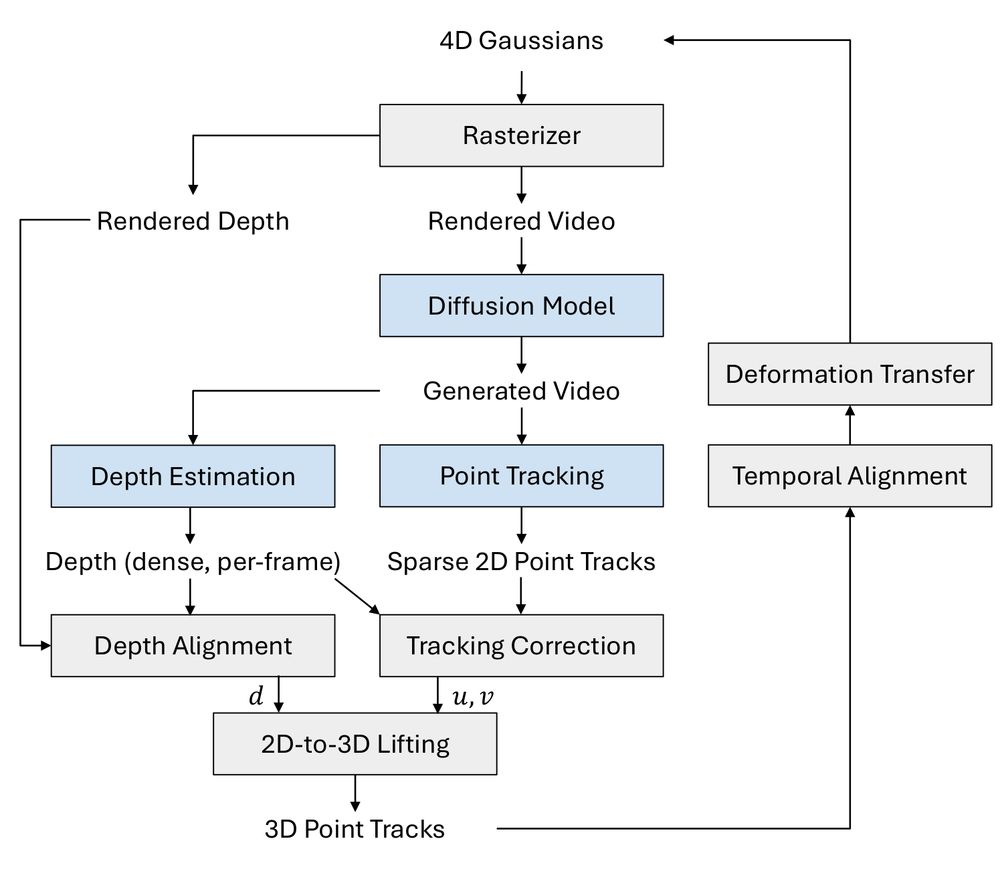

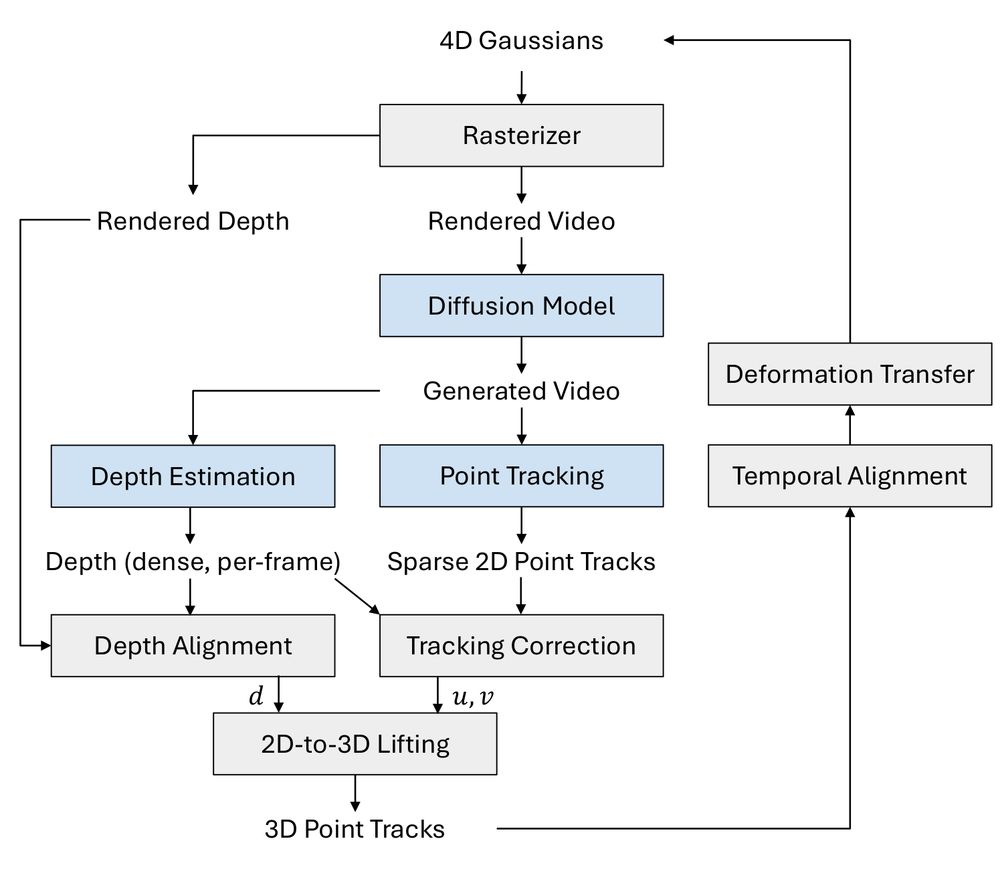

Method overview for lifting 2D dynamics into 3D. Pre-trained models are shown in blue. We detect 2D point tracks and use aligned estimated depth values to lift them into 3D.

The 4D (dynamic 3D) Gaussians are initialized with the static 3D scene input.

Standard practices like SDS fail for this task as VDMs provide a guidance signal that is too noisy, resulting in "exploding" scenes.

Instead, we propose to employ several pre-trained 2D models to directly lift motion from tracked points in the generated videos to 3D Gaussians.
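As a rough illustration of lifting a tracked 2D point into 3D with a depth value, here is a standard pinhole-camera unprojection. The pinhole model, the intrinsics tuple `(fx, fy, cx, cy)`, and the function names are assumptions made for this sketch; the post does not specify the camera model or how depth is aligned.

```python
def unproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with depth along the camera z-axis to a 3D camera-frame point.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal point (cx, cy).
    """
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return (x, y, depth)


def lift_track(track_2d, depths, intrinsics):
    """Lift one 2D point track (a list of (u, v) per frame) to a 3D track.

    `depths` holds one aligned depth value per frame for the tracked point.
    """
    if len(track_2d) != len(depths):
        raise ValueError("need exactly one depth value per tracked frame")
    fx, fy, cx, cy = intrinsics
    return [unproject(u, v, d, fx, fy, cx, cy) for (u, v), d in zip(track_2d, depths)]


track_3d = lift_track(
    [(320.0, 240.0), (420.0, 240.0)],
    [2.0, 2.0],
    (500.0, 500.0, 320.0, 240.0),
)
```

The frame-to-frame displacement of such a 3D track is the kind of signal that could then drive the motion of nearby Gaussians.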

🧵⬇️

28.03.2025 08:35 —

👍 1

🔁 0

💬 1

📌 0

Had the honor to present "Gaussians-to-Life" at #3DV2025 yesterday. In this work, we used video diffusion models to animate arbitrary 3D Gaussian Splatting scenes.

This work was a great collaboration with @moechsle.bsky.social, @miniemeyer.bsky.social, and Federico Tombari.

🧵⬇️

28.03.2025 08:35 —

👍 13

🔁 1

💬 2

📌 1

Can you do reasoning with diffusion models?

The answer is yes!

Take a look at Spatial Reasoning Models. Hats off for this amazing work!

03.03.2025 17:48 —

👍 3

🔁 0

💬 0

📌 0

I wonder to what degree one could artificially make real images (with GT depth) more abstract during training, so that depth models learn the priors we have (like green=field, blue=sky), and whether that would actually give us any benefit, like increased robustness...

14.02.2025 14:30 —

👍 1

🔁 0

💬 1

📌 0

Ah, thanks, I overlooked that :)

14.02.2025 14:19 —

👍 1

🔁 0

💬 1

📌 0

Nice experiments! What model did you use?

14.02.2025 14:07 —

👍 1

🔁 0

💬 1

📌 0

🏔️⛷️ Looking back on a fantastic week full of talks, research discussions, and skiing in the Austrian mountains!

31.01.2025 19:38 —

👍 32

🔁 11

💬 0

📌 0

Give a warm welcome to @janericlenssen.bsky.social!

16.01.2025 17:29 —

👍 2

🔁 0

💬 0

📌 0

Well well, it turns out that GIFs aren't yet supported on this platform. Here is the teaser video as an MP4 instead:

15.01.2025 17:27 —

👍 1

🔁 0

💬 0

📌 0

MEt3R

Measuring Multi-View Consistency in Generated Images.

This work was led by @mohammadasim98.bsky.social and is a collaboration with Christopher Wewer, Bernt Schiele and Jan Eric Lenssen.

Check out the website with lots of nice visuals that show how our metric works and use it in your next diffusion model project!

geometric-rl.mpi-inf.mpg.de/met3r/

15.01.2025 17:21 —

👍 2

🔁 1

💬 0

📌 0