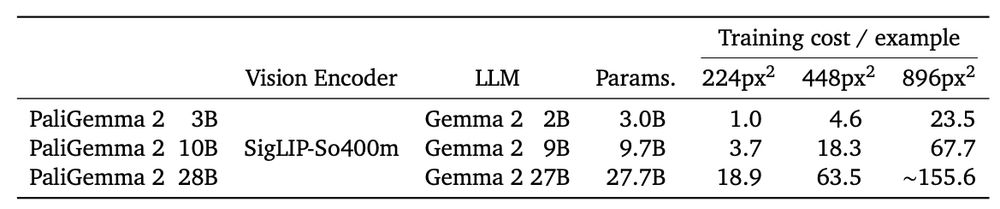

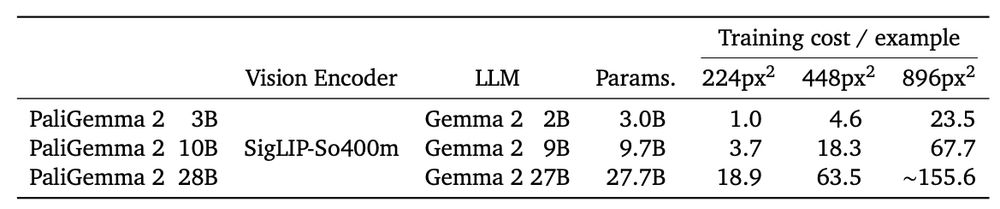

Looking for a small- or medium-sized VLM? PaliGemma 2 spans more than 150x in compute!

Not sure yet whether you want to invest time in 🪄finetuning🪄 on your data? Give it a try with our ready-to-use "mix" checkpoints:

🤗 huggingface.co/blog/paligem...

🎤 developers.googleblog.com/en/introduci...

19.02.2025 17:47

Attending #NeurIPS2024? If you're interested in multimodal systems, building inclusive and culturally aware models, and how fractals relate to LLMs, we have 3 posters for you. I look forward to presenting them on behalf of our GDM team @ Zurich and our collaborators. Details below (1/4)

07.12.2024 18:50

Want to get started using PaliGemma 2?

🎤 developers.googleblog.com/en/introduci...

🤗 huggingface.co/blog/paligem...

💾 kaggle.com/models/googl...

🔧 github.com/google-resea...

7/7

05.12.2024 18:19

If you want to know more, now is a good time to head over to the 31-page tech report.

Brought to you by an amazing team of collaborators from @GoogleDeepMind and @GoogleAI.

arxiv.org/abs/2412.03555

6/7

05.12.2024 18:18

In addition to the pre-trained checkpoints, we also release two checkpoints fine-tuned on the DOCCI dataset, which generate fine-grained captions with a great quality/compute trade-off – and no yapping!

5/7

05.12.2024 18:18

After 🪄finetuning🪄 on your data, you can expect great results, like the state-of-the-art results we got on recognizing table structures, music scores, molecular structures, and text, and on radiography report generation.

4/7

05.12.2024 18:17

Like the original PaliGemma, the pre-trained PaliGemma 2 models have segmentation and detection capabilities and excel at OCR, which makes them extremely versatile for 🪄finetuning🪄. The original demo hf.co/spaces/big-v... gives you an idea of the capabilities.

3/7

05.12.2024 18:17

Adding this new "model size" dimension unlocks substantial improvements for some tasks (blue, e.g. AI2D), and compounds with improvements from increased resolution for most tasks (green, e.g. InfoVQA).

2/7

05.12.2024 18:16

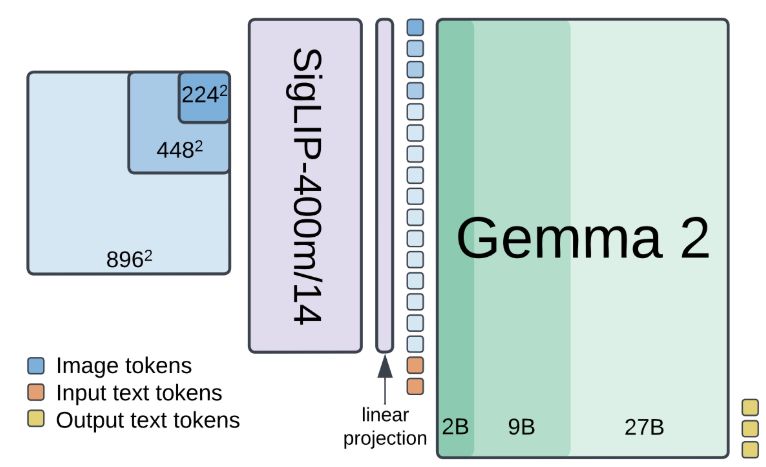

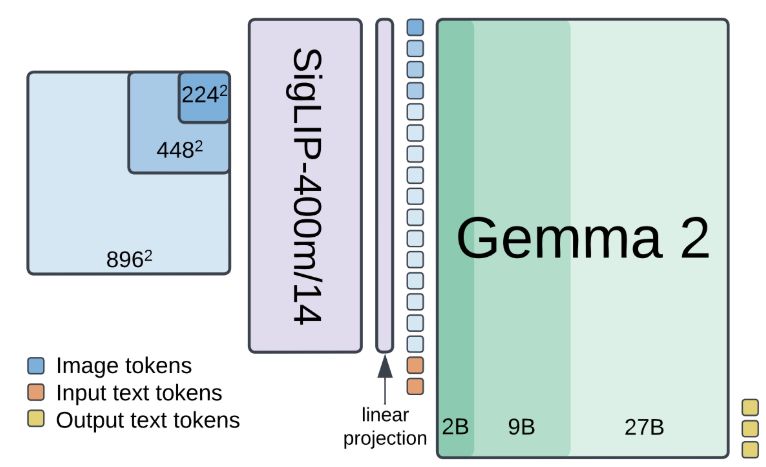

🚀🚀PaliGemma 2 is our updated and improved PaliGemma release, built on the Gemma 2 models and providing new pre-trained checkpoints for the full cross product of {224px, 448px, 896px} resolutions and {3B, 10B, 28B} model sizes.

1/7

05.12.2024 18:16
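The "full cross product" in the first post works out to 3 resolutions × 3 model sizes = 9 pre-trained checkpoints. The minimal Python sketch below just enumerates those combinations. The `google/paligemma2-{size}-pt-{res}` repo ID pattern and the helper name `pretrained_checkpoint_ids` are assumptions for illustration, not taken from the thread; check the model hub for the actual IDs.

```python
from itertools import product

# Resolutions (px) and model sizes listed in the PaliGemma 2 announcement.
RESOLUTIONS = [224, 448, 896]
MODEL_SIZES = ["3b", "10b", "28b"]


def pretrained_checkpoint_ids():
    """Return one hypothetical repo ID per (model size, resolution) pair.

    NOTE: the "google/paligemma2-{size}-pt-{res}" naming pattern is an
    assumption for illustration only.
    """
    # product() iterates model sizes in the outer loop, resolutions in the inner loop.
    return [
        f"google/paligemma2-{size}-pt-{res}"
        for size, res in product(MODEL_SIZES, RESOLUTIONS)
    ]


if __name__ == "__main__":
    ids = pretrained_checkpoint_ids()
    print(len(ids))  # 9
    for repo_id in ids:
        print(repo_id)
```

Enumerating the grid this way makes it easy to sweep every size/resolution combination when comparing quality against compute on your own task.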