1/n Introducing ReDi (Representation Diffusion): a new generative approach that leverages a diffusion model to jointly capture

– Low-level image details (via VAE latents)

– High-level semantic features (via DINOv2)🧵

@sta8is.bsky.social

📄 Check out our paper at arxiv.org/abs/2501.08303 and 🖥️ code at github.com/Sta8is/FUTUR... to learn more about FUTURIST and its applications in autonomous systems! (9/n)

Joint work with @ikakogeorgiou.bsky.social, @spyrosgidaris.bsky.social and Nikos Komodakis

🚀 The architecture keeps improving with extended training, which suggests substantial room for further gains (8/n)

26.02.2025 19:57 — 👍 0 🔁 0 💬 1 📌 0

💡 Our multimodal approach significantly outperforms single-modality variants, demonstrating the power of learning cross-modal relationships (7/n)

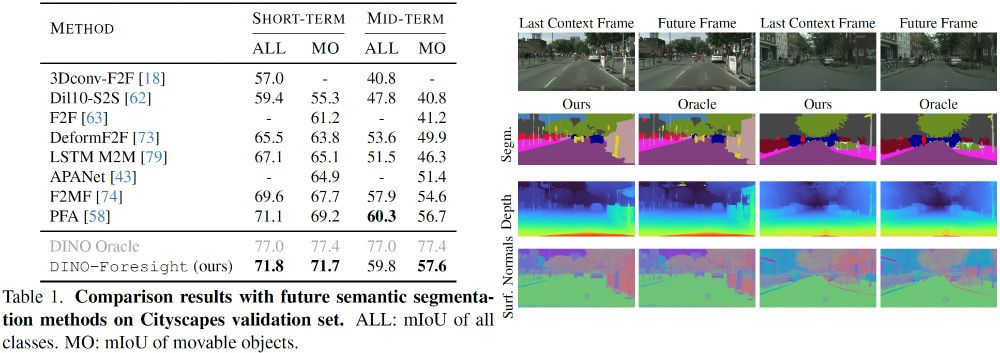

📈 Results are impressive! We achieve state-of-the-art performance in future semantic segmentation on Cityscapes, with strong improvements in both short-term (0.18s) and mid-term (0.54s) predictions (6/n)

🎭 Key innovation #3: We developed a novel multimodal masked visual modeling objective specifically designed for future prediction tasks (5/n)

🔗 Key innovation #2: Our model features an efficient cross-modality fusion mechanism that improves predictions by learning synergies between different modalities (segmentation + depth) (4/n)

🎯 Key innovation #1: We introduce a VAE-free hierarchical tokenization process integrated directly into our transformer. This simplifies training, reduces computational overhead, and enables true end-to-end optimization (3/n)

🔍 FUTURIST employs a multimodal visual sequence transformer to directly predict multiple future semantic modalities. We focus on two key modalities: semantic segmentation and depth estimation—critical capabilities for autonomous systems operating in dynamic environments (2/n)

🧵 Excited to share our latest work: FUTURIST, a unified transformer architecture for multimodal semantic future prediction, has been accepted to #CVPR2025! Here's how it works (1/n)

👇 Links to the arxiv and github below

1/n 🚀 If you’re working on generative image modeling, check out our latest work! We introduce EQ-VAE, a simple yet powerful regularization approach that makes latent representations equivariant to spatial transformations, leading to smoother latents and better generative models. 👇

18.02.2025 14:26 — 👍 18 🔁 8 💬 1 📌 1
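
A minimal sketch of the idea, assuming the regularizer asks the decoder to reconstruct the spatially transformed image from the equally transformed latent. The toy `encode` (2x2 average pooling) and `decode` (nearest-neighbour upsampling) are hypothetical stand-ins, and so is the choice of a horizontal flip as the transformation. None of these are EQ-VAE's actual networks or its transformation set.

```python
from typing import Callable, List

Grid = List[List[float]]


def encode(x: Grid) -> Grid:
    """Hypothetical toy encoder: 2x2 average pooling (stands in for a VAE encoder)."""
    return [
        [
            (x[i][j] + x[i][j + 1] + x[i + 1][j] + x[i + 1][j + 1]) / 4.0
            for j in range(0, len(x[0]), 2)
        ]
        for i in range(0, len(x), 2)
    ]


def decode(z: Grid) -> Grid:
    """Hypothetical toy decoder: nearest-neighbour 2x upsampling."""
    out: Grid = []
    for row in z:
        up = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(up)
        out.append(list(up))  # repeat each row vertically
    return out


def hflip(g: Grid) -> Grid:
    """Spatial transformation tau: horizontal flip (illustrative choice)."""
    return [list(reversed(row)) for row in g]


def mse(a: Grid, b: Grid) -> float:
    """Mean squared error between two equally sized grids."""
    n = sum(len(r) for r in a)
    return sum((u - v) ** 2 for ra, rb in zip(a, b) for u, v in zip(ra, rb)) / n


def equivariance_loss(x: Grid, tau: Callable[[Grid], Grid]) -> float:
    """Decode the transformed latent tau(E(x)) and compare it with tau(x)."""
    return mse(decode(tau(encode(x))), tau(x))


if __name__ == "__main__":
    x = [[1.0, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    print(equivariance_loss(x, hflip))  # → 4.25
```

With these toy maps, the flip commutes with both encoder and decoder, so the loss equals the plain reconstruction error. For a learned VAE the two generally differ, and the extra term pushes the latent space to transform consistently with the image.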

8/n 💡 Our work shows that by leveraging the semantic power of VFMs, we can build more efficient and effective future prediction systems.

📄 Paper: arxiv.org/abs/2412.11673

🖥️Code available at: github.com/Sta8is/DINO-...

Joint work with @ikakogeorgiou.bsky.social, @spyrosgidaris.bsky.social, N. Komodakis

7/n 🔬Interesting discovery: The intermediate features from our transformer can actually enhance the already-strong VFM features, suggesting potential for self-supervised learning.

07.02.2025 17:05 — 👍 2 🔁 0 💬 1 📌 0

6/n 📊And it works amazingly well! We achieve state-of-the-art results in semantic segmentation forecasting, with strong performance across multiple tasks using a single feature prediction model.

5/n 🎨The beauty of our method? It's completely modular - different task-specific heads (segmentation, depth estimation, surface normals) can be plugged in without retraining the core model.

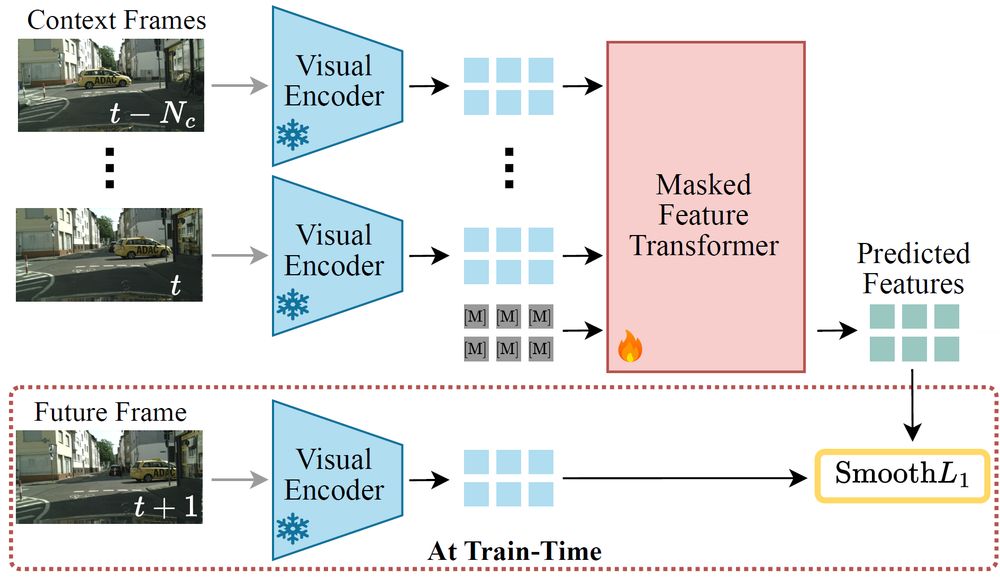

4/n 🔄Our approach: We train a masked feature transformer to predict how VFM features change over time. These predicted features can then be used for various scene understanding tasks!
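
A minimal sketch of the training signal under stated assumptions. Future-frame feature tokens are masked, and the loss is computed only at the masked positions. `toy_predictor` is a hypothetical stand-in that linearly extrapolates each token from the last two context frames; the actual model is a masked feature transformer. The token layout, mask ratio and loss are also illustrative assumptions, not DINO-Foresight's settings.

```python
import random
from typing import List, Sequence

Vec = List[float]
Frame = List[Vec]  # one feature vector per spatial token (patch)


def toy_predictor(context: Sequence[Frame]) -> Frame:
    """Hypothetical stand-in for the masked feature transformer:
    linearly extrapolates every token from the last two context frames."""
    prev, last = context[-2], context[-1]
    return [[2 * b - a for a, b in zip(tp, tl)] for tp, tl in zip(prev, last)]


def random_mask(num_tokens: int, ratio: float, rng: random.Random) -> List[bool]:
    """Mark roughly `ratio` of the future-frame tokens as masked (at least one)."""
    k = max(1, int(round(ratio * num_tokens)))
    chosen = set(rng.sample(range(num_tokens), k))
    return [i in chosen for i in range(num_tokens)]


def masked_feature_loss(context: Sequence[Frame], target: Frame, mask: List[bool]) -> float:
    """Mean squared error between predicted and true future features,
    averaged over masked token positions only."""
    pred = toy_predictor(context)
    errs = [
        sum((p - t) ** 2 for p, t in zip(pred[i], target[i])) / len(target[i])
        for i, masked in enumerate(mask)
        if masked
    ]
    if not errs:
        raise ValueError("mask selects no tokens")
    return sum(errs) / len(errs)


if __name__ == "__main__":
    f0 = [[0.0, 0.0], [1.0, 1.0]]
    f1 = [[1.0, 1.0], [2.0, 2.0]]
    target = [[2.0, 2.0], [5.0, 5.0]]  # token 1 breaks the linear trend
    print(masked_feature_loss([f0, f1], target, [False, True]))  # → 4.0
    print(random_mask(10, 0.5, random.Random(0)))
```

A real masked transformer would also attend to the unmasked future tokens. This toy ignores them, so masking here only decides which positions are supervised.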

3/n 🧩 Why is this important? Most existing approaches predict future frames at the pixel level, which wastes computation on visual details that are irrelevant to scene understanding. We focus directly on meaningful semantic features!

2/n 🎯 Our key insight: instead of predicting future RGB frames directly, we forecast how semantic features from Vision Foundation Models (VFMs) evolve over time.

1/n 🚀 Excited to share our latest work: DINO-Foresight, a new framework for predicting the future states of scenes using Vision Foundation Model features!

Links to the arXiv and Github 👇