Thrilled to present DIP at #ICCV2025! Great discussions and insightful questions during the poster session. Thank you to everyone who stopped by!

24.10.2025 01:46 — 👍 7 🔁 0 💬 1 📌 0@ssirko.bsky.social

PhD student in visual representation learning at Valeo.ai and Sorbonne Université (MLIA)

Thrilled to present DIP at #ICCV2025! Great discussions and insightful questions during the poster session. Thank you to everyone who stopped by!

24.10.2025 01:46 — 👍 7 🔁 0 💬 1 📌 0

Happy to represent Ukraine at #ICCV2025 . Come see my poster today at 11:45 (#399)!

21.10.2025 19:35 — 👍 17 🔁 2 💬 0 📌 0Come say hi to our poster October 21st at 11:45 poster session 1 (#399)! We introduce unsupervised post-training of ViTs that enhances dense features for in-context tasks.

First conference as a PhD student, really excited to meet new people.

Our recent research will be presented at @iccv.bsky.social! #ICCV2025

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

Another great event for @valeoai.bsky.social team: a PhD defense of Corentin Sautier.

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (learning self-sup LiDAR features), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks w/o labels).

So excited to attend the PhD defense of @bjoernmichele.bsky.social at @valeoai.bsky.social! He’s presenting his research results of the last 3 years in 3D domain adaptation: SALUDA (unsupervised DA), MuDDoS (multimodal UDA), TTYD (source-free UDA).

06.10.2025 12:18 — 👍 12 🔁 2 💬 2 📌 0

Work done in collaboration with

@spyrosgidaris.bsky.social @vobeckya.bsky.social @abursuc.bsky.social and Nicolas Thome

Paper: arxiv.org/abs/2506.18463

Github: github.com/sirkosophia...

6/n Benefits 💪

- < 9h on a single A100 gpu.

- Improves across 6 segmentation benchmarks

- Boosts performance for in-context depth prediction.

- Plug-and-play for different ViTs: DINOv2, CLIP, MAE.

- Robust in low-shot and domain shift.

5/n Why is DIP unsupervised?

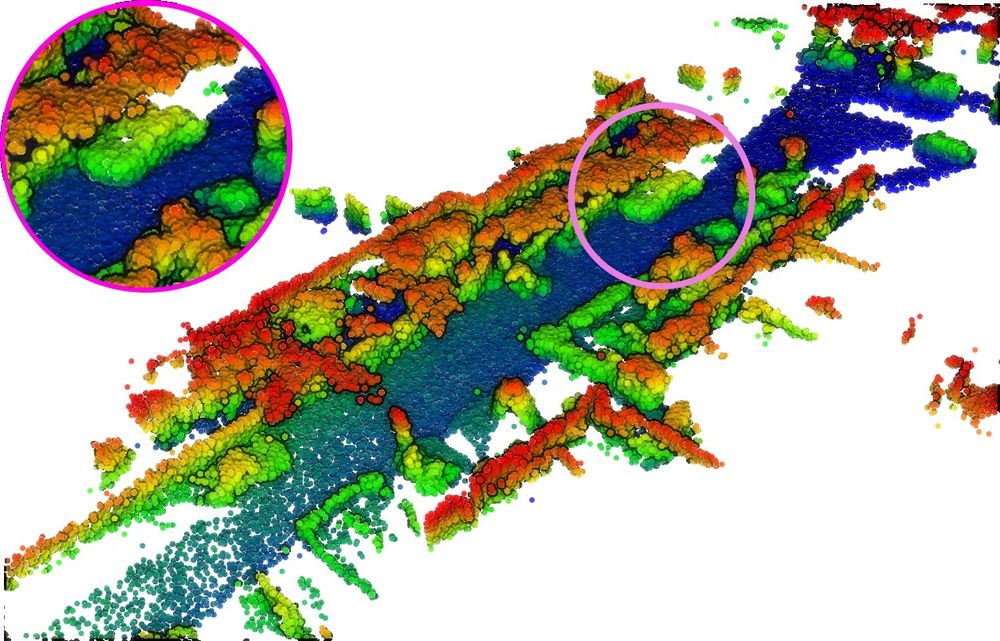

DIP doesn't require manually annotated segmentation masks for its post-training. To accomplish this, it leverages Stable Diffusion (via DiffCut) alongside DINOv2R features to automatically construct in-context pseudo-tasks for its post-training.

4/n Meet Dense In-context Post-training (DIP)! 🔄

- Meta-learning inspired: adopts episodic training principles

- Task-aligned: Explicitly mimics downstream dense in-context tasks during post-training.

- Purpose-built: Optimizes the model for dense in-context performance.

3/n Most unsupervised (post-)training methods for dense in-context scene understanding rely on self-distillation frameworks with (somewhat) complicated objectives and network components. Hard to interpret, tricky to tune.

Is there a simpler alternative? 👀

2/n What is dense in-context scene understanding?

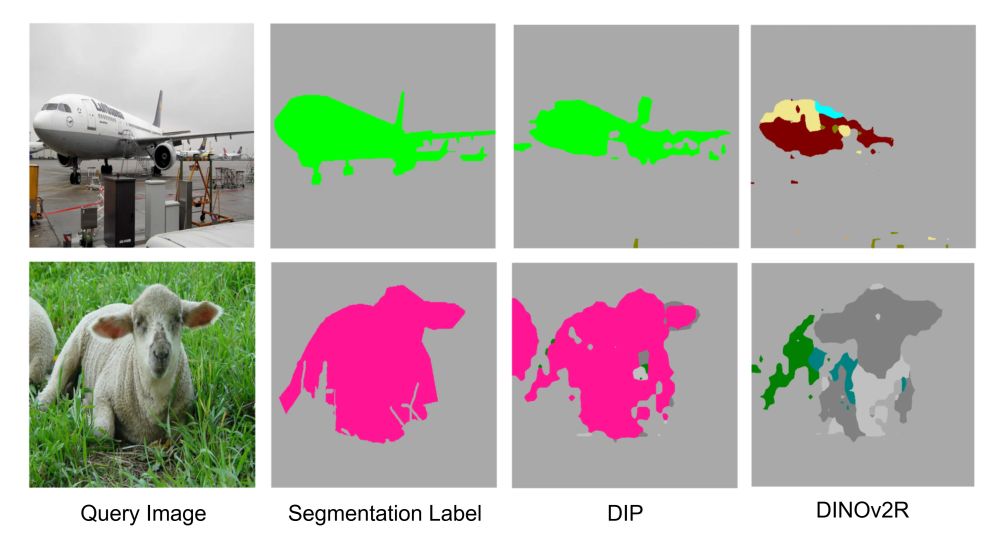

Formulate dense prediction tasks as nearest-neighbor retrieval problems using patch feature similarities between query and the labeled prompt images (introduced in @ibalazevic.bsky.social et al.’s HummingBird; figure below from their work).

1/n 🚀New paper out - accepted at #ICCV2025!

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

🚀Thrilled to introduce JAFAR—a lightweight, flexible, plug-and-play module that upsamples features from any Foundation Vision Encoder to any desired output resolution (1/n)

Paper : arxiv.org/abs/2506.11136

Project Page: jafar-upsampler.github.io

Github: github.com/PaulCouairon...

Our paper "LiDPM: Rethinking Point Diffusion for Lidar Scene Completion" got accepted to IEEE IV 2025!

tldr: LiDPM enables high-quality LiDAR completion by applying a vanilla DDPM with tailored initialization, avoiding local diffusion approximations.

Project page: astra-vision.github.io/LiDPM/

🔥🔥🔥 CV Folks, I have some news! We're organizing a 1-day meeting in center Paris on June 6th before CVPR called CVPR@Paris (similar as NeurIPS@Paris) 🥐🍾🥖🍷

Registration is open (it's free) with priority given to authors of accepted papers: cvprinparis.github.io/CVPR2025InPa...

Big 🧵👇 with details!

This amazing team ❤️

27.01.2025 17:01 — 👍 19 🔁 3 💬 1 📌 0As I haven't found it out there yet, I made the Women in computer vision started pack.

Many more missing, please let me know how is already in bsky to add them!

go.bsky.app/BowzivT