Cool!

31.07.2025 18:13 — 👍 3 🔁 0 💬 0 📌 0

David G. Clark

@david-g-clark.bsky.social

Theoretical neuroscientist Grad student @ Columbia dclark.io

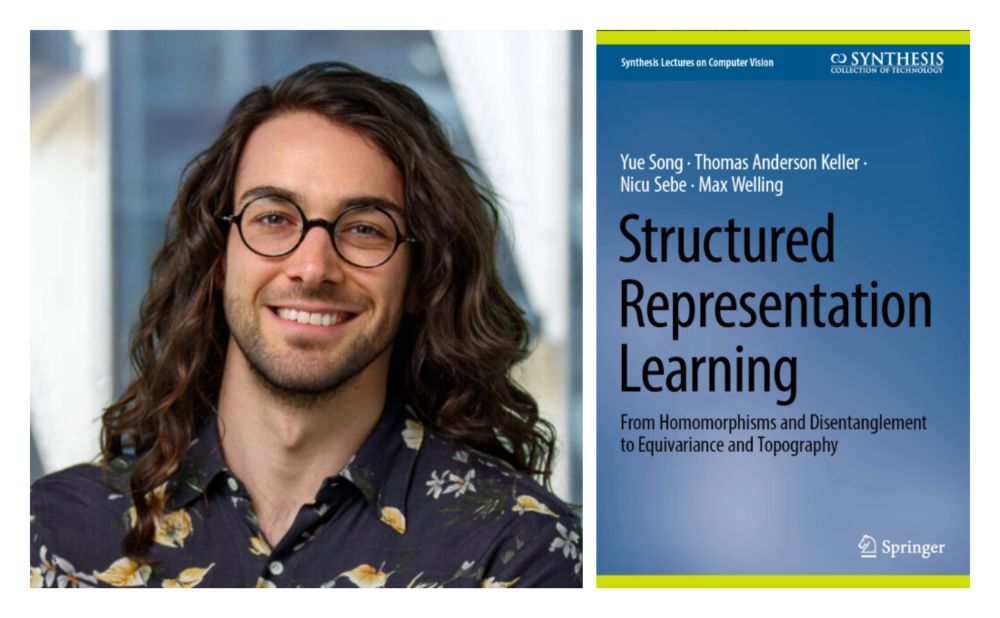

#KempnerInstitute research fellow @andykeller.bsky.social and coauthors Yue Song, Max Welling and Nicu Sebe have a new book out that introduces a framework for developing equivariant #AI & #neuroscience models. Read more:

kempnerinstitute.harvard.edu/news/kempner...

#NeuroAI

When neurons change, but behavior doesn’t: Excitability changes driving representational drift

New preprint of work with Christian Machens: www.biorxiv.org/content/10.1...

The summer schools at Les Houches are a magnificent tradition. I was honored to lecture there in 2023, and my notes now are published as "Ambitions for theory in the physics of life." #physics #physicsoflife scipost.org/SciPostPhysL...

25.07.2025 21:57 — 👍 22 🔁 6 💬 2 📌 2

Trying to train RNNs in a biologically plausible (local) way? Well, try our new method using predictive alignment. Paper just out in Nat. Com. Toshitake Asabuki deserves all the credit!

www.nature.com/articles/s41...

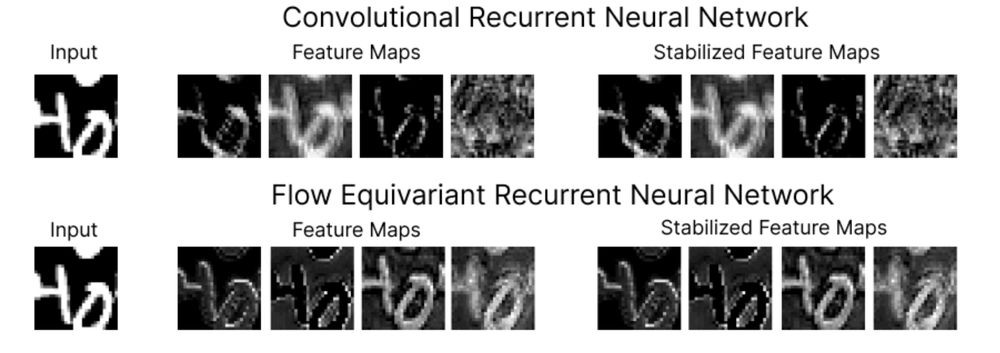

New in the #DeeperLearningBlog: #KempnerInstitute research fellow @andykeller.bsky.social introduces the first flow equivariant neural networks, which reflect motion symmetries, greatly enhancing generalization and sequence modeling.

bit.ly/451fQ48

#AI #NeuroAI

Excited!

16.07.2025 19:05 — 👍 30 🔁 1 💬 3 📌 0

🆒

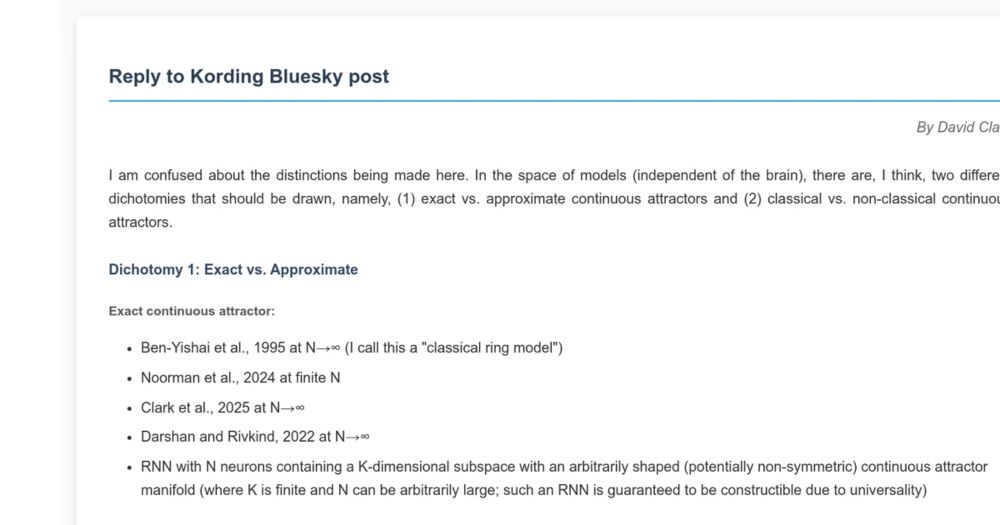

14.07.2025 22:24 — 👍 2 🔁 0 💬 0 📌 0

Independent of how one defines “mechanism,” a reasonable program seems to be:

1. Figure out if (approx) attractor dynamics occur

2. If so, figure out what kind of model is being implemented

Where nailing each step requires various manipulations (or a connectome) beyond passive neural recordings. Agree?

Thanks a lot for the post! Some thoughts:

claude.ai/public/artif...

Exploring neural manifolds across a wide range of intrinsic dimensions https://www.biorxiv.org/content/10.1101/2025.07.01.662533v1

04.07.2025 19:15 — 👍 6 🔁 3 💬 0 📌 0

Humans and animals can rapidly learn in new environments. What computations support this? We study the mechanisms of in-context reinforcement learning in transformers, and propose how episodic memory can support rapid learning. Work w/ @kanakarajanphd.bsky.social : arxiv.org/abs/2506.19686

26.06.2025 19:01 — 👍 73 🔁 24 💬 3 📌 1

Spatially and non-spatially tuned hippocampal neurons are linear perceptual and nonlinear memory encoders https://www.biorxiv.org/content/10.1101/2025.06.23.661173v1

25.06.2025 06:15 — 👍 2 🔁 1 💬 0 📌 0

How do task dynamics impact learning in networks with internal dynamics?

Excited to share our ICML Oral paper on learning dynamics in linear RNNs!

with @clementinedomine.bsky.social @mpshanahan.bsky.social and Pedro Mediano

openreview.net/forum?id=KGO...

On behalf of Nicole Carr: new preprint from the Chand/Moore labs! High-resolution laminar recordings reveal structure-function relationships in monkey V1.

1/4

www.biorxiv.org/content/10.1...

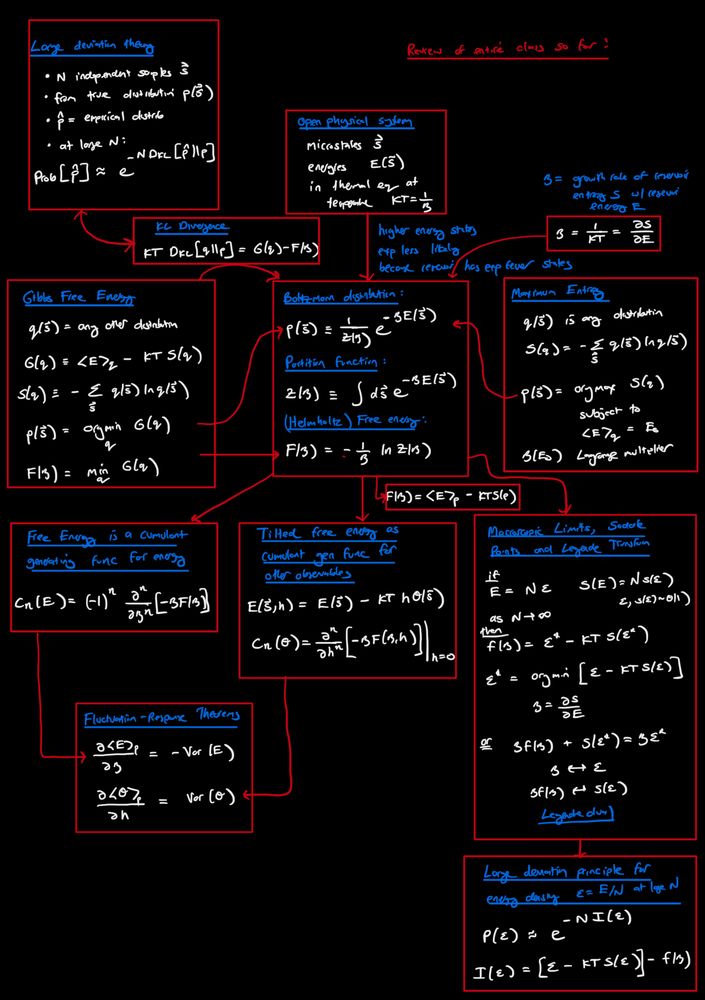

Many recent posts on free energy. Here is a summary from my class “Statistical mechanics of learning and computation” on the many relations between free energy, KL divergence, large deviation theory, entropy, Boltzmann distribution, cumulants, Legendre duality, saddle points, fluctuation-response…

02.05.2025 19:22 — 👍 63 🔁 9 💬 1 📌 0
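One standard relation of this kind, given as a sketch in generic notation (the symbols $E$, $\beta$, $q$, and $p$ are assumptions made here for illustration and are not taken from the class summary): for a Boltzmann distribution $p$ with partition function $Z$, the variational free energy of any trial distribution $q$ exceeds the true free energy by a KL divergence.

$$
p(x) = \frac{e^{-\beta E(x)}}{Z}, \qquad F = -\frac{1}{\beta}\log Z,
$$

$$
F[q] \equiv \langle E \rangle_q - \frac{1}{\beta} S[q] = F + \frac{1}{\beta}\, D_{\mathrm{KL}}(q \,\|\, p) \;\ge\; F .
$$

This follows from expanding $D_{\mathrm{KL}}(q \,\|\, p) = -S[q] + \beta \langle E \rangle_q + \log Z$, with equality in the bound exactly when $q = p$.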

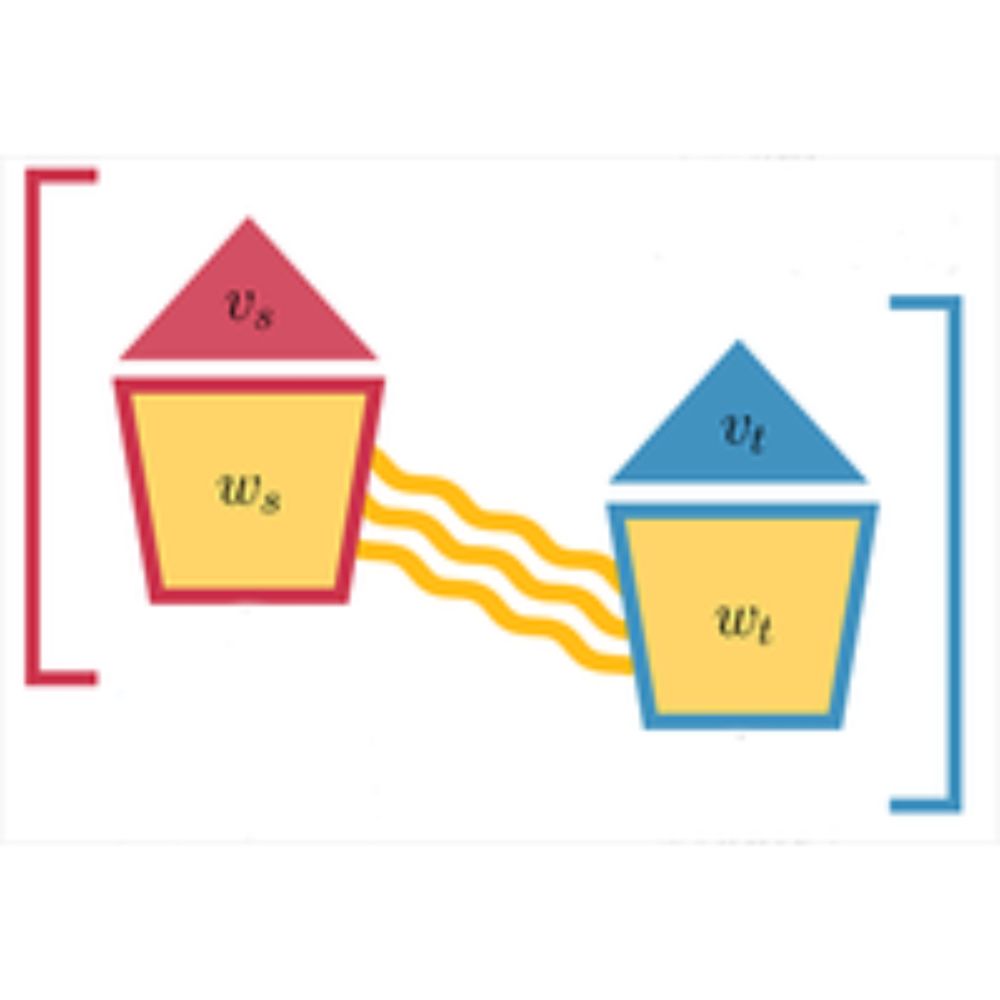

Our paper on the statistical mechanics of transfer learning is now published in PRL. Franz-Parisi meets Kernel Renormalization in this nice collaboration with friends in Bologna (F. Gerace) and Parma (P. Rotondo, R. Pacelli).

journals.aps.org/prl/abstract...

Neat! Thanks a ton for the responses -- very helpful. 🙂

This is a cool example.

Cool!

arxiv.org/abs/2504.19657

So, is the key message that eigendecomposition provides more insight than SVD (or similarly that we must consider the overlaps between singular vectors, which would be negative in the negative-eigenvalue case)?

24.04.2025 13:25 — 👍 3 🔁 0 💬 1 📌 0

Looks cool!

This assumes LR structure is linked to a neg eigval. But alternatives exist: stable linear dynamics w/ LR structure could have tiny bulk & nearly-unstable pos eigval (1-ε). Or nonlinear networks could have large bulk & large pos eigval. Neither shows "low-rank suppression," correct?
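The point raised above about singular-vector overlaps can be illustrated with a toy case. The sketch below is not taken from the linked paper; it assumes a symmetric rank-one matrix `W = sign * u uᵀ` (the function name `rank_one_summary` and its parameters are hypothetical). The SVD reports a singular value of 1 for either sign, so the sign of the eigenvalue only shows up in the overlap between the leading left and right singular vectors.

```python
import numpy as np


def rank_one_summary(sign, n=50, seed=0):
    """Return (leading eigenvalue, leading singular value, singular-vector overlap)
    for the symmetric rank-one matrix W = sign * u u^T with unit-norm u."""
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(n)
    u /= np.linalg.norm(u)

    W = sign * np.outer(u, u)

    # Eigendecomposition: the only nonzero eigenvalue equals `sign`.
    eigvals = np.linalg.eigvalsh(W)
    top_eig = eigvals[np.argmax(np.abs(eigvals))]

    # SVD: singular values are non-negative, so the sign is pushed
    # into the relative orientation of the left and right vectors.
    U, s, Vt = np.linalg.svd(W)
    overlap = U[:, 0] @ Vt[0]

    return float(top_eig), float(s[0]), float(overlap)


for sign in (+1.0, -1.0):
    eig, sv, ov = rank_one_summary(sign)
    print(f"sign={sign:+.0f}  eigenvalue={eig:+.3f}  "
          f"singular value={sv:.3f}  overlap={ov:+.3f}")
```

In this toy case the overlap is +1 for the positive eigenvalue and -1 for the negative one, while the singular value is the same in both. For non-symmetric low-rank structure the overlap can take any value in between.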

🚨new paper alert

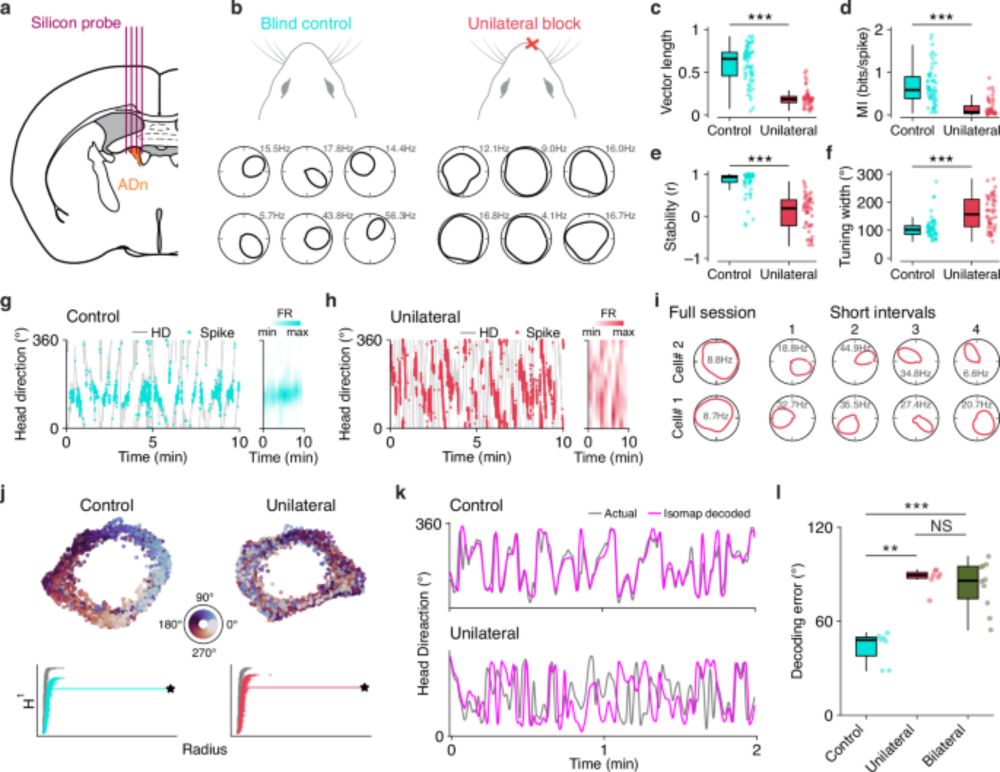

Blind mice use stereo olfaction, comparing smells between nostrils, to maintain a stable sense of direction. Blocking this ability disrupts their internal compass.

Kudos to @kasumbisa.bsky.social! Another cool chapter of the Trenholm-Peyrache collab😉

www.nature.com/articles/s41...

Woah

28.03.2025 11:46 — 👍 1 🔁 0 💬 0 📌 0

Also! Check out "A theory of multi-task computation and task selection" (poster 2-98) by Owen Marschall, with me and Ashok Litwin-Kumar. Owen analyzes RNNs that embed lots of tasks in different subspaces and transition between a "spontaneous" state and task-specific dynamics via phase transitions.

26.03.2025 21:38 — 👍 2 🔁 0 💬 0 📌 0

How does barcode activity in the hippocampus enable precise and flexible memory? How does this relate to key-value memory systems? Our work (w/ Jack Lindsey, Larry Abbott, Dmitriy Aronov, @selmaan.bsky.social ) is now in eLife as a reviewed preprint: elifesciences.org/reviewed-pre...

24.03.2025 19:46 — 👍 21 🔁 9 💬 1 📌 2

I'll be presenting this at #cosyne2025 (poster 3-50)!

I'll also be giving a talk at the "Collectively Emerged Timescales" workshop on this work, plus other projects on emergent dynamics in neural circuits.

Looking forward to seeing everyone in 🇨🇦!

Our paper on key-value memory in the brain (updated from the preprint version) is now out in Neuron @cp-neuron.bsky.social

authors.elsevier.com/a/1kqIJ3BtfH...

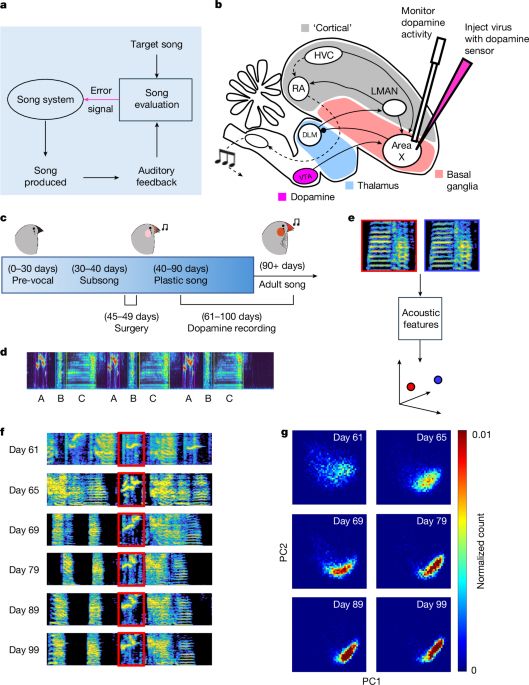

We know dopamine guides reinforcement learning in externally rewarded behaviors—think a mouse learning to press a lever for food or juice. But what about skills like speech or athletics, where there’s no explicit external reward, just an internal goal to match? 🧵 (1/7)

17.03.2025 17:48 — 👍 22 🔁 9 💬 2 📌 0

Transformers employ different strategies through training to minimize loss, but how do these trade off and why?

Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns.

Read on 🔎⏬

New paper from Kasdin and Duffy (feat. @pantamallion.bsky.social, @neurokim.bsky.social, and others) on how dopamine shapes song-learning trajectories in juvenile birds.

www.nature.com/articles/s41...