13.12.2024 13:06 — 👍 2 🔁 0 💬 1 📌 0


@asaf-yehudai.bsky.social


Check out our full leaderboard here:

huggingface.co/spaces/ibm/J...

Many more details are in the paper:

huggingface.co/papers/2412....

Thanks to the amazing collaborators: Ariel Gera, Odellia Boni, @yperlitz.bsky.social, Roy Bar-Haim, and Lilach Eden from IBM Research.

Overall, we found:

1⃣ a strong correlation between a judge's ranking ability and its decisiveness

2⃣ a negative correlation between its ranking ability and its tendency toward system-specific biases

Surprisingly, we found that self-bias is less prevalent than we expected

13.12.2024 10:16 — 👍 0 🔁 0 💬 1 📌 0

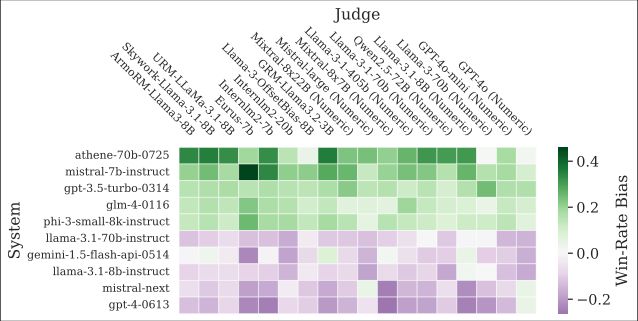

Second, we define a new type of bias:

System-specific bias

where a judge systematically favors or penalizes a specific system

Our results show large biases that affect the system rankings
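The thread doesn't spell out how system-specific bias is measured. The sketch below uses one naive way to quantify "a judge favors or penalizes a specific system", offered only as a hedged illustration. It takes the average gap between the judge's and the gold win-rates of that system against every other system. The function name `system_bias`, the nested-dict layout, and the toy numbers are all hypothetical and may differ from the paper's actual definition.

```python
# Hypothetical bias measure: NOT necessarily the paper's definition.

def system_bias(system, judge_wr, gold_wr):
    """Average gap between judge and gold win-rates of `system` against every
    other system. wr[a][b] is the win-rate of system a over system b.
    Positive -> the judge favors `system`; negative -> it penalizes it."""
    opponents = [o for o in gold_wr[system] if o != system]
    gaps = [judge_wr[system][o] - gold_wr[system][o] for o in opponents]
    return sum(gaps) / len(gaps)


# Toy win-rate tables (made-up numbers for illustration only).
gold = {"A": {"B": 0.6, "C": 0.7}, "B": {"A": 0.4, "C": 0.55}, "C": {"A": 0.3, "B": 0.45}}
judge = {"A": {"B": 0.8, "C": 0.9}, "B": {"A": 0.2, "C": 0.5}, "C": {"A": 0.1, "B": 0.5}}
for s in gold:
    print(s, round(system_bias(s, judge, gold), 3))
```

This naive gap does not separate system-specific bias from decisiveness. A decisive judge would show a positive gap for every strong system. One option would be to measure each system's deviation from the judge's own fitted win-rate curve rather than from gold directly.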

Analyzing these figures, we found an emergent judge behavior:

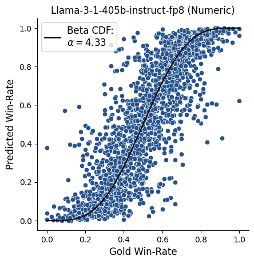

We call it decisiveness!

Decisive judges favor stronger systems even more than humans do!

We measure it by fitting a curve to the empirical win-rates
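The thread doesn't say which curve is fitted, so the following is only a hedged sketch. It assumes a hypothetical one-parameter S-curve f(x) = x^a / (x^a + (1 - x)^a). The curve maps a system's gold win-rate x to the judge's win-rate. With a = 1 the judge tracks humans exactly, and a > 1 means it pushes strong systems up and weak ones down, i.e. it is more decisive. The names `s_curve` and `fit_decisiveness` are hypothetical, and the fit is a plain grid search over a.

```python
# Hypothetical decisiveness fit: NOT necessarily the paper's curve or fitting method.

def s_curve(x, a):
    """Symmetric S-curve through (0.5, 0.5); a == 1 is the identity line,
    a > 1 pushes win-rates toward 0 and 1 (a more 'decisive' judge)."""
    return x**a / (x**a + (1 - x)**a)


def fit_decisiveness(gold, judge, grid=None):
    """Return the exponent a on a grid that minimizes the squared error
    between s_curve(gold, a) and the judge's observed win-rates."""
    if grid is None:
        grid = [0.1 * k for k in range(1, 101)]  # candidate a values 0.1 .. 10.0

    def sse(a):
        return sum((s_curve(g, a) - j) ** 2 for g, j in zip(gold, judge))

    return min(grid, key=sse)


# Toy data: a judge that exaggerates the gold win-rates.
gold = [0.2, 0.35, 0.5, 0.65, 0.8]
judge = [s_curve(g, 3.0) for g in gold]
print(round(fit_decisiveness(gold, judge), 2))  # 3.0
print(round(fit_decisiveness(gold, gold), 2))   # 1.0
```

A single symmetric parameter keeps "decisiveness" as one comparable number per judge. A real analysis might prefer a continuous optimizer over a grid.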

What does JuStRank tell us about general judge behavior?

To find out, we turn to the system preference task:

given a pair of systems, which one is better?

We plot the gold win-rates against the judge-predicted win-rates
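The judge-predicted side of such a plot has to come from the judge's per-response scores. Here is a minimal sketch. It assumes every system answered the same instructions and that a system "wins" an instruction when the judge scores its response higher, with ties counted as half a win. The function name `pairwise_win_rates` and the data layout are hypothetical.

```python
from itertools import permutations


def pairwise_win_rates(scores):
    """scores[system] = judge scores, one per shared instruction (hypothetical layout).

    Returns wr[(a, b)]: fraction of instructions where a's response outscores b's;
    ties count as half a win.
    """
    wr = {}
    for a, b in permutations(scores, 2):
        pairs = list(zip(scores[a], scores[b]))
        wins = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x, y in pairs)
        wr[(a, b)] = wins / len(pairs)
    return wr


scores = {"sys1": [8, 7, 9, 6], "sys2": [6, 7, 5, 8]}
wr = pairwise_win_rates(scores)
print(wr[("sys1", "sys2")])  # 0.625
print(wr[("sys2", "sys1")])  # 0.375
```

Each (gold win-rate, judge win-rate) pair for the same two systems then becomes one point in the plot.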

With JuStRank we found:

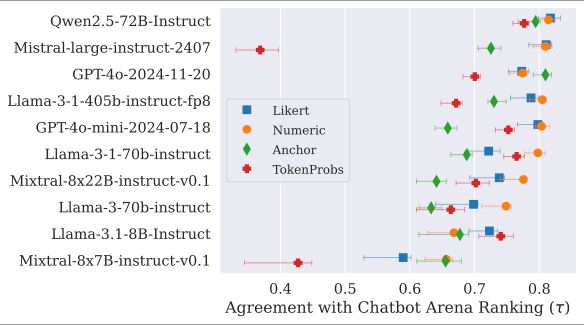

1⃣ Smaller dedicated judges are on par with big ones

2⃣ An LLM judge's realization matters a lot

3⃣ Comparative judgment is not the best choice for most judges

🕺💃

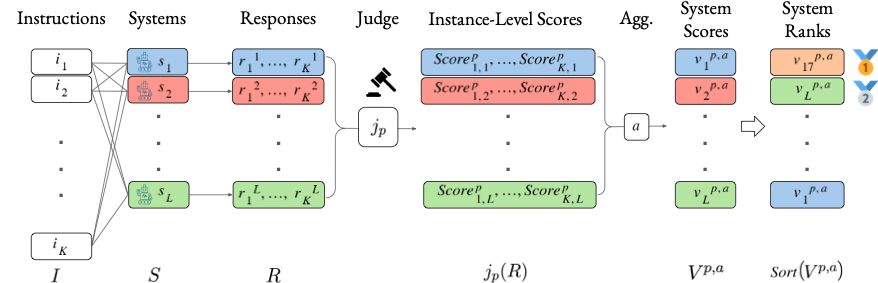

So how did we do it?

For LLM judges, we used 4 distinct realizations

➕ Reward models

These judges score the responses of 64 systems

and we aggregate the scores into a system ranking for each judge

Then we compare each judge's ranking to Arena's gold ranking
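The thread doesn't say how scores are aggregated or which agreement measure is used. The sketch below assumes mean aggregation and Kendall's tau (tau-a, no ties). It ranks systems by their mean judge score and measures agreement with a gold ranking. The function names and toy data are hypothetical.

```python
from itertools import combinations
from statistics import mean


def rank_systems(scores):
    """Order systems by mean judge score, best first (mean aggregation is an assumption)."""
    return sorted(scores, key=lambda s: mean(scores[s]), reverse=True)


def kendall_tau(rank_a, rank_b):
    """Kendall's tau-a between two rankings of the same items (no ties)."""
    pos_a = {s: i for i, s in enumerate(rank_a)}
    pos_b = {s: i for i, s in enumerate(rank_b)}
    concordant = discordant = 0
    for x, y in combinations(rank_a, 2):
        # Same sign of position difference in both rankings -> pair ordered the same way.
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) > 0:
            concordant += 1
        else:
            discordant += 1
    n = len(rank_a)
    return (concordant - discordant) / (n * (n - 1) / 2)


scores = {"A": [9, 8], "B": [7, 7], "C": [5, 6], "D": [6, 9]}  # toy judge scores
gold_rank = ["A", "B", "D", "C"]                                 # toy gold ranking
judge_rank = rank_systems(scores)
print(judge_rank)                                     # ['A', 'D', 'B', 'C']
print(round(kendall_tau(judge_rank, gold_rank), 3))  # 0.667
```

With 64 systems, tau compares all 64·63/2 = 2016 system pairs. One swapped pair therefore costs far less than in this 4-system toy.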

There are many new judge benchmarks

But most focus on evaluating the judge's ability to choose a better response

We focus on the judge's ability to choose a better system

New preprint! ✨

Interested in LLM-as-a-Judge?

Want to find the best judge for ranking your systems?

Our new work is just for you:

"JuStRank: Benchmarking LLM Judges for System Ranking"

🕺💃

arxiv.org/abs/2412.09569