#dktech

14.05.2025 19:51 — 👍 3 🔁 0 💬 0 📌 0

Dan Saattrup Smart

@saattrupdan.com.bsky.social

Researcher and consultant in low-resource NLP, with a focus on evaluation. saattrupdan.com

NoDaLiDa 2027 will be held at the Center of Language Technology at the University of Copenhagen!!

#nodalida #nlp

Wanna keep up with our @milanlp.bsky.social lab? Here is a starter pack of current and former members:

bsky.app/starter-pack...

NoDaLiDa x Baltic-HLT 2025 is a wrap!

Thank you all for joining for a fruitful conference! Safe trip home and see you in Copenhagen or Vilnius in 2027!!

#nlp #nodalida #baltichlt

Amazing, well done! Have you conducted any experiments with finetuning LLMs on the data?

06.03.2025 13:44 — 👍 0 🔁 0 💬 0 📌 0

WebFAQ: Massive Multilingual Q&A Dataset

- 96M QA pairs extracted from schema.org/FAQPage annotations

- 75 languages with standardized structured markup

- Leverages existing web publisher content intent

- No synthetic data generation needed

huggingface.co/datasets/PaD...

🚀 Thank you all for waiting! The full program of NoDaLiDa x Baltic-HLT is online:

www.nodalida-bhlt2025.eu/program

#nodalida #baltichlt #nlp #nlproc

Screenshot of 'SHADES: Towards a Multilingual Assessment of Stereotypes in Large Language Models.' SHADES is in multiple grey colors (shades).

⚫⚪ It's coming...SHADES. ⚪⚫

The first ever resource of multilingual, multicultural, and multigeographical stereotypes, built to support nuanced LLM evaluation and bias mitigation. We have been working on this around the world for almost **4 years** and I am thrilled to share it with you all soon.

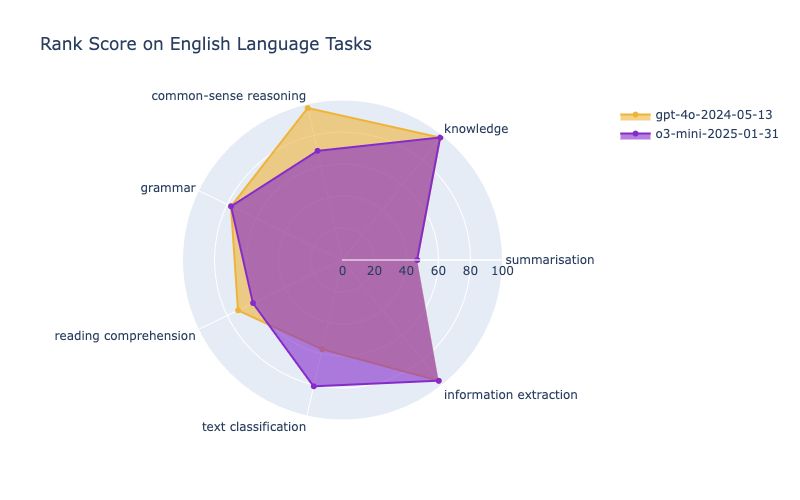

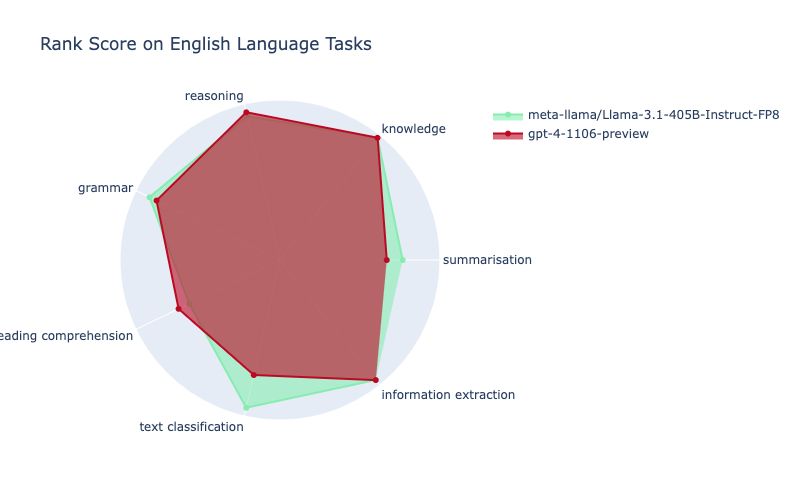

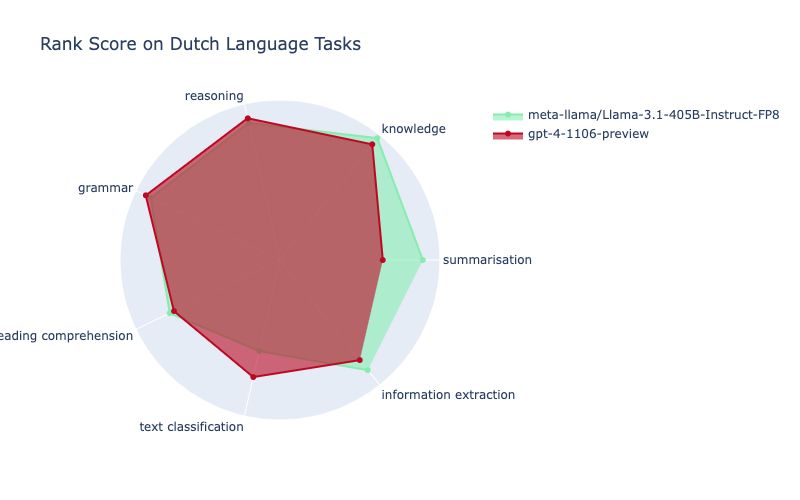

See the full English leaderboard here: scandeval.com/leaderboards...

You can make your own radial plots, like the one above, using this tool: scandeval.com/extras/radia...

(4/4)

If we dig into more granular evaluations, we see that the main differences between the two models are that o3-mini achieves higher text classification performance, whereas gpt-4o performs better at common-sense reasoning.

(3/4)

Overall, the gpt-4o model achieves a slightly better rank score of 1.46, compared to o3-mini's 1.51. Here lower is better, with 1 being the best score possible (indicating that the model beats all other models at all tasks).

We use the default 'medium' reasoning effort of o3-mini here.

(2/4)

Some new evaluation results from the European evaluation benchmark ScandEval! This time for the new o3-mini model by OpenAI - how well does it compare to the existing gpt-4o model on English tasks?

(1/4)

#nlp #evaluation #reasoning #llm #o3

Check out the full leaderboards on scandeval.com, which also include results for Llama-3.3-70B, Qwen2.5-72B, QwQ-32B-preview, Gemma-27B and Nemotron-4-340B.

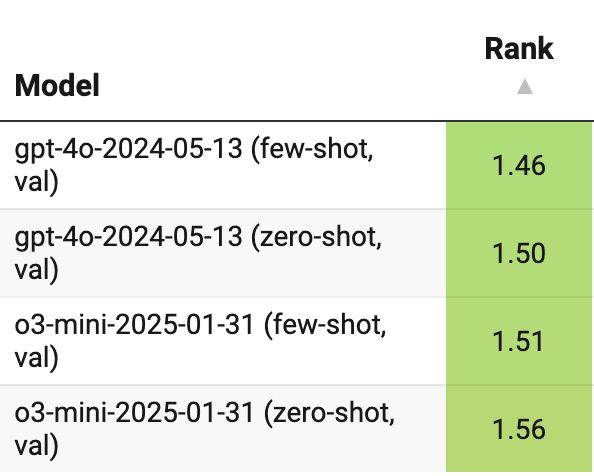

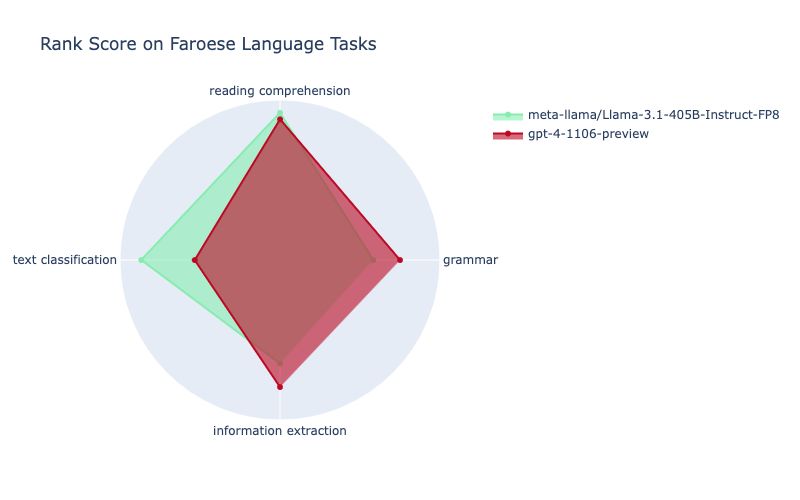

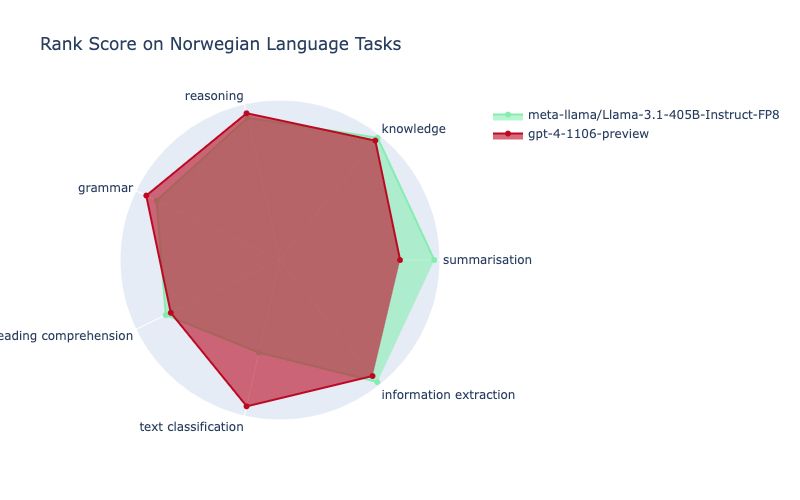

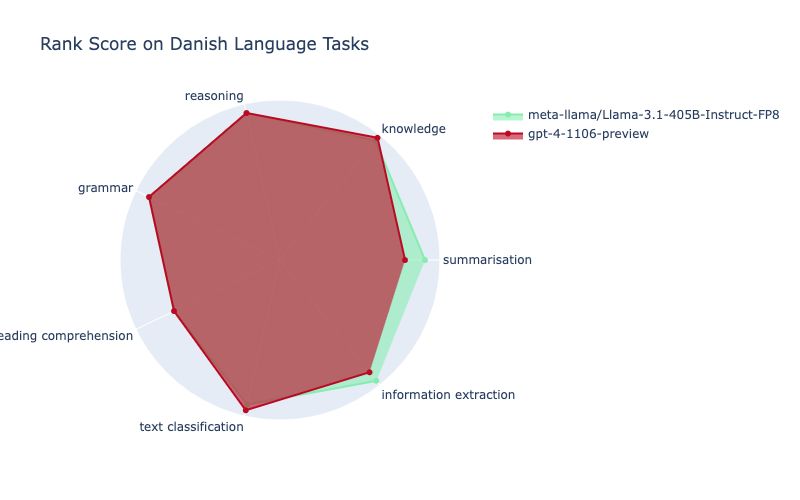

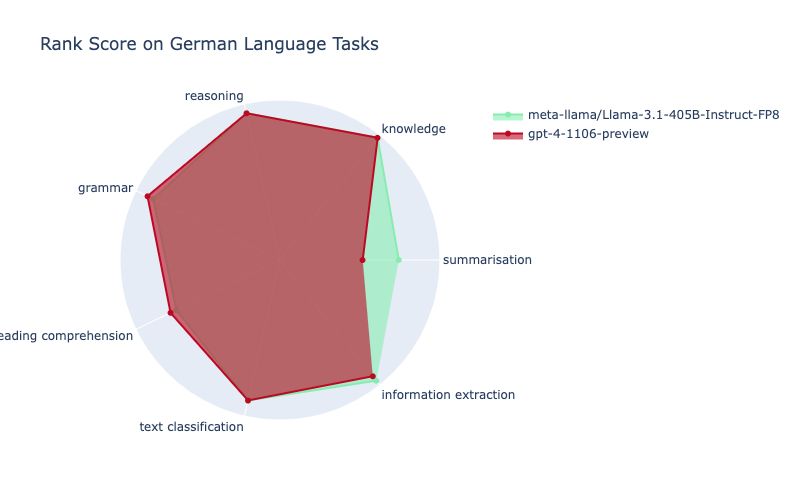

20.01.2025 14:01 — 👍 0 🔁 0 💬 0 📌 0

On average, the 405B Llama-3.1 model achieves a solid second place with a ScandEval rank of 1.53, while GPT-4-turbo is in the lead with a ScandEval rank of 1.39 🎉

20.01.2025 14:01 — 👍 0 🔁 0 💬 1 📌 0

However, for Icelandic, Faroese and Norwegian, it's not quite there yet.

20.01.2025 14:01 — 👍 0 🔁 0 💬 1 📌 0

For Danish, Swedish, Dutch, German and English, it turns out that it is roughly on par with GPT-4-turbo!

20.01.2025 14:01 — 👍 0 🔁 0 💬 1 📌 0

Recently, we got a lot of new ScandEval evaluations of large LLMs, including the 405B Llama-3.1 model. So how well does it perform?

A 🧵 (1/n)

#llm #evaluation

The image shows an illustration titled "Hygge Web Data" featuring three cartoon animals - a fox, an owl, and what appears to be a bear or similar animal - sitting at a table or surface reviewing various documents and papers. The style is cute and whimsical, with the animals drawn in a simple, friendly manner. Each animal is looking at different papers with sketched symbols, text, and designs on them. The illustration has a gentle, cozy feel to it, fitting with the "hygge" (Danish concept of coziness and comfort) mentioned in the title.

Introducing Scandi-fine-web-cleaner, a decoder model trained to remove low-quality web content from FineWeb 2 for Danish and Swedish

- Uses FineWeb-c community annotations

- 90%+ precision + minimal compute required

- Enables efficient filtering of 43M+ documents

huggingface.co/davanstrien/...

User-driven fact-checking can mean that minorities' interests are overlooked, warns ITU associate professor @lrossi.bsky.social.

Claims about, for example, Greenlandic affairs risk escaping fact-checking simply because there are few Greenlandic users compared with other groups.

www.berlingske.dk/kultur/faceb...

#dkai

28.12.2024 13:14 — 👍 3 🔁 0 💬 0 📌 0

A minimalist illustration showing a packaged charger box labeled "one Union one Charger." The box features an image of a blue charger with the European Union flag symbol and a USB-C cable. The scene is set within a holiday theme, with decorative Christmas trees, ornaments, and gift boxes surrounding the charger box. In the top right corner, there is a small EU flag symbol.

It’s time for THE charger.

Today, USB-C officially becomes the common standard for charging new mobile electronic devices in the EU.

It means better charging technology, reduced e-waste, and less fuss to find the chargers you need!

#DigitalEU

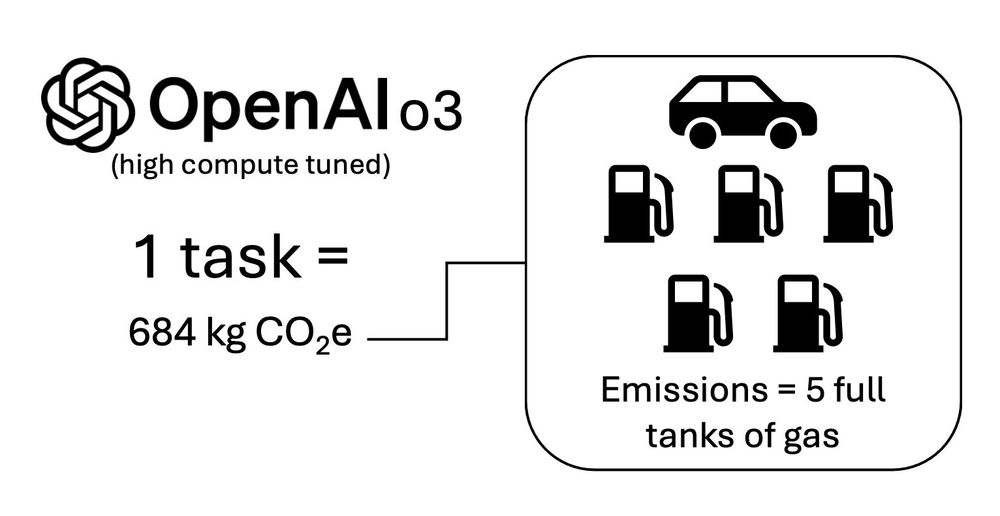

OpenAI o3 (high compute tuned): 1 task = 684 kg CO₂e. Emissions = 5 full tanks of gas

"Each task consumed approximately 1,785 kWh of energy—about the same amount of electricity an average U.S. household uses in two months"

This is one per-task estimate from Salesforce's head of sustainability -->>

www.linkedin.com/posts/bgamaz...

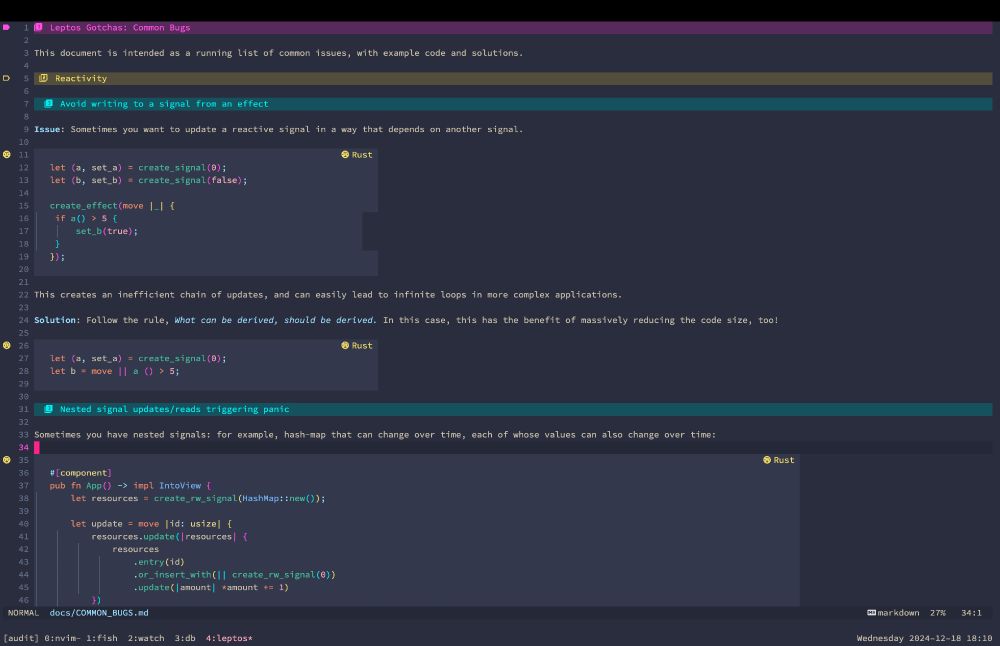

A markdown preview within Neovim, showing syntax-highlighted code blocks, including gutter icons for each filetype, and custom rendering of headers, with unique colors for each level and a replacement of the hash syntax (###) with custom icons.

I'm so impressed with the markview #Neovim plugin. Look at the preview you get out of the box:

github.com/OXY2DEV/mark...

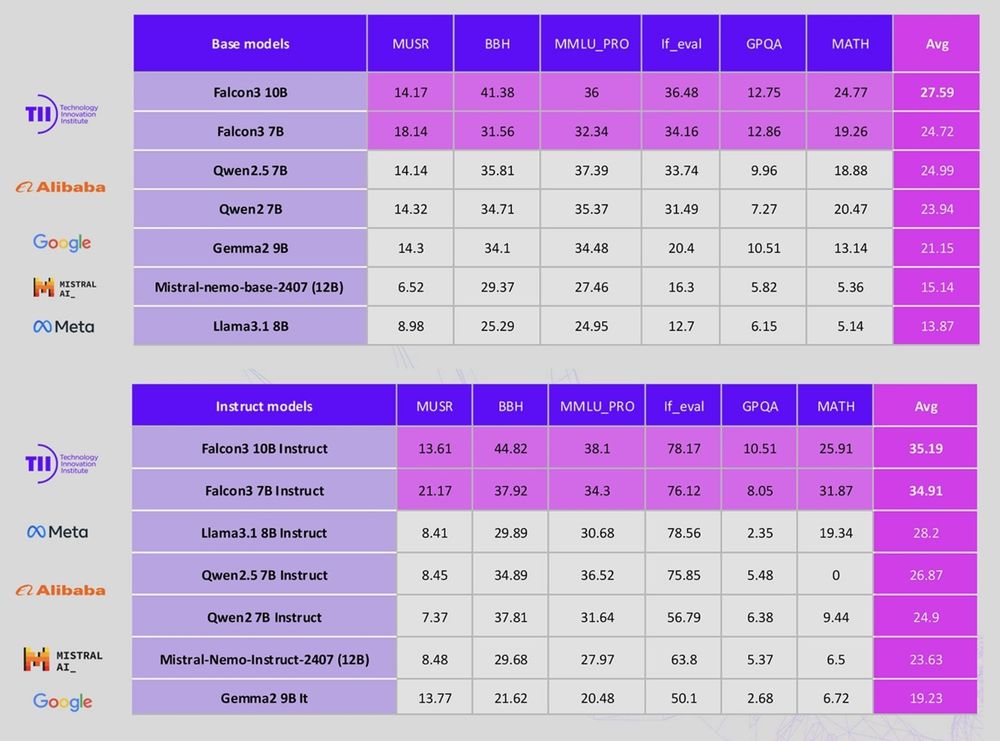

TII UAE's Falcon 3

1B, 3B, 7B, 10B (Base + Instruct) & 7B Mamba, trained on 14 trillion tokens!

- 1B-Base surpasses SmolLM2-1.7B and matches gemma-2-2b

- 3B-Base outperforms larger models like Llama-3.1-8B and Minitron-4B-Base

- 7B-Base is on par with Qwen2.5-7B in the under-9B category

40.7% with help from 15 annotators! 🇩🇰😎🔥

We have come a long way but are not quite at the finish line yet :) It really doesn't take many more annotations at this point.

Kind of dreaming that we can get a little final sprint in over the course of the week! Help out here: data-is-better-together-fineweb-c.hf.space/dataset/5a58...

Loving this Neovim plugin ❄️

Source: github.com/marcussimons...

Danish has gone from 0.1% -> 12.3% today! That corresponds to 123 texts annotated by 3 people.

Every annotation helps us towards the first goal of 1000 texts :)

Help annotate the dataset here: data-is-better-together-fineweb-c.hf.space/dataset/5a58... #dkai

Do you want to help improve the quality of Danish language models?

Join in and help with the annotation sprint! No experience is required - just go to the link and start annotating :)

huggingface.co/spaces/data-... #dkai #dktech

Longer post on LinkedIn: www.linkedin.com/posts/rasgaa...

Denmark Starter Pack for those of you in Malmö, the Öresund region, or just interested in Denmark and Danes.

News, newspapers, media, politics, organisations...

#danmark #danskar #köpenhamn #öresund #malmö #skåne #nyheter #tidningar #media #politik #starterpack

go.bsky.app/U2VkkfU

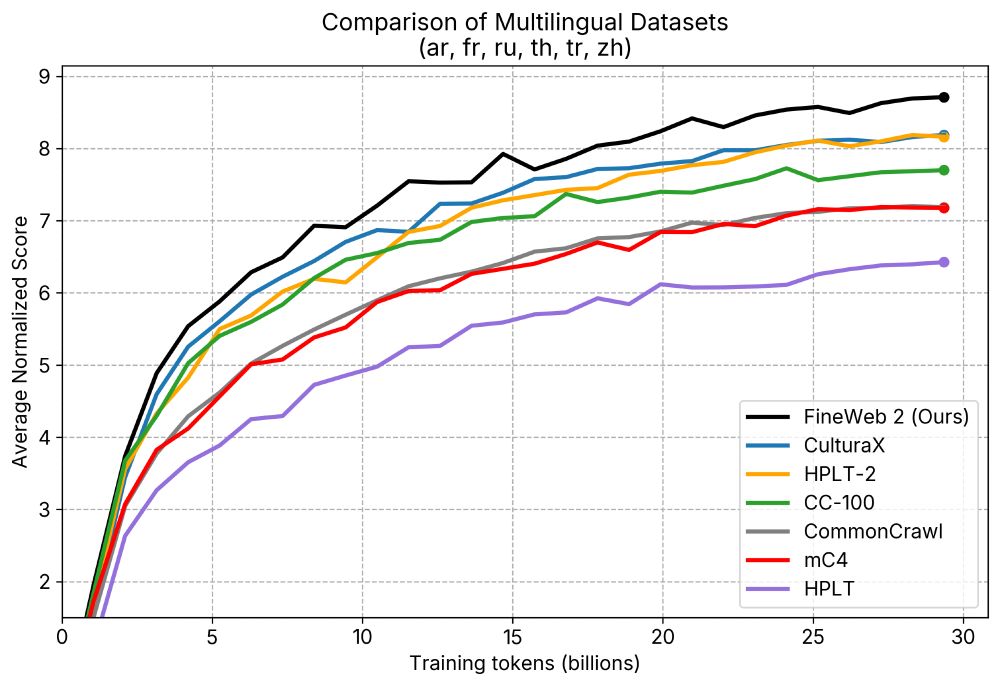

Announcing 🥂 FineWeb2: A sparkling update with 1000s of 🗣️languages.

We applied the same data-driven approach that led to SOTA English performance in🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.