@danielmisrael.bsky.social

Pretty shocking vulnerability that the community should be aware of!

11.03.2025 23:18 — 👍 3 🔁 0 💬 0 📌 0

Please check out the rest of the paper! We also cover how MARIA can be used for test-time scaling, how to initialize MARIA weights for efficient training, how MARIA representations differ, and more…

arxiv.org/abs/2502.06901

Thanks to my advisors Aditya Grover and @guyvdb.bsky.social

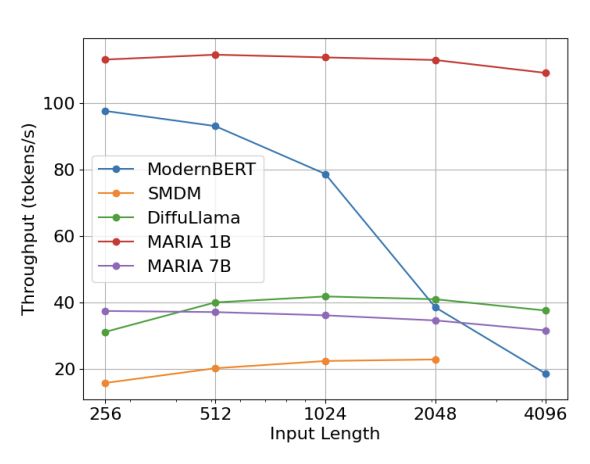

MARIA 1B achieves the best throughput, and MARIA 7B achieves throughput similar to DiffuLlama's while producing better samples, as previously noted. Here, we see that ModernBERT, despite being much smaller, does not scale well for masked infilling because it cannot use a KV cache.

14.02.2025 00:28 — 👍 0 🔁 0 💬 1 📌 0

We perform infilling on downstream data with 50 percent of the words masked. Using GPT4o-mini as a judge, we compute Elo scores for each model. MARIA 7B and 1B have the highest Elo ratings under the Bradley-Terry model.

14.02.2025 00:28 — 👍 0 🔁 0 💬 1 📌 0
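The Elo ratings mentioned in the post above can be derived from pairwise judge verdicts. Below is a minimal sketch, assuming each comparison yields a single winner and that every model wins at least once. The function names, the iterative fitting scheme (a standard minorization-maximization update for Bradley-Terry), the base rating of 1000, and the match data are illustrative assumptions, not the paper's code or results.

```python
import math
from collections import defaultdict

def fit_bradley_terry(matches, iters=100):
    """Fit Bradley-Terry strengths from (winner, loser) pairs.

    Assumes every model wins at least once; otherwise its strength
    collapses to zero and the log below is undefined.
    """
    wins = defaultdict(int)
    games = defaultdict(int)  # number of comparisons per unordered pair
    models = set()
    for winner, loser in matches:
        wins[winner] += 1
        games[frozenset((winner, loser))] += 1
        models.update((winner, loser))

    strength = {m: 1.0 for m in models}
    for _ in range(iters):
        updated = {}
        for i in models:
            denom = sum(
                games[frozenset((i, j))] / (strength[i] + strength[j])
                for j in models if j != i
            )
            updated[i] = wins[i] / denom
        # Rescale so the geometric mean of the strengths is 1.
        log_mean = sum(math.log(s) for s in updated.values()) / len(updated)
        strength = {m: s / math.exp(log_mean) for m, s in updated.items()}
    return strength

def to_elo(strength, base=1000.0):
    """Convert strengths to the Elo scale (400 points per factor of 10)."""
    return {m: base + 400.0 * math.log10(s) for m, s in strength.items()}

# Made-up verdicts for two hypothetical models: model_a wins 3 of 4.
matches = [("model_a", "model_b")] * 3 + [("model_b", "model_a")]
elo = to_elo(fit_bradley_terry(matches))
print(elo)
```

With this mapping, the Elo win probability 1 / (1 + 10^((R_j - R_i) / 400)) equals the Bradley-Terry probability p_i / (p_i + p_j), so the two descriptions are interchangeable.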

MARIA achieves far better perplexity on downstream masked infilling test sets than either using ModernBERT autoregressively or using discrete diffusion models. Based on parameter counts, MARIA presents the most effective way to scale models for masked token infilling.

14.02.2025 00:28 — 👍 0 🔁 0 💬 1 📌 0

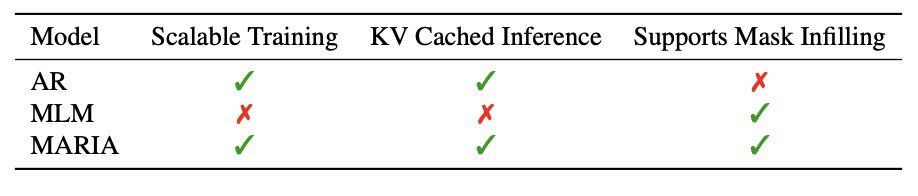

We can get the best of both worlds with MARIA: train a linear decoder to combine the hidden states of an AR and MLM model. This enables AR masked infilling with the advantages of a more scalable AR architecture, such as KV cached inference. We combine OLMo and ModernBERT.

14.02.2025 00:28 — 👍 1 🔁 0 💬 1 📌 0
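As a rough illustration of the linear decoder described in the post above, here is a minimal sketch in plain Python. It assumes the two hidden states are concatenated before the linear map; the post only says they are combined. The random vectors stand in for OLMo and ModernBERT hidden states at one position, and all names and sizes are hypothetical. Training the decoder weights is omitted.

```python
import math
import random

def linear_decoder(h_ar, h_mlm, weight, bias):
    """Map the concatenated AR and MLM hidden states to vocabulary logits."""
    combined = h_ar + h_mlm  # list concatenation, length d_ar + d_mlm
    return [
        sum(w * x for w, x in zip(row, combined)) + b
        for row, b in zip(weight, bias)
    ]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
d_ar, d_mlm, vocab_size = 4, 3, 5  # toy sizes, not the real model dimensions

# Stand-ins for the hidden states of the AR model and the MLM at one position.
h_ar = [rng.gauss(0, 1) for _ in range(d_ar)]
h_mlm = [rng.gauss(0, 1) for _ in range(d_mlm)]

# Decoder parameters: one row of weights per vocabulary entry.
weight = [[rng.gauss(0, 0.1) for _ in range(d_ar + d_mlm)]
          for _ in range(vocab_size)]
bias = [0.0] * vocab_size

probs = softmax(linear_decoder(h_ar, h_mlm, weight, bias))
print(probs)
```

In this sketch the AR hidden state depends only on the prefix, so it could still be produced with KV-cached decoding, while the MLM hidden state carries information about the masked context on both sides.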

Autoregressive (AR) LMs are more compute-efficient to train than masked LMs (MLMs), which compute a loss on only a fixed fraction of the tokens (e.g. 30%) rather than on 100% as AR models do. Unlike MLMs, AR models can also use a KV cache at inference time, but they cannot infill masked tokens.
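To make the training-signal comparison concrete, here is a minimal sketch that counts how many positions contribute a loss term per sequence under each objective. The function names, the 100-token sequence length, and the sampling scheme are illustrative assumptions; only the 30% ratio comes from the post.

```python
import random

def ar_loss_positions(num_tokens):
    """AR objective: every token is a prediction target given its prefix
    (assuming a BOS token so that the first token also has a context)."""
    return list(range(num_tokens))

def mlm_loss_positions(num_tokens, mask_ratio, rng):
    """MLM objective: only a fixed fraction of positions is masked and scored."""
    num_masked = round(num_tokens * mask_ratio)
    return sorted(rng.sample(range(num_tokens), num_masked))

rng = random.Random(0)
num_tokens = 100  # hypothetical sequence length

ar_targets = ar_loss_positions(num_tokens)
mlm_targets = mlm_loss_positions(num_tokens, mask_ratio=0.3, rng=rng)

print(len(ar_targets), len(mlm_targets))  # 100 30
```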

14.02.2025 00:28 — 👍 0 🔁 0 💬 1 📌 0

“That’s one small [MASK] for [MASK], a giant [MASK] for mankind.” – [MASK] Armstrong

Can autoregressive models predict the next [MASK]? It turns out yes, and quite easily…

Introducing MARIA (Masked and Autoregressive Infilling Architecture)

arxiv.org/abs/2502.06901

Interesting. I always felt the reviews should be even more independent so that the aggregate score has lower variance

03.01.2025 21:21 — 👍 0 🔁 0 💬 0 📌 0